Hello!

This project is a simple implementation of a Frogger-like game, with two AI agents that learn to play it using Deep Q-learning and NEAT. It was developed by Tomer Meidan, Omer Ferster and me, from The Hebrew University of Jerusalem, as part of the course "Introduction to Artificial Intelligence" (2024).

Please read assets/README.md to further understand why the assets in this repository do not match those in the gameplay.

To install the required packages, use your favorite package manager with Python 3.12 to initialize a new environment. Then run the following command:

`pip install -r requirements.txt`

There are several configuration flags that can be passed to the program to change the behavior of the game and the learning algorithms. The flags are as follows:

- `--agent`: The agent to use. Can be either `dqn`, `neat`, `random`, `onlyup` or `human`. Default is `random`.
- `--fps`: The frames per second of the game. Default is 5.
- `--grid_like`: Whether to use a grid-like environment or a continuous one. Default is `False`.
- `--train`: Whether to include the train obstacle or not. Default is `False`.
- `--neat_config`: The configuration file for NEAT. Default is `neat-config.txt`.
- `--generations`: The number of generations to run NEAT for. Default is 200.
- `--plot`: Whether to plot the results or not. Default is `False`.
- `--test`: Whether to test a model or not. Default is `None`. `*` tests all available models. Can also be a specific model name to be tested.
- `--multi_test`: Whether to test multiple models or not. Default is `False`.

- Note that while the game is running, you can press the space bar to change the speed of the game from 5 fps to the original fps given under the `--fps` flag.
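As a rough illustration, the flags listed above could be declared with Python's `argparse` as in the sketch below. This is an assumption about how such a command line might be wired, not the project's actual `main.py`: the helper name `build_parser` is hypothetical, and the boolean options are modeled as store-true switches because the run commands in this README pass them without a value.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical parser mirroring the flags listed in this README."""
    parser = argparse.ArgumentParser(description="Frogger-like game with learning agents")
    parser.add_argument("--agent", choices=["dqn", "neat", "random", "onlyup", "human"],
                        default="random", help="agent to use")
    parser.add_argument("--fps", type=int, default=5, help="frames per second")
    # Boolean switches: absent means False, present means True (an assumption).
    parser.add_argument("--grid_like", action="store_true")
    parser.add_argument("--train", action="store_true")
    parser.add_argument("--plot", action="store_true")
    parser.add_argument("--multi_test", action="store_true")
    parser.add_argument("--neat_config", default="neat-config.txt")
    parser.add_argument("--generations", type=int, default=200)
    # None: no testing; "*": all available models; otherwise a model name.
    parser.add_argument("--test", default=None)
    return parser


if __name__ == "__main__":
    args = build_parser().parse_args(["--agent=neat", "--grid_like", "--fps=500"])
    print(args.agent, args.grid_like, args.fps)
```

Flags that appear only in the run commands of this README (such as `--lives`, `--games` and `--water`) are left out of the sketch because their defaults are not documented here.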

The game has three difficulties:

- Easy: The basic difficulty, with cars moving horizontally.

- Medium: The intermediate difficulty, with cars moving horizontally and a train moving horizontally.

- Hard: The hardest difficulty, with cars moving horizontally, a train moving horizontally and logs moving vertically.

To change the difficulty, enable the `--train` flag for medium, and both the `--train` and `--water` flags for hard.

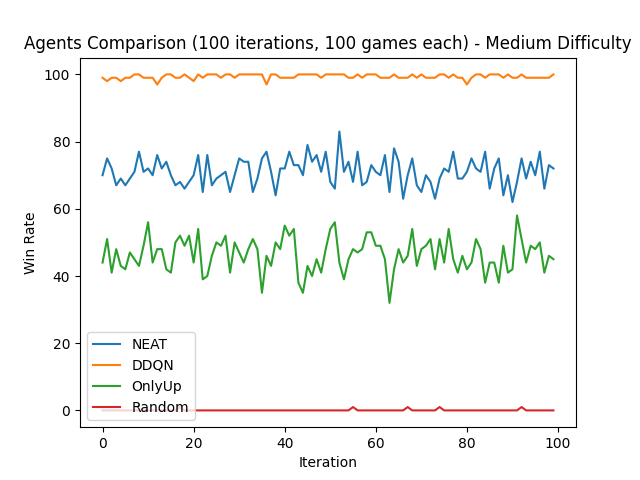

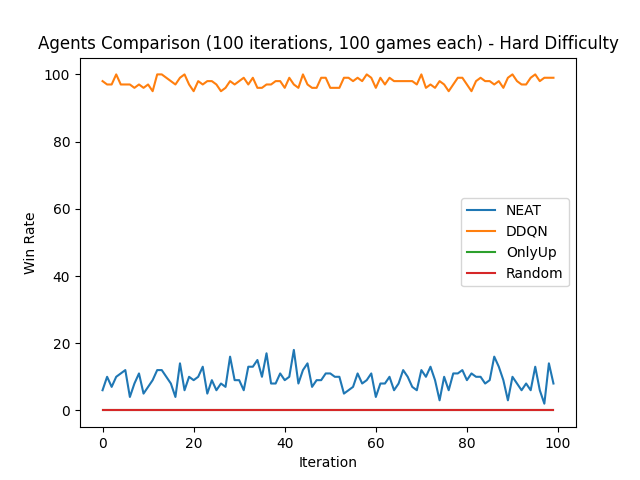

During our time working on the project, we tweaked and tuned our agents to achieve the best results possible. Here are the results of our base agents and best agents:

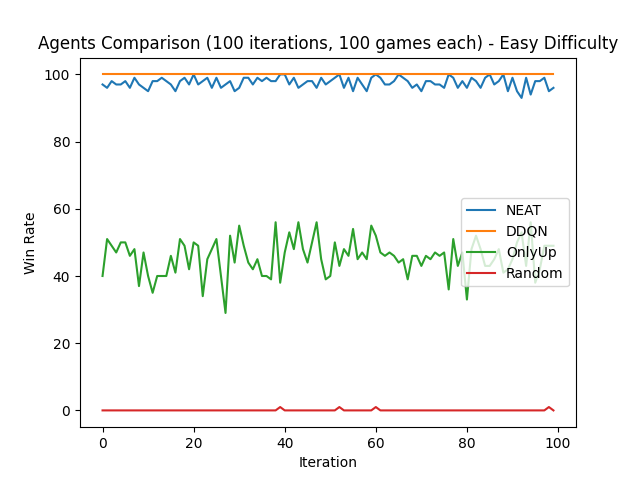

The random agent is the first baseline agent we implemented. It simply chooses a random action from the available actions at each time step. Its win rate is 0%, even with only the cars obstacle.

To run this model, use the following command:

`python main.py --agent=random --grid_like --fps=500 --lives=1 --games=100`

The only-up agent is the second baseline agent we implemented. It simply chooses the "up" action at each time step. Its win rate is ~40% with only the cars obstacle, ~40% with cars and a train, and 0% with cars, a train and the water section. This agent has a higher win rate than the random agent because going straight up is sometimes enough to avoid all the cars. Most of the time, however, the agent encounters obstacles that it has no way of dealing with.
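The two baseline policies described so far can be sketched in a few lines of Python. This is a minimal illustration rather than the project's code: the action names in `ACTIONS` and the function names are hypothetical, and the game's real action set may differ.

```python
import random

# Hypothetical action set; the game's real actions may differ.
ACTIONS = ["up", "down", "left", "right", "stay"]


def random_policy(rng: random.Random) -> str:
    """Pick uniformly among the available actions, ignoring the game state."""
    return rng.choice(ACTIONS)


def only_up_policy() -> str:
    """Always move up, ignoring the game state."""
    return "up"


if __name__ == "__main__":
    rng = random.Random(0)
    print([random_policy(rng) for _ in range(5)])
    print([only_up_policy() for _ in range(5)])
```

Neither policy looks at the positions of the obstacles, which is why both serve only as reference points for the learned agents.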

To run this model, use the following command:

`python main.py --agent=onlyup --grid_like --fps=500 --lives=1 --games=100`

The best agents we managed to train using the NEAT algorithm achieved the following:

- In easy mode, the best agent achieved a win rate of 99%.

- In medium mode, the best agent achieved a win rate of 72%.

- In hard mode, the best agent achieved a win rate of 8%.

In this section, we'll go over the exact configuration and code that led to these results.

You can test these models yourself. They are included in this project under `models/neat/`:

- `models/neat/easy - 99%.pkl`
- `models/neat/medium - 72%.pkl`
- `models/neat/hard - 8%.pkl`

To run any model, use the following command (replace with desired model name):

`python main.py --agent=neat --grid_like --fps=500 --lives=1 --games=100 --test="easy - 99%.pkl" [--train] [--water]`

Let's go over the main parts of the training of this model:

- Run command: `python main.py --agent=neat --grid_like --fps=500 --lives=3 --generations=300 --plot [--train] [--water]`
- Configuration file: `neat-config.txt`
- State representation: `src/agents/neat/neat_player.py`
- Fitness function: `src/agents/neat/neat_game.py`

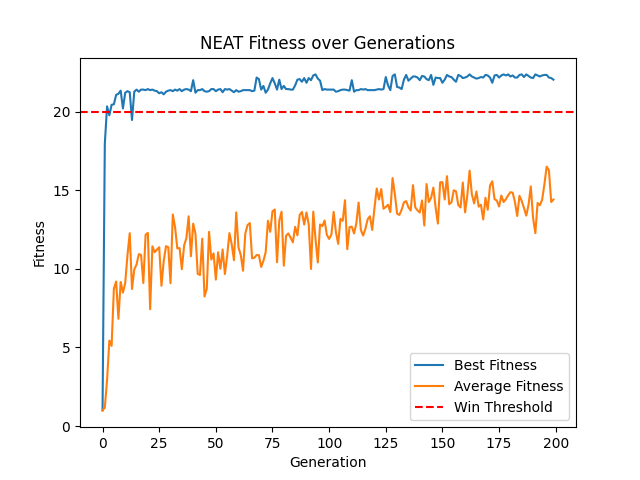

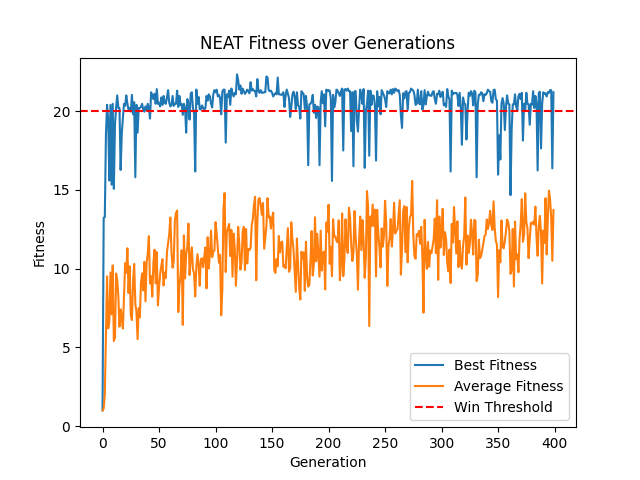

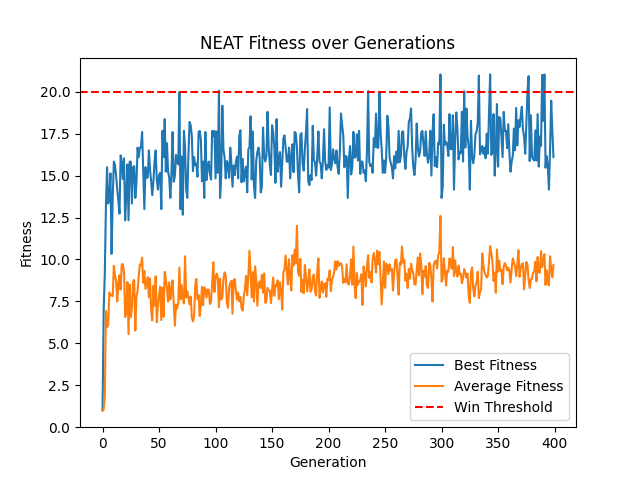

The following are the plots of the training of the NEAT algorithm, for each difficulty - easy, medium and hard respectively:

As you can see, in the easy task, NEAT was able to converge to a good solution pretty quickly, and the more complexity we introduce, the more the algorithm struggles.

The best agents we managed to train using the DDQN algorithm achieved the following:

- In easy mode, the best agent achieved a win rate of 100%.

- In medium mode, the best agent achieved a win rate of 100%.

- In hard mode, the best agent achieved a win rate of 99%.

In this section, we'll go over the exact configuration and code that led to these results.

- It is important to note that the exact variation of the DQN algorithm we used is the Double DQN algorithm.
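For readers unfamiliar with the distinction: in Double DQN the online network selects the next action and the target network evaluates it, which reduces the value overestimation of standard DQN. The sketch below shows that target computation for a single transition in plain Python. The function name, the list-based Q-values and the discount factor of 0.9 in the example are illustrative assumptions, not taken from `src/agents/dqn/dqn_agent.py`.

```python
from typing import Sequence


def double_dqn_target(reward: float, done: bool, gamma: float,
                      q_online_next: Sequence[float],
                      q_target_next: Sequence[float]) -> float:
    """Return r + gamma * Q_target(s', argmax_a Q_online(s', a)) for one transition."""
    if done:
        # Terminal transitions have no future value to bootstrap from.
        return reward
    # The online network selects the action ...
    best_action = max(range(len(q_online_next)), key=lambda a: q_online_next[a])
    # ... and the target network evaluates it.
    return reward + gamma * q_target_next[best_action]


if __name__ == "__main__":
    target = double_dqn_target(reward=1.0, done=False, gamma=0.9,
                               q_online_next=[1.0, 3.0, 2.0],
                               q_target_next=[0.5, 0.2, 0.9])
    print(target)
```

In the example, the online values pick action 1, so the target bootstraps from the target network's value for action 1 rather than from the largest target value, which is what standard DQN would use.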

You can test these models yourself. They are included in this project under `models/dqn/`:

- `models/dqn/easy - 100%.pkl`
- `models/dqn/medium - 100%.pkl`
- `models/dqn/hard - 99%.pkl`

To run any model, use the following command (replace with desired model name):

`python main.py --agent=dqn --grid_like --fps=500 --lives=1 --games=100 --test="easy - 100%.pth" [--train] [--water]`

Let's go over the main parts of the training of this model:

- Run command: `python main.py --agent=dqn --grid_like --fps=500 --lives=1 --games=2000 --plot [--train] [--water]`
- Model: `src/agents/dqn/dqn_model.py`
- Agent: `src/agents/dqn/dqn_agent.py`
- State representation: `src/agents/dqn/dqn_player.py`
- Reward function: `src/agents/dqn/dqn_game.py`

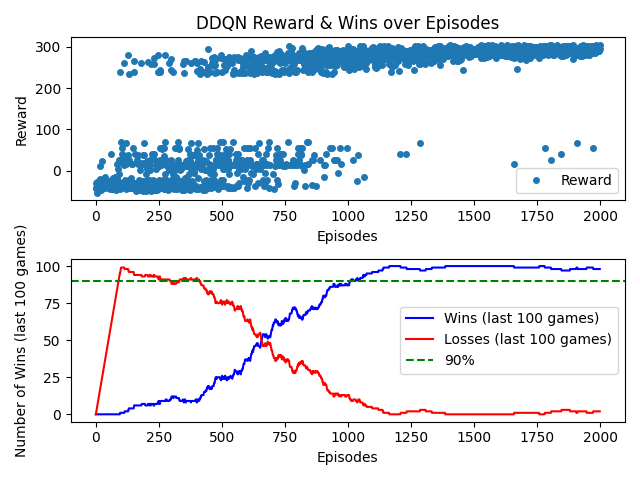

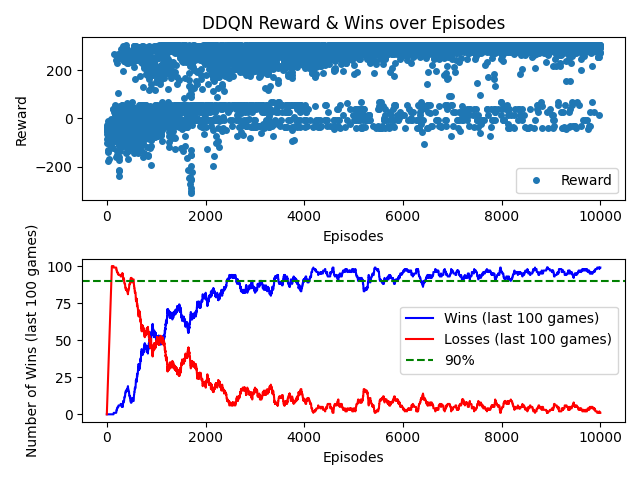

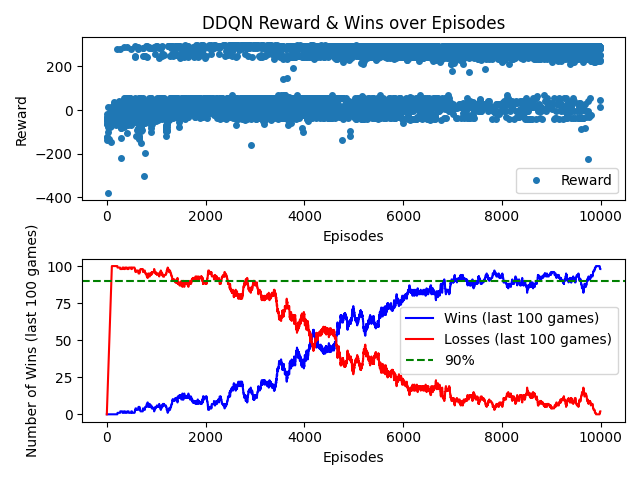

The following are the plots of the training of the NEAT algorithm, for each difficulty - easy, medium and hard respectively:

The DQN algorithm converged to a good solution quickly in the easy task, requiring just 1000 episodes. However, it took considerably longer in the medium and hard tasks, requiring 4000 and 6000 episodes respectively.

It did, however, reach very good results overall compared to NEAT.

The DQN algorithm achieved better results than the NEAT algorithm in all difficulties. The two baseline agents, random and only-up, struggled to achieve good results and failed completely in the hard difficulty.

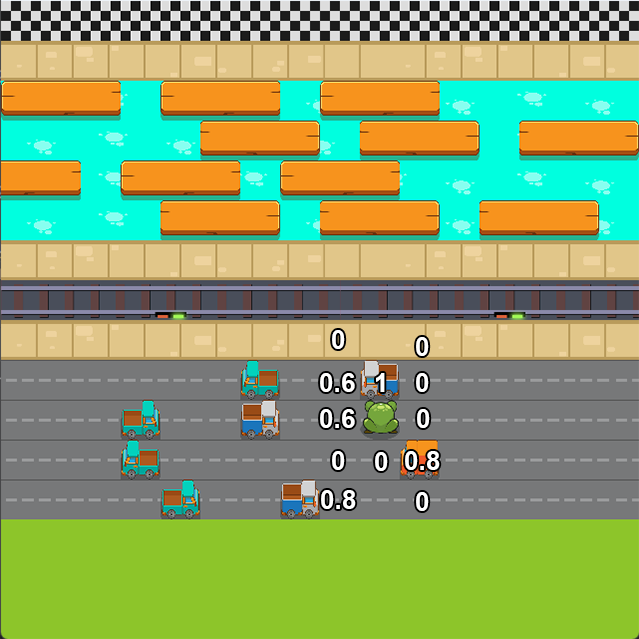

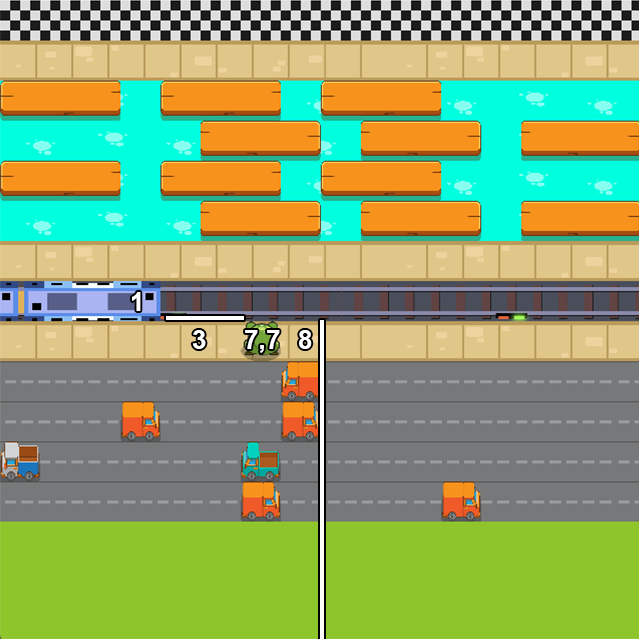

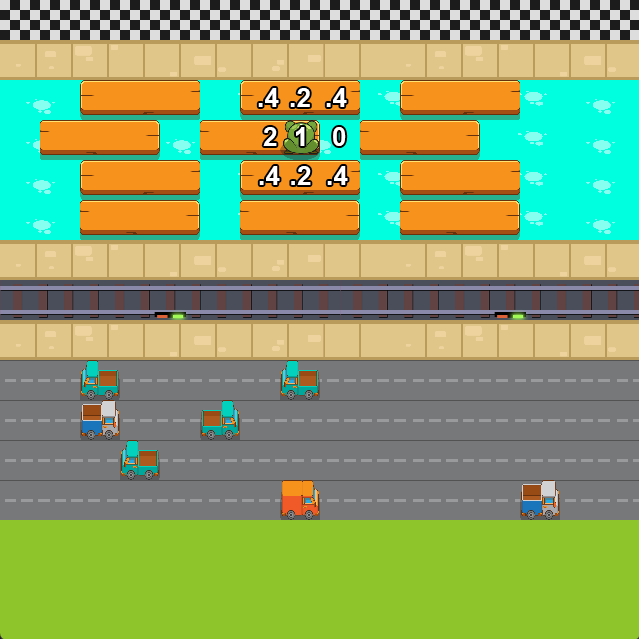

This section shows some images from the game: