This is a small project that builds a neural network from scratch in Python, using no external libraries other than NumPy. The network is trained on the MNIST dataset using backpropagation and the Adam optimizer. The design of the classes, functions, and components is inspired by PyTorch.
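For readers unfamiliar with Adam, here is a minimal sketch of the update rule in NumPy. It is not the code from this project: the class name `Adam`, the `step(grads)` signature, and the default hyperparameters are assumptions chosen for illustration, loosely following the PyTorch-style optimizer interface.

```python
import numpy as np


class Adam:
    """Illustrative Adam optimizer over a list of NumPy arrays (hypothetical API)."""

    def __init__(self, params, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
        self.params = params  # arrays are updated in place
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = [np.zeros_like(p) for p in params]  # first-moment estimates
        self.v = [np.zeros_like(p) for p in params]  # second-moment estimates
        self.t = 0  # number of steps taken so far

    def step(self, grads):
        """Apply one update, given one gradient array per parameter."""
        self.t += 1
        for p, g, m, v in zip(self.params, grads, self.m, self.v):
            # Exponential moving averages of the gradient and its square.
            m *= self.beta1
            m += (1 - self.beta1) * g
            v *= self.beta2
            v += (1 - self.beta2) * g ** 2
            # Bias correction compensates for the zero initialization.
            m_hat = m / (1 - self.beta1 ** self.t)
            v_hat = v / (1 - self.beta2 ** self.t)
            p -= self.lr * m_hat / (np.sqrt(v_hat) + self.eps)


# Toy usage: minimize f(w) = sum((w - 3)^2), whose gradient is 2 * (w - 3).
w = np.zeros(2)
optimizer = Adam([w], lr=0.1)
for _ in range(1000):
    optimizer.step([2 * (w - 3)])
```

In a real training loop the gradients would come from the backward pass of the network rather than from a closed-form expression.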

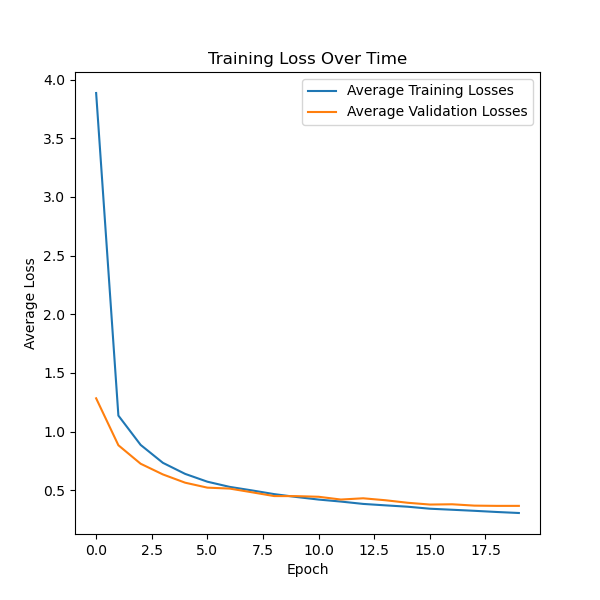

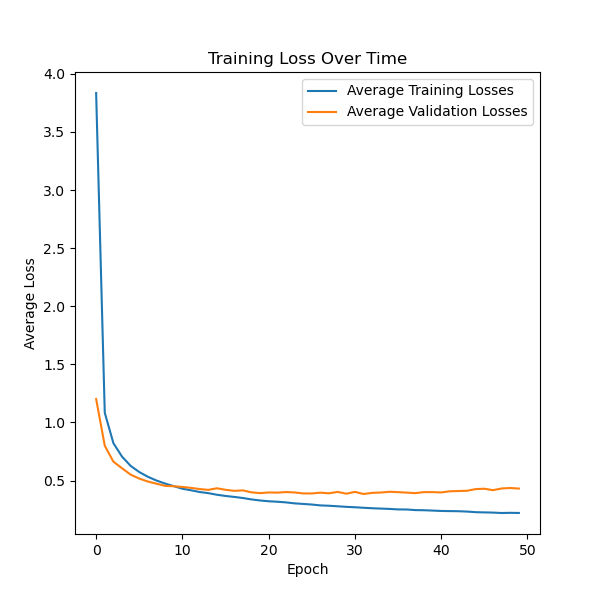

There is a known, slight overfitting issue. It could probably be reduced with weight decay, dropout, or other regularization techniques. However, I am satisfied with the results and decided to leave the model as it is.
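As a rough idea of what those two techniques could look like in a NumPy-only setup, here is a minimal sketch. None of it is implemented in this project; the function names `dropout_forward`, `dropout_backward`, and `add_weight_decay`, and their parameters, are hypothetical.

```python
import numpy as np


def dropout_forward(x, p=0.5, training=True, rng=None):
    """Inverted dropout: zero each activation with probability p (requires p < 1)."""
    if not training or p == 0.0:
        return x, None  # dropout is a no-op at evaluation time
    rng = np.random.default_rng() if rng is None else rng
    keep = 1.0 - p
    # Scale the kept units by 1/keep so the expected activation is unchanged.
    mask = (rng.random(x.shape) < keep) / keep
    return x * mask, mask


def dropout_backward(grad_out, mask):
    """Route gradients only through the units that were kept."""
    return grad_out if mask is None else grad_out * mask


def add_weight_decay(grad, weight, weight_decay=1e-4):
    """Add the gradient of the L2 penalty 0.5 * weight_decay * ||weight||^2."""
    return grad + weight_decay * weight


# Toy usage on random activations and weights.
rng = np.random.default_rng(0)
activations = rng.standard_normal((4, 8))
dropped, mask = dropout_forward(activations, p=0.5, rng=rng)
weights = rng.standard_normal((8, 3))
grad = add_weight_decay(np.zeros_like(weights), weights, weight_decay=0.01)
```

Adding the penalty to the gradient before an Adam step gives classic L2 regularization. The decoupled variant (AdamW) instead shrinks the weights directly, separately from the adaptive update.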