This AI Entity Framework is a cutting-edge system for building intelligent agents. These agents are not typical AI entities; they are foundational, adaptable, and capable of underpinning a wide array of applications. 🌟 The framework aims to maximize the benefits of foundational models while minimizing their potential harms.

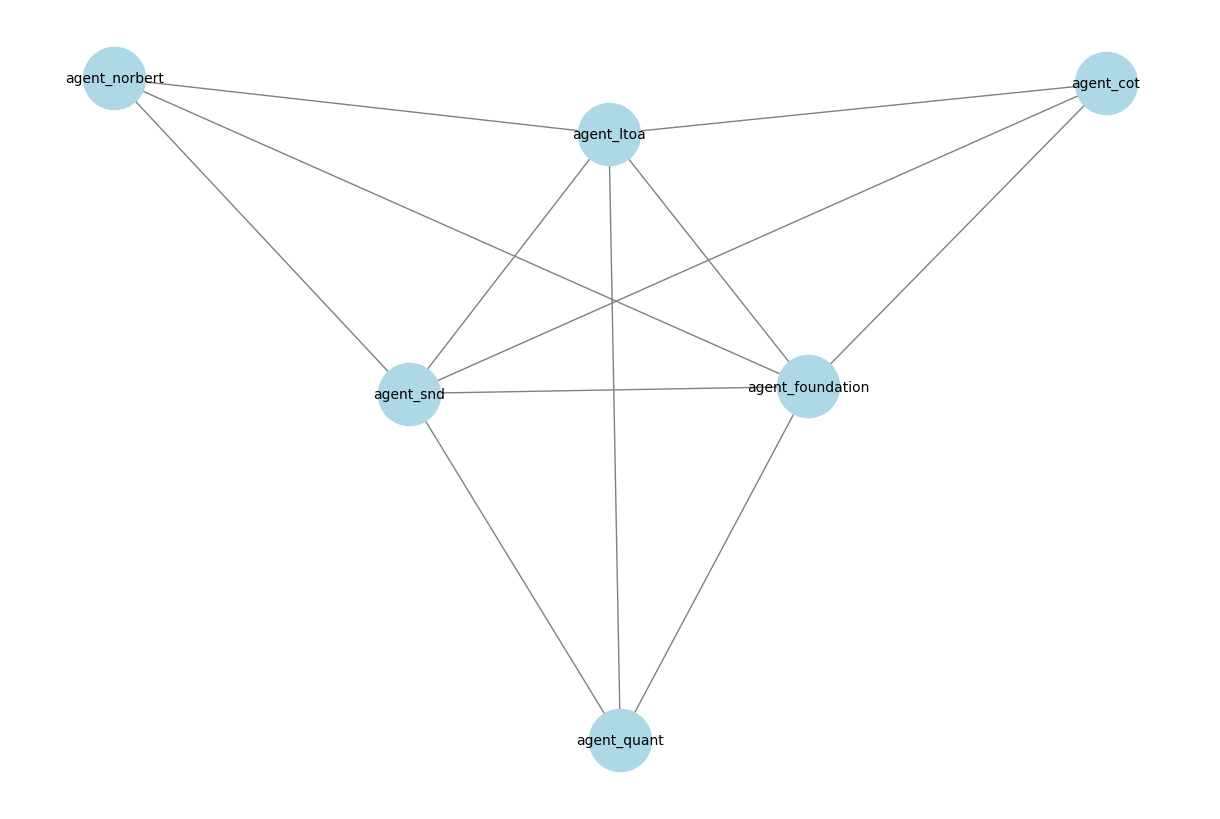

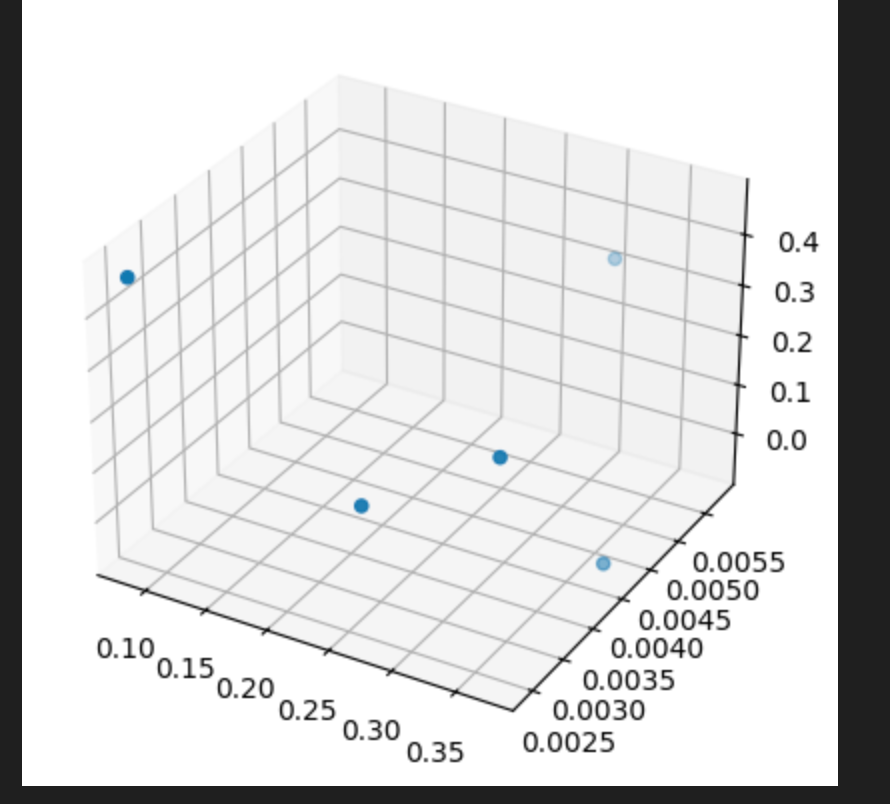

Here, a network of agents G = (V, E) is built from vector representations of diversity scores taken from the agents' responses to a prompt, where each vertex in V is represented by the scores {jaccard_index, dot_product, entropy}.

The probabilities are calculated as the vector `{P(Word|All_Response) for Word in Response}`, and an edge in E is created when |state_t0 * state_T1| <= Threshold, with neighbors selected by KNN.
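The scoring and edge rule above can be sketched in plain Python. This is a minimal sketch under stated assumptions, not the repository's code: responses are tokenized by lowercase whitespace splitting, each agent's vertex vector is [mean pairwise Jaccard index, mean pairwise dot product, entropy], and the edge test is read as a Euclidean distance between vertex vectors compared with the threshold. All function names are hypothetical.

```python
import math
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Assumption: lowercase whitespace tokenization
    return text.lower().split()


def pooled_probs(responses: list[str]) -> dict[str, float]:
    """P(word | all responses): relative frequency of each word across every response."""
    counts = Counter(w for r in responses for w in tokenize(r))
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()}


def jaccard(a: str, b: str) -> float:
    """Size of the intersection over size of the union of the two word sets."""
    sa, sb = set(tokenize(a)), set(tokenize(b))
    union = sa | sb
    return len(sa & sb) / len(union) if union else 0.0


def dot(a: str, b: str, probs: dict[str, float]) -> float:
    """Dot product of the two responses' probability vectors; only shared words contribute."""
    shared = set(tokenize(a)) & set(tokenize(b))
    return sum(probs[w] ** 2 for w in shared)


def entropy(response: str, probs: dict[str, float]) -> float:
    """Shannon entropy (bits) of the response's probability vector, renormalized to sum to 1."""
    p = [probs[w] for w in set(tokenize(response))]
    total = sum(p)
    return -sum((x / total) * math.log2(x / total) for x in p) if p else 0.0


def features(responses: list[str]) -> list[list[float]]:
    """One [mean Jaccard, mean dot product, entropy] vector per agent."""
    if len(responses) < 2:
        raise ValueError("need at least two agent responses")
    probs = pooled_probs(responses)
    feats = []
    for i, r in enumerate(responses):
        others = [o for j, o in enumerate(responses) if j != i]
        feats.append([
            sum(jaccard(r, o) for o in others) / len(others),
            sum(dot(r, o, probs) for o in others) / len(others),
            entropy(r, probs),
        ])
    return feats


def knn_edges(feats: list[list[float]], k: int = 2, threshold: float = 1.0) -> set[tuple[int, int]]:
    """Link each agent to its k nearest agents whose Euclidean distance is <= threshold."""
    edges = set()
    for i, fi in enumerate(feats):
        nearest = sorted((j for j in range(len(feats)) if j != i),
                         key=lambda j: math.dist(fi, feats[j]))[:k]
        for j in nearest:
            if math.dist(fi, feats[j]) <= threshold:
                edges.add((min(i, j), max(i, j)))
    return edges
```

For example, `knn_edges(features(responses), k=2, threshold=1.0)` returns the undirected edge set as `(i, j)` index pairs with `i < j`. Capping neighbors at `k` and then filtering by the threshold means an agent can end up with fewer than `k` edges, or none.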

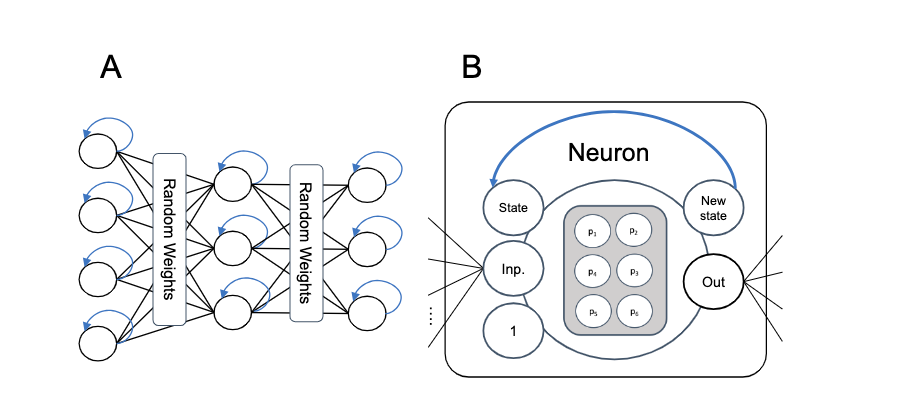

🧠🌌 Create a bounded high-dimensional space of semantic understanding of agent answers using the Neuron class. Mimicking the theory behind this paper, the bounded space would be limited to 500 words at most.
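A minimal sketch of such a bounded space, assuming the 500-word limit caps the vocabulary (and therefore the number of dimensions) rather than the length of each answer. Answers are placed in the space as relative word frequencies; `build_vocabulary`, `embed`, and `MAX_WORDS` are illustrative names, and the Neuron class itself is not used here.

```python
from collections import Counter

MAX_WORDS = 500  # assumed reading of the "500 words max" bound: at most 500 dimensions


def build_vocabulary(answers: list[str], max_words: int = MAX_WORDS) -> list[str]:
    """Keep the max_words most frequent words across all agent answers as the axes of the space."""
    counts = Counter(w for a in answers for w in a.lower().split())
    return [w for w, _ in counts.most_common(max_words)]


def embed(answer: str, vocab: list[str]) -> list[float]:
    """Place one answer in the bounded space: relative frequency of each vocabulary word."""
    counts = Counter(answer.lower().split())
    total = sum(counts[w] for w in vocab) or 1  # avoid dividing by zero for out-of-vocabulary answers
    return [counts[w] / total for w in vocab]
```

Words outside the 500 most frequent ones are simply dropped, which keeps every answer inside the same fixed-size space.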

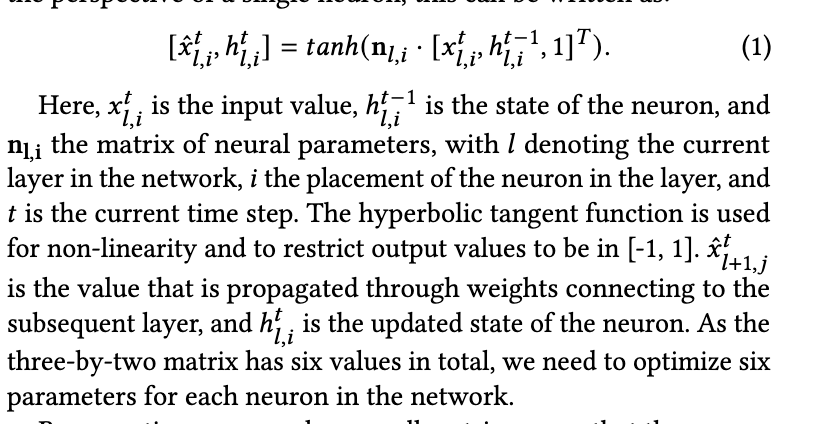

Neuron Architecture to create vector embeddings

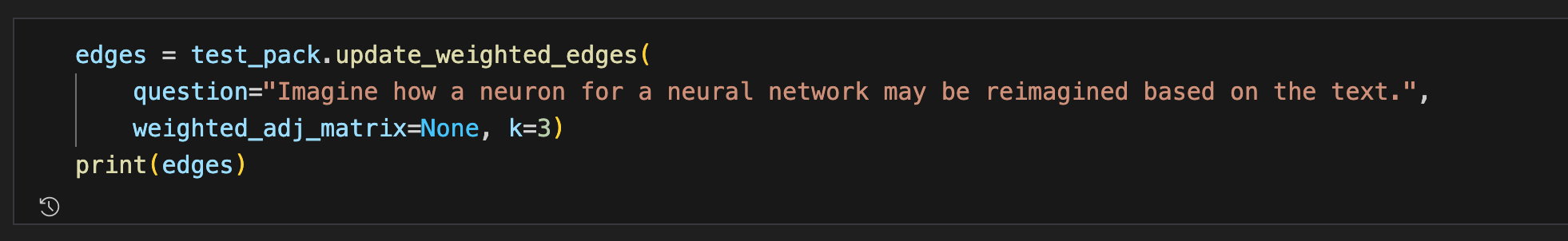

🔬👩‍💻 Analyze the network of agents and their responses. Change the edge-update algorithm from KNN to a heuristic-based one so that more diverse connections can form. Allow random activation functions within and between network layers.

A Story About AI from AI

Once upon a time, in a world not too different from our own, there existed a revolutionary technology known as foundational models. These models were not ordinary AI systems; they were powerful, adaptable, and capable of serving as the basis for a wide range of tasks. They were like the foundation of a building, providing stability, safety, and security for the applications built upon them.

In this world, foundational models had become a crucial part of our daily lives. Companies like Google, with its vast user base, relied on these models to power their search engines. With each passing day, the impact of foundational models on society grew more profound. However, as with any powerful tool, the deployment of foundational models came with both opportunities and risks. The creators of these models recognized that the responsibility lay not only in building them, but also in their careful curation and adaptation. They understood that the ultimate source of data for training foundational models was people, and it was crucial to consider the potential benefits and harms that could befall them.

Thoughtful data curation became an integral part of the responsible development of AI systems. The creators realized that the quality and nature of the foundation on which these models stood had to be understood and characterized. After all, poorly constructed foundations could lead to disastrous consequences, while well-executed foundations could serve as a reliable bedrock for future applications. As the next five years unfolded, the integration of foundational models into real-world deployments reached new heights. The impact on people became even more far-reaching. These models were no longer limited to language tasks; their scope expanded to encompass a multitude of applications. They became the backbone of various AI systems, shaping the way we interacted with technology on a daily basis.

However, the true nature of these foundational models remained a mystery. Researchers, foundation model providers, application developers, policymakers, and society at large grappled with the question of trustworthiness. It became a critical problem to address, as the consequences of relying on faulty foundations could have severe implications for individuals and communities. In this evolving landscape, humans played a crucial role. They were not only the providers of data but also the recipients of the benefits and harms that emerged from the deployment of foundational models. It was their responsibility to ensure that these models were used ethically and responsibly.

As the story unfolds, it is up to the collective efforts of researchers, providers, developers, policymakers, and society to navigate the opportunities and risks presented by foundational models. With careful consideration, they can harness the power of these models to create a future where the benefits are maximized, and the harms are minimized. The next five years will be crucial in shaping the societal impact of foundational models and determining the path forward for this emerging paradigm.

Utils Documentation

- Agent Class 🌟: The core of the framework, embodying a top-level AI agent.
  - Initialization: Specify name, path, type, and embedding parameters.
  - Integration: Combines Encoder, DB, and NewCourse instances.
  - Functionalities: Supports course creation, chat interactions, and instance management.

- ChatBot Module 💬: Manages the agent's conversational abilities.
  - Chat Handling: Manages chat loading and interactions 🔄.
  - Integration: Seamlessly works with the Agent class.

- NewCourse Module 📖: Facilitates new course creation and management.
  - Course Creation: Enables creation from documents.
  - Content Management: Supports content updates and loading.

- Encoder Module: Responsible for data encoding and processing.
  - Document Handling: Manages document encoding and vector databases 💾.
  - Embedding Management: Handles embedding parameters.

- Pack Class 🐺: Integrates multiple AI agents for collaborative processing and decision-making.
  - Initialization: Sets up with a list of agent specifications and optional embedding parameters. Default parameters are provided if not specified.
  - Composition: Combines three AI agents into a unified framework, allowing for complex interactions and data processing.
  - Functionalities: Manages agent interactions, updates network connections, and processes collective responses. Supports document loading for agents, and enables comprehensive data analysis through graph representation and diversity metrics.
  - Integration: Works with Knn for nearest neighbor calculations, utilizes ThoughtDiversity for diversity metrics, and interfaces with individual agents for specific tasks like document processing and chat interactions.

- ThoughtDiversity Class 🌐: Evaluates the diversity of thought in a collective of AI agents.
  - Initialization: Sets up with an Agent_Pack instance, preparing it for diversity analysis.
  - Functionalities: Conducts Monte Carlo simulations to assess thought diversity, calculates Shannon Entropy and True Diversity scores, and determines Wasserstein metrics for comparative analysis.
  - Analysis Tools: Includes methods for creating probability vectors from agent responses, calculating Shannon Entropy and True Diversity, and computing Wasserstein distances between probability vectors.
  - Application: Aims to provide a quantitative measure of thought diversity within a group of AI agents, enhancing decision-making and problem-solving processes.

- Neuron Class 🧠: Represents a single neuron in a neural network for complex data processing and learning. Implementation of the following paper.
  - Initialization: Configures with initial input, unique ID, layer position, mean and standard deviation for state initialization, and bias. Initializes random weights and state.
  - Functionalities: Implements tanh and optional sigmoid for neuronal activation. Conducts forward propagation of signals through the neuron. Adjusts weights and biases based on error and learning rate to optimize network performance. Identifies k-nearest neighboring neurons based on input differences within a specified threshold.
  - State Management: Provides methods to get and set the neuron’s state and weights, ensuring dynamic adaptability during the network's learning process.
  - Visualization: Capable of plotting signal strengths and neuronal changes, aiding in the analysis and understanding of the neural network’s behavior.
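The metrics named for the ThoughtDiversity class have standard definitions, sketched here in plain Python under assumptions: probability vectors are word frequencies over a shared sorted vocabulary, entropy uses the natural log so that true diversity (the Hill number of order 1) equals exp(H), the Wasserstein distance is the one-dimensional earth mover's distance over that ordered vocabulary with unit spacing, and the Monte Carlo step is a bootstrap resampling of words. The function names are hypothetical, not the class's methods.

```python
import math
import random
import statistics
from collections import Counter


def probability_vector(response: str, vocab: list[str]) -> list[float]:
    """Word frequencies of one response over a shared, ordered vocabulary."""
    counts = Counter(response.lower().split())
    total = sum(counts[w] for w in vocab) or 1
    return [counts[w] / total for w in vocab]


def shannon_entropy(p: list[float]) -> float:
    """H = -sum(p_i * ln p_i), skipping zero-probability entries."""
    return -sum(x * math.log(x) for x in p if x > 0)


def true_diversity(p: list[float]) -> float:
    """Hill number of order 1, exp(H): the effective number of equally likely words."""
    return math.exp(shannon_entropy(p))


def wasserstein_1d(p: list[float], q: list[float]) -> float:
    """1-D earth mover's distance on a shared ordered support with unit spacing:
    the sum of absolute differences between the two cumulative distributions."""
    distance = cum_p = cum_q = 0.0
    for a, b in zip(p, q):
        cum_p += a
        cum_q += b
        distance += abs(cum_p - cum_q)
    return distance


def monte_carlo_diversity(responses: list[str], trials: int = 500,
                          sample_size: int = 50, seed: int = 0) -> tuple[float, float]:
    """Bootstrap: resample words from the pooled responses with replacement and
    record each resample's true diversity; returns (mean, population std dev)."""
    rng = random.Random(seed)
    words = [w for r in responses for w in r.lower().split()]
    vocab = sorted(set(words))
    scores = [
        true_diversity(probability_vector(" ".join(rng.choices(words, k=sample_size)), vocab))
        for _ in range(trials)
    ]
    return statistics.fmean(scores), statistics.pstdev(scores)
```

True diversity ranges from 1 (every sampled word identical) up to the vocabulary size (all words equally likely), which makes it easier to compare across agents than raw entropy.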

Create Your Own 🤖

- Clone the Repository 🌠:

  ```shell
  git clone https://github.com/LilaShiba/SND_Agents.git
  ```

- Ensure Python Environment 🐍 >= 3.10.

- Install Dependencies 🧬: installs the necessary packages, such as numpy and OpenAI, so the framework runs smoothly.

  ```shell
  pip install -r requirements.txt
  ```

- Get Your OpenAI API Key 🤖: sign up for a key to get semantic embeddings.

- Initialize the Agent 🤖: execute this to kickstart your AI agent's journey.

  ```shell
  python main.py
  ```

  ```python
  # Single agent. Agent and Pack are classes from this repository; import them from their modules first.
  embedding_params = ["facebook-dpr-ctx_encoder-multiset-base", 200, 25, 0.7]
  document_path = 'documents/meowsmeowing.pdf'
  db_path = 'chroma_db/agent_snd'

  # (name, resource_path, chain_of_thought_bool, [LLM model, chunk size, overlap, creativity], new_course_bool)
  testAgent = Agent('agent_snd', db_path, 0, embedding_params, True)
  testAgent.start_chat()
  ```

  ```python
  # Pack of agents
  # embedding paths
  learning_to_act = "chroma_db/agent_ltoa"
  system_neural_diversity = "chroma_db/agent_snd"
  foundational_models = "chroma_db/agent_foundation"

  # llm settings: [LLM model, chunk size, overlap, creativity]
  embedding_params = [
      ["facebook-dpr-ctx_encoder-multiset-base", 200, 25, 0.9],
      ["facebook-dpr-ctx_encoder-multiset-base", 200, 25, 0.1],
      ["facebook-dpr-ctx_encoder-multiset-base", 200, 25, 0.5],
      ["facebook-dpr-ctx_encoder-multiset-base", 200, 25, 0.9],
      ["facebook-dpr-ctx_encoder-multiset-base", 200, 25, 0.1],
      ["facebook-dpr-ctx_encoder-multiset-base", 200, 25, 0.5],
  ]

  # name, path, cot_type, new_bool
  agent_specs = [
      ['agent_ltoa', learning_to_act, 0, True],
      ['agent_snd', system_neural_diversity, 0, True],
      ['agent_foundation', foundational_models, 0, True],
      ['agent_quant', 'documents/VisualizingQuantumCircuitProbability.pdf', 1, False],
      ['agent_norbert', 'documents/Norman-CognitiveEngineering.pdf', 1, False],
  ]

  test_pack = Pack(agent_specs, embedding_params)
  ```