Custom ComfyUI nodes for interacting with Ollama using the ollama Python client.

Easily integrate the power of LLMs into ComfyUI workflows, or just experiment with GPT.

To use this properly, you need a running Ollama server that is reachable from the host running ComfyUI.
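A quick way to confirm that the server is reachable before wiring up any nodes is to request the model list over HTTP. The sketch below is not part of these nodes; it assumes the default Ollama address `http://127.0.0.1:11434` and the `/api/tags` endpoint of the Ollama REST API, and the helper name `is_ollama_reachable` is made up for illustration.

```python
import urllib.error
import urllib.request


def is_ollama_reachable(base_url: str = "http://127.0.0.1:11434",
                        timeout: float = 2.0) -> bool:
    """Return True if GET <base_url>/api/tags answers with HTTP 200.

    The default address is an assumption; change it if your Ollama
    server listens on another host or port.
    """
    url = base_url.rstrip("/") + "/api/tags"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except (urllib.error.URLError, OSError, ValueError):
        # Connection refused, timeout, DNS failure, or a malformed URL.
        return False
```

Run `is_ollama_reachable()` from the machine that hosts ComfyUI. If it returns `False`, check that Ollama is started and that the URL configured in the node matches the server address.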

- Install ComfyUI
- Clone this repository with git into the `custom_nodes` folder inside your ComfyUI installation, or download it as a zip and unzip the contents to `custom_nodes/comfyui-ollama`.
- Start/restart ComfyUI

Or

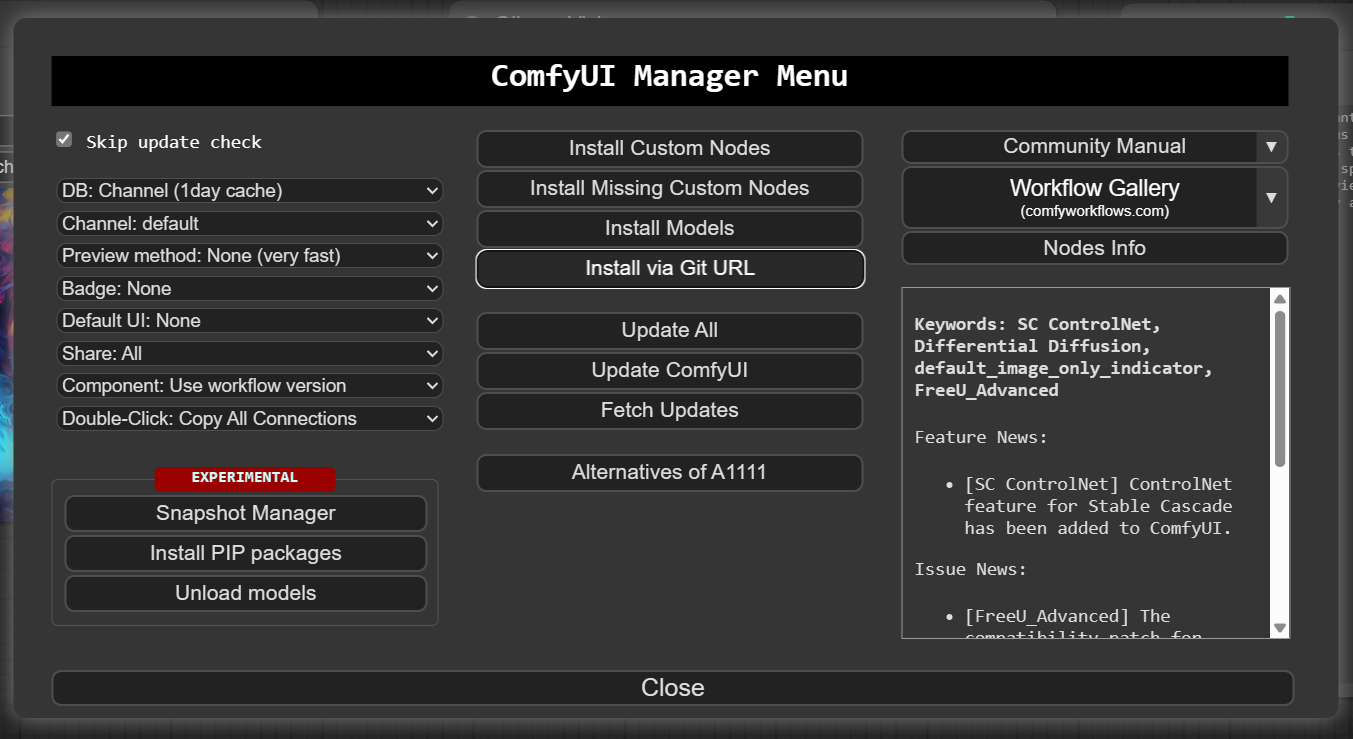

use the compfyui manager "install via git url".

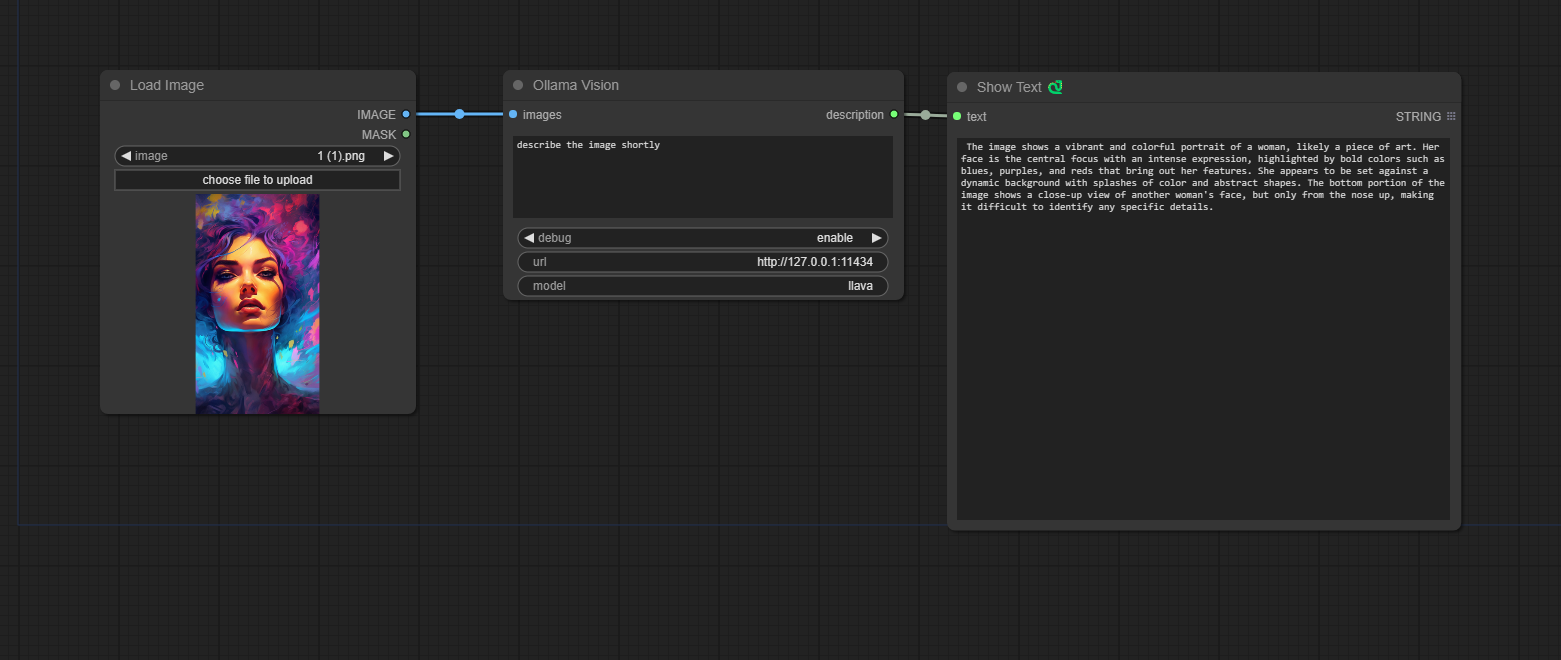

A node that lets you query input images.

The model name should refer to a model with vision capabilities, for example: https://ollama.com/library/llava.
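To make the idea of "querying an image" concrete, here is a rough sketch of what such a request can look like when sent directly to the Ollama REST API. It is an illustration only and not necessarily how the node is implemented (the node uses the ollama Python client). It assumes the `/api/generate` endpoint, which accepts base64-encoded images in an `images` list; the function names are hypothetical.

```python
import base64
import json
import urllib.request


def build_vision_payload(model: str, prompt: str, image_bytes: bytes) -> dict:
    """Build a JSON-serializable request body with one base64-encoded image."""
    return {
        "model": model,      # should be a vision-capable model, e.g. "llava"
        "prompt": prompt,
        "images": [base64.b64encode(image_bytes).decode("ascii")],
        "stream": False,     # ask for a single JSON reply instead of a stream
    }


def query_image(payload: dict,
                base_url: str = "http://127.0.0.1:11434",  # assumed default
                timeout: float = 120.0) -> str:
    """POST the payload to /api/generate and return the generated text."""
    request = urllib.request.Request(
        base_url.rstrip("/") + "/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]
```

For example, `query_image(build_vision_payload("llava", "Describe this image.", open("cat.png", "rb").read()))` would return the model's description, provided the model has been pulled on the server. The file name `cat.png` is a placeholder.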

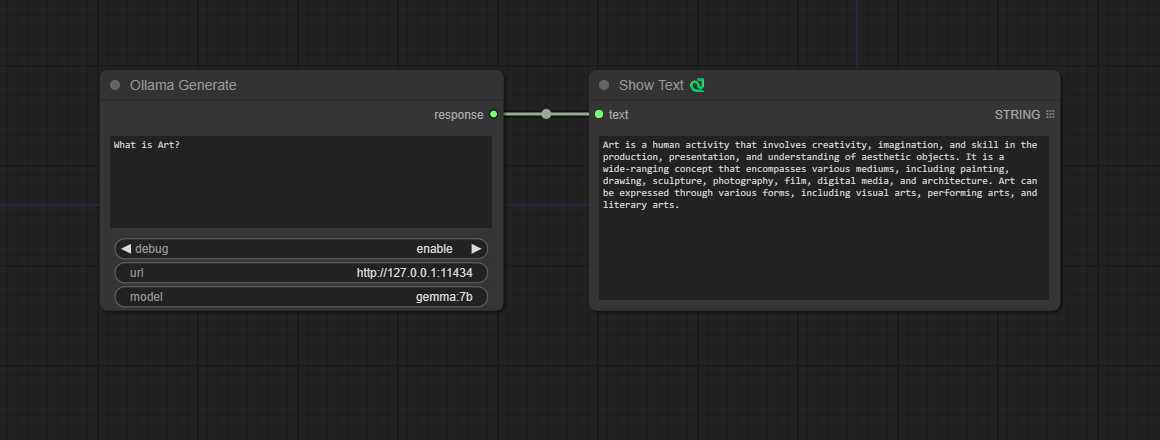

A node that lets you query an LLM with a given prompt.

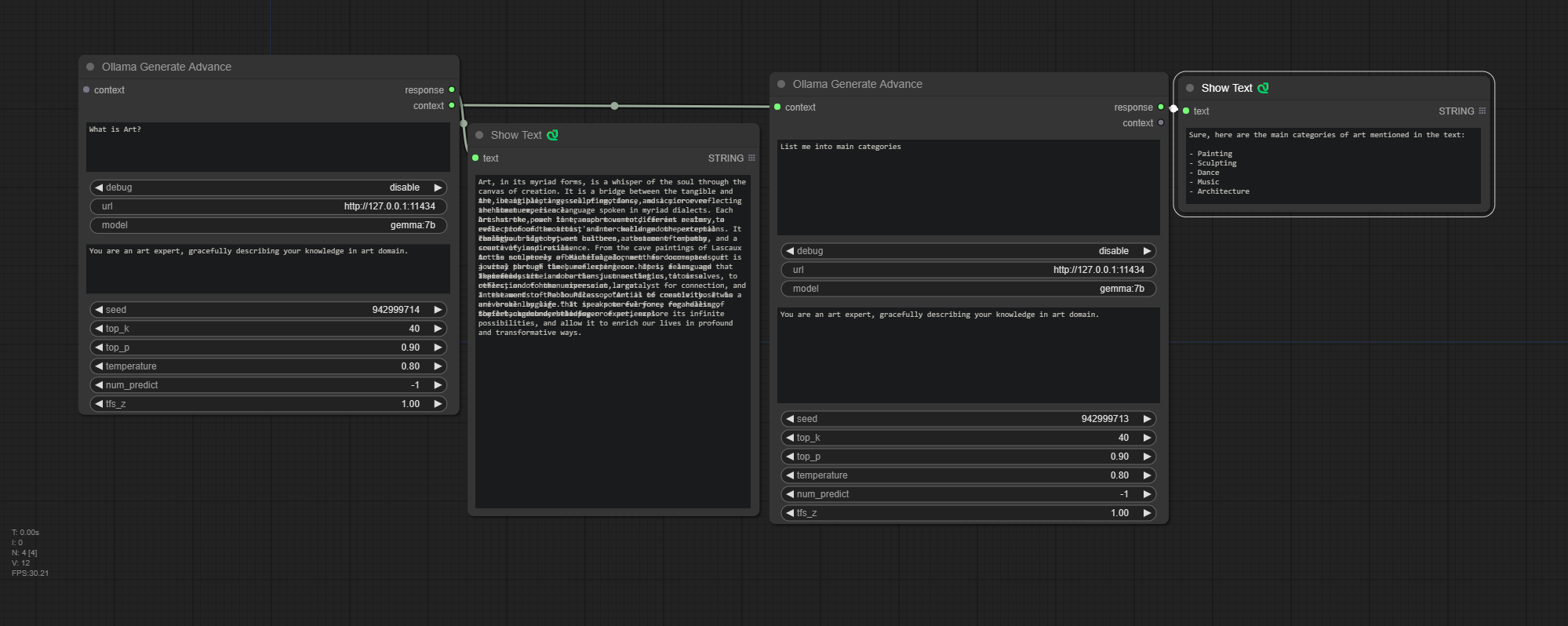

A node that lets you query an LLM with a given prompt, with tunable generation parameters and the ability to preserve context so that generations can be chained.

Check the Ollama API docs for information on the parameters.

More params info
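The sketch below illustrates what "parameters" and "preserved context" can mean at the REST API level. It is an assumption-laden illustration, not the node's actual code: it assumes that `/api/generate` accepts an `options` object (for example `temperature` or `seed`) and a `context` list that is returned by one reply and can be passed to the next request. All function names here are hypothetical.

```python
import json
import urllib.request
from typing import Callable, List, Optional


def build_generate_payload(model: str, prompt: str,
                           options: Optional[dict] = None,
                           context: Optional[List[int]] = None) -> dict:
    """Build a request body; options and context are added only when given."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    if options:
        payload["options"] = dict(options)   # e.g. {"temperature": 0.2}
    if context:
        payload["context"] = list(context)   # tokens from a previous reply
    return payload


def post_generate(payload: dict,
                  base_url: str = "http://127.0.0.1:11434",  # assumed default
                  timeout: float = 120.0) -> dict:
    """POST the payload to /api/generate and return the decoded JSON reply."""
    request = urllib.request.Request(
        base_url.rstrip("/") + "/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def chain(prompts: List[str], model: str,
          send: Callable[[dict], dict] = post_generate,
          options: Optional[dict] = None) -> List[str]:
    """Send prompts one after another, feeding each reply's context forward."""
    context = None
    answers = []
    for prompt in prompts:
        reply = send(build_generate_payload(model, prompt, options, context))
        answers.append(reply.get("response", ""))
        context = reply.get("context", context)  # keep old context if absent
    return answers
```

The `send` parameter exists so the chaining logic can be tried without a server by passing a stand-in function; with a running server, `chain(["Name a color.", "Why that one?"], "llama3")` would let the second prompt see the first exchange. The model name `llama3` is only an example.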

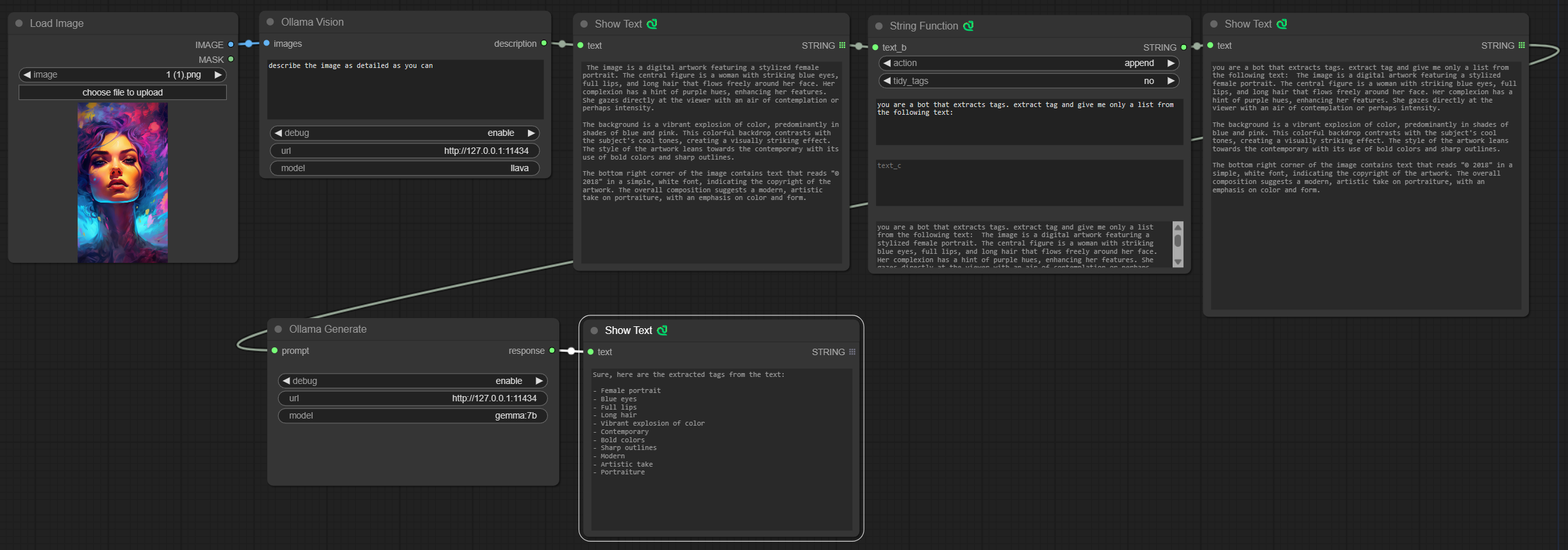

Consider the following workflow, which runs a vision model on an image and then performs additional text processing with the desired LLM. In the OllamaGenerate node, set the prompt as an input.

The custom Text Nodes in the examples can be found here: https://github.com/pythongosssss/ComfyUI-Custom-Scripts