[Home Page] [Open Access] [arXiv v1] [arXiv v2]

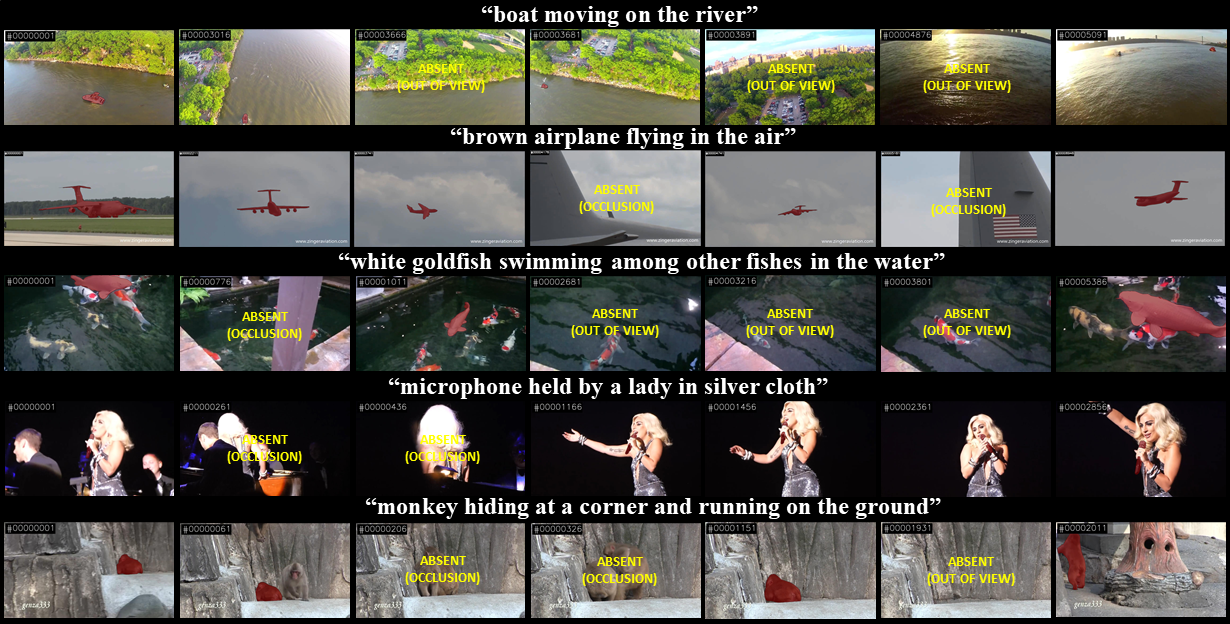

LVOS V1 is a benchmark for long-term video object segmentation. It consists of 220 videos: 120 for training (annotations public), 50 for validation (annotations public), and 50 for testing (annotations not public). Every video comes with high-quality, dense pixel-wise annotations.

LVOS V2 expands the number of videos from 220 to 720: 420 for training, 140 for validation, and 160 for testing. For the training and validation sets, we have released the images and annotations; for the test set, we publish only the images and the first-frame masks.

Download the LVOS V2 dataset from Google Drive ( Train | Eval | Test ), or Baidu Drive ( Train | Eval | Test ).

After unzipping the image data, please download the meta JSON files from Google Drive | Baidu Drive and put them under the corresponding folder.

Organize as follows:

    {LVOS V2 ROOT}
    |-- train
        |-- JPEGImages
            |-- video1
                |-- 00000001.jpg
                |-- ...
        |-- Annotations
            |-- video1
                |-- 00000001.png
                |-- ...
        |-- train_meta.json
    |-- val
        |-- ...
    |-- test
        |-- ...
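
Before training, it can help to confirm that the unzipped data matches the layout above. The following is a minimal sketch, not part of the official toolkits: the function name `check_split` is hypothetical, and it assumes that the `x` in `x_meta.json` is the split directory name (`train`, `val`, or `test`) and that the meta file sits directly inside the split folder. Because only first-frame masks are published for the test set, it compares video folder names only, not frame counts.

```python
from pathlib import Path


def check_split(root, split):
    """Return a list of human-readable problems found in one split directory.

    Assumption: the meta file is named "<split>_meta.json" and lives
    directly under "<root>/<split>".
    """
    split_dir = Path(root) / split
    images = split_dir / "JPEGImages"
    annos = split_dir / "Annotations"
    meta = split_dir / f"{split}_meta.json"

    problems = []
    for path in (images, annos, meta):
        if not path.exists():
            problems.append(f"missing: {path}")

    # Compare video folder names only when both folders are present.
    if images.is_dir() and annos.is_dir():
        image_videos = {p.name for p in images.iterdir() if p.is_dir()}
        anno_videos = {p.name for p in annos.iterdir() if p.is_dir()}
        for name in sorted(image_videos - anno_videos):
            problems.append(f"no annotation folder for video: {name}")
    return problems
```

An empty list means the split passed these basic checks; for example, `check_split("/path/to/LVOS_V2", "train")` could be called once per split, where the root path is whatever directory you unzipped into.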

x_meta.json

    {
        "videos": {
            "<video_id>": {
                "objects": {
                    "<object_id>": {
                        "frame_range": {
                            "start": <start_frame>,
                            "end": <end_frame>,
                            "frame_nums": <frame_nums>
                        }
                    }
                }
            }
        }
    }
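
As an illustration of how this schema can be traversed, here is a small sketch that flattens `x_meta.json` into one record per object. The helper names `load_meta` and `iter_objects` are hypothetical and not taken from the LVOS toolkits; the sketch assumes only the nesting shown above and passes the frame values through unchanged, whatever type they have in the file.

```python
import json


def load_meta(path):
    """Read a meta JSON file such as train_meta.json into a dict."""
    with open(path, "r", encoding="utf-8") as f:
        return json.load(f)


def iter_objects(meta):
    """Yield (video_id, object_id, start, end, frame_nums) for every object.

    Assumes the nesting videos -> <video_id> -> objects -> <object_id>
    -> frame_range, as in the schema above.
    """
    for video_id, video in meta["videos"].items():
        for object_id, obj in video["objects"].items():
            frame_range = obj["frame_range"]
            yield (
                video_id,
                object_id,
                frame_range["start"],
                frame_range["end"],
                frame_range["frame_nums"],
            )
```

A typical use would be `for row in iter_objects(load_meta("train/train_meta.json")): print(row)`, with the path adjusted to your own dataset root.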

x_meta_attribute.json

    {
        "sets": [
            x
        ],
        "attributes": [
            <attribute>
        ],
        "videos": {
            "<video_id>": {
                "objects": {
                    "<object_id>": {
                        "frame_range": {
                            "start": <start_frame>,
                            "end": <end_frame>,
                            "frame_nums": <frame_nums>
                        }
                    }
                },
                "set": <set>,
                "attributes": [<attribute 1>, <attribute 2>],
                "num_scribbles": <num_scribbles>,
                "name": <video_id>,
                "image_size": [<height>, <width>]
            }
        }
    }

    # <video_id> is the name of the video.
    # <object_id> matches the pixel value of the object in the annotated segmentation PNG files.
    # <frame_id> is the zero-padded frame index used in the file names (for example, 00000001); it does not necessarily start from 0.
    # <start_frame> is the first frame ID of the target object's frame range.
    # <end_frame> is the last frame ID of the target object's frame range.
    # <frame_nums> is the number of frames in which the target object exists.
    # <set> is the set that the video belongs to.
    # <attribute 1>, <attribute 2> are attributes of the video.
    # <num_scribbles> is the number of scribbles for the interactive VOS task; it is available only for the validation and test sets.
    # <height>, <width> are the height and width of a frame.
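
The per-video `attributes` list in `x_meta_attribute.json` makes it possible to select or summarize videos by attribute. The sketch below is one way to do that under the schema described here; the function names are hypothetical, the attribute strings in any usage are placeholders rather than real LVOS attribute names, and videos without an `attributes` key are treated as having none.

```python
from collections import Counter


def videos_with_attribute(attr_meta, attribute):
    """Return the sorted IDs of videos whose attribute list contains `attribute`."""
    return sorted(
        video_id
        for video_id, video in attr_meta["videos"].items()
        if attribute in video.get("attributes", [])
    )


def attribute_counts(attr_meta):
    """Count how many videos carry each attribute."""
    counts = Counter()
    for video in attr_meta["videos"].values():
        counts.update(video.get("attributes", []))
    return counts
```

The input is the dictionary obtained by parsing the file with `json.load`; the top-level `attributes` list in the same file can be used to check which attribute names are valid before filtering.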

Download the LVOS V1 dataset from Google Drive ( Train | Eval | Test ), Baidu Drive ( Train | Eval | Test ), or Kaggle ( Train | Eval | Test ).

After unzipping the image data, please download the meta JSON files from Google Drive | Baidu Drive | Kaggle and put them under the corresponding folder.

For the language captions, please download the expression meta JSON files from Google Drive | Baidu Drive | Kaggle and put them under the corresponding folder.

Organize as follows:

    {LVOS V1 ROOT}
    |-- train
        |-- JPEGImages
            |-- video1
                |-- 00000001.jpg
                |-- ...
        |-- Annotations
            |-- video1
                |-- 00000001.png
                |-- ...
        |-- train_meta.json
        |-- train_expression_meta.json
    |-- val
        |-- ...
    |-- test
        |-- ...

x_meta.json

    {
        "videos": {
            "<video_id>": {
                "objects": {
                    "<object_id>": {
                        "frame_range": {
                            "start": <start_frame>,
                            "end": <end_frame>,
                            "frame_nums": <frame_nums>
                        }
                    }
                }
            }
        }
    }

x_expression_meta.json

    {
        "videos": {
            "<video_id>": {
                "objects": {
                    "<object_id>": {
                        "frame_range": {
                            "start": <start_frame>,
                            "end": <end_frame>,
                            "frame_nums": <frame_nums>
                        },
                        "caption": <caption>
                    }
                }
            }
        }
    }

    # <object_id> matches the pixel value of the object in the annotated segmentation PNG files.
    # <frame_id> is the zero-padded frame index used in the file names (for example, 00000001); it does not necessarily start from 0.
    # <start_frame> is the first frame ID of the target object's frame range.
    # <end_frame> is the last frame ID of the target object's frame range.
    # <frame_nums> is the number of frames in which the target object exists.
    # <caption> is the language expression that describes the target object.
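
For language-guided experiments, the captions can be gathered into a lookup keyed by video and object. This is a minimal sketch assuming the `x_expression_meta.json` schema shown above; `collect_captions` is a hypothetical helper, not a toolkit function, and objects with a missing or empty `caption` are skipped.

```python
def collect_captions(expression_meta):
    """Map (video_id, object_id) to the caption of that object.

    Objects without a non-empty "caption" entry are left out.
    """
    captions = {}
    for video_id, video in expression_meta["videos"].items():
        for object_id, obj in video["objects"].items():
            caption = obj.get("caption")
            if caption:
                captions[(video_id, object_id)] = caption
    return captions
```

The argument is the parsed JSON dictionary, for example the result of `json.load` on `train/train_expression_meta.json` under your own dataset root.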

We use DDMemory as the example model to analyze LVOS. DDMemory is currently unavailable and will be released soon; in the meantime, we use AOT-T as an alternative. You can download the results from Google Drive.

Please use our evaluation toolkits to assess your model's results on the validation set. See this repository for more details on how to use the toolkits.

For the test set, please use the CodaLab server to evaluate your own algorithms.

We released the tools and test scripts in this repository. Click on this link for more information.

- The annotations of LVOS are licensed under a Creative Commons Attribution 4.0 License.

- The evaluation toolkits of LVOS are licensed under a BSD-3-Clause license.

- The data of LVOS is released for non-commercial research purposes only.

- All videos and images are from VOT-LT 2019, LaSOT, and some other datasets, and are not the property of Fudan. Fudan is not responsible for the content or the meaning of these videos and images.

    @InProceedings{Hong_2023_ICCV,
        author    = {Hong, Lingyi and Chen, Wenchao and Liu, Zhongying and Zhang, Wei and Guo, Pinxue and Chen, Zhaoyu and Zhang, Wenqiang},
        title     = {LVOS: A Benchmark for Long-term Video Object Segmentation},
        booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},
        month     = {October},
        year      = {2023},
        pages     = {13480-13492}
    }