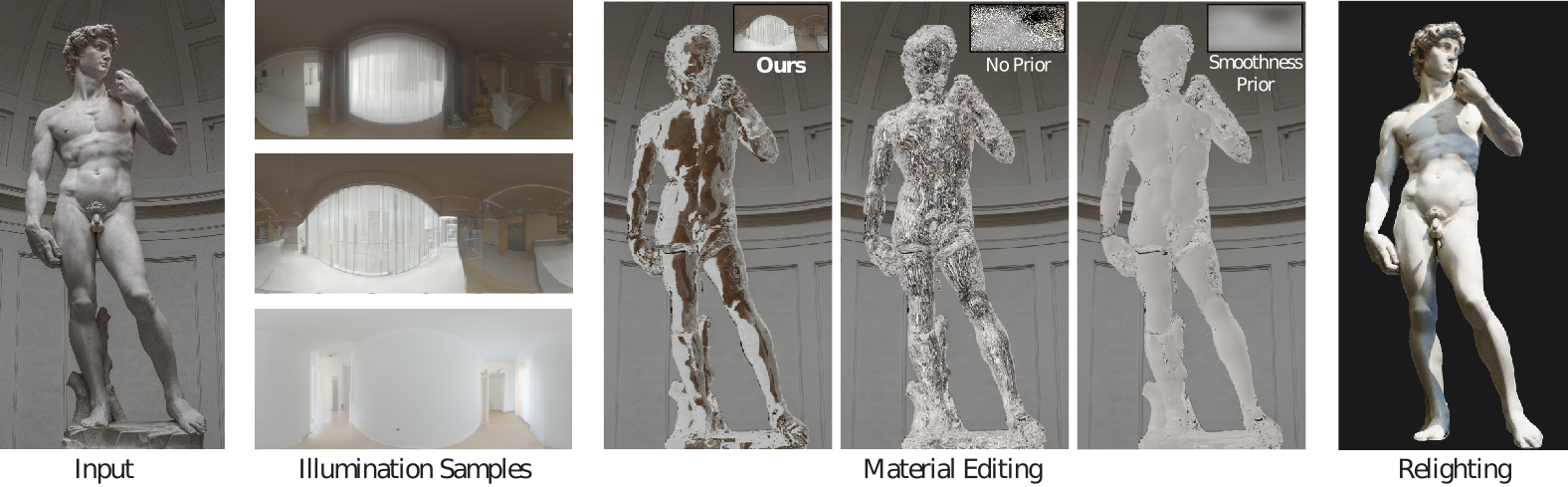

PyTorch implementation of Diffusion Posterior Illumination for Ambiguity-aware Inverse Rendering.

ACM Transactions on Graphics (Proceedings of SIGGRAPH Asia), 2023

Linjie Lyu1, Ayush Tewari2, Marc Habermann1, Shunsuke Saito3, Michael Zollhöfer3, Thomas Leimkühler1, and Christian Theobalt1

1Max Planck Institute for Informatics, Saarland Informatics Campus, 2MIT CSAIL, 3Reality Labs Research

pip install -r requirements.txt

pip install torch==1.11.0+cu113 torchvision==0.12.0+cu113 torchaudio==0.11.0 --extra-index-url https://download.pytorch.org/whl/cu113

pip install mitsuba

See hotdog for references.

image/

0.exr or .png

1.exr or .png

...

scene.xml

camera.xml

Geometry

For real-world scenes, you can use a neural SDF reconstruction method to extract the mesh for the Mitsuba XML file.

Camera

Some camera-reader code is provided in camera. Alternatively, you can load the cameras into Blender and export a camera.xml with the Mitsuba Blender Add-on.

For more details, see the Mitsuba 3 documentation.
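Before running the pipeline, it can help to confirm that a scene folder matches the layout listed above. The following is a minimal sketch, not part of this repository: the function name `check_scene_dir` is hypothetical, and it assumes that scene.xml and camera.xml sit next to the image/ folder rather than inside it.

```python
from pathlib import Path
from typing import List

# Image formats named in the data layout above.
IMAGE_SUFFIXES = {".exr", ".png"}


def check_scene_dir(root: str) -> List[str]:
    """Return a list of layout problems for a scene folder (empty if none).

    Hypothetical helper: assumes scene.xml and camera.xml are siblings of image/.
    """
    base = Path(root)
    problems = []

    # The two Mitsuba XML files are expected at the top level of the scene folder.
    for name in ("scene.xml", "camera.xml"):
        if not (base / name).is_file():
            problems.append(f"missing {name}")

    image_dir = base / "image"
    if not image_dir.is_dir():
        problems.append("missing image/ directory")
        return problems

    # At least one input view (0.exr, 1.png, ...) should be present.
    images = [p for p in image_dir.iterdir() if p.suffix.lower() in IMAGE_SUFFIXES]
    if not images:
        problems.append("no .exr or .png files in image/")
    return problems
```

An empty return value means the folder has the expected files. It does not validate the contents of the XML files or the images.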

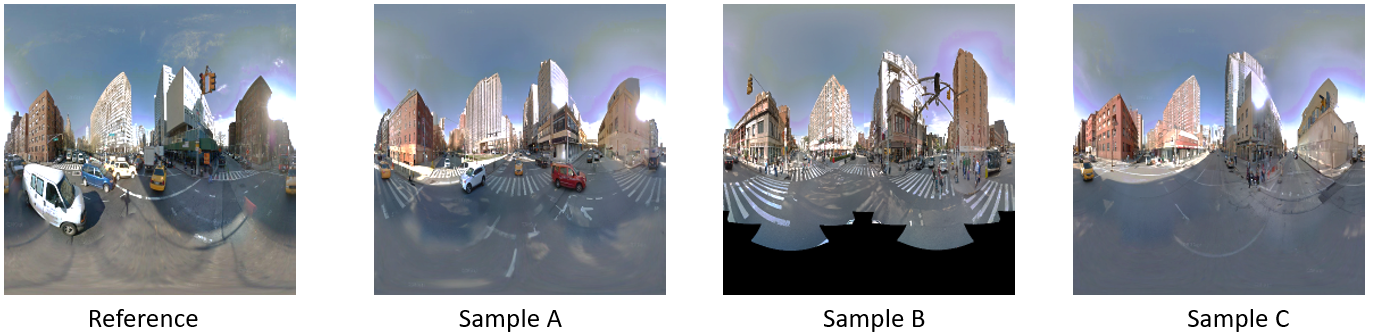

mkdir models

We use Laval and Streetlearn as environment map datasets. Refer to guided-diffusion for training details, or download pre-trained checkpoints to ./models/.

Here is an example of sampling realistic outdoor environment maps, taking hotdog as input.

Environment Map Sampling:

python sample_condition.py \
--model_config=configs/model_config_outdoor.yaml \
--diffusion_config=configs/diffusion_config.yaml \
--task_config=raytracing_config_outdoor.yaml

Material Refinement:

python material_optimization.py --task_config=configs/raytracing_config_outdoor.yaml

For indoor scenes, use the indoor configs. The illumi_scale hyperparameter in the indoor config is usually between 1.0 and 10.0.
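The two stages above can also be assembled from a small script, which makes switching between outdoor and indoor configs less error-prone. This is a sketch under stated assumptions: `build_commands` is a hypothetical helper, the indoor config file names are assumed to mirror the outdoor ones with "outdoor" replaced by "indoor", both scripts are assumed to be launched with `python`, and the config paths are copied as written in the commands above.

```python
import shlex
from typing import List


def build_commands(scene_type: str = "outdoor") -> List[List[str]]:
    """Return the sampling and material-refinement commands as argument lists.

    Hypothetical helper: indoor file names are an assumption, not confirmed here.
    """
    if scene_type not in ("outdoor", "indoor"):
        raise ValueError(f"unknown scene type: {scene_type!r}")

    # Stage 1: sample environment maps with the diffusion prior.
    sampling = [
        "python", "sample_condition.py",
        f"--model_config=configs/model_config_{scene_type}.yaml",
        "--diffusion_config=configs/diffusion_config.yaml",
        f"--task_config=raytracing_config_{scene_type}.yaml",
    ]
    # Stage 2: refine the material parameters.
    refinement = [
        "python", "material_optimization.py",
        f"--task_config=configs/raytracing_config_{scene_type}.yaml",
    ]
    return [sampling, refinement]


if __name__ == "__main__":
    # Print the commands for inspection; pass each list to subprocess.run to execute.
    for cmd in build_commands("outdoor"):
        print(shlex.join(cmd))
```

Returning argument lists rather than a single string keeps the commands safe to hand to `subprocess.run` without shell quoting concerns.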

To generate natural environment maps with a differentiable rendering method other than Mitsuba 3, replace the rendering (forward) and update_material functions in ./guided_diffusion/measurements.py.
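To illustrate the shape of such a replacement, here is a toy sketch of an operator exposing the two hooks named above. It is not the code in measurements.py: the signatures, the `RenderingOperator` protocol, and the `ToyDiffuseOperator` class are all assumptions made for illustration, and the "renderer" is a pure-Python stand-in (pixel = albedo times mean environment radiance) rather than a real differentiable renderer.

```python
from typing import List, Protocol, Sequence


class RenderingOperator(Protocol):
    """Hypothetical interface for the two hooks; real signatures may differ."""

    def forward(self, env_map: Sequence[float]) -> List[float]:
        """Render observations from an environment map."""
        ...

    def update_material(self, env_map: Sequence[float], target: Sequence[float]) -> None:
        """Refine material parameters with the lighting held fixed."""
        ...


class ToyDiffuseOperator:
    """Stand-in renderer: each pixel is albedo * mean environment radiance."""

    def __init__(self, albedo: Sequence[float]) -> None:
        self.albedo = list(albedo)

    def forward(self, env_map: Sequence[float]) -> List[float]:
        irradiance = sum(env_map) / len(env_map)
        return [a * irradiance for a in self.albedo]

    def update_material(
        self, env_map: Sequence[float], target: Sequence[float], lr: float = 0.5
    ) -> None:
        # One gradient step per pixel on the loss 0.5 * (albedo * E - target)^2,
        # whose derivative with respect to albedo is (albedo * E - target) * E.
        irradiance = sum(env_map) / len(env_map)
        for i, t in enumerate(target):
            residual = self.albedo[i] * irradiance - t
            self.albedo[i] -= lr * residual * irradiance
```

In a real replacement, `forward` would call your renderer in a way that keeps gradients with respect to the environment map, since the sampling stage relies on them.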

@article{lyu2023dpi,

title={Diffusion Posterior Illumination for Ambiguity-aware Inverse Rendering},

author={Lyu, Linjie and Tewari, Ayush and Habermann, Marc and Saito, Shunsuke and Zollh{\"o}fer, Michael and Leimk{\"u}hler, Thomas and Theobalt, Christian},

journal={ACM Transactions on Graphics},

volume={42},

number={6},

year={2023}

}

This code is based on the DPS and guided-diffusion codebases.