[Figure panels: CARAFE, FADE]

This repository contains the official implementation of FADE, an upsampling operator presented in our paper:

FADE: Fusing the Assets of Decoder and Encoder for Task-Agnostic Upsampling

Proc. European Conference on Computer Vision (ECCV)

Hao Lu, Wenze Liu, Hongtao Fu, Zhiguo Cao

Huazhong University of Science and Technology, China

- Simple and effective: As an upsampling operator, FADE brings substantial improvements while adding only a small number of parameters;

- Task-agnostic: Compared with other upsamplers, FADE performs well on both region-sensitive and detail-sensitive dense prediction tasks;

- Plug and play: FADE can be easily incorporated into most dense prediction models, particularly encoder-decoder architectures.
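To illustrate the plug-and-play idea, here is a minimal NumPy sketch of a decoder stage whose upsampler is passed in as a function. The names `decoder_stage` and `nearest_upsample` are hypothetical and not part of this repository. The only idea borrowed from FADE is that the upsampler may see both the low-resolution decoder feature and the high-resolution encoder feature, so an encoder-aware operator can replace plain interpolation without changing the rest of the stage.

```python
import numpy as np


def nearest_upsample(decoder_feat, encoder_feat):
    """Baseline 2x nearest-neighbour upsampling; ignores the encoder feature.

    decoder_feat: (C, H, W) -> returns (C, 2H, 2W)
    """
    return decoder_feat.repeat(2, axis=1).repeat(2, axis=2)


def decoder_stage(decoder_feat, encoder_feat, upsample):
    """Hypothetical encoder-decoder fusion stage with a swappable upsampler.

    `upsample` receives both features, so an encoder-aware upsampler
    can be dropped in without touching the rest of this function.
    """
    up = upsample(decoder_feat, encoder_feat)
    # The upsampled map must match the spatial size of the skip connection
    assert up.shape[1:] == encoder_feat.shape[1:], "spatial size mismatch"
    # Fuse by channel concatenation (a common choice, assumed here)
    return np.concatenate([up, encoder_feat], axis=0)


# Usage: 4-channel 8x8 decoder feature, 2-channel 16x16 encoder feature
dec = np.ones((4, 8, 8))
enc = np.zeros((2, 16, 16))
fused = decoder_stage(dec, enc, nearest_upsample)
print(fused.shape)  # (6, 16, 16)
```

Swapping in a different upsampler only means passing another function with the same `(decoder_feat, encoder_feat)` signature.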

Our code has been tested with Python 3.8.8 and PyTorch 1.9.0. mmcv is also required, because it provides the feature assembly operator from CARAFE.
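The feature assembly step that mmcv provides for CARAFE can be summarized in a few lines. Each output pixel is a weighted sum of a k×k neighbourhood around its source location in the low-resolution map, using one normalized kernel per output pixel. The NumPy sketch below is a slow reference for illustration only, not mmcv's operator. The function name `reassemble`, the kernel layout `(k*k, scale*H, scale*W)`, applying the softmax inside the function, and zero padding at the borders are our assumptions. How FADE predicts the kernels is not shown.

```python
import numpy as np


def reassemble(x, kernels, scale=2, k=3):
    """CARAFE-style content-aware reassembly (illustrative reference sketch).

    x:       (C, H, W) feature map to upsample
    kernels: (k*k, scale*H, scale*W) unnormalized kernels, one per output pixel
    returns: (C, scale*H, scale*W)
    """
    C, H, W = x.shape
    assert kernels.shape == (k * k, scale * H, scale * W)
    # Softmax over the k*k taps of each output pixel (numerically stable form)
    w = np.exp(kernels - kernels.max(axis=0, keepdims=True))
    w = w / w.sum(axis=0, keepdims=True)
    r = k // 2
    xp = np.pad(x, ((0, 0), (r, r), (r, r)))  # zero padding at the borders
    out = np.zeros((C, scale * H, scale * W), dtype=np.result_type(x, w))
    for oi in range(scale * H):
        for oj in range(scale * W):
            i, j = oi // scale, oj // scale       # source location in x
            patch = xp[:, i:i + k, j:j + k]       # (C, k, k) window centred at (i, j)
            weights = w[:, oi, oj].reshape(1, k, k)
            out[:, oi, oj] = (patch * weights).sum(axis=(1, 2))
    return out


rng = np.random.default_rng(0)
x = rng.standard_normal((3, 4, 4))

# Kernels that put almost all weight on the centre tap reduce to nearest upsampling
peaked = np.zeros((9, 8, 8))
peaked[4] = 50.0
up = reassemble(x, peaked)

# Normalized kernels preserve a constant input away from the zero-padded border
flat = reassemble(np.ones((1, 4, 4)), rng.standard_normal((9, 8, 8)))
```

Because every kernel sums to one, reassembly only redistributes existing feature values; the content awareness comes entirely from how the kernels are predicted.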

Our experiments are based on A2U matting and SegFormer; please follow their installation instructions to prepare the models. The `a2u_matting` and `segformer` folders contain the modified models and the config files for FADE and FADE-Lite.

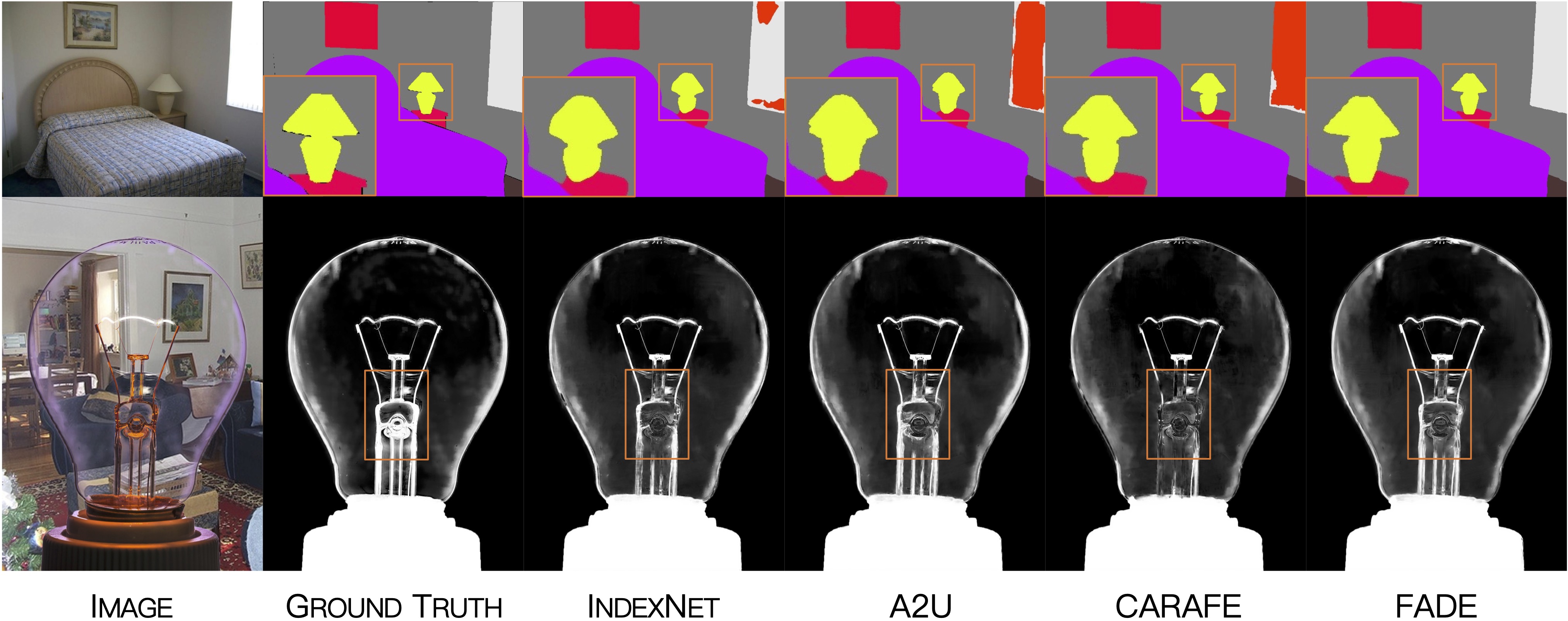

Here are the results on image matting and semantic segmentation:

| Image Matting | #Param. | GFLOPs | SAD | MSE | Grad | Conn | Log |
|---|---|---|---|---|---|---|---|
| Bilinear | 8.05M | 8.61 | 37.31 | 0.0103 | 21.38 | 35.39 | -- |
| CARAFE | +0.26M | +6.00 | 41.01 | 0.0118 | 21.39 | 39.01 | -- |
| IndexNet | +12.26M | +31.70 | 34.28 | 0.0081 | 15.94 | 31.91 | -- |
| A2U | +38K | +0.66 | 32.15 | 0.0082 | 16.39 | 29.25 | -- |
| FADE | +0.12M | +8.85 | 31.10 | 0.0073 | 14.52 | 28.11 | link |
| FADE-Lite | +27K | +1.46 | 31.36 | 0.0075 | 14.83 | 28.21 | link |

| Semantic Segmentation | #Param. | GFLOPs | mIoU | bIoU | Log |
|---|---|---|---|---|---|
| Bilinear | 13.7M | 15.91 | 41.68 | 27.80 | link |
| CARAFE | +0.44M | +1.45 | 42.82 | 29.84 | link |
| IndexNet | +12.60M | +30.65 | 41.50 | 28.27 | link |
| A2U | +0.12M | +0.41 | 41.45 | 27.31 | link |
| FADE | +0.29M | +2.65 | 44.41 | 32.65 | link |
| FADE-Lite | +80K | +0.89 | 43.49 | 31.55 | link |
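The tables can also be read in relative terms. This short calculation uses only numbers copied from the tables (lower SAD is better, higher mIoU is better) to compare FADE with the bilinear baseline; the variable names are ours.

```python
# Values copied from the tables: bilinear baseline vs. FADE
bilinear_sad, fade_sad = 37.31, 31.10            # image matting, SAD
bilinear_miou, fade_miou = 41.68, 44.41          # semantic segmentation, mIoU
seg_params_m, fade_extra_params_m = 13.7, 0.29   # segmentation model, millions of params

# Relative SAD reduction in percent
sad_reduction = (bilinear_sad - fade_sad) / bilinear_sad * 100
# Absolute mIoU gain in points
miou_gain = fade_miou - bilinear_miou
# Extra parameters as a percentage of the baseline segmentation model
param_overhead = fade_extra_params_m / seg_params_m * 100

print(f"SAD reduction: {sad_reduction:.2f}%")     # SAD reduction: 16.64%
print(f"mIoU gain: {miou_gain:.2f} points")       # mIoU gain: 2.73 points
print(f"Param overhead: {param_overhead:.2f}%")   # Param overhead: 2.12%
```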

If you find this work or code useful for your research, please cite:

    @inproceedings{lu2022fade,
      title={FADE: Fusing the Assets of Decoder and Encoder for Task-Agnostic Upsampling},
      author={Lu, Hao and Liu, Wenze and Fu, Hongtao and Cao, Zhiguo},
      booktitle={Proc. European Conference on Computer Vision (ECCV)},
      year={2022}
    }

This code is for academic purposes only. Contact: Hao Lu (hlu@hust.edu.cn)