The same weather app built in 10 different frontend frameworks

For automated cross-framework web performance benchmarking

📊 View Results • 🎯 Choose a Framework

I've built the same weather app in 10 different frontend web frameworks, along with automated scripts to benchmark the performance, quality and capabilities of each.

To finally answer the age-old question: "Which is the best* frontend framework?"

So, without further ado, let's see how every framework weathers the storm! ⛈️

- To objectively compare frontend frameworks in an automated way

- Because I have no life, and like building the same thing 10 times

- Smallest bundle size and best compression

- Fastest load time (FCP, LCP, TTI, TTFB, etc)

- Lowest resource consumption (CPU & memory usage, etc)

- Most maintainable (least verbose, complex and repetitive code)

- Quickest build time (prod compile, dev server HMR latency, etc)

- Frameworks Covered

- Usage Guide

- Project Outline

- Requirement Spec

- Benchmarking

- Results

- Real-world Applications

- Status

- Attributions and License

Click a framework to view info, test/lint/build/etc statuses, and to preview the demo app

You'll need to ensure you've got Git, Node (LTS or v22+), Python (3.10) and uv installed

git clone git@github.com:lissy93/framework-benchmarks.git

cd framework-benchmarks

npm install

pip install -r scripts/requirements.txt

npm run setup

Run npm run dev:[app-name]

Or, you can: cd ./apps/[app-name] then npm i and npm run dev

All apps are tested with the same shared test suite, to ensure they all conform to the same requirements, and are fully functional.

Tests are done with Playwright and can be found in the tests/ directory.

Either execute tests for all implementations with npm test, or just for a specific app with npm run test:[app] (e.g. npm run test:react).

You should also verify the lint checks pass, with npm run lint or npm run lint:[app].

Build the app for production, with npm run build:[app-name]

Then upload ./apps/[app-name]/dist/ to any web server, CDN or static hosting provider

- Create app directory: apps/your-framework/ with package.json, vite.config.js, and a src/ dir

- Build your app (ensuring it meets the requirements spec)

- Update frameworks.json

- Add a test config file in tests/config/

- Then run node scripts/setup/generate-scripts.js and node scripts/setup/sync-assets.js

framework-benchmarks
├── scripts       # Scripts for managing the app (syncing assets, generating mocks, etc)
├── assets        # These are shared across all apps for consistency
│   ├── icons     # SVG icons, used by all apps
│   ├── styles    # CSS classes and variables, used by all apps
│   └── mocks     # Mocked data, used by apps when running benchmarks
├── tests         # Test suite
└── apps          # Directory for each app as a standalone project
    ├── react/
    ├── svelte/
    ├── angular/
    └── ...

The scripts/ directory contains everything for managing the project (setup, testing, benchmarking, reporting, etc).

You can view a list of scripts by running npm run help.

To keep things uniform, all apps share certain assets:

- tests/ - Same test suite used for all apps, to ensure each app conforms to the spec and is fully functional

- assets/ - Same static assets (icons, fonts, styles, meta, etc)

- assets/styles/ - Same styles for all apps, and theming is done with CSS variables

- Dependencies: Beyond their framework code, none of the apps use any additional dependencies, libraries or third-party "stuff"

- Data: Apps support using real weather data, from open-meteo api. However, to keep tests fair, we use mocked data when running benchmarks.

- npm run setup - Creates mock data, syncs assets, updates scripts and installs dependencies

- npm run test - Runs the test suite for all apps, or a specific app

- npm run lint - Runs the linter for all apps, or a specific app

- npm run check - Verifies the project is correctly set up and ready to go

- npm run build - Builds all apps, or a specific app for production

- npm run start - Starts the demo server, which serves up all built apps

- npm run help - Displays a list of all available commands

See the package.json for all commands

Note that the project commands get generated automatically by the generate_scripts.py script, based on the contents of frameworks.json and config.json.
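As a rough illustration of how per-app commands could be derived from a framework list, here is a minimal TypeScript sketch. The `FrameworkEntry` shape, the `generateScripts` name, and the exact command strings are all assumptions made for this example; the real generator and the actual structure of frameworks.json may differ.

```typescript
// Hypothetical shape of one entry in frameworks.json (assumed, not the real schema).
interface FrameworkEntry {
  id: string; // e.g. "react", used as the [app-name] suffix
}

// Build a map of npm script names to commands for every app.
function generateScripts(frameworks: FrameworkEntry[]): Record<string, string> {
  const scripts: Record<string, string> = {};
  const tasks: string[] = ["dev", "build", "test", "lint"];
  for (const framework of frameworks) {
    for (const task of tasks) {
      // Assumption: each task delegates to the app's own package.json in apps/<id>.
      scripts[`${task}:${framework.id}`] = `npm --prefix apps/${framework.id} run ${task}`;
    }
  }
  return scripts;
}

const generated = generateScripts([{ id: "react" }, { id: "svelte" }]);
console.log(generated["dev:react"]); // npm --prefix apps/react run dev
```

Generating the commands from one list keeps the naming pattern (`dev:[app-name]`, `build:[app-name]`, and so on) consistent as frameworks are added.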

Every app is built with identical requirements (as validated by the shared test suite), and uses the same assets, styles, and data. The only difference is the framework used to build each.

Why a weather app? Because it enables us to use all the critical features of any frontend framework, including:

- Binding user input and validation

- Fetching external data asynchronously

- Basic state management of components

- Handling fallback views (loading, errors)

- Using browser features (location, storage, etc)

- Logic blocks, for iterative content and conditionals

- Lifecycle methods (mounting, updating, unmounting)

For our app to be somewhat complete and useful, it must do the following:

- On initial load, the user should see weather for their current GPS location

- The user should be able to search for a city, and view its weather

- And the user's city should be stored in localStorage for next time

- The app should show a detailed view of the current weather

- And a summary 7-day forecast, where days can be expanded for more details
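The "remember the user's city" requirement above could be sketched as follows, independent of any framework. The storage key, the function names and the in-memory store are hypothetical; in a browser, `window.localStorage` satisfies the same small interface.

```typescript
// Minimal subset of the Web Storage API, so the logic can also run outside a browser.
interface KeyValueStore {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
}

const CITY_KEY = "weather.city"; // hypothetical storage key

// Persist the searched city, ignoring blank input.
function saveCity(store: KeyValueStore, city: string): void {
  const trimmed = city.trim();
  if (trimmed.length > 0) {
    store.setItem(CITY_KEY, trimmed);
  }
}

// Returns the saved city, or null so the caller can fall back to geolocation.
function loadCity(store: KeyValueStore): string | null {
  return store.getItem(CITY_KEY);
}

// In-memory stand-in for window.localStorage, for demonstration only.
function createMemoryStore(): KeyValueStore {
  const data: Record<string, string> = {};
  return {
    getItem: (key: string): string | null => {
      const value = data[key];
      return value === undefined ? null : value;
    },
    setItem: (key: string, value: string): void => {
      data[key] = value;
    },
  };
}

const demoStore = createMemoryStore();
saveCity(demoStore, "  London ");
console.log(loadCity(demoStore)); // London
```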

There's certain standards every app should follow, and we want to use best practices, so:

- Theming: The app should support both light and dark mode, based on the user's preferences

- Internationalization: The copy should be extracted out of the code, so that it is translatable

- Accessibility: The app should meet AA standard of accessibility

- Mobile: The app should be fully responsive and optimized for mobile

- Performance: The app should be efficiently coded as best as the framework allows

- Testing: The app should meet 90% test coverage

- Error Handling: Errors should be handled, correctly surfaced, and traceable

- Quality: The code should be linted for consistent formatting

- Security: Inputs must be validated, data via HTTPS, and no known vulnerabilities

- SEO: Basic meta and og tags, SSR where possible

- CI: Automated tests, lints and validation should ensure all changes are compliant
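For the theming requirement in the list above, one possible way to pick a theme is sketched below. The rule that an explicit saved choice overrides the system preference is an assumption of this example, as is the `resolveTheme` name. In a browser, the second argument would typically come from `window.matchMedia("(prefers-color-scheme: dark)").matches`.

```typescript
type Theme = "light" | "dark";

// Assumed rule: an explicit saved choice wins; otherwise follow the OS-level preference.
function resolveTheme(saved: string | null, systemPrefersDark: boolean): Theme {
  if (saved === "light" || saved === "dark") {
    return saved;
  }
  return systemPrefersDark ? "dark" : "light";
}

console.log(resolveTheme(null, true));     // dark
console.log(resolveTheme("light", true));  // light
```

The resolved value can then be applied as a class or attribute on the root element, with the shared CSS variables doing the rest.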

To compare the frameworks, we need to measure:

- Bundle size & output

- Load metrics: FCP, LCP, CLS, TTI, interaction latency

- Hydration/SSR cost, CPU & memory

- Cold vs. warm cache behaviour

- Memory usage: idle, post-flow, leak delta

- Build time & dev server HMR latency
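To make the "leak delta" idea from the list above concrete, here is a small sketch that computes it per run and then takes the median across runs. The `MemorySample` shape, the field names and the choice of median as the aggregate are assumptions for illustration, not a description of the project's actual benchmark scripts.

```typescript
// One benchmark run's memory readings in megabytes (hypothetical shape).
interface MemorySample {
  idleMb: number;     // memory after load, before any interaction
  postFlowMb: number; // memory after the scripted user flow has finished
}

// Leak delta: how much memory remains in use after the flow, compared to idle.
function leakDelta(sample: MemorySample): number {
  return sample.postFlowMb - sample.idleMb;
}

// The median is less sensitive to a single outlier run than the mean.
function median(values: number[]): number {
  if (values.length === 0) {
    throw new Error("median of empty list");
  }
  const sorted = values.slice().sort((a, b) => a - b);
  const mid = Math.floor(sorted.length / 2);
  return sorted.length % 2 === 1 ? sorted[mid] : (sorted[mid - 1] + sorted[mid]) / 2;
}

// Illustrative numbers only, not real benchmark results.
const runs: MemorySample[] = [
  { idleMb: 20, postFlowMb: 23 },
  { idleMb: 21, postFlowMb: 22 },
  { idleMb: 20, postFlowMb: 28 },
];
console.log(median(runs.map(leakDelta))); // 3
```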

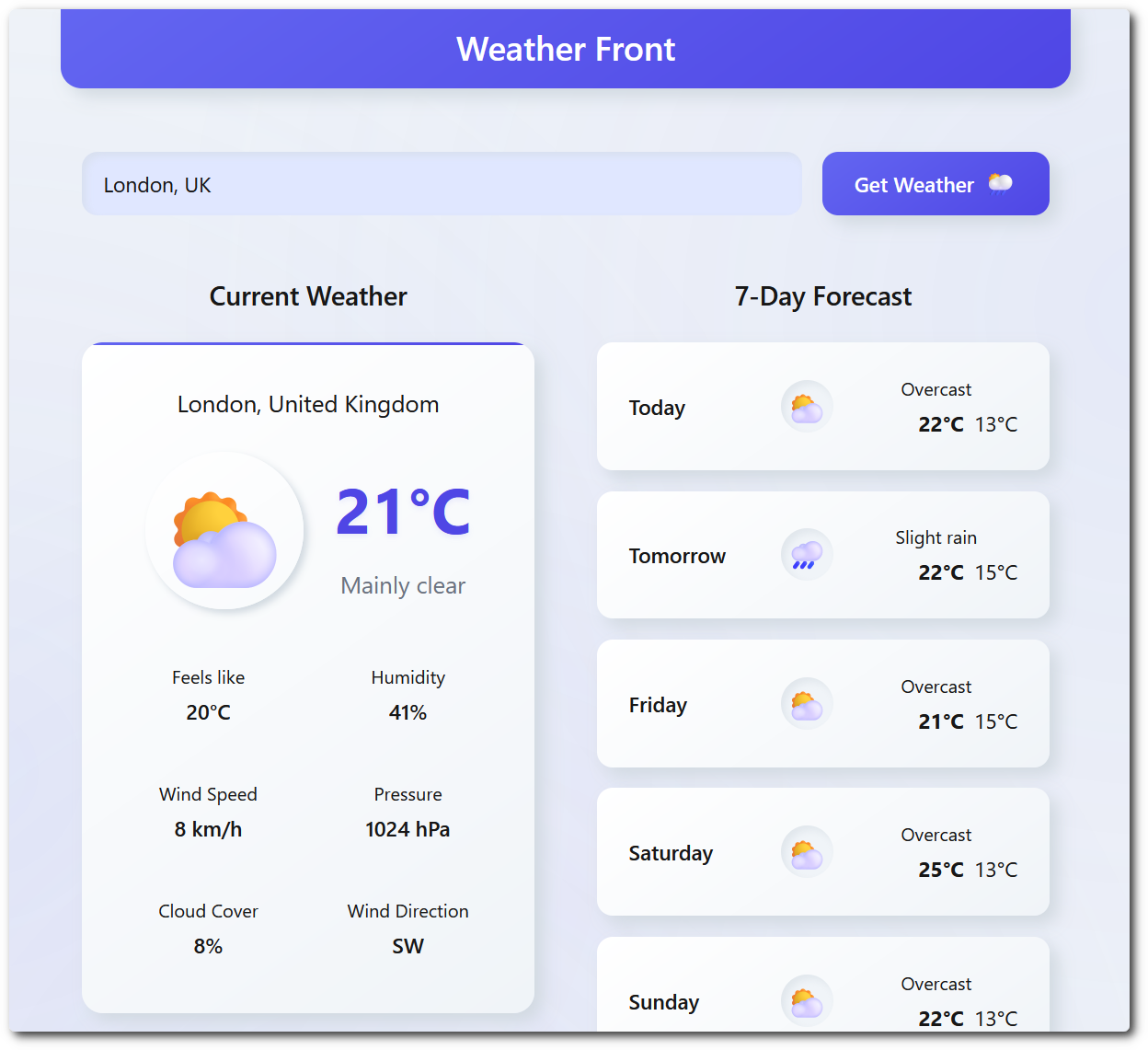

The interface is simple, but must be identical across all apps, as validated by the snapshots in the tests.

The screenshots will all look like this:

A summary of results can be viewed in summary.tsv.

Full, detailed results can be found in the results branch,

or attached as an artifact in the GitHub Actions benchmarking workflow runs.

For slightly more interactive reports, you can view the website at framework-benchmarks.as93.net,

and also view stats on a per-framework basis.
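If you want to work with summary.tsv programmatically, a tab-separated file can be read with a few lines of TypeScript. This is a generic sketch: the `parseTsv` helper is hypothetical, and the column names in the sample are made up, since the real columns of summary.tsv are not listed here.

```typescript
// Parse tab-separated text into one record per row, keyed by the header names.
function parseTsv(text: string): Record<string, string>[] {
  const lines = text.split(/\r?\n/).filter((line) => line.trim() !== "");
  if (lines.length === 0) {
    return [];
  }
  const headers = lines[0].split("\t");
  return lines.slice(1).map((line) => {
    const cells = line.split("\t");
    const row: Record<string, string> = {};
    headers.forEach((header, i) => {
      const cell = cells[i];
      row[header] = cell === undefined ? "" : cell; // missing cells become empty strings
    });
    return row;
  });
}

// Made-up sample; the real summary.tsv columns may be different.
const sampleTsv = "framework\tbundle_kb\nexample-a\t42\nexample-b\t17\n";
const parsedRows = parseTsv(sampleTsv);
console.log(parsedRows[1]["bundle_kb"]); // 17
```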

The following charts show live data from the latest benchmark run. See the web version for interactive charts.

| Framework | Stars | Downloads | Size | Contributors | Age | Last updated | License |
|---|---|---|---|---|---|---|---|
| | 238.9k | 193.8M | 1108.4 MB | 1.9k | 12.3y | 8 hours ago | MIT |
| | 98.8k | 17.5M | 553.8 MB | 2.5k | 11.0y | 3 weeks ago | MIT |
| | 84.1k | 11.6M | 113.8 MB | 861 | 8.8y | 12 hours ago | MIT |
| | 38k | 32.5M | 17.7 MB | 364 | 10.0y | 2 weeks ago | MIT |
| | 34.2k | 3.4M | 13.7 MB | 176 | 7.4y | 1 month ago | MIT |
| | 21.6k | 94.7k | 60.2 MB | 616 | 4.6y | 1 day ago | MIT |
| | 51.6k | 35.4M | 36.5 MB | 569 | 7.0y | 1 day ago | MIT |
| | 59.6k | 67.7M | 34.1 MB | 344 | 19.5y | 13 hours ago | MIT |
| | 30.3k | 1.3M | 8.8 MB | 295 | 5.8y | 1 week ago | MIT |
| | 20.3k | 14.1M | 59.0 MB | 205 | 8.2y | 1 week ago | BSD-3-Clause |
| | 4.2k | 7.5k | 3.6 MB | 24 | 2.4y | 3 weeks ago | MIT |

Different frameworks shine in different ways, and therefore have very different use cases.

So, in order to let each one shine, I have built real-world apps in each framework.

| Project | Framework | GitHub | Website |
|---|---|---|---|
| | | | 🌐 web-check.xyz |
| | | | 🌐 dashy.to |
| | | | 🌐 digital-defense.io |
| | | | 🌐 portainer-templates |
| | | | 🌐 domain-locker.com |
| | | | 🌐 email-comparison |
| | | | 🌐 who-dat.as93.net |
| | | | 🌐 N/A |
| | | | 🌐 awesome-privacy.xyz |
| | | | 🌐 raid-calculator |
| | | | 🌐 permissionator |

Each app gets built and tested to ensure that it is functional, compliant with the spec, and (reasonably) well coded. Below is the current status of each, but for complete details you can see the Workflow Logs via GitHub Actions.

| App | Build | Test | Lint |

|---|---|---|---|

lissy93/framework-benchmarks is licensed under MIT © Alicia Sykes 2025.

For information, see TLDR Legal > MIT


The MIT License (MIT)

Copyright (c) Alicia Sykes <alicia@omg.com>

Permission is hereby granted, free of charge, to any person obtaining a copy

of this software and associated documentation files (the "Software"), to deal

in the Software without restriction, including without limitation the rights

to use, copy, modify, merge, publish, distribute, sub-license, and/or sell

copies of the Software, and to permit persons to whom the Software is furnished

to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all

copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED,

INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A

PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT

HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION

OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE

SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.


Thanks for visiting :)