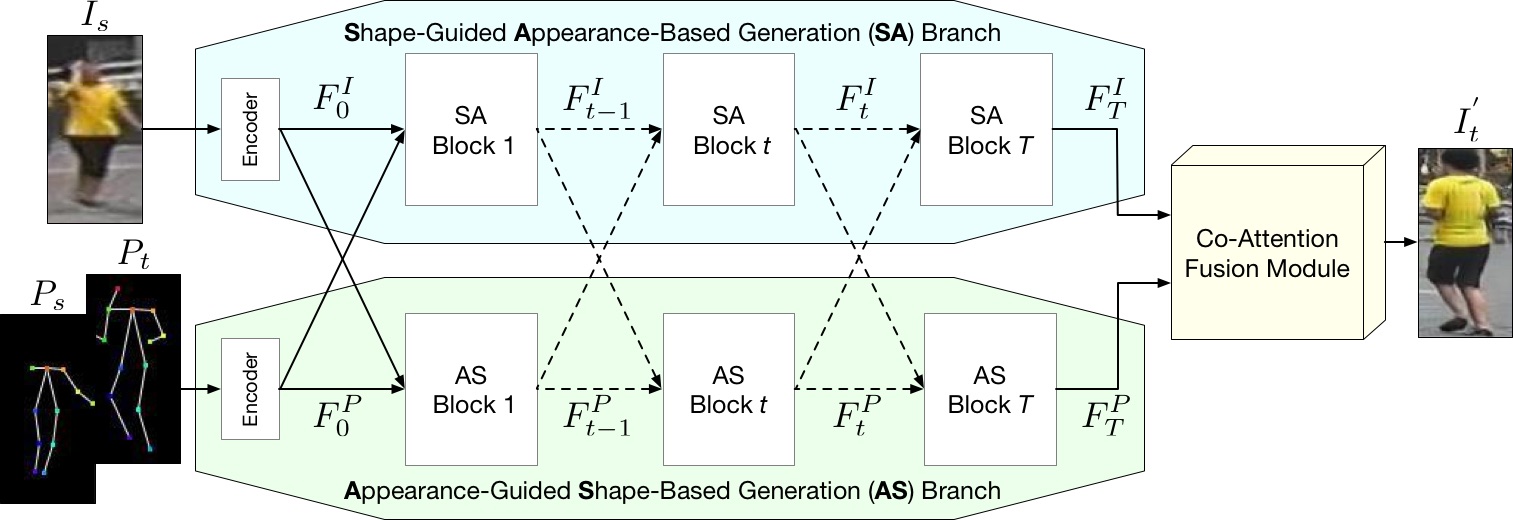

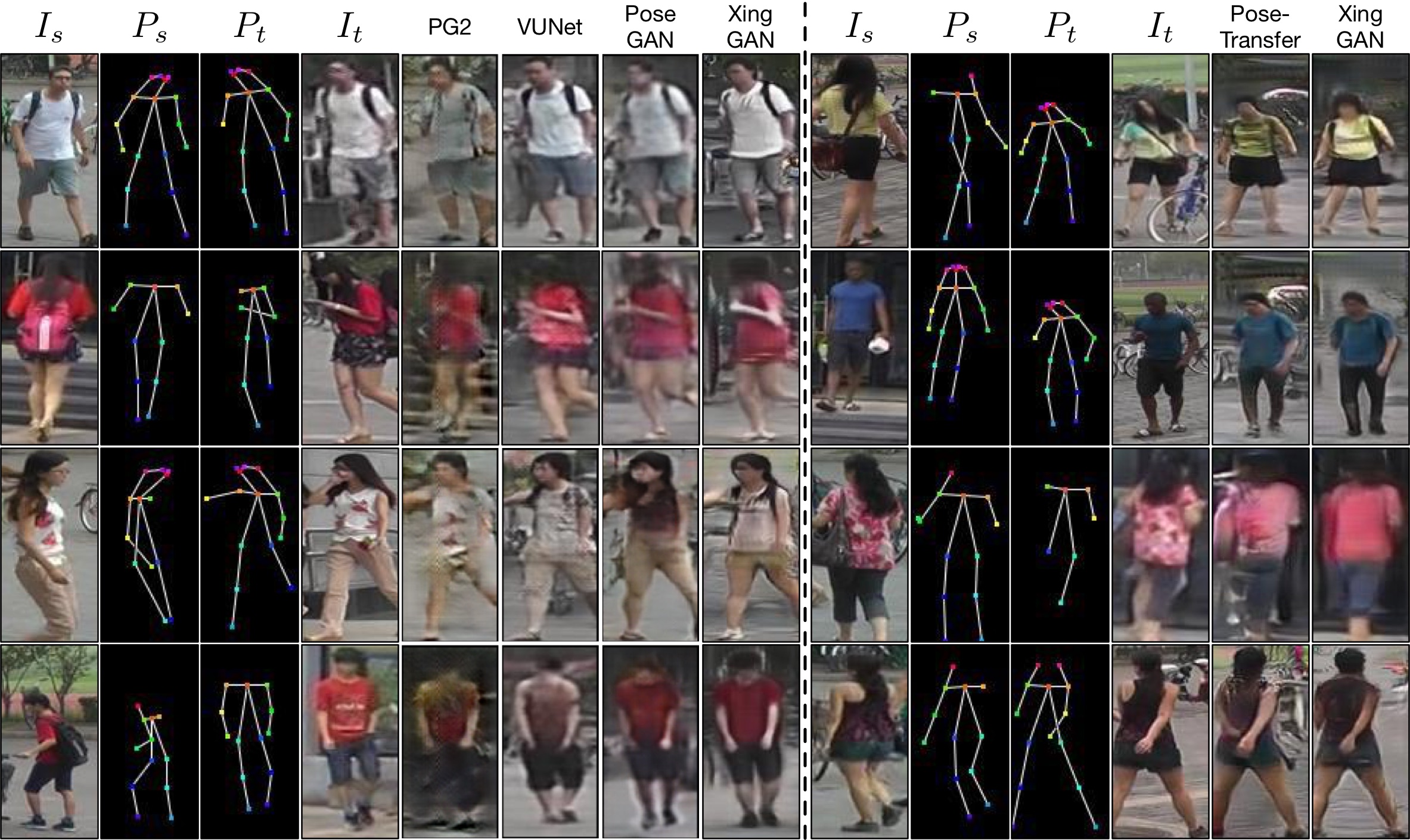

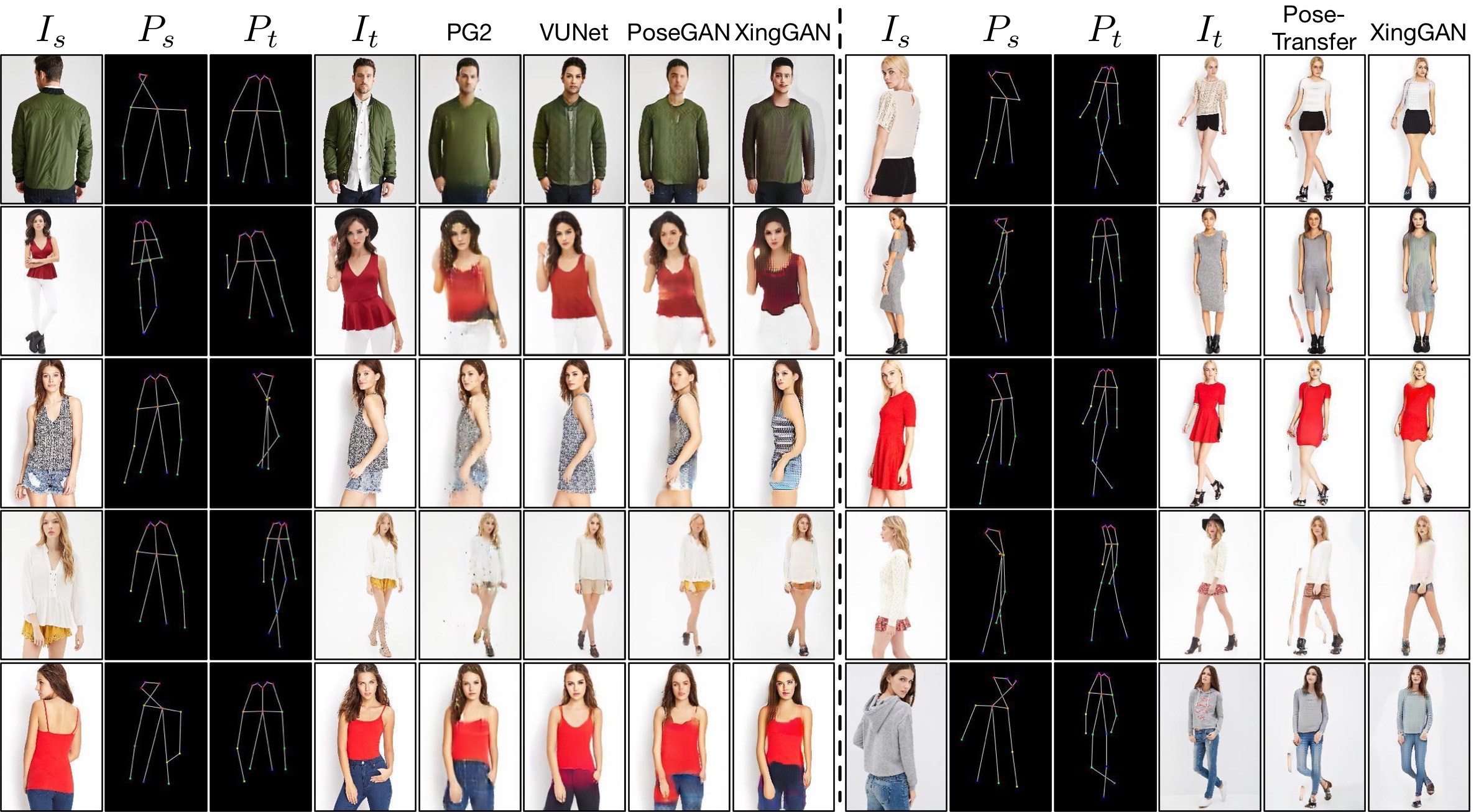

- XingGAN or CrossingGAN

- Installation

- Dataset Preparation

- Generating Images Using Pretrained Model

- Train and Test New Models

- Evaluation

- Acknowledgments

- Related Projects

- Citation

- Contributions


XingGAN for Person Image Generation

Hao Tang<sup>1,2</sup>, Song Bai<sup>2</sup>, Li Zhang<sup>2</sup>, Philip H.S. Torr<sup>2</sup>, Nicu Sebe<sup>1,3</sup>.

<sup>1</sup>University of Trento, Italy; <sup>2</sup>University of Oxford, UK; <sup>3</sup>Huawei Research Ireland, Ireland.

In ECCV 2020.

The repository offers the official implementation of our paper in PyTorch.

In the meantime, check out our related BMVC 2020 oral paper Bipartite Graph Reasoning GANs for Person Image Generation.

Copyright (C) 2020 University of Trento, Italy.

All rights reserved. Licensed under the CC BY-NC-SA 4.0 (Attribution-NonCommercial-ShareAlike 4.0 International).

The code is released for academic research use only. For commercial use, please contact hao.tang@unitn.it.

Clone this repo.

```bash
git clone https://github.com/Ha0Tang/XingGAN
cd XingGAN/
```

This code requires PyTorch 1.0.0 and Python 3.6.9+. Please install the following dependencies:

- pytorch 1.0.0

- torchvision

- numpy

- scipy

- scikit-image

- pillow

- pandas

- tqdm

- dominate
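As a quick sanity check before running anything, the sketch below reports which of the listed packages cannot be imported in the current environment. It assumes the usual import names (`torch` for pytorch, `skimage` for scikit-image, `PIL` for pillow), and the helper `missing_packages` is hypothetical, not part of the repository.

```python
import importlib.util

# Assumed mapping from the package names listed above to their import names.
REQUIRED_MODULES = {
    "pytorch": "torch",
    "torchvision": "torchvision",
    "numpy": "numpy",
    "scipy": "scipy",
    "scikit-image": "skimage",
    "pillow": "PIL",
    "pandas": "pandas",
    "tqdm": "tqdm",
    "dominate": "dominate",
}


def missing_packages(modules=REQUIRED_MODULES):
    """Return the package names whose top-level module cannot be found (hypothetical helper)."""
    return [pkg for pkg, mod in modules.items()
            if importlib.util.find_spec(mod) is None]


if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("Missing dependencies:", ", ".join(missing))
    else:
        print("All listed dependencies are importable.")
```

It only checks that each module can be located; it does not verify versions such as PyTorch 1.0.0.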

To reproduce the results reported in the paper, you need to run the experiments on an NVIDIA DGX-1 with four 32 GB V100 GPUs for DeepFashion and one 32 GB V100 GPU for Market-1501.

Please follow the instructions in SelectionGAN to download both the Market-1501 and DeepFashion datasets.

This repository uses the same dataset format as SelectionGAN and BiGraphGAN, so you can use the same data for all of these methods.

```bash
cd scripts/
sh download_xinggan_model.sh market
cd ..
```

Then,

- Change several parameters in `test_market.sh`.
- Run `sh test_market.sh` for testing.

```bash
cd scripts/
sh download_xinggan_model.sh deepfashion
cd ..
```

Then,

- Change several parameters in `test_deepfashion.sh`.
- Run `sh test_deepfashion.sh` for testing.
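The download-then-test steps above can also be scripted for either dataset. This is a hypothetical sketch (the names `pretrained_test_commands` and `run` and the `dry_run` switch are not part of the repository); it only strings together the commands given above, and you still need to edit the parameters in the corresponding `test_*.sh` script first.

```python
import os
import subprocess

# Dataset keyword passed to download_xinggan_model.sh -> test script in the repository root.
TEST_SCRIPTS = {
    "market": "test_market.sh",
    "deepfashion": "test_deepfashion.sh",
}


def pretrained_test_commands(dataset):
    """Return (working_dir, argv) pairs: download the pretrained model, then test it."""
    if dataset not in TEST_SCRIPTS:
        raise ValueError(
            "unknown dataset %r; expected one of %s" % (dataset, sorted(TEST_SCRIPTS))
        )
    return [
        ("scripts", ["sh", "download_xinggan_model.sh", dataset]),  # run inside scripts/
        (".", ["sh", TEST_SCRIPTS[dataset]]),  # run from the repository root
    ]


def run(dataset, repo_root=".", dry_run=True):
    """Print each command; execute it from repo_root only when dry_run is False."""
    for workdir, argv in pretrained_test_commands(dataset):
        cwd = os.path.join(repo_root, workdir)
        print("[%s] %s" % (cwd, " ".join(argv)))
        if not dry_run:
            subprocess.run(argv, cwd=cwd, check=True)
```

Calling `run("market")` only prints the two commands; passing `dry_run=False` from the cloned `XingGAN/` directory would execute them in order and stop at the first failure.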

- Change several parameters in `train_market.sh`.
- Run `sh train_market.sh` for training.
- Change several parameters in `test_market.sh`.
- Run `sh test_market.sh` for testing.

- Change several parameters in `train_deepfashion.sh`.
- Run `sh train_deepfashion.sh` for training.
- Change several parameters in `test_deepfashion.sh`.
- Run `sh test_deepfashion.sh` for testing.

We adopt SSIM, mask-SSIM, IS, mask-IS, and PCKh to evaluate on Market-1501, and SSIM, IS, and PCKh to evaluate on DeepFashion.
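To make the SSIM and mask-SSIM numbers easier to interpret, here is a minimal pure-Python sketch of the SSIM formula on flattened grayscale images. It is a simplification: it treats the whole image as a single window, whereas standard SSIM averages the same statistic over local (typically Gaussian-weighted) windows, and the evaluation scripts presumably rely on scikit-image's windowed implementation. It also assumes that mask-SSIM means SSIM computed after zeroing the background in both images with a binary person mask. The function names are hypothetical.

```python
def global_ssim(x, y, data_range=255.0):
    """SSIM of two equal-length pixel lists, using the whole image as one window."""
    if len(x) != len(y) or not x:
        raise ValueError("images must be non-empty and the same size")
    n = len(x)
    c1 = (0.01 * data_range) ** 2  # stabilizes the luminance term
    c2 = (0.03 * data_range) ** 2  # stabilizes the contrast/structure term
    mu_x = sum(x) / n
    mu_y = sum(y) / n
    var_x = sum((a - mu_x) ** 2 for a in x) / n
    var_y = sum((b - mu_y) ** 2 for b in y) / n
    cov_xy = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n
    num = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    den = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return num / den


def masked_ssim(x, y, mask, data_range=255.0):
    """Zero out background pixels (mask == 0) in both images, then compute SSIM."""
    if len(mask) != len(x):
        raise ValueError("mask must match the image size")
    x_fg = [a if m else 0 for a, m in zip(x, mask)]
    y_fg = [b if m else 0 for b, m in zip(y, mask)]
    return global_ssim(x_fg, y_fg, data_range)
```

With the mask applied, two images that differ only in the background score 1.0, which is why the masked variant isolates the quality of the generated person.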

- SSIM, mask-SSIM, IS, mask-IS: install `python3.5`, `tensorflow 1.4.1`, and `scikit-image==0.14.2`. Then run `python tool/getMetrics_market.py` or `python tool/getMetrics_fashion.py`.

- PCKh: install `python2` and run `pip install tensorflow==1.4.0`, then set `export KERAS_BACKEND=tensorflow`. After that, run `python tool/crop_market.py` or `python tool/crop_fashion.py`. Next, download the pose estimator, put it under the root folder, and run `python compute_coordinates.py`. Lastly, run `python tool/calPCKH_market.py` or `python tool/calPCKH_fashion.py`.

Please refer to Pose-Transfer for more details.
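PCKh counts a keypoint as correct when the joint detected on a generated image lies within α times the head size of the same joint detected on the corresponding real target image; α = 0.5 is the common choice. The sketch below is illustrative only: it assumes keypoints are (x, y) tuples with `None` for undetected joints and that the head size is supplied by the caller. The head-size definition and missing-joint handling in `tool/calPCKH_*.py` may differ, and the function names are hypothetical.

```python
import math


def count_correct(pred, target, head_size, alpha=0.5):
    """Return (correct, total) joints for one image pair.

    Joints missing in the target are skipped; joints missing only in the
    prediction count as incorrect.
    """
    if len(pred) != len(target):
        raise ValueError("pred and target must list the same joints")
    threshold = alpha * head_size
    correct = total = 0
    for p, t in zip(pred, target):
        if t is None:
            continue  # no reference joint to compare against
        total += 1
        if p is not None and math.hypot(p[0] - t[0], p[1] - t[1]) <= threshold:
            correct += 1
    return correct, total


def pckh(samples, alpha=0.5):
    """Pool counts over (pred, target, head_size) triples and return the PCKh score."""
    correct = total = 0
    for pred, target, head_size in samples:
        c, n = count_correct(pred, target, head_size, alpha)
        correct += c
        total += n
    return correct / total if total else float("nan")
```

Pooling the counts over the whole test set (rather than averaging per-image scores) weights every valid joint equally; whether the official scripts do the same is an assumption here.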

This source code is inspired by both Pose-Transfer and SelectionGAN.

BiGraphGAN | GestureGAN | C2GAN | SelectionGAN | Guided-I2I-Translation-Papers

If you use this code for your research, please cite our paper.

XingGAN

```bibtex
@inproceedings{tang2020xinggan,
  title={XingGAN for Person Image Generation},
  author={Tang, Hao and Bai, Song and Zhang, Li and Torr, Philip HS and Sebe, Nicu},
  booktitle={ECCV},
  year={2020}
}
```

If you use the original BiGraphGAN, GestureGAN, C2GAN, or SelectionGAN models, please cite the following papers:

BiGraphGAN

```bibtex
@inproceedings{tang2020bipartite,
  title={Bipartite Graph Reasoning GANs for Person Image Generation},
  author={Tang, Hao and Bai, Song and Torr, Philip HS and Sebe, Nicu},
  booktitle={BMVC},
  year={2020}
}
```

GestureGAN

```bibtex
@article{tang2019unified,
  title={Unified Generative Adversarial Networks for Controllable Image-to-Image Translation},
  author={Tang, Hao and Liu, Hong and Sebe, Nicu},
  journal={IEEE Transactions on Image Processing (TIP)},
  year={2020}
}

@inproceedings{tang2018gesturegan,
  title={GestureGAN for Hand Gesture-to-Gesture Translation in the Wild},
  author={Tang, Hao and Wang, Wei and Xu, Dan and Yan, Yan and Sebe, Nicu},
  booktitle={ACM MM},
  year={2018}
}
```

C2GAN

```bibtex
@inproceedings{tang2019cycleincycle,
  title={Cycle In Cycle Generative Adversarial Networks for Keypoint-Guided Image Generation},
  author={Tang, Hao and Xu, Dan and Liu, Gaowen and Wang, Wei and Sebe, Nicu and Yan, Yan},
  booktitle={ACM MM},
  year={2019}
}
```

SelectionGAN

```bibtex
@inproceedings{tang2019multi,
  title={Multi-channel attention selection gan with cascaded semantic guidance for cross-view image translation},
  author={Tang, Hao and Xu, Dan and Sebe, Nicu and Wang, Yanzhi and Corso, Jason J and Yan, Yan},
  booktitle={CVPR},
  year={2019}
}

@article{tang2020multi,
  title={Multi-channel attention selection gans for guided image-to-image translation},
  author={Tang, Hao and Xu, Dan and Yan, Yan and Corso, Jason J and Torr, Philip HS and Sebe, Nicu},
  journal={arXiv preprint arXiv:2002.01048},
  year={2020}
}
```

If you have any questions, comments, or bug reports, feel free to open a GitHub issue or pull request, or e-mail the author Hao Tang (hao.tang@unitn.it).