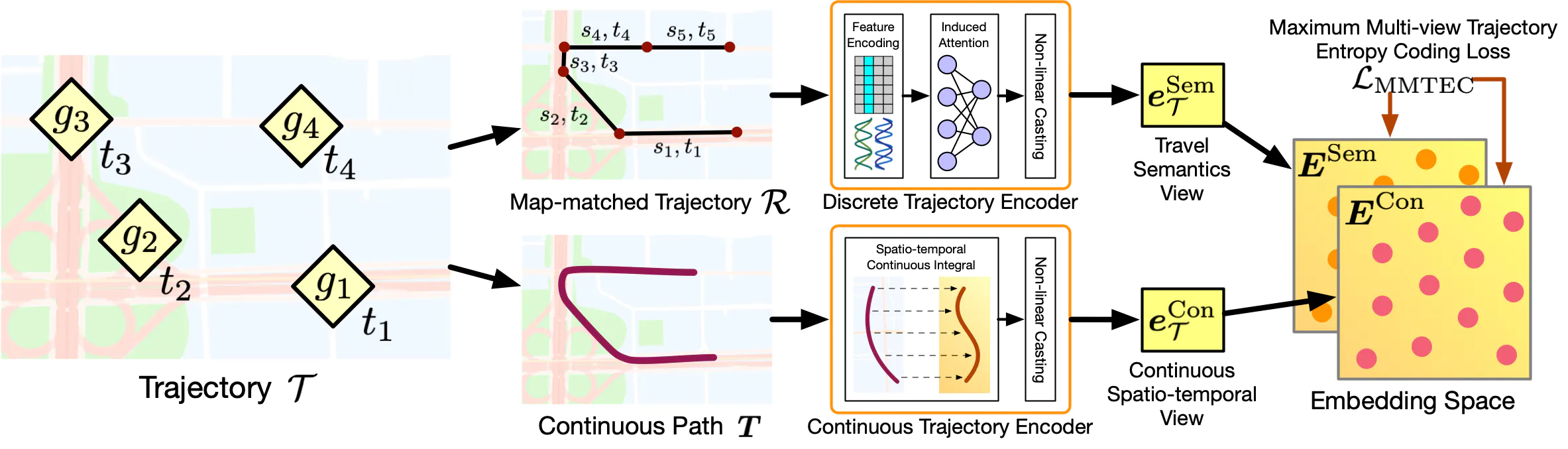

Implementation of the Maximum Multi-view Trajectory Entropy Coding (MMTEC) for pre-training general trajectory embeddings.

Paper: Yan Lin, Huaiyu Wan, Shengnan Guo, Jilin Hu, Christian S. Jensen, and Youfang Lin. “Pre-Training General Trajectory Embeddings With Maximum Multi-View Entropy Coding.” IEEE Transactions on Knowledge and Data Engineering, 2023. https://doi.org/10.1109/TKDE.2023.3347513.

The code provided here is not only an implementation of MMTEC but also serves as a framework for contrastive-style pre-training and evaluating trajectory representations. It is designed to be easily extensible, so new methods can be implemented on top of it.

The pre-trainers and pretext losses are located in the /pretrain directory.

/pretrain/trainer.py includes commonly used pre-trainers:

- Trainer: An abstract class with common functions for fetching mini-batches, feeding mini-batches into loss functions, and saving/loading pre-trained models.
- ContrastiveTrainer: For contrastive-style pre-training.
- MomentumTrainer: A special version of the contrastive-style pre-trainer implementing a momentum training scheme with student-teacher pairs.
- NoneTrainer: Reserved for end-to-end training scenarios.
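A momentum training scheme with a student-teacher pair commonly keeps the teacher as an exponential moving average (EMA) of the student. The sketch below illustrates that update rule on plain Python lists standing in for parameter tensors. The function name `momentum_update`, the dictionary layout, and the default momentum value are assumptions made for illustration; they are not taken from MomentumTrainer, which may differ in detail.

```python
from typing import Dict, List

# Hypothetical parameter container: name -> flat list of values.
Params = Dict[str, List[float]]


def momentum_update(teacher: Params, student: Params, momentum: float = 0.99) -> Params:
    """Return teacher parameters moved toward the student by an EMA step."""
    if teacher.keys() != student.keys():
        raise ValueError("teacher and student must share parameter names")
    updated: Params = {}
    for name, teacher_values in teacher.items():
        student_values = student[name]
        # new_teacher = momentum * teacher + (1 - momentum) * student
        updated[name] = [
            momentum * t + (1.0 - momentum) * s
            for t, s in zip(teacher_values, student_values)
        ]
    return updated


teacher = {"w": [1.0, 0.0]}
student = {"w": [0.0, 1.0]}
teacher = momentum_update(teacher, student, momentum=0.5)
print(teacher)  # {'w': [0.5, 0.5]}
```

A momentum close to 1 makes the teacher change slowly, which is the usual reason for pairing it with a faster-moving student.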

/pretrain/contrastive_losses.py contains contrastive-style loss functions. These loss functions:

- Are subclasses of torch.nn.Module.
- Implement the loss calculation in their forward function.

Included pretext losses:

- Contrastive: Maximum Entropy Coding loss and InfoNCE loss.
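To make the loss-function convention concrete, here is a minimal sketch of an InfoNCE-style loss written as a torch.nn.Module subclass with the computation in forward. The class name `InfoNCESketch`, the two-view input signature, and the default temperature are assumptions for illustration; the loss classes shipped in this repository may use different names and arguments.

```python
import torch
import torch.nn.functional as F
from torch import nn


class InfoNCESketch(nn.Module):
    """Illustrative InfoNCE loss over two views of the same batch."""

    def __init__(self, temperature: float = 0.1):
        super().__init__()
        self.temperature = temperature

    def forward(self, view_a: torch.Tensor, view_b: torch.Tensor) -> torch.Tensor:
        # Both inputs have shape (batch, dim); row i of each view is a positive pair.
        a = F.normalize(view_a, dim=-1)
        b = F.normalize(view_b, dim=-1)
        # Cosine similarity between every pair of rows, scaled by the temperature.
        logits = a @ b.t() / self.temperature
        # The matching row index is the "correct class" for each sample.
        targets = torch.arange(a.size(0), device=a.device)
        return F.cross_entropy(logits, targets)


torch.manual_seed(0)
embeddings = torch.randn(8, 16)
loss_fn = InfoNCESketch()
aligned = loss_fn(embeddings, embeddings)
misaligned = loss_fn(embeddings, embeddings.roll(1, dims=0))
print(aligned.item() < misaligned.item())  # True
```

Correctly paired views yield a lower loss than views whose rows have been shifted out of alignment, which is the behavior a contrastive pre-trainer relies on.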

Encoder models are stored in the /model directory. Samplers for the encoders are in /model/sample.py.

Four downstream tasks are included for evaluating pre-training representation methods:

- Classification (Classification class)
- Destination prediction (Destination class)
- Similar trajectory search (Search class)
- Travel time estimation (TTE class)

These tasks are implemented in downstream/trainer.py. You can add custom tasks by implementing a new downstream trainer based on the abstract Trainer class. To add custom predictors for downstream tasks, add a new model to downstream/predictor.py.
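The general pattern for adding a custom task can be sketched as an abstract base class that handles prediction, with each task supplying its own metric. Everything named below (`DownstreamTrainerSketch`, `MeanAbsoluteErrorTask`, `evaluate`, `metric`) is hypothetical and only mirrors the idea of subclassing an abstract trainer; the actual Trainer class in downstream/trainer.py has its own interface, which should be checked before writing a real subclass.

```python
from abc import ABC, abstractmethod
from typing import Callable, List, Sequence


class DownstreamTrainerSketch(ABC):
    """Hypothetical stand-in for an abstract downstream trainer."""

    def __init__(self, predictor: Callable[[Sequence[float]], float]):
        # The predictor maps one trajectory embedding to one prediction.
        self.predictor = predictor

    @abstractmethod
    def metric(self, predictions: List[float], labels: List[float]) -> float:
        """Task-specific evaluation metric."""

    def evaluate(self, embeddings: List[Sequence[float]], labels: List[float]) -> float:
        predictions = [self.predictor(e) for e in embeddings]
        return self.metric(predictions, labels)


class MeanAbsoluteErrorTask(DownstreamTrainerSketch):
    """Example custom regression task scored with mean absolute error."""

    def metric(self, predictions: List[float], labels: List[float]) -> float:
        return sum(abs(p - y) for p, y in zip(predictions, labels)) / len(labels)


# Toy predictor (sum of embedding values) used purely for demonstration.
task = MeanAbsoluteErrorTask(predictor=lambda emb: sum(emb))
print(task.evaluate([[1.0, 2.0], [0.5, 0.5]], [3.0, 2.0]))  # 0.5
```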

The Data class in data.py helps with dataset pre-processing. It's recommended to pre-calculate and store trajectory sequences and labels for downstream tasks before experiments. You can do this by running data.py directly through Python.

Note: The storage directory for metadata, model parameters, and results is controlled by the Data.base_path parameter. Adjust this according to your specific environment using command-line arguments.
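As a rough illustration of how such command-line arguments might be parsed, the sketch below uses argparse with the short flags that appear in the pre-processing command in this README (-n, -t, -i, --base, --dataset). The long option names, the default paths, and the conversion of set indices to integers are assumptions; data.py may define its arguments differently.

```python
import argparse
from typing import List, Optional


def parse_preprocess_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    """Parse pre-processing flags; comma-separated values become lists."""
    parser = argparse.ArgumentParser(description="Sketch of pre-processing arguments")
    # Long option names below are hypothetical; only the short flags come from the README.
    parser.add_argument("-n", "--name", required=True, help="dataset name")
    parser.add_argument("-t", "--types", required=True, help="comma-separated meta data types")
    parser.add_argument("-i", "--indices", required=True, help="comma-separated set indices")
    parser.add_argument("--base", default="./cache", help="storage directory (assumed default)")
    parser.add_argument("--dataset", default="./sample", help="dataset directory (assumed default)")
    args = parser.parse_args(argv)
    args.types = args.types.split(",")
    # Assumes set indices are integers.
    args.indices = [int(i) for i in args.indices.split(",")]
    return args


args = parse_preprocess_args(
    ["-n", "chengdu", "-t", "trip,class,tte", "-i", "0,1,2", "--base", "./cache"]
)
print(args.name, args.types, args.indices, args.base)
# chengdu ['trip', 'class', 'tte'] [0, 1, 2] ./cache
```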

The repository also includes sample datasets in the sample/ directory for quick testing and demonstration purposes.

Note: While these samples are useful for initial testing, they may not be representative of the full datasets' complexity and scale. For comprehensive experiments and evaluations, it's recommended to use complete datasets with the same file format.

Configuration files (JSON format) in the /config directory control all experiment parameters. To specify a config file during experiments, use the following command:

    python main.py -c <config_file_path> --device <device_name> --base <base_path> --dataset <dataset_path>

- Optional: Create a new virtual environment:

      python -m venv .venv
      source .venv/bin/activate

  To deactivate the virtual environment, simply run deactivate.

- Install the required dependencies:

      pip install -r requirements.txt

- Prepare your dataset. Point Data.base_path in data.py to your meta data storage location using the --base flag, and set the dataset path using the --dataset flag.

- Pre-process your data by running:

      python data.py -n <dataset_name> -t <meta_data_types> -i <set_indices> --base <base_path> --dataset <dataset_path>

  For example, to pre-process the included chengdu dataset, run:

      python data.py -n chengdu -t trip,class,tte -i 0,1,2 --base ./cache --dataset ./sample

- Create or modify a configuration file in the /config directory to set up your experiment.

- Run the main script with your chosen configuration:

      python main.py -c <your_config_name> --device <device_name> --base <base_path> --dataset <dataset_path>

  For example, to run the included configuration file config/small_test.json, run:

      python main.py -c small_test --device cuda:0 --base ./cache --dataset ./sample

To implement a new pre-training method or downstream task:

- Add new loss functions in /pretrain/contrastive_losses.py.
- Implement new models in the /model directory.
- For new downstream tasks, add a new trainer class in downstream/trainer.py and corresponding predictors in downstream/predictor.py.

If you have any further questions, feel free to contact me directly. My contact information is available on my homepage: https://www.yanlincs.com/