This repository contains an op-for-op PyTorch reimplementation of ESRGAN: Enhanced Super-Resolution Generative Adversarial Networks.

Supported datasets include DIV2K, DIV8K, Flickr2K, OST, T91, Set5, Set14, BSDS100 and BSDS200, among others.

For instructions on preparing a dataset, see `README.md` in the `data` directory.

Both training and testing only require editing the corresponding YAML config file.

To test ESRGAN_x4:

`python3 test.py --config_path ./configs/test/ESRGAN_x4-DFO2K-Set5.yaml`

To train RRDBNet_x4:

`python3 train_net.py --config_path ./configs/train/RRDBNet_x4-DFO2K.yaml`

To resume training RRDBNet_x4, modify the `./configs/train/RRDBNet_X4.yaml` file:

- line 34: change `RESUMED_G_MODEL` to `./samples/RRDBNet_X4-DIV2K/g_epoch_xxx.pth.tar`.
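Editing a key by line number can break if the config file changes, so one option is to update the key by name instead. The following is a minimal sketch and not part of this repository: `set_yaml_value` is a hypothetical helper that rewrites the first `KEY: value` line in YAML text with a regular expression, assuming the key sits on its own line with a scalar value. The nesting shown in `sample_config` is also an assumption made for illustration.

```python
import re


def set_yaml_value(yaml_text: str, key: str, new_value: str) -> str:
    """Replace the scalar value of the first `key:` line, keeping indentation.

    Hypothetical helper for illustration. It does not parse YAML, so it only
    handles simple `KEY: value` lines.
    """
    pattern = re.compile(rf"^([ \t]*{re.escape(key)}[ \t]*:).*$", flags=re.MULTILINE)
    # A function replacement avoids backslash-escape surprises in the new value.
    updated, count = pattern.subn(
        lambda m: f'{m.group(1)} "{new_value}"', yaml_text, count=1
    )
    if count == 0:
        raise KeyError(f"{key} not found in config text")
    return updated


# Assumed config layout, for illustration only.
sample_config = """\
TRAIN:
  CHECKPOINT:
    PRETRAINED_G_MODEL: ""
    RESUMED_G_MODEL: ""
"""

resumed = set_yaml_value(
    sample_config,
    "RESUMED_G_MODEL",
    "./samples/RRDBNet_X4-DIV2K/g_epoch_xxx.pth.tar",
)
print(resumed)
```

The `g_epoch_xxx` placeholder is kept as written above; substitute the epoch of the checkpoint you actually want to resume from.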

`python3 train_net.py --config_path ./configs/train/RRDBNet_x4-DFO2K.yaml`

To train ESRGAN_x4, modify the `./configs/train/ESRGAN_X4.yaml` file:

- line 39: change `PRETRAINED_G_MODEL` to `./results/EDSRGAN_x4-DIV2K/g_last.pth.tar`.

`python3 train_gan.py --config_path ./configs/train/ESRGAN_x4-DFO2K.yaml`

To resume training ESRGAN_x4, modify the `./configs/train/ESRGAN_X4.yaml` file:

- line 39: change `PRETRAINED_G_MODEL` to `./results/RRDBNet_x4-DIV2K/g_last.pth.tar`.
- line 41: change `RESUMED_G_MODEL` to `./samples/EDSRGAN_x4-DIV2K/g_epoch_xxx.pth.tar`.
- line 42: change `RESUMED_D_MODEL` to `./samples/EDSRGAN_x4-DIV2K/d_epoch_xxx.pth.tar`.

`python3 train_gan.py --config_path ./configs/train/ESRGAN_x4-DFO2K.yaml`

Source of original paper results: https://arxiv.org/pdf/1809.00219v2.pdf

In the following table, values in parentheses are the results of this project, and `-` indicates no test result.

| Method | Scale | Set5 (PSNR/SSIM) | Set14 (PSNR/SSIM) | BSD100 (PSNR/SSIM) | Urban100 (PSNR/SSIM) | Manga109 (PSNR/SSIM) |
|---|---|---|---|---|---|---|
| RRDB | 4 | 32.73(32.71)/0.9011(0.9018) | 28.99(28.96)/0.7917(0.7917) | 27.85(27.85)/0.7455(0.7473) | 27.03(27.03)/0.8153(0.8156) | 31.66(31.60)/0.9196(0.9195) |
| ESRGAN | 4 | -(30.44)/-(0.8525) | -(26.28)/-(0.6994) | -(25.33)/-(0.6534) | -(24.36)/-(0.7341) | -(29.42)/-(0.8597) |
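For readers unfamiliar with the PSNR columns, here is a minimal sketch of the standard definition, PSNR = 10 · log10(MAX² / MSE), applied to flat lists of pixel values. It is not this project's evaluation code: the function name `psnr`, the 8-bit peak value of 255 and the toy pixel values are assumptions. Published super-resolution numbers usually also depend on choices such as the color channel and border cropping, which this sketch ignores.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[float], estimate: Sequence[float], max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between two equally sized pixel lists."""
    if len(reference) != len(estimate) or len(reference) == 0:
        raise ValueError("inputs must be non-empty and the same length")
    # Mean squared error over all pixels.
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


# Toy values for illustration, not taken from any dataset.
hr_pixels = [52, 55, 61, 66]
sr_pixels = [54, 55, 60, 65]
print(f"{psnr(hr_pixels, sr_pixels):.2f} dB")
```

Because PSNR is logarithmic in the MSE, small differences in the table (for example 32.73 versus 32.71) correspond to very small differences in mean squared error.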

Download the `ESRGAN_x4-DFO2K-25393df7.pth.tar` weights to `./results/pretrained_models`.

For more detail, see the "Download weights" section of `README.md`.

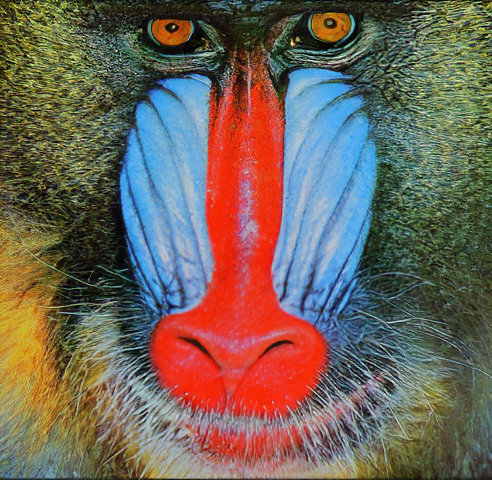

`python3 ./inference.py`

Input:

Output:

Build `rrdbnet_x4` model successfully.

Load `rrdbnet_x4` model weights `/ESRGAN-PyTorch/results/pretrained_models/ESRGAN_x4-DFO2K.pth.tar` successfully.

SR image save to `./figure/ESRGAN_x4_baboon.png`

If you find a bug, create a GitHub issue, or even better, submit a pull request. Similarly, if you have questions, simply post them as GitHub issues.

I look forward to seeing what the community does with these models!

Xintao Wang, Ke Yu, Shixiang Wu, Jinjin Gu, Yihao Liu, Chao Dong, Chen Change Loy, Yu Qiao, Xiaoou Tang

Abstract

The Super-Resolution Generative Adversarial Network (SRGAN) is a seminal work that is capable of generating realistic textures during single image super-resolution. However, the hallucinated details are often accompanied with unpleasant artifacts. To further enhance the visual quality, we thoroughly study three key components of SRGAN - network architecture, adversarial loss and perceptual loss, and improve each of them to derive an Enhanced SRGAN (ESRGAN). In particular, we introduce the Residual-in-Residual Dense Block (RRDB) without batch normalization as the basic network building unit. Moreover, we borrow the idea from relativistic GAN to let the discriminator predict relative realness instead of the absolute value. Finally, we improve the perceptual loss by using the features before activation, which could provide stronger supervision for brightness consistency and texture recovery. Benefiting from these improvements, the proposed ESRGAN achieves consistently better visual quality with more realistic and natural textures than SRGAN and won the first place in the PIRM2018-SR Challenge. The code is available at this https URL.
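The relativistic discriminator idea mentioned in the abstract can be illustrated with a small sketch. In the relativistic average formulation, the discriminator output for a real image is sigmoid(C(x_r) − mean(C(x_f))), where C is the raw (pre-sigmoid) discriminator score, and symmetrically for fake images. The code below computes the resulting discriminator and generator losses on plain lists of scores. The function names and the toy scores are assumptions for illustration, and this is not the training code of this repository.

```python
import math
from statistics import fmean
from typing import Sequence, Tuple


def _log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def relativistic_average_losses(
    real_scores: Sequence[float], fake_scores: Sequence[float]
) -> Tuple[float, float]:
    """Return (discriminator_loss, generator_loss) from raw discriminator scores."""
    real_mean = fmean(real_scores)
    fake_mean = fmean(fake_scores)
    # Relative scores: how much more realistic a sample is than the average
    # sample of the opposite kind.
    real_rel = [s - fake_mean for s in real_scores]
    fake_rel = [s - real_mean for s in fake_scores]
    # Uses the identity log(1 - sigmoid(x)) == log(sigmoid(-x)).
    d_loss = -fmean([_log_sigmoid(x) for x in real_rel]) - fmean(
        [_log_sigmoid(-x) for x in fake_rel]
    )
    g_loss = -fmean([_log_sigmoid(-x) for x in real_rel]) - fmean(
        [_log_sigmoid(x) for x in fake_rel]
    )
    return d_loss, g_loss


# Toy scores: the discriminator rates real images higher than fake ones.
d_loss, g_loss = relativistic_average_losses([2.0, 3.0], [-1.0, 0.0])
print(f"d_loss={d_loss:.4f}, g_loss={g_loss:.4f}")
```

Note that the generator loss is the discriminator loss with the roles of real and fake swapped, so the generator receives gradient signal from both real and generated images.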

[Paper] [Authors' implementation (PyTorch)]

    @misc{wang2018esrgan,
        title={ESRGAN: Enhanced Super-Resolution Generative Adversarial Networks},
        author={Xintao Wang and Ke Yu and Shixiang Wu and Jinjin Gu and Yihao Liu and Chao Dong and Chen Change Loy and Yu Qiao and Xiaoou Tang},
        year={2018},
        eprint={1809.00219},
        archivePrefix={arXiv},
        primaryClass={cs.CV}
    }