[ECCV 2024] GLARE: Low Light Image Enhancement via Generative Latent Feature based Codebook Retrieval [Paper]

Han Zhou1,*, Wei Dong1,*, Xiaohong Liu2,†, Shuaicheng Liu3, Xiongkuo Min2, Guangtao Zhai2, Jun Chen1,†

This repository is the official implementation of our ECCV 2024 paper, GLARE: Low Light Image Enhancement via Generative Latent Feature based Codebook Retrieval. If you find this repo useful, please give it a star ⭐ and consider citing our paper in your research. Thank you.

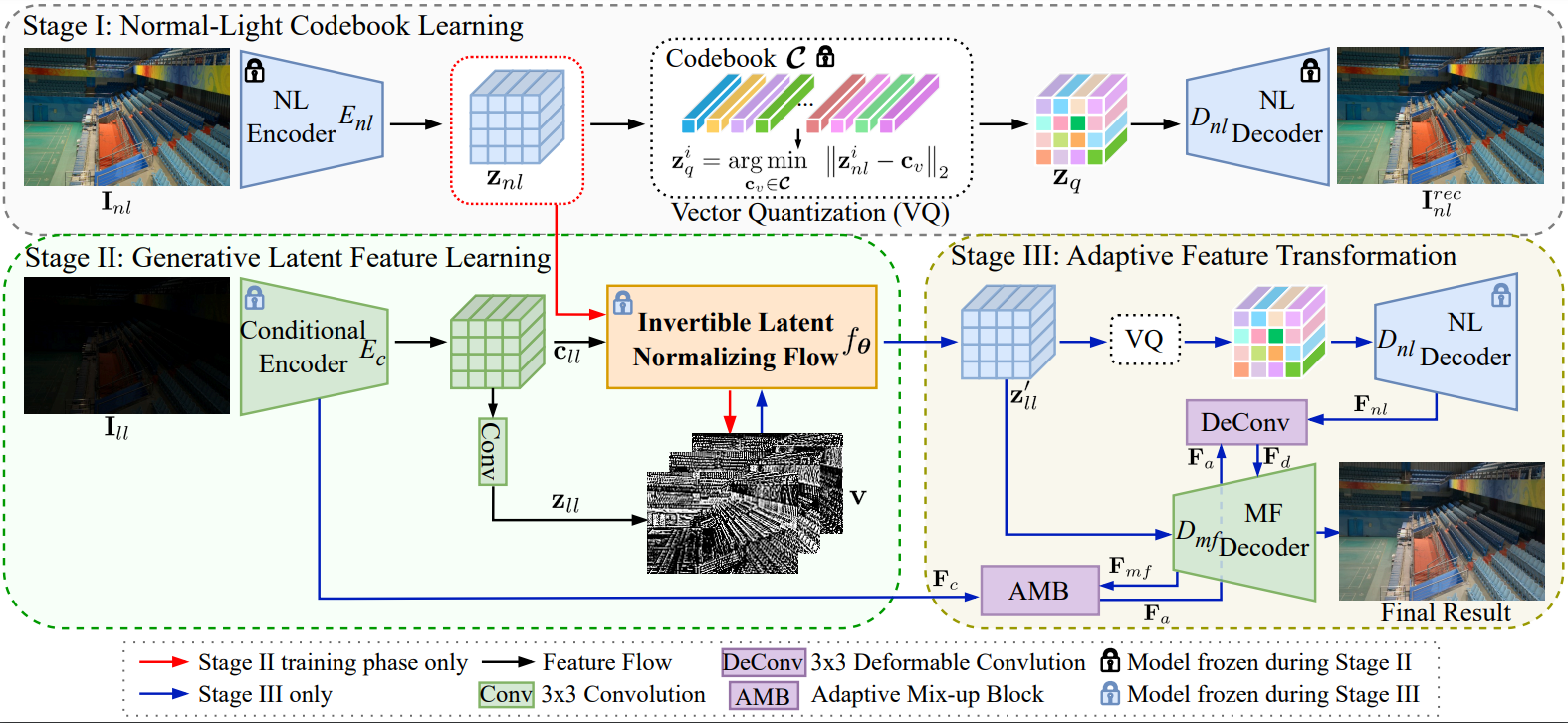

We present GLARE, a novel network for low-light image enhancement.

- Codebook-based LLIE: exploit normal-light (NL) images to extract NL codebook prior as the guidance.

- Generative Feature Learning: develop an invertible latent normalizing flow strategy for feature alignment.

- Adaptive Feature Transformation: adaptively introduce input information into the decoding process and allow flexible adjustments for users.

- Future: the network structure can be further optimized to improve efficiency and performance.

2024-09-25: Another paper of ours, ECMamba: Consolidating Selective State Space Model with Retinex Guidance for Efficient Multiple Exposure Correction, has been accepted by NeurIPS 2024. Code and pre-print will be released at:

2024-09-21: Inference code for unpaired images and pre-trained models for LOL-v2-real are released! 🚀

2024-07-21: Inference code and pre-trained models for LOL are released! Feel free to use them. ⭐

2024-07-21: License is updated to Apache License, Version 2.0. 💫

2024-07-19: Paper is available at: . 🎉

2024-07-01: Our paper has been accepted by ECCV 2024. Code and Models will be released. 🚀

- 🔜 Training code.

The inference code was tested on:

- Ubuntu 22.04 LTS, Python 3.8, CUDA 11.3, GeForce RTX 2080Ti or a GPU with more memory.

Clone the repository (requires git):

git clone https://github.com/LowLevelAI/GLARE.git

cd GLARE

Create the Conda environment:

conda create -n glare python=3.8

conda activate glare


Then install dependencies:

conda install pytorch=1.11 torchvision cudatoolkit=11.3 -c pytorch

pip install addict future lmdb numpy opencv-python Pillow pyyaml requests scikit-image scipy tqdm yapf einops tb-nightly natsort

pip install pyiqa==0.1.4

pip install pytorch_lightning==1.6.0

pip install --force-reinstall charset-normalizer==3.1.0
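Once the dependencies are installed, it can help to confirm that the environment resembles the tested setup (Python 3.8, CUDA 11.3). The following is a minimal sketch, not part of the repository; the function name `describe_environment` is hypothetical, and it only reports what it finds rather than enforcing any version.

```python
import platform


def describe_environment():
    """Collect version info relevant to the tested setup (Python 3.8, CUDA 11.3)."""
    info = {"python": platform.python_version()}
    try:
        import torch
    except ImportError:
        # PyTorch is not installed in this environment.
        info["torch"] = None
        return info
    info["torch"] = torch.__version__
    # CUDA version PyTorch was built against (None for CPU-only builds).
    info["cuda_build"] = torch.version.cuda
    info["cuda_available"] = torch.cuda.is_available()
    if info["cuda_available"]:
        info["gpu"] = torch.cuda.get_device_name(0)
    return info
```

Calling `describe_environment()` inside the activated `glare` environment returns a dictionary that can be printed and compared against the versions listed above.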


Build CUDA extensions:

cd GLARE/defor_cuda_ext

BASICSR_EXT=True python setup.py develop

Move the compiled CUDA extension (/GLARE/defor_cuda_ext/basicsr/ops/dcn/deform_conv_ext.xxxxxx.so) to the path: /GLARE/code/models/modules/ops/dcn/.
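Because the compiled file name contains a platform-specific part (shown as `xxxxxx` above), relocating it by pattern can be less error-prone than typing the name. The sketch below is one possible way to do this and is not part of the repository; `move_dcn_extension` is a hypothetical helper, and it assumes `repo_root` points at the cloned GLARE directory containing the `defor_cuda_ext` and `code` folders named above.

```python
import shutil
from pathlib import Path


def move_dcn_extension(repo_root):
    """Move compiled deform_conv_ext*.so files into code/models/modules/ops/dcn/."""
    repo_root = Path(repo_root)
    src_dir = repo_root / "defor_cuda_ext" / "basicsr" / "ops" / "dcn"
    dst_dir = repo_root / "code" / "models" / "modules" / "ops" / "dcn"

    # The middle part of the file name depends on the Python/platform build.
    built = sorted(src_dir.glob("deform_conv_ext*.so"))
    if not built:
        raise FileNotFoundError(f"No compiled deform_conv_ext*.so found in {src_dir}")

    dst_dir.mkdir(parents=True, exist_ok=True)
    moved = []
    for so_file in built:
        target = dst_dir / so_file.name
        shutil.move(str(so_file), str(target))
        moved.append(target)
    return moved
```

For example, `move_dcn_extension("/path/to/GLARE")` returns the list of destination paths, or raises `FileNotFoundError` if the build step has not produced a shared object yet.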

LOL Google Drive

LOL-v2 Google Drive

Download the pre-trained weights for LOL and the pre-trained weights for LOL-v2-real, and place them in the folders pretrained_weights_lol and pretrained_weights_lol-v2-real, respectively.
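Before running inference, a quick check that both weight folders exist and are not empty can save a failed run. This is a minimal sketch under the assumption that the two folders sit directly under the repository root; the helper `find_missing_weights` is hypothetical, and it does not check individual file names, since those are not listed here.

```python
from pathlib import Path

# Folder names as given in this README.
WEIGHT_FOLDERS = ("pretrained_weights_lol", "pretrained_weights_lol-v2-real")


def find_missing_weights(repo_root="."):
    """Return the weight folders that are absent or empty under repo_root."""
    missing = []
    for name in WEIGHT_FOLDERS:
        folder = Path(repo_root) / name
        if not folder.is_dir() or not any(folder.iterdir()):
            missing.append(name)
    return missing
```

An empty list from `find_missing_weights()` means both folders contain at least one file.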

For LOL dataset

python code/infer_dataset_lol.py

For LOL-v2-real dataset

python code/infer_dataset_lolv2-real.py

For unpaired testing, please make sure the dataroot_unpaired in the .yml file is correct.

python code/infer_unpaired.py

You can find all results in results/. Enjoy!
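For unpaired testing, the `dataroot_unpaired` entry can be checked before launching the script. The sketch below is illustrative only: the helpers `find_key` and `check_unpaired_root` are hypothetical, the location of the key inside the .yml file is not assumed (it is searched for recursively), and the path of the .yml file itself must be supplied by the user. Loading relies on PyYAML, which is among the dependencies installed earlier.

```python
from pathlib import Path


def find_key(node, key):
    """Depth-first search for `key` in nested dicts/lists; first non-None value or None."""
    if isinstance(node, dict):
        if node.get(key) is not None:
            return node[key]
        children = list(node.values())
    elif isinstance(node, list):
        children = node
    else:
        return None
    for child in children:
        found = find_key(child, key)
        if found is not None:
            return found
    return None


def check_unpaired_root(config_path):
    """Load a .yml config and verify that dataroot_unpaired is an existing directory."""
    import yaml  # PyYAML (installed as `pyyaml`)

    with open(config_path, "r") as handle:
        config = yaml.safe_load(handle)
    root = find_key(config, "dataroot_unpaired")
    if root is None:
        raise KeyError(f"dataroot_unpaired not found in {config_path}")
    if not Path(root).is_dir():
        raise FileNotFoundError(f"dataroot_unpaired does not exist: {root}")
    return Path(root)
```

`check_unpaired_root` returns the resolved directory when the entry is valid and raises a descriptive error otherwise, so a wrong path is caught before inference starts.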

Coming soon.

Please refer to this instruction.

Please cite our paper:

@InProceedings{Han_ECCV24_GLARE,

author = {Zhou, Han and Dong, Wei and Liu, Xiaohong and Liu, Shuaicheng and Min, Xiongkuo and Zhai, Guangtao and Chen, Jun},

title = {GLARE: Low Light Image Enhancement via Generative Latent Feature based Codebook Retrieval},

booktitle = {Proceedings of the European Conference on Computer Vision (ECCV)},

year = {2024}

}

@article{GLARE,

title = {GLARE: Low Light Image Enhancement via Generative Latent Feature based Codebook Retrieval},

author = {Zhou, Han and Dong, Wei and Liu, Xiaohong and Liu, Shuaicheng and Min, Xiongkuo and Zhai, Guangtao and Chen, Jun},

journal = {arXiv preprint arXiv:2407.12431},

year = {2024}

}

This work is licensed under the Apache License, Version 2.0 (as defined in the LICENSE).

By downloading and using the code and model you agree to the terms in the LICENSE.