OneTable is an omni-directional converter for table formats that facilitates interoperability across data processing systems and query engines. Currently, OneTable supports widely adopted open-source table formats such as Apache Hudi, Apache Iceberg, and Delta Lake.

OneTable simplifies data lake operations by leveraging a common model for table representation. This allows users to write data in one format while still benefiting from integrations and features available in other formats. For instance, OneTable enables existing Hudi users to seamlessly work with Databricks's Photon Engine or query Iceberg tables with Snowflake. Creating transformations from one format to another is straightforward and only requires the implementation of a few interfaces, which we believe will facilitate the expansion of supported source and target formats in the future.

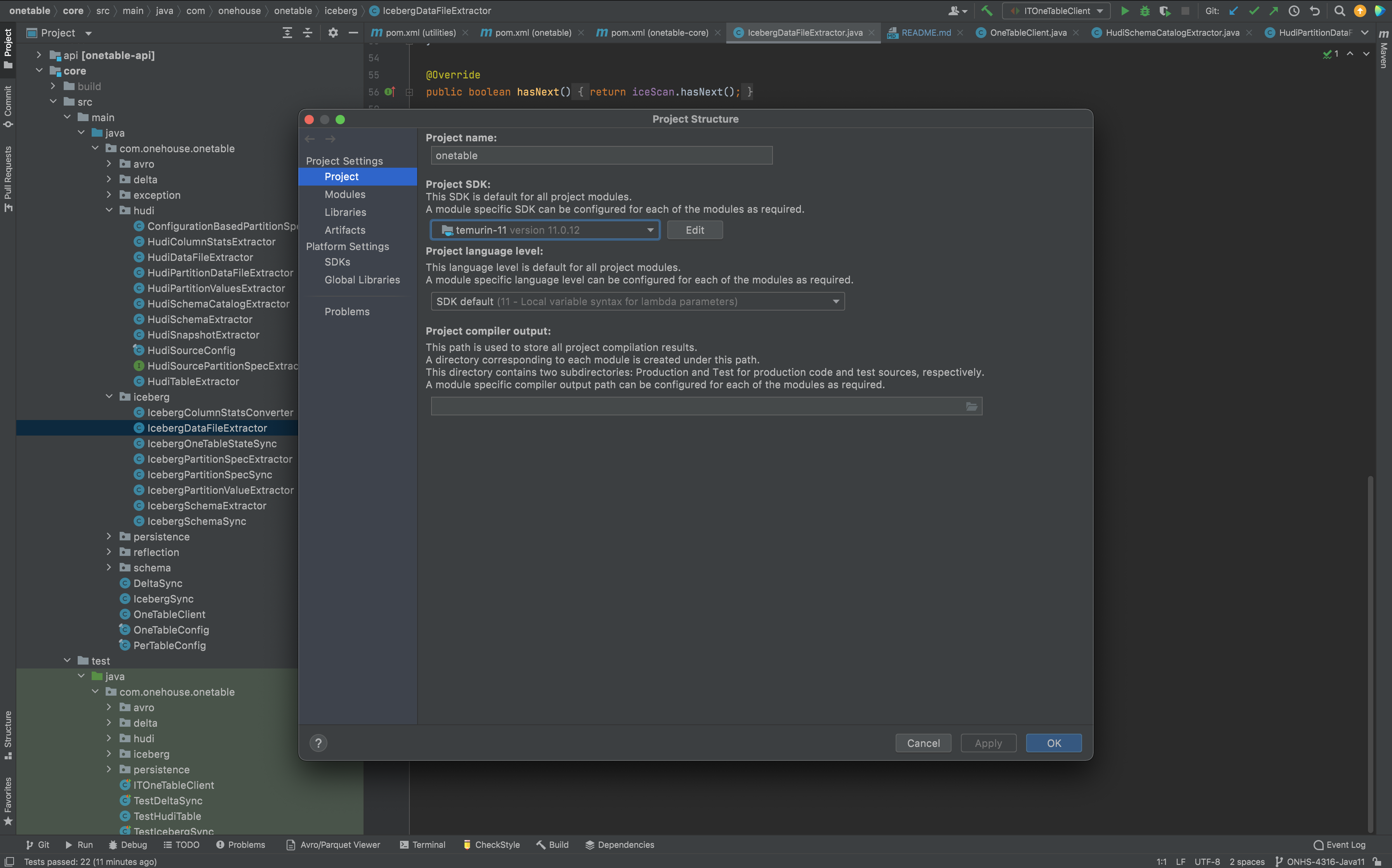

- Use Java 11 to build the project. If you have a different Java version installed, you can use jenv to manage multiple Java versions locally.

- Build the project using `mvn clean package`. Use `mvn clean package -DskipTests` to skip tests while building.

- Use `mvn clean test` or `mvn test` to run all unit tests. If you need to run only a specific test, you can do so with something like `mvn test -Dtest=TestDeltaSync -pl core`.

- Similarly, use `mvn clean verify` or `mvn verify` to run integration tests.

- We use the Maven Spotless plugin and Google Java Format for code style.

- Use `mvn spotless:check` to find code style violations and `mvn spotless:apply` to fix them. The code style check is tied to the compile phase by default, so code style violations will lead to build failures.

- Get a pre-built bundled jar or create the jar with `mvn install -DskipTests`

- Create a yaml file that follows the format below:

      sourceFormat: HUDI
      targetFormats:
        - DELTA
        - ICEBERG
      datasets:
        -
          tableBasePath: s3://tpc-ds-datasets/1GB/hudi/call_center
          tableDataPath: s3://tpc-ds-datasets/1GB/hudi/call_center/data
          tableName: call_center
          namespace: my.db
        -
          tableBasePath: s3://tpc-ds-datasets/1GB/hudi/catalog_sales
          tableName: catalog_sales
          partitionSpec: cs_sold_date_sk:VALUE
        -
          tableBasePath: s3://hudi/multi-partition-dataset
          tableName: multi_partition_dataset
          partitionSpec: time_millis:DAY:yyyy-MM-dd,type:VALUE
        -
          tableBasePath: abfs://container@storage.dfs.core.windows.net/multi-partition-dataset
          tableName: multi_partition_dataset

- `targetFormats` is a list of formats you want to create from your source tables
- `tableBasePath` is the basePath of the table
- `tableDataPath` is an optional field specifying the path to the data files. If not specified, the `tableBasePath` will be used. For Iceberg source tables, you will need to specify the `/data` path.
- `namespace` is an optional field specifying the namespace of the table and will be used when syncing to a catalog.
- `partitionSpec` is a spec that allows us to infer partition values. This is only required for Hudi source tables. If the table is not partitioned, leave it blank. If it is partitioned, you can specify a spec as a comma separated list of entries in the format `path:type:format`
  - `path` is a dot separated path to the partition field
  - `type` describes how the partition value was generated from the column value
    - `VALUE`: an identity transform of field value to partition value
    - `YEAR`: data is partitioned by a field representing a date and year granularity is used
    - `MONTH`: same as `YEAR` but with month granularity
    - `DAY`: same as `YEAR` but with day granularity
    - `HOUR`: same as `YEAR` but with hour granularity
  - `format`: if your partition type is `YEAR`, `MONTH`, `DAY`, or `HOUR`, specify the format for the date string as it appears in your file paths
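The field rules above can be sketched as a small parser. This is only an illustration of the `path:type:format` layout and the `tableDataPath` fallback described in this README, not OneTable's actual parsing code. The names `PartitionField`, `parse_partition_spec`, and `resolve_data_path` are hypothetical, and treating `format` as mandatory for date-based types is an assumption drawn from the description above.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

# Partition types listed in this README.
DATE_TYPES = {"YEAR", "MONTH", "DAY", "HOUR"}
VALID_TYPES = DATE_TYPES | {"VALUE"}


@dataclass
class PartitionField:
    """One entry of a partitionSpec (hypothetical helper type)."""
    path: str                   # dot separated path to the partition field
    kind: str                   # VALUE, YEAR, MONTH, DAY, or HOUR
    date_format: Optional[str]  # date string format, only for date-based kinds


def parse_partition_spec(spec: Optional[str]) -> List[PartitionField]:
    """Parse a comma separated list of path:type[:format] entries."""
    if not spec or not spec.strip():
        return []  # a blank spec means the table is not partitioned
    fields = []
    for entry in spec.split(","):
        # Assumption: split at most twice so a format containing ':' stays whole.
        parts = entry.strip().split(":", 2)
        if len(parts) < 2:
            raise ValueError(f"expected path:type[:format], got {entry!r}")
        path, kind = parts[0], parts[1]
        date_format = parts[2] if len(parts) == 3 else None
        if kind not in VALID_TYPES:
            raise ValueError(f"unknown partition type {kind!r} in {entry!r}")
        if kind in DATE_TYPES and not date_format:
            # Assumption: date-based types always need a format.
            raise ValueError(f"{kind} partitions need a date format: {entry!r}")
        fields.append(PartitionField(path, kind, date_format))
    return fields


def resolve_data_path(dataset: Dict[str, str]) -> str:
    """Return tableDataPath if set, otherwise fall back to tableBasePath."""
    return dataset.get("tableDataPath") or dataset["tableBasePath"]
```

For the third dataset in the sample config, `parse_partition_spec("time_millis:DAY:yyyy-MM-dd,type:VALUE")` yields two fields: a `DAY` partition on `time_millis` with format `yyyy-MM-dd`, and an identity (`VALUE`) partition on `type`.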

- The default implementations of table format clients can be replaced with custom implementations by specifying a client configs yaml file in the format below:

      # sourceClientProviderClass: The class name of a table format's client factory, where the client is
      #     used for reading from a table of this format. All user configurations, including hadoop config
      #     and client specific configuration, will be available to the factory for instantiation of the
      #     client.
      # targetClientProviderClass: The class name of a table format's client factory, where the client is
      #     used for writing to a table of this format.
      # configuration: A map of configuration values specific to this client.
      tableFormatsClients:
        HUDI:
          sourceClientProviderClass: io.onetable.hudi.HudiSourceClientProvider
        DELTA:
          targetClientProviderClass: io.onetable.delta.DeltaClient
          configuration:
            spark.master: local[2]
            spark.app.name: onetableclient

- A catalog can be used when reading and updating Iceberg tables. The catalog can be specified in a yaml file and passed in with the `--icebergCatalogConfig` option. The format of the catalog config file is:

      catalogImpl: io.my.CatalogImpl
      catalogName: name
      catalogOptions: # all other options are passed through in a map
        key1: value1
        key2: value2

- Run with `java -jar utilities/target/utilities-0.1.0-SNAPSHOT-bundled.jar --datasetConfig my_config.yaml [--hadoopConfig hdfs-site.xml] [--clientsConfig clients.yaml] [--icebergCatalogConfig catalog.yaml]`

  The bundled jar includes Hadoop dependencies for AWS, Azure, and GCP. Authentication for AWS is done with `com.amazonaws.auth.DefaultAWSCredentialsProviderChain`. To override this setting, specify a different implementation with the `--awsCredentialsProvider` option.
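If you launch the sync from a script, the command line above can be assembled programmatically. The following is a minimal sketch that uses only the options named in this README. The function name `build_sync_command` is hypothetical, and it assumes that `--awsCredentialsProvider` takes the provider class name as its value.

```python
from typing import List, Optional


def build_sync_command(
    jar_path: str,
    dataset_config: str,
    hadoop_config: Optional[str] = None,
    clients_config: Optional[str] = None,
    iceberg_catalog_config: Optional[str] = None,
    aws_credentials_provider: Optional[str] = None,
) -> List[str]:
    """Build the argument list for running the bundled jar."""
    # --datasetConfig is the only option shown as mandatory in the README.
    cmd = ["java", "-jar", jar_path, "--datasetConfig", dataset_config]
    optional = [
        ("--hadoopConfig", hadoop_config),
        ("--clientsConfig", clients_config),
        ("--icebergCatalogConfig", iceberg_catalog_config),
        # Assumption: the value is a credentials provider class name.
        ("--awsCredentialsProvider", aws_credentials_provider),
    ]
    for flag, value in optional:
        if value is not None:
            cmd += [flag, value]  # only pass options that were supplied
    return cmd
```

The resulting list can be handed to `subprocess.run(cmd, check=True)`. Passing a list rather than a single shell string avoids quoting problems with paths that contain spaces.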

To set up the repo in IntelliJ, open the project and change the Java version to Java 11 in File -> Project Structure.

Have you found a bug, or do you have a cool idea that you want to contribute to the project? Please file a GitHub issue.

Adding a new target format requires a developer to implement `TargetClient`. Once you have implemented that interface, you can integrate it into the `OneTableClient`. If you think others may find that target useful, please raise a Pull Request to add it to the project.