This project is an implementation of a neural network for recognizing the American Sign Language (ASL) alphabet using computer vision techniques. The model is trained to recognize hand gestures corresponding to each letter of the ASL alphabet.

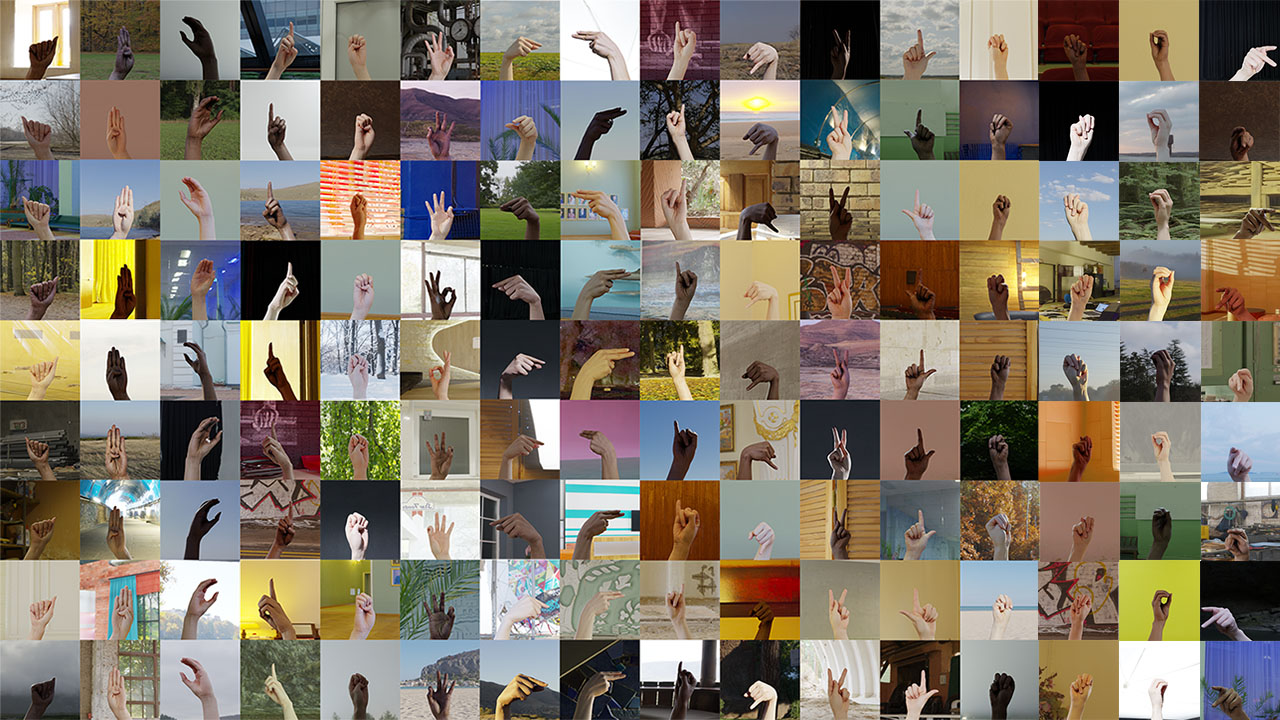

The dataset used in this project is the "Synthetic ASL Alphabet" dataset, which is available on Kaggle. Key details about the dataset:

- Source: The dataset was created by LexSet and made available on Kaggle. It is a synthetic dataset, meaning that the images are generated synthetically rather than being captured from real-life scenarios.

- Contents: The dataset contains a diverse set of hand gesture images representing the 26 letters of the ASL alphabet, from 'A' to 'Z'. Each letter is associated with multiple images showcasing different hand poses and variations. The images are labeled with their corresponding alphabet letters.

- Usage: In this project, we use the "Synthetic ASL Alphabet" dataset for training our neural network. The dataset provides a sufficient variety of hand gestures to train a robust model for ASL alphabet recognition.

- Python (>=3.6)

- OpenCV (>=4.0)

- PyTorch (>=1.7)

- NumPy (>=1.19)

- Clone the repository:

      git clone https://github.com/yourusername/ASL-OpenCV.git
      cd ASL-OpenCV

- Install the required dependencies:

      pip install opencv-python mediapipe torch torchvision torchviz numpy pandas scikit-learn

- Run the test notebook (test.ipynb).

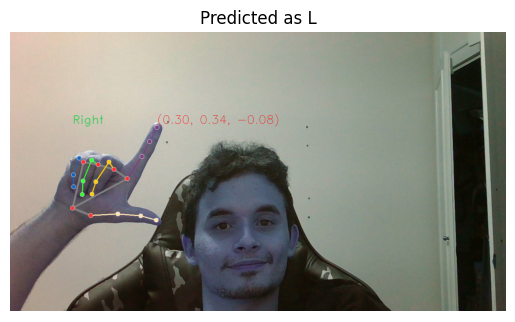

- Hand Landmarks: The project uses the MediaPipe library to extract hand landmarks from each image in the dataset. Hand landmarks are specific points or coordinates on the hand that represent key features, such as finger joints and palm locations.

- Data Representation: For each image in the dataset, the extracted hand landmarks are used to create a feature vector. The feature vector represents the spatial coordinates of these landmarks. Each landmark's (x, y, z) coordinates become individual elements in the feature vector.

- Data Organization: The feature vectors from all images are organized into a database-like structure, where each row corresponds to a sample image, and each column represents the coordinates of a specific hand landmark. This structured dataset is used for training and testing the neural net.
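The steps above can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: the function names (`landmark_columns`, `flatten_landmarks`, `extract_landmarks`) and the column naming scheme (`x0`, `y0`, `z0`, ...) are assumptions made for the example. It relies on the MediaPipe Hands solution, which reports 21 landmarks per detected hand, so each image yields a 63-element feature vector.

```python
from typing import List, Optional, Sequence, Tuple

NUM_LANDMARKS = 21  # MediaPipe Hands reports 21 landmarks per hand


def landmark_columns() -> List[str]:
    """Column names in the same order as the flattened vector (hypothetical naming)."""
    return [f"{axis}{i}" for i in range(NUM_LANDMARKS) for axis in ("x", "y", "z")]


def flatten_landmarks(points: Sequence[Tuple[float, float, float]]) -> List[float]:
    """Turn 21 (x, y, z) triples into one flat 63-element feature vector."""
    if len(points) != NUM_LANDMARKS:
        raise ValueError(f"expected {NUM_LANDMARKS} landmarks, got {len(points)}")
    return [coord for point in points for coord in point]


def extract_landmarks(image_path: str) -> Optional[List[float]]:
    """Return the feature vector for one image, or None if no hand is found."""
    # Imported here so the helpers above work without OpenCV/MediaPipe installed.
    import cv2
    import mediapipe as mp

    image = cv2.imread(image_path)
    if image is None:  # unreadable or missing file
        return None
    rgb = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)  # MediaPipe expects RGB input

    with mp.solutions.hands.Hands(static_image_mode=True, max_num_hands=1) as hands:
        results = hands.process(rgb)

    if not results.multi_hand_landmarks:
        return None
    hand = results.multi_hand_landmarks[0]
    return flatten_landmarks([(lm.x, lm.y, lm.z) for lm in hand.landmark])
```

The resulting vectors, one per image, could then be stacked into a table with one row per sample, for example with `pandas.DataFrame(rows, columns=landmark_columns())` plus a label column for the letter.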

Image of a correct prediction example

- models.py: File containing the models used for training, testing, and prediction with the NN.

- test.ipynb: Notebook for exemplifying the main usage of the application.

- analysis.ipynb: Notebook with preprocessing and analysis of the dataset created.

- dataloader.ipynb: Notebook responsible for converting the image data into a DataFrame format.

- main.py: Script that trains and tests the NN; the trained model reaches a test accuracy of 75.99%.