My implementation of "Recurrent Model of Visual Attention"

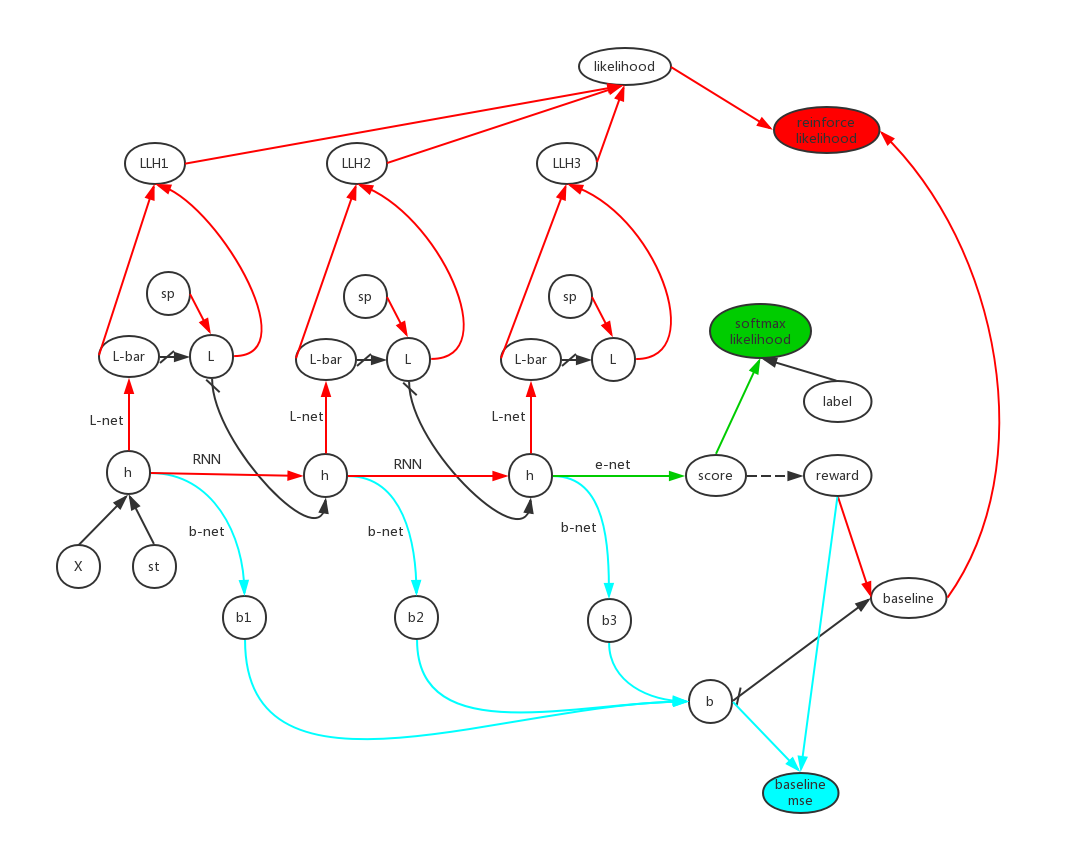

In the graph below, arrows indicate the forward flow through the network. Colored ovals mark the sources of loss, and colored lines show the flow of gradients. Lines marked with a block sign indicate that gradients do not flow through them.
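A line with a block sign corresponds to a stop-gradient operation, such as `tf.stop_gradient` in TensorFlow or `.detach()` in PyTorch. The forward value passes through unchanged, but backpropagation treats it as a constant. The sketch below is a tiny pure-Python scalar autodiff, not code from this repository. `Value`, `stop_gradient`, and `demo` are hypothetical names, and the weight, input, and loss are toy numbers. In RAM-style training, a block like this typically keeps the classification loss from reaching the location network through the sampled location, which is trained with REINFORCE instead. Whether the blocked lines in the graph are exactly these is an assumption.

```python
class Value:
    """Scalar node in a tiny reverse-mode autodiff graph (illustrative only)."""

    def __init__(self, data, parents=(), local_grads=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents          # nodes this value was computed from
        self._local_grads = local_grads  # d(self)/d(parent) for each parent

    def __add__(self, other):
        return Value(self.data + other.data, (self, other), (1.0, 1.0))

    def __mul__(self, other):
        return Value(self.data * other.data, (self, other), (other.data, self.data))

    def stop_gradient(self):
        # Same forward value, but no parents: backprop stops here ("block sign").
        return Value(self.data)

    def backward(self):
        # Build a topological order with a DFS, then propagate from output to inputs.
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            for parent, local in zip(node._parents, node._local_grads):
                parent.grad += local * node.grad


def demo(block: bool) -> float:
    """Return d(loss)/d(w), with or without a stop-gradient on the location."""
    w = Value(3.0)           # hypothetical location-network weight
    x = Value(2.0)           # hypothetical input feature
    loc = w * x              # forward: predicted location = 6.0
    if block:
        loc = loc.stop_gradient()
    feat = loc * Value(0.5)  # downstream use of the location
    loss = feat * feat       # stand-in loss
    loss.backward()
    return w.grad


print(demo(block=False))  # 6.0: gradient reaches w
print(demo(block=True))   # 0.0: gradient is blocked before w
```

With the block in place, the loss value is identical, but `w` receives no gradient from it. This is why the blocked parts of the network need their own training signal.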