This project aims to develop an advanced system that integrates Automatic Speech Recognition (ASR), Speech Emotion Recognition (SER), and a Text Summarizer. The system addresses accurate speech recognition across diverse accents and noisy environments, interprets emotional tone in real time (sentiment analysis), and generates summaries that retain essential information. Targeting applications such as customer service, business meetings, media, and education, the project seeks to improve documentation, understanding, and emotional context in communication.

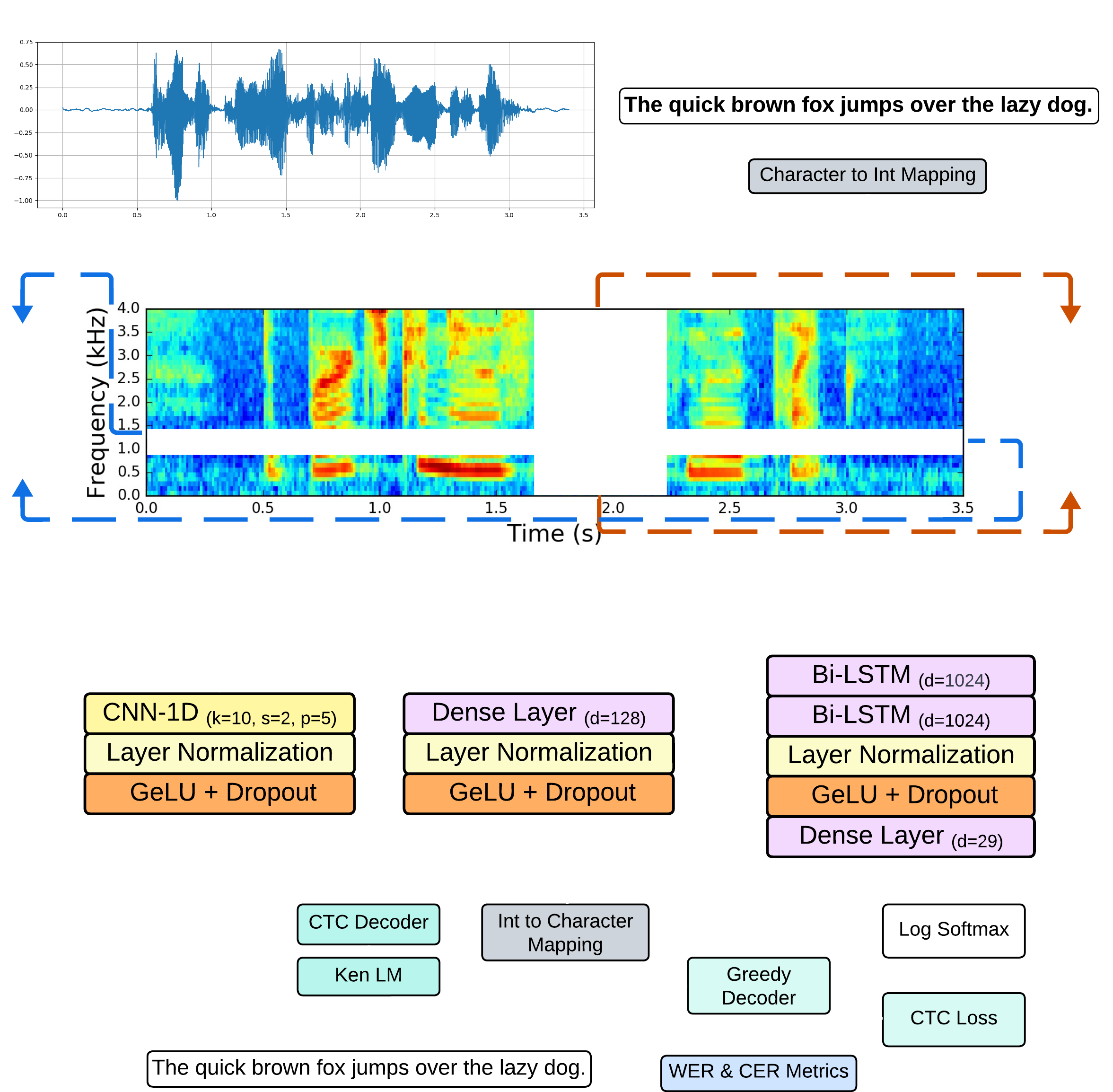

- Baseline Model for ASR: CNN-BiLSTM

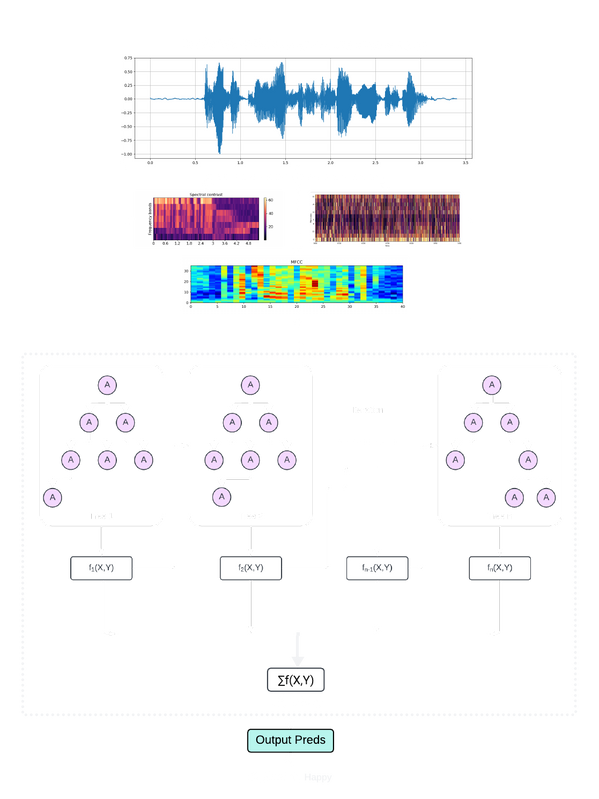

- Baseline Model for SER: XGBoost

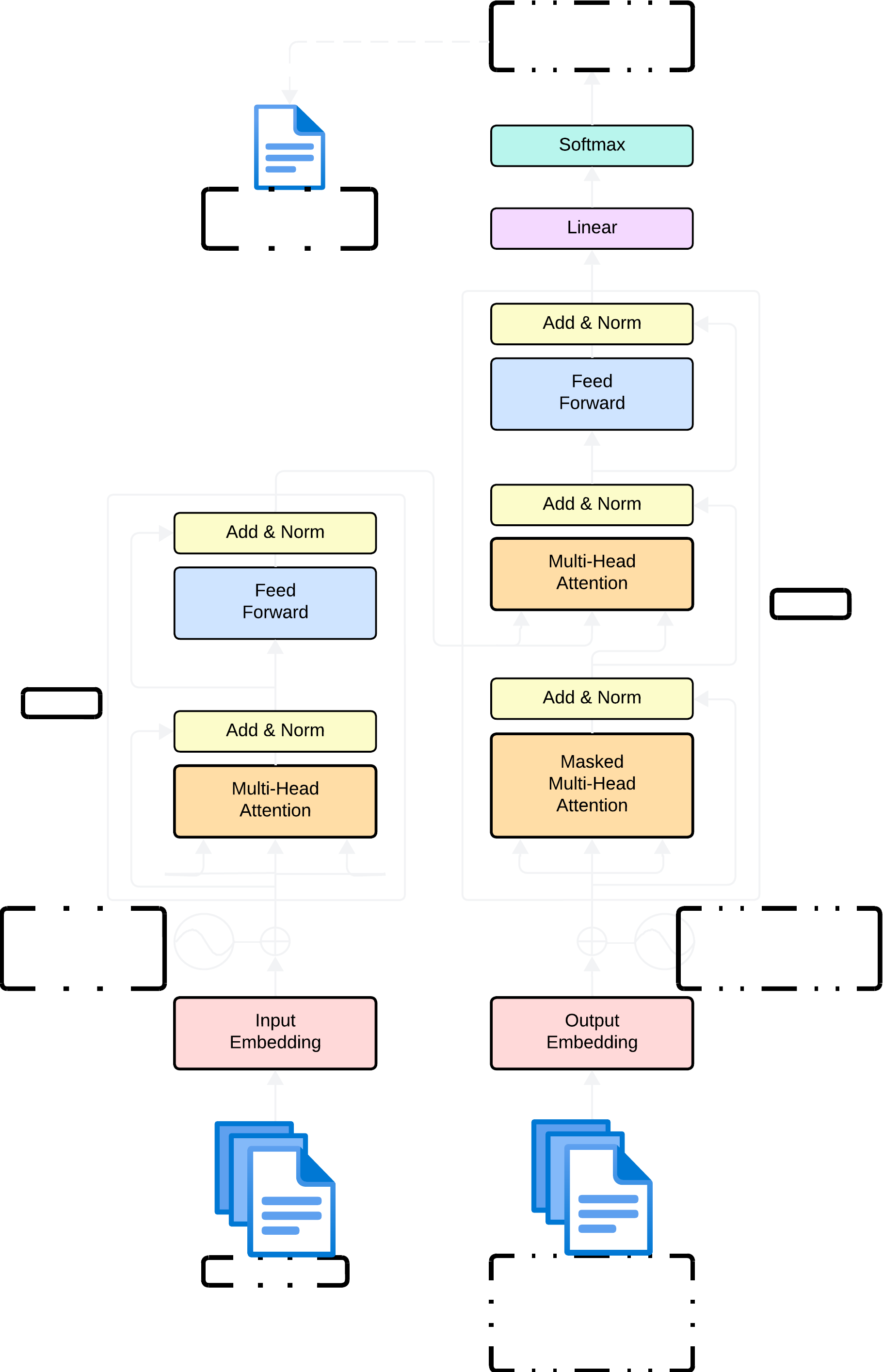

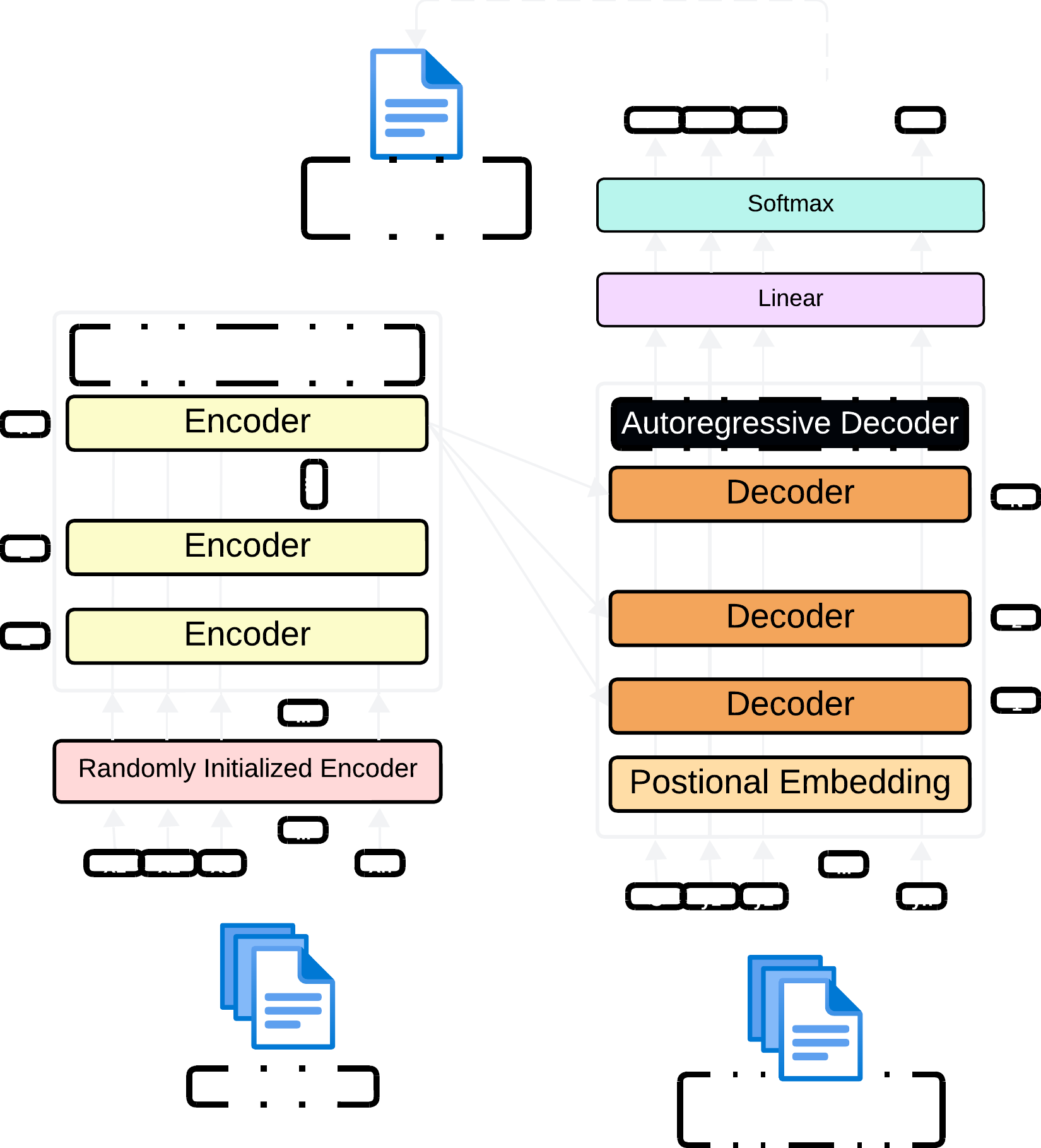

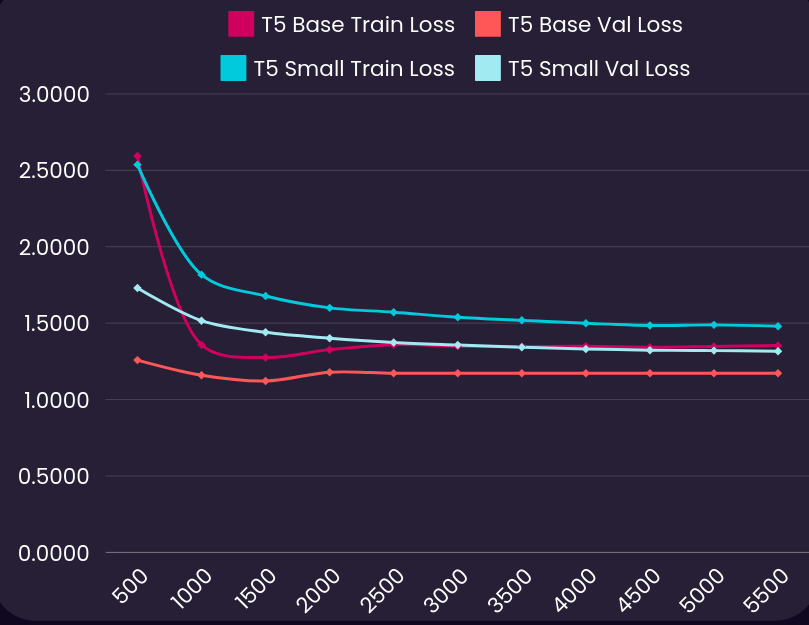

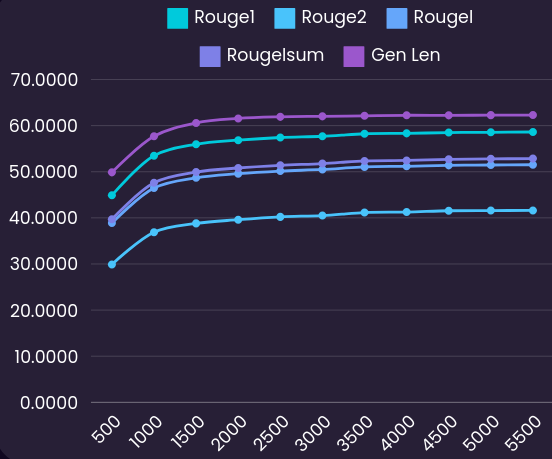

- Baseline Model for Text Summarizer: T5-Small, T5-Base

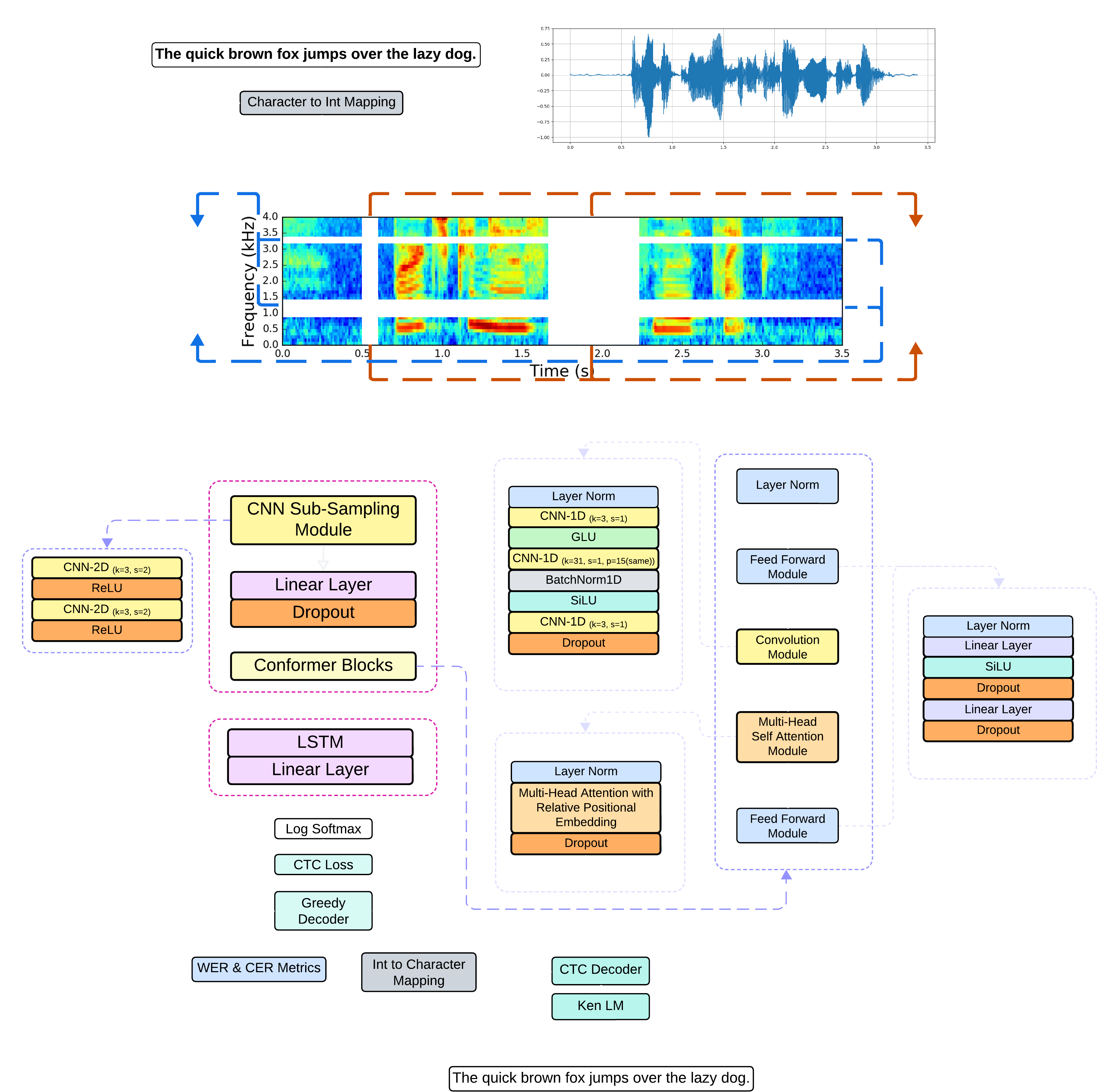

- Final Model for ASR: Conformer

- Final Model for SER: Stacked CNN-BiLSTM with Self-Attention

- Final Model for Text Summarizer: BART Large

- Accurate ASR System: Handle diverse accents and operate effectively in noisy environments

- Emotion Analysis: Recognize emotion from the tone of speech

- Meaningful Text Summarizer: Preserve critical information without loss

- Integrated System: Combine all components to provide real-time transcription and summaries
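The integrated flow can be pictured as three stages applied to one audio clip. The sketch below is a hypothetical outline, not code from this repository: `analyze_clip`, `transcribe`, `classify_emotion`, and `summarize` are placeholder names standing in for the Conformer, SER, and BART models. Note that emotion is read from the audio itself (tone of speech), while the summary is produced from the transcript.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass
class AnalysisResult:
    transcript: str
    emotion: str
    summary: str


def analyze_clip(
    audio: Sequence[float],
    transcribe: Callable[[Sequence[float]], str],
    classify_emotion: Callable[[Sequence[float]], str],
    summarize: Callable[[str], str],
) -> AnalysisResult:
    """Run ASR and SER on the same audio, then summarize the transcript.

    The three callables are hypothetical stand-ins for the ASR (Conformer),
    SER (stacked CNN-BiLSTM with self-attention), and summarizer (BART) models.
    """
    transcript = transcribe(audio)       # speech -> text
    emotion = classify_emotion(audio)    # tone of voice -> emotion label
    summary = summarize(transcript)      # text -> condensed text
    return AnalysisResult(transcript, emotion, summary)


if __name__ == "__main__":
    # Dummy stand-ins so the sketch runs without any model weights.
    result = analyze_clip(
        audio=[0.0] * 16000,
        transcribe=lambda a: "the meeting moved to friday because the report is late",
        classify_emotion=lambda a: "neutral",
        summarize=lambda t: " ".join(t.split()[:4]),
    )
    print(result)
```

Passing the models in as callables keeps the orchestration independent of how each model is loaded, which is one way to swap a baseline model for a final one.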

| Base Model (CNN-BiLSTM) | Final Model |
|---|---|

| Base Model (XGBoost) | Final Model |
|---|---|

| Base Model (T5-Small, T5-Base) | Final Model |
|---|---|

**Important:** To clone the repository with its submodules, run the following command:

```bash
git clone --recursive https://github.com/LuluW8071/ASR-with-Speech-Sentiment-and-Text-Summarizer.git
```

**Important:** Before installing the dependencies from `requirements.txt`, make sure the following system prerequisites are installed. The CUDA Toolkit and the CUDA build of PyTorch are not needed for inference, but make sure the CPU build of PyTorch is installed.

- SoX and build tools (Linux):

  ```bash
  sudo apt update
  sudo apt install sox libsox-fmt-all build-essential zlib1g-dev libbz2-dev liblzma-dev
  ```

- PortAudio, FFmpeg, and PyAudio (Linux):

  ```bash
  sudo apt-get install libasound-dev portaudio19-dev libportaudio2 libportaudiocpp0
  # libav-tools is not available on recent Ubuntu releases; drop it if apt cannot find it
  sudo apt-get install ffmpeg libav-tools
  sudo pip install pyaudio
  ```

Then install the Python dependencies:

```bash
pip install -r requirements.txt
```

2. Configure Comet-ML Integration

**Note:** Replace `dummy_key` with your actual Comet-ML API key and project name in the `.env` file to enable real-time loss-curve plotting, system-metrics tracking, and confusion-matrix visualization.

```
API_KEY = "dummy_key"
PROJECT_NAME = "dummy_key"
```
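The two keys above are plain `KEY = "value"` lines. How the training scripts load them is not shown here; a library such as python-dotenv is a common choice, but that is an assumption. The stdlib-only sketch below just illustrates what the file resolves to; `parse_env` is a hypothetical helper, not part of this project.

```python
import os


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY = "value" lines; blank lines and # comments are skipped."""
    values: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        value = value.strip()
        # Strip one pair of matching surrounding quotes, if present.
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]
        values[key.strip()] = value
    return values


if __name__ == "__main__":
    sample = 'API_KEY = "dummy_key"\nPROJECT_NAME = "dummy_key"\n'
    config = parse_env(sample)
    os.environ.update(config)  # expose the values to code that reads os.environ
    print(config)
```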

**Note:** Add `--not-convert` if you don't want the audio converted.

```bash
py common_voice.py --file_path file_path/to/validated.tsv --save_json_path file_path/to/save/json -w 4 --percent 10 --output_format wav/flac
```
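`common_voice.py` turns Common Voice's `validated.tsv` into JSON files for training. Its exact output format is not documented here, so the sketch below rests on assumptions: one JSON object per line with hypothetical `key` (audio path) and `text` fields, and `--percent` read as the share of clips held out for the test set. It illustrates that kind of split; it is not the script itself.

```python
import csv
import io
import json
import random


def make_manifests(tsv_text: str, percent: int, seed: int = 0) -> tuple[list[str], list[str]]:
    """Split Common Voice rows into train/test manifest lines (JSON Lines).

    Assumes 'path' and 'sentence' columns, as in Common Voice's validated.tsv.
    `percent` is the (assumed) share of rows placed in the test set.
    """
    reader = csv.DictReader(io.StringIO(tsv_text), delimiter="\t", quoting=csv.QUOTE_NONE)
    rows = list(reader)
    random.Random(seed).shuffle(rows)  # deterministic shuffle for a reproducible split
    n_test = len(rows) * percent // 100

    def to_line(row: dict) -> str:
        # Hypothetical field names: "key" for the clip path, "text" for its transcript.
        return json.dumps({"key": row["path"], "text": row["sentence"]})

    test = [to_line(r) for r in rows[:n_test]]
    train = [to_line(r) for r in rows[n_test:]]
    return train, test


if __name__ == "__main__":
    header = "client_id\tpath\tsentence\n"
    body = "".join(f"c{i}\tclip_{i}.mp3\tsentence number {i}\n" for i in range(20))
    train, test = make_manifests(header + body, percent=10)
    print(len(train), len(test))  # 18 2
```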

**Note:** Add `--checkpoint_path path/to/checkpoint_file` to load a pre-trained model and fine-tune it.

```bash
py train.py --train_json path/to/train.json --valid_json path/to/test.json -w 4 --batch_size 64 -lr 2e-4 --lr_step_size 10 --lr_gamma 0.2 --epochs 50
```
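If `--lr_step_size` and `--lr_gamma` drive a step-decay schedule such as PyTorch's `torch.optim.lr_scheduler.StepLR` stepped once per epoch (an assumption about how `train.py` uses them), the learning rate during epoch `e` is `lr * gamma ** (e // step_size)`. The sketch below tabulates that for the values in the command above; `step_lr` is a hypothetical helper.

```python
def step_lr(base_lr: float, gamma: float, step_size: int, epoch: int) -> float:
    """Learning rate in effect during a 0-indexed epoch under step decay."""
    return base_lr * gamma ** (epoch // step_size)


if __name__ == "__main__":
    # Values from the training command: -lr 2e-4 --lr_step_size 10 --lr_gamma 0.2 --epochs 50
    for start in range(0, 50, 10):
        lr = step_lr(2e-4, 0.2, 10, start)
        print(f"epochs {start:2d}-{start + 9:2d}: lr = {lr:.1e}")
```

Under this reading, the rate drops from 2.0e-04 to 4.0e-05, 8.0e-06, 1.6e-06 and finally 3.2e-07, a total reduction of 0.2^4 = 1/625 by the last ten epochs.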

**Note:** Run `extract_sentence.py` to quickly build a sentence corpus for language modeling.

```bash
py extract_sentence.py --file_path file_path/to/validated.tsv --save_txt_path file_path/to/save/txt
```
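An n-gram language-model corpus is essentially one normalized sentence per line. The sketch below assumes the `sentence` column of `validated.tsv` and a simple normalization (lowercase, keep only letters, apostrophes, and spaces); what `extract_sentence.py` actually keeps may differ, and `extract_sentences` is a hypothetical name.

```python
import csv
import io
import re


def extract_sentences(tsv_text: str) -> list[str]:
    """Return one normalized sentence per row of a Common Voice TSV."""
    reader = csv.DictReader(io.StringIO(tsv_text), delimiter="\t", quoting=csv.QUOTE_NONE)
    corpus = []
    for row in reader:
        # Replace anything other than a-z, apostrophe, or space with a space.
        text = re.sub(r"[^a-z' ]+", " ", row["sentence"].lower())
        text = " ".join(text.split())  # collapse repeated whitespace
        if text:
            corpus.append(text)
    return corpus


if __name__ == "__main__":
    sample = "path\tsentence\na.mp3\tHello, World!\nb.mp3\tIt's 5 o'clock.\n"
    print("\n".join(extract_sentences(sample)))
```

This normalization simply drops digits ("5" disappears); pipelines that need numbers usually spell them out first so the LM vocabulary matches what the ASR model can emit.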

**Warning:** The CTC decoder can only be installed on Linux, so make sure you are running Ubuntu or another Linux distribution.

```bash
sudo apt update
sudo apt install sox libsox-fmt-all build-essential zlib1g-dev libbz2-dev liblzma-dev
```

**Note:** Before installing the CTC decoder, create a `pyproject.toml` file inside the `src/ASR-with-Speech-Sentiment-Analysis-Text-Summarizer/Automatic_Speech_Recognition/submodules/ctcdecode` directory with the following contents:

```toml
[build-system]
requires = ["setuptools", "torch"]
```

Finally, open a terminal in that `ctcdecode` directory and install the CTC decoder with:

```bash
pip3 install .
```

**Note:** This takes a few minutes.

Use the previously extracted sentences to build a language model with KenLM.

Build KenLM in the `src/ASR-with-Speech-Sentiment-Analysis-Text-Summarizer/Automatic_Speech_Recognition/submodules/ctcdecode/third_party/kenlm` directory using CMake, then compile the language model with `lmplz`, where `n` is the n-gram order:

```bash
mkdir -p build
cd build
cmake ..
make -j 4
bin/lmplz -o n < path/to/corpus.txt > path/to/save/language/model.arpa
```

Follow the instructions in the KenLM repository README.md to convert the `.arpa` file to a binary `.bin` file (KenLM's `build_binary` tool) for faster inference.
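In the commands here, the KenLM model is passed only at inference time (`--kenlm_file`), where it rescores transcription hypotheses. Beam-search CTC decoders that accept an n-gram LM, such as `CTCBeamDecoder` in ctcdecode with its `alpha` and `beta` arguments, generally rank a candidate transcript $y$ for audio $x$ with a score of the following form. This is the general shallow-fusion scheme, not a statement of the weights `engine.py` actually uses:

```math
\operatorname{score}(y) = \log P_{\text{CTC}}(y \mid x) + \alpha \log P_{\text{LM}}(y) + \beta \, \lvert y \rvert
```

Here $\lvert y \rvert$ is the number of words in $y$; a larger $\alpha$ trusts the language model more, and a positive $\beta$ counteracts the LM's preference for short transcripts.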

After training, freeze the model using `freeze.py`:

```bash
py freeze.py --model_checkpoint "path/model/speechrecognition.ckpt" --save_path "path/to/save/"
```

- For terminal inference:

  ```bash
  python3 engine.py --file_path "path/model/speechrecognition.pt" --kenlm_file "path/to/nglm.arpa or path/to/nglm.bin"
  ```

- For the web interface:

  ```bash
  python3 app.py --file_path "path/model/speechrecognition.pt" --kenlm_file "path/to/nglm.arpa or path/to/nglm.bin"
  ```
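The inference commands above decode with a KenLM-backed beam search. For intuition about what a CTC decoder does before any language model is involved, here is best-path (greedy) decoding: take the most likely token in each frame, merge consecutive repeats, then drop the blank token. The label string and blank index 0 are illustrative assumptions, not this project's vocabulary.

```python
from itertools import groupby
from typing import Sequence

BLANK = 0  # assumed index of the CTC blank token


def greedy_ctc_decode(frame_probs: Sequence[Sequence[float]], labels: str) -> str:
    """Best-path CTC decoding: argmax per frame, collapse repeats, remove blanks."""
    best = [max(range(len(p)), key=p.__getitem__) for p in frame_probs]
    collapsed = [idx for idx, _ in groupby(best)]  # merge runs of the same index
    return "".join(labels[i] for i in collapsed if i != BLANK)


if __name__ == "__main__":
    labels = "_abc"  # index 0 is the blank
    frames = [
        [0.1, 0.8, 0.05, 0.05],  # a
        [0.1, 0.7, 0.1, 0.1],    # a again: merged with the previous frame
        [0.9, 0.05, 0.0, 0.05],  # blank: separates the next 'a'
        [0.1, 0.6, 0.2, 0.1],    # a
        [0.1, 0.1, 0.1, 0.7],    # c
    ]
    print(greedy_ctc_decode(frames, labels))  # aac
```

The blank is what lets CTC emit genuine double letters: without the blank frame, the three `a` frames would collapse to a single `a`.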

**Note:** Run `Speech_Sentiment.ipynb` first to generate a CSV table of file paths and emotion labels, then downsample all clips:

```bash
py downsample.py --file_path path/to/audio_file.csv --save_csv_path output/path -w 4 --output_format wav/flac
```

Augment the dataset:

```bash
py augment.py --file_path "path/to/emotion_dataset.csv" --save_csv_path "output/path" -w 4 --percent 20
```

Train the model:

```bash
py neuralnet/train.py --train_csv "path/to/train.csv" --test_csv "path/to/test.csv" -w 4 --batch_size 256 --epochs 25 -lr 1e-3
```
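`augment.py` takes `--percent 20`, which suggests that a fraction of the clips receives augmented copies; the augmentations it applies are not listed here. One common choice for speech emotion data is additive white noise at a target signal-to-noise ratio (SNR). The sketch below shows that technique on a plain list of samples; `add_noise` and the 20 dB value are illustrative assumptions.

```python
import math
import random
from typing import Sequence


def add_noise(signal: Sequence[float], snr_db: float, seed: int = 0) -> list[float]:
    """Add Gaussian white noise scaled so signal power / noise power = 10 ** (snr_db / 10)."""
    rng = random.Random(seed)
    noise = [rng.gauss(0.0, 1.0) for _ in signal]
    signal_power = sum(s * s for s in signal) / len(signal)
    noise_power = sum(n * n for n in noise) / len(noise)
    target_noise_power = signal_power / (10 ** (snr_db / 10))
    # Rescale the drawn noise so the SNR hits the target exactly, not just on average.
    scale = math.sqrt(target_noise_power / noise_power)
    return [s + scale * n for s, n in zip(signal, noise)]


if __name__ == "__main__":
    # 0.1 s of a 440 Hz tone sampled at 16 kHz.
    tone = [math.sin(2 * math.pi * 440 * t / 16000) for t in range(1600)]
    noisy = add_noise(tone, snr_db=20.0)
    print(len(noisy), round(max(abs(x) for x in noisy), 3))
```

Lower SNR values give harder, noisier training examples; choosing several SNRs per clip is a common way to spread the augmentation.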

**Note:** Run the notebook in the `src/Text_Summarizer` directory.

You may need a 🤗 Hugging Face token with write permission to upload your trained model directly to the 🤗 Hub.

| Project | Dataset Source |
|---|---|
| ASR | Mozilla Common Voice, LibriSpeech, Mimic Record Studio |
| SER | RAVDESS, CREMA-D, TESS, SAVEE |
| Text Summarizer | XSum, BillSum |

The code styling adheres to autopep8 formatting.

| Project | Base Model Link | Final Model Link |
|---|---|---|
| ASR | CNN-BiLSTM | Conformer |
| SER | XGBoost |  |
| Text Summarizer | T5 Small-FineTune, T5 Base-FineTune | BART |

| Project | Metrics Used |

|---|---|

| ASR | WER, CER |

| SER | Accuracy, F1-Score, Precision, Recall |

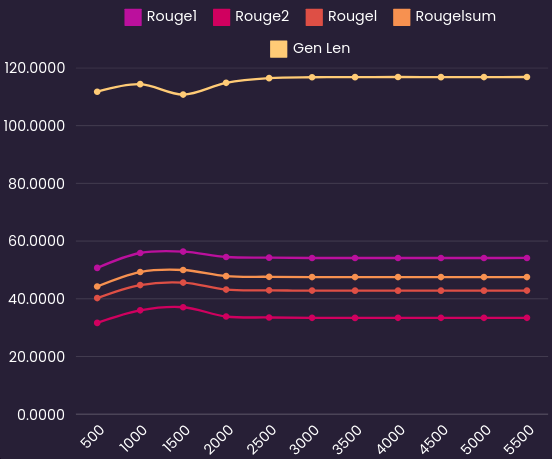

| Text Summarizer | Rouge1, Rouge2, Rougel, Rougelsum, Gen Len |
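WER and CER are both edit distances normalized by the reference length, over words and characters respectively; ROUGE-1 measures unigram overlap between a generated summary and a reference. The sketch below computes all three from scratch for illustration. It is not this project's evaluation code, and library implementations may tokenize and normalize differently, so their scores need not match exactly.

```python
from collections import Counter
from typing import Sequence


def edit_distance(ref: Sequence, hyp: Sequence) -> int:
    """Levenshtein distance: minimum substitutions + deletions + insertions."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            curr.append(min(prev[j] + 1,              # delete r
                            curr[j - 1] + 1,          # insert h
                            prev[j - 1] + (r != h)))  # substitute (or match)
        prev = curr
    return prev[-1]


def wer(reference: str, hypothesis: str) -> float:
    """Word error rate; assumes a non-empty reference."""
    ref = reference.split()
    return edit_distance(ref, hypothesis.split()) / len(ref)


def cer(reference: str, hypothesis: str) -> float:
    """Character error rate; assumes a non-empty reference."""
    return edit_distance(reference, hypothesis) / len(reference)


def rouge1_f1(reference: str, candidate: str) -> float:
    """ROUGE-1 F1 from clipped unigram overlap (whitespace tokens, lowercased)."""
    ref = Counter(reference.lower().split())
    cand = Counter(candidate.lower().split())
    overlap = sum((ref & cand).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    print(wer("the cat sat on the mat", "the cat sit on mat"))  # 1 substitution + 1 deletion over 6 words
    print(cer("kitten", "sitting"))                             # 3 edits over 6 characters
    print(rouge1_f1("the meeting moved to friday", "meeting moved to friday afternoon"))
```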

| Project | Base Model | Final Model Link |
|---|---|---|
| ASR | CNN-BiLSTM |  |
| Speech Sentiment | XGBoost |  |
| Text Summarizer |  |  |
