PyTorch implementation of our paper "Collaborative Learning for Extremely Low Bit Asymmetric Hashing" [Link].

- Python 2.7

- PyTorch (version >= 0.4.1)

- CIFAR-10: download the CIFAR-10 Matlab version [Link], then run the script

matlab ./data/CIFAR-10/SaveFig.m

- NUS-WIDE dataset

- MIRFlickr dataset
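Before training, it may help to confirm that the installed PyTorch satisfies the version requirement listed above (>= 0.4.1). The following is a minimal sketch, not part of this repository: `parse_version` and `meets_minimum` are hypothetical helper names, and the comparison only looks at the leading numeric part of the version string.

```python
import re


def parse_version(text):
    """Return the leading numeric components of a version string as a tuple."""
    # Suffixes such as "+cu117" or ".post2" are ignored.
    match = re.match(r"\d+(?:\.\d+)*", text)
    if match is None:
        raise ValueError("unrecognised version string: %r" % (text,))
    return tuple(int(part) for part in match.group(0).split("."))


def meets_minimum(installed, minimum="0.4.1"):
    """True when `installed` is at least `minimum` (tuple comparison)."""
    return parse_version(installed) >= parse_version(minimum)


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        print("PyTorch is not installed")
    else:
        ok = meets_minimum(torch.__version__)
        print("PyTorch %s: %s" % (torch.__version__, "ok" if ok else "too old"))
```

The helpers avoid any dependency beyond the standard library, so the check itself runs even when PyTorch is missing.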

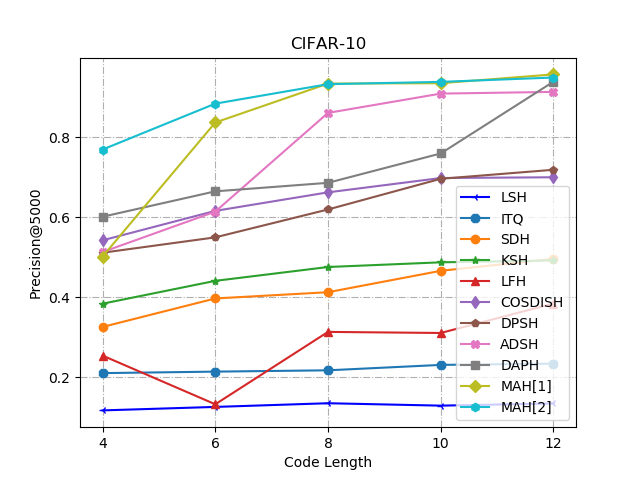

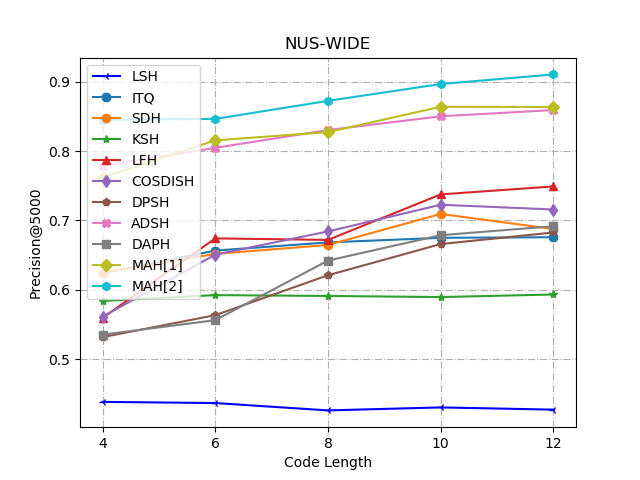

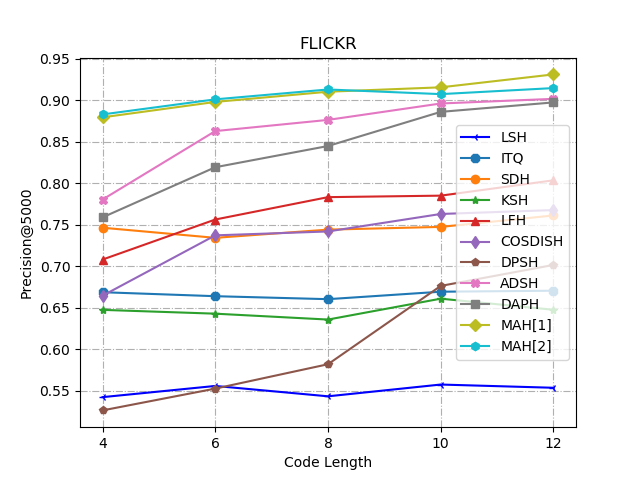

Results by code length:

| Method | Backbone | 4 bits | 6 bits | 8 bits | 10 bits | 12 bits |
| --- | --- | --- | --- | --- | --- | --- |
| MAH-flat | ResNet50 | 0.4759 | 0.8197 | 0.9339 | 0.9335 | 0.9503 |
| MAH-cascade | ResNet50 | 0.7460 | 0.8950 | 0.9429 | 0.9489 | 0.9537 |
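The table covers code lengths from 4 to 12 bits, while the training commands in this README show only `--bits '4'`. A sweep over all five lengths could be scripted roughly as follows. This is a sketch under assumptions: it reuses the `--bits`, `--gpu` and `--batch-size` flags and the script names exactly as they appear in this README, and it assumes each script accepts the other code lengths as a single value. `build_command` and `sweep` are hypothetical helpers, not part of the repository.

```python
import subprocess
import sys

# Code lengths taken from the results table in this README.
CODE_LENGTHS = (4, 6, 8, 10, 12)


def build_command(script, bits, gpu="1", batch_size=64):
    """Assemble one training command using the flags shown in this README."""
    # sys.executable stands in for the `python` used in the README commands.
    return [sys.executable, script,
            "--bits", str(bits),
            "--gpu", str(gpu),
            "--batch-size", str(batch_size)]


def sweep(script, dry_run=True):
    """Build (and optionally run) one command per code length."""
    commands = [build_command(script, bits) for bits in CODE_LENGTHS]
    for command in commands:
        print(" ".join(command))
        if not dry_run:
            # check_call raises CalledProcessError if training exits non-zero.
            subprocess.check_call(command)
    return commands


if __name__ == "__main__":
    # Dry run: only prints the commands that would be executed.
    sweep("cascade_CIFAR-10.py", dry_run=True)
```

With `dry_run=True` the script only prints the commands, which makes it easy to inspect them before committing GPU time.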

For training with the cascaded multihead structure on different datasets:

python cascade_CIFAR-10.py --bits '4' --gpu '1' --batch-size 64

python cascade_FLICKR.py --bits '4' --gpu '1' --batch-size 64

python cascade_NUS_WIDE.py --bits '4' --gpu '1' --batch-size 64

For training with the flat multihead structure on different datasets:

python flat_CIFAR-10.py --bits '4' --gpu '1' --batch-size 64

python flat_FLICKR.py --bits '4' --gpu '1' --batch-size 64

python flat_NUS_WIDE.py --bits '4' --gpu '1' --batch-size 64

Please cite the following paper in your publications if it helps your research:

@article{DBLP:journals/corr/abs-1809-09329,
  author        = {Yadan Luo and Yang Li and Fumin Shen and Yang Yang and Peng Cui and Zi Huang},
  title         = {Collaborative Learning for Extremely Low Bit Asymmetric Hashing},
  journal       = {CoRR},
  volume        = {abs/1809.09329},
  year          = {2018},
  url           = {http://arxiv.org/abs/1809.09329},
  archivePrefix = {arXiv},
  eprint        = {1809.09329},
  timestamp     = {Wed, 13 Mar 2019 15:40:02 +0100},
  biburl        = {https://dblp.org/rec/bib/journals/corr/abs-1809-09329},
  bibsource     = {dblp computer science bibliography, https://dblp.org}
}