Towards Better Accuracy-Efficiency Trade-Offs: Dynamic Activity Inference via Collaborative Learning from Various Width-Resolution Configurations

Lutong Qin, Lei Zhang*, Chengrun Li, Chaoda Song, Dongzhou Cheng, Shuoyuan Wang, Hao Wu, Aiguo Song

Nanjing Normal University | Shanghai Jiao Tong University | Case Western Reserve University | University of Macau | Yunnan University | Southeast University

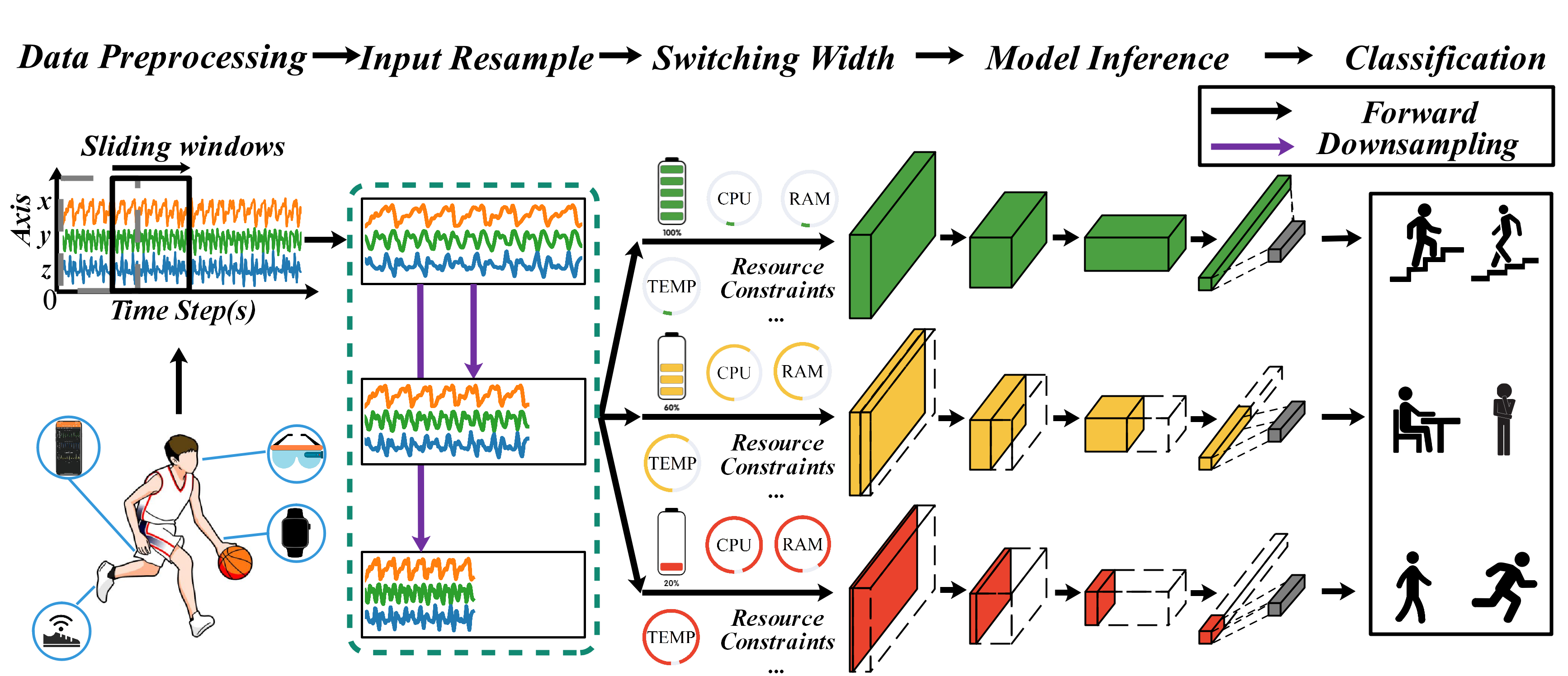

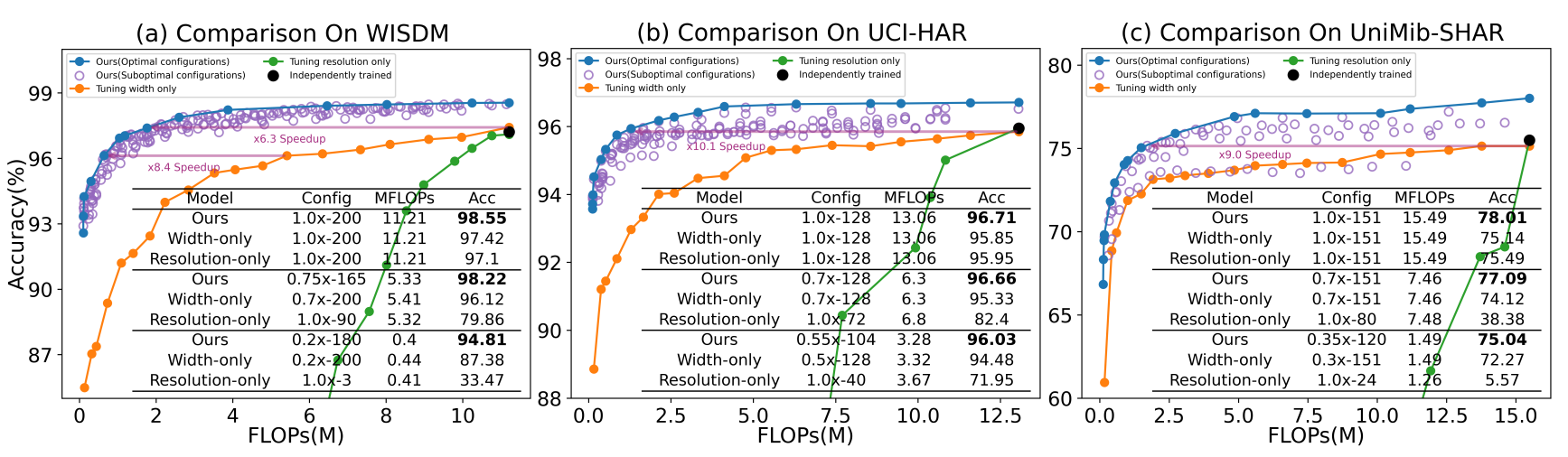

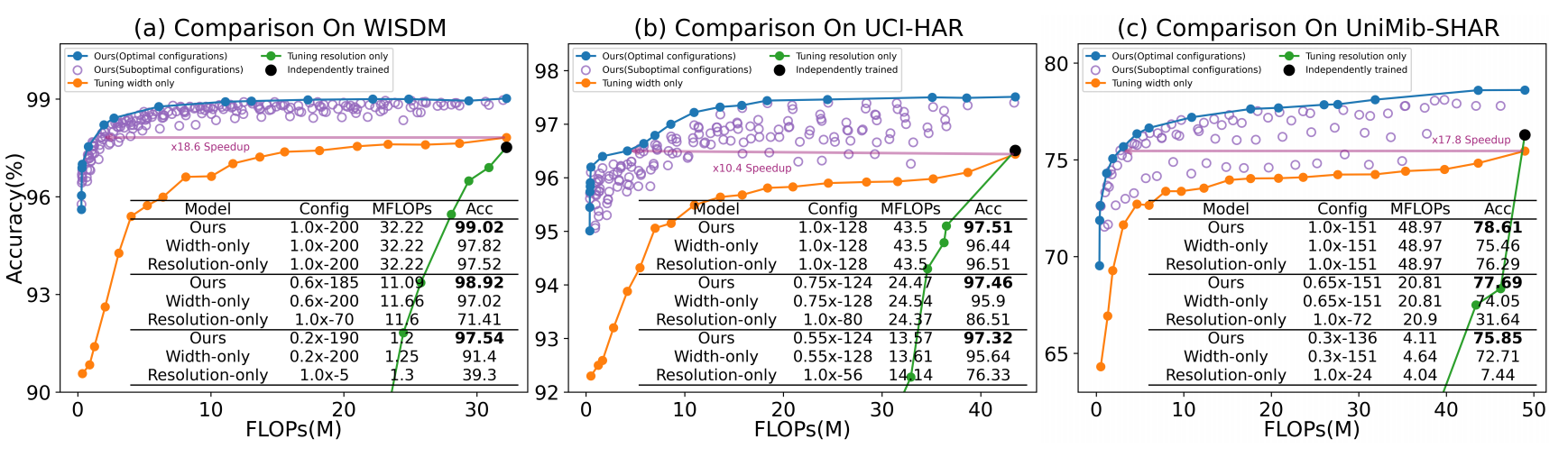

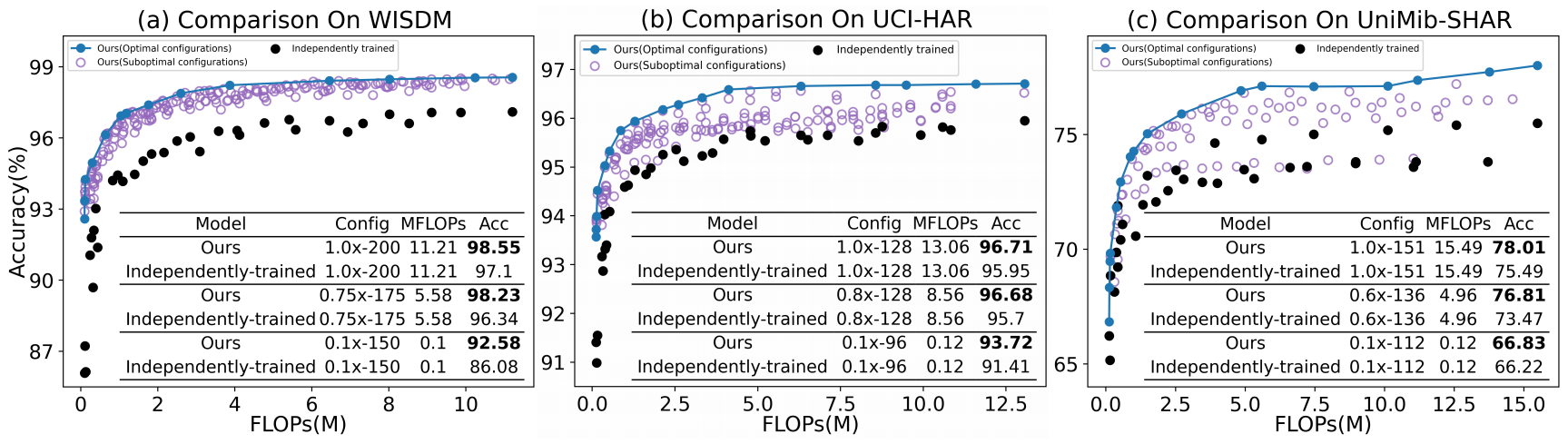

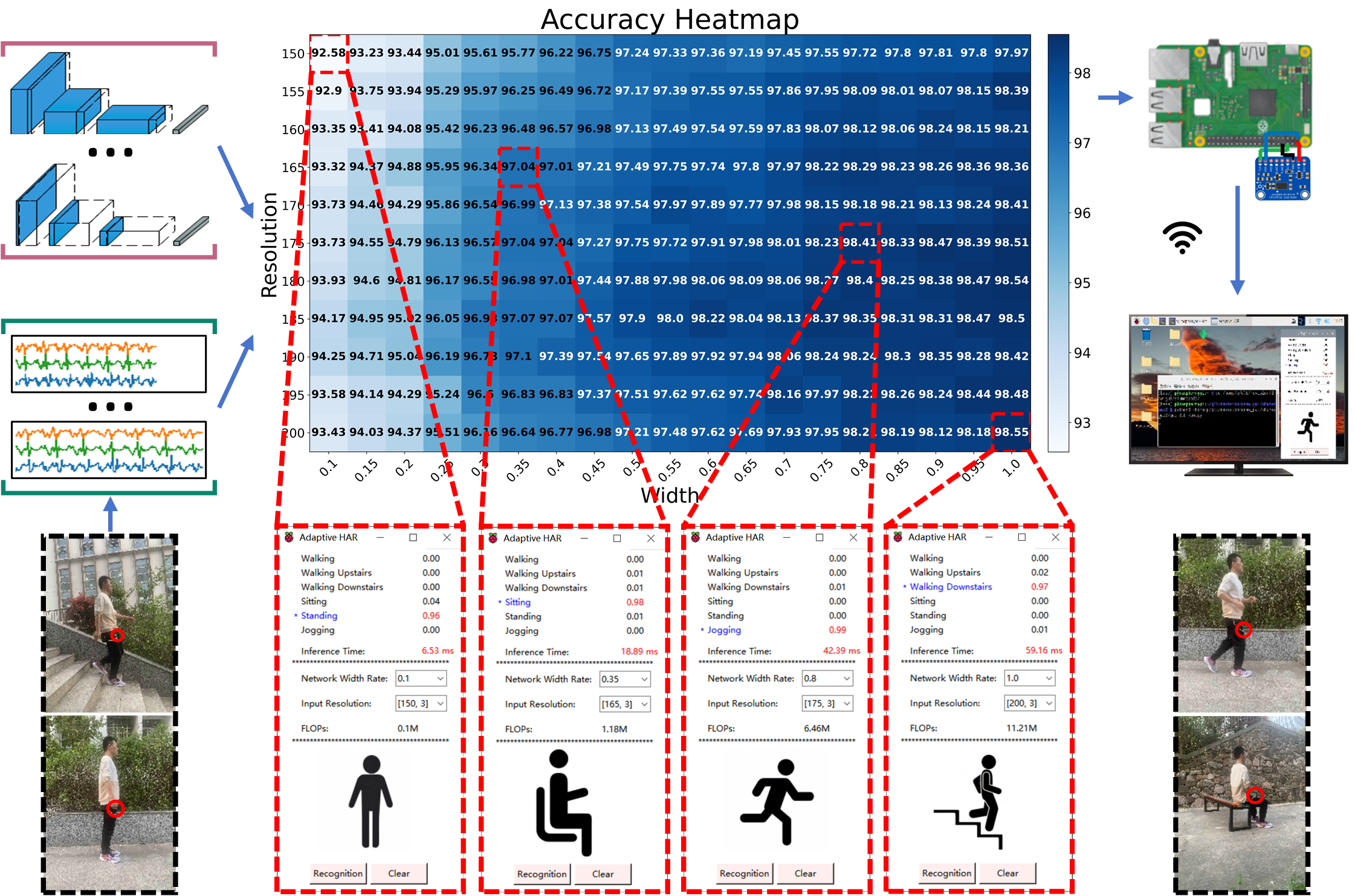

This paper proposes Dynamic Activity Inference via Collaborative Learning, an approach to human activity recognition (HAR) on mobile devices. Conventional HAR models are static: a single network with a fixed width and input resolution cannot adapt when the resources available on the device change. Our method instead trains subnetworks at different widths and input resolutions collaboratively, which enables dynamic accuracy-latency trade-offs at runtime. Conventional approaches need a separate network for each operating point. Ours switches between configurations without retraining, which reduces training and deployment costs. Extensive experiments validate its effectiveness.
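The width-resolution training and runtime switching described above can be sketched in plain Python. This is a minimal, hypothetical illustration, not the authors' implementation. The following are all assumptions: the `WIDTHS` and `RESOLUTIONS` grids, the MutualNet-style "sandwich rule" sampling, the width² × resolution cost proxy, and the helper names `sample_configs`, `relative_cost`, and `pick_config`.

```python
import random
from typing import Dict, List, Optional, Tuple

# Hypothetical search space (assumption): the grids used in the paper may differ.
WIDTHS = [0.25, 0.5, 0.75, 1.0]   # width multipliers applied to hidden layers
RESOLUTIONS = [0.5, 0.75, 1.0]    # assumed: fraction of the original window length

Config = Tuple[float, float]      # (width multiplier, resolution factor)


def sample_configs(n_random: int = 2, rng: Optional[random.Random] = None) -> List[Config]:
    """Assumed sandwich-rule sampling for one training step: the full
    configuration, the smallest one, and n_random randomly drawn ones.
    In a MutualNet-style setup the full configuration learns from the labels
    and the smaller ones learn collaboratively from it (e.g. by distillation)."""
    if rng is None:
        rng = random.Random()
    configs = [(max(WIDTHS), max(RESOLUTIONS)), (min(WIDTHS), min(RESOLUTIONS))]
    for _ in range(n_random):
        configs.append((rng.choice(WIDTHS), rng.choice(RESOLUTIONS)))
    return configs


def relative_cost(width: float, resolution: float) -> float:
    """Rough compute relative to the full model. A 1D conv costs about
    in_channels * out_channels * length, so scaling both channel counts by
    `width` and the length by `resolution` gives width**2 * resolution.
    This ignores the input layer, whose sensor channels are not scaled."""
    return width ** 2 * resolution


def pick_config(budget: float, accuracy: Dict[Config, float]) -> Config:
    """Return the most accurate configuration whose relative cost fits `budget`.
    `accuracy` maps each configuration to its accuracy measured offline."""
    feasible = [c for c in accuracy if relative_cost(*c) <= budget]
    if not feasible:
        raise ValueError("no configuration fits the budget")
    return max(feasible, key=lambda c: accuracy[c])


if __name__ == "__main__":
    print(sample_configs(rng=random.Random(0)))
    # Illustrative numbers only, not results from the paper.
    measured = {(1.0, 1.0): 0.97, (0.5, 1.0): 0.95, (0.25, 0.5): 0.90}
    print(pick_config(0.3, measured))  # (0.5, 1.0)
```

In this sketch, a device would measure each configuration's accuracy once on validation data. It would then call `pick_config` whenever its compute budget changes. All configurations share one set of trained weights, so switching needs no retraining.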

- Generalizability: it adapts to a wide range of real-world scenarios without human-specific fine-tuning, whereas previous methods often specialize in specific domains, which limits their applicability.
- State-of-the-art results: our approach achieves state-of-the-art performance on the human activity recognition benchmarks WISDM, UCI-HAR, and UniMiB-SHAR.
- Use our easy-to-use codebase for re-implementation and further development.
- Try our model or deploy it for inference locally.

Requirements:

- Python 3.8
- PyTorch 1.11.0
- NumPy 1.23.4
- PyYAML 5.1

To train on UniMiB-SHAR and WISDM:

```bash
python train.py --model_name='unimib'         # CNN
python train.py --model_name='resnet_unimib'  # ResNet
python train.py --model_name='wisdm'          # CNN
python train.py --model_name='resnet_wisdm'   # ResNet
```

Download the UCI-HAR dataset from the UCI Machine Learning Repository (http://archive.ics.uci.edu/ml/index.php), preprocess it with a sliding-window strategy, and split the data into training and test sets.
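The sliding-window preprocessing and split can be sketched with NumPy. This sketch rests on several assumptions. The raw recording is a `(T, C)` array with one label per sample. The defaults `window=128` and `step=64` mirror UCI-HAR's published 2.56 s windows with 50% overlap at 50 Hz. Each window takes its most frequent label. The split is a seeded random 70/30 split. The function names `sliding_windows` and `split_train_test` are hypothetical and not part of this repository.

```python
import numpy as np


def sliding_windows(signal: np.ndarray, labels: np.ndarray,
                    window: int = 128, step: int = 64):
    """Cut a (T, C) sensor stream into overlapping (window, C) segments.

    Each segment is labelled with its most frequent per-sample label
    (ties go to the smallest label value).
    """
    if signal.shape[0] != labels.shape[0]:
        raise ValueError("signal and labels must have the same length")
    xs, ys = [], []
    for start in range(0, signal.shape[0] - window + 1, step):
        xs.append(signal[start:start + window])
        values, counts = np.unique(labels[start:start + window], return_counts=True)
        ys.append(values[np.argmax(counts)])
    if not xs:  # recording shorter than one window
        return (np.empty((0, window, signal.shape[1]), dtype=signal.dtype),
                np.empty((0,), dtype=labels.dtype))
    return np.stack(xs), np.array(ys)


def split_train_test(x: np.ndarray, y: np.ndarray,
                     test_ratio: float = 0.3, seed: int = 0):
    """Seeded random split of windows into training and test sets."""
    idx = np.random.default_rng(seed).permutation(len(x))
    n_test = int(round(len(x) * test_ratio))
    test_idx, train_idx = idx[:n_test], idx[n_test:]
    return x[train_idx], y[train_idx], x[test_idx], y[test_idx]
```

Neighbouring windows overlap, so a random split can put near-duplicate windows in both sets. Splitting by subject avoids this, and that is how UCI-HAR's official partition is built.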

```bash
python train.py --model_name='uci'         # CNN
python train.py --model_name='resnet_uci'  # ResNet
```

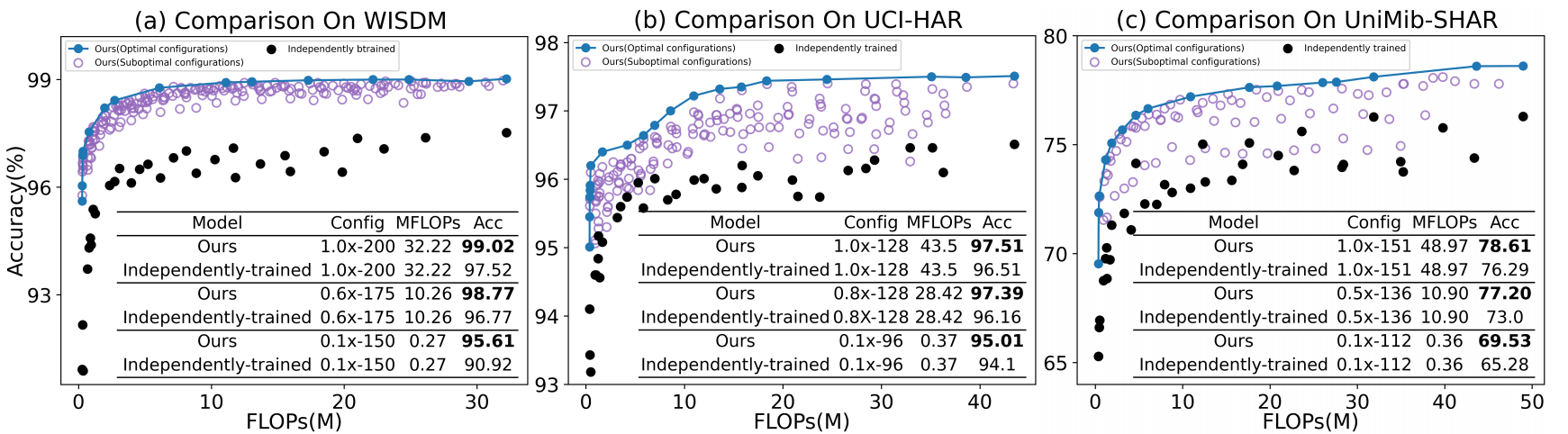

| Model | WISDM | UCI-HAR | UniMiB-SHAR |
|---|---|---|---|
| CNN | 97.10% & 11.21M | 95.95% & 13.06M | 75.49% & 15.49M |
| Ours (CNN) | 98.71% & 11.21M | 97.00% & 13.06M | 78.13% & 15.49M |
| ResNet | 97.52% & 32.22M | 96.51% & 43.5M | 76.29% & 48.97M |
| Ours (ResNet) | 99.12% & 32.22M | 97.64% & 43.5M | 78.91% & 48.97M |

For any questions on this project, please contact Lutong Qin (211843003@njnu.edu.cn)