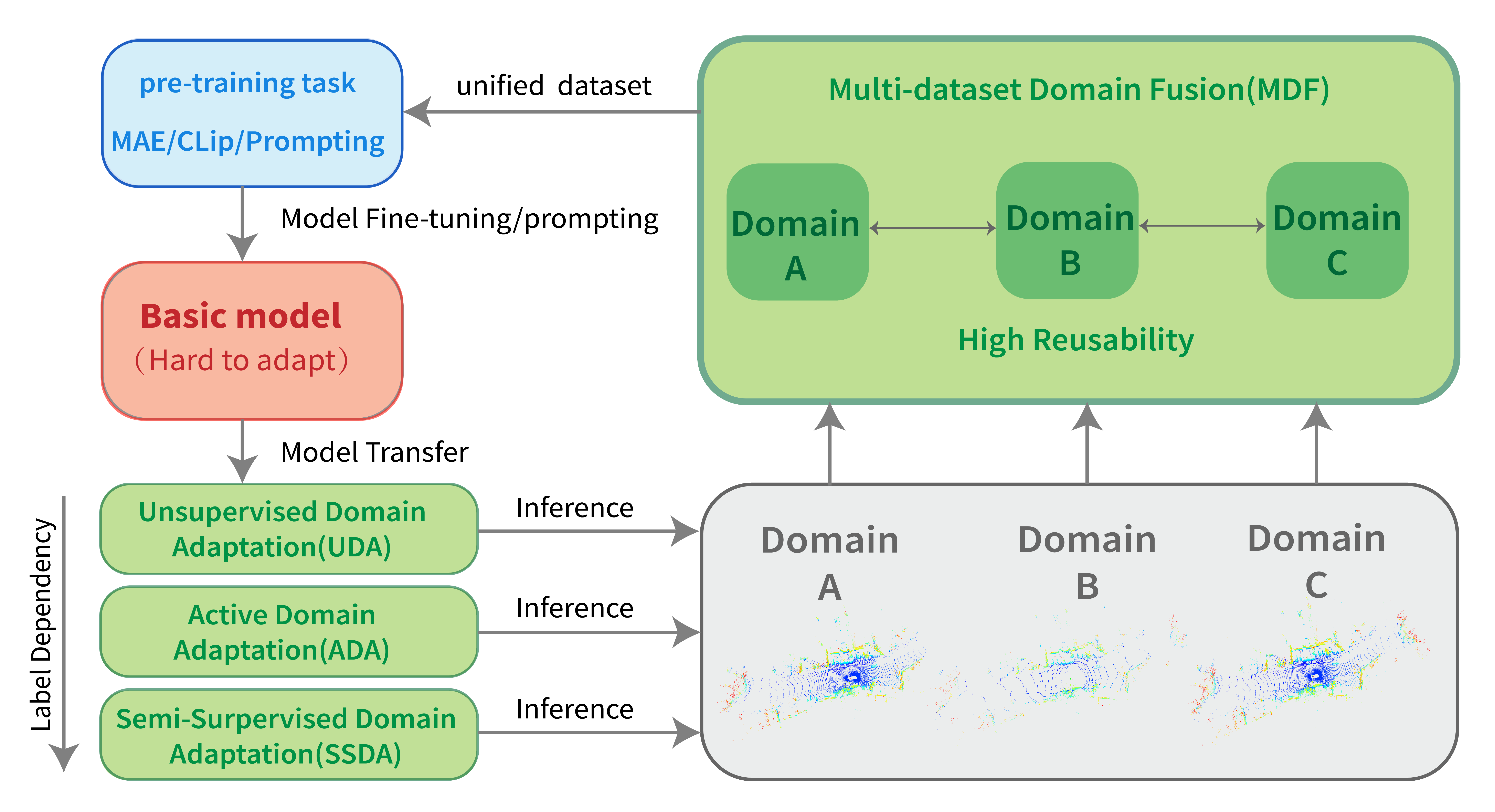

3DTrans is a lightweight, simple, self-contained open-source codebase for exploring autonomous driving-oriented transfer learning techniques. It currently provides four main functions:

- Unsupervised Domain Adaptation (UDA) for 3D Point Clouds

- Active Domain Adaptation (ADA) for 3D Point Clouds

- Semi-Supervised Domain Adaptation (SSDA) for 3D Point Clouds

- Multi-dataset Domain Fusion (MDF) for 3D Point Clouds

This project is developed and maintained by the Autonomous Driving Group at Shanghai AI Laboratory (ADLab).

- Update News

- TODO List

- 3DTrans Framework Introduction

- Installation for 3DTrans

- Getting Started

- Model Zoo:

- Point Cloud Pre-training for Autonomous Driving Task

- Visualization Tools for 3DTrans

- Acknowledge

- Citation

- Two papers developed using our 3DTrans were accepted by CVPR 2023: Uni3D and Bi3D (updated in Mar. 2023).

- 3DTrans supports Semi-Supervised Domain Adaptation (SSDA) for 3D Object Detection (updated in Nov. 2022).

- 3DTrans supports Active Domain Adaptation (ADA) for 3D Object Detection, achieving a good trade-off between high performance and annotation cost (updated in Oct. 2022).

- 3DTrans supports several typical transfer learning techniques (such as TQS, CLUE, SN, ST3D, Pseudo-labeling, SESS, and Mean-Teacher) for autonomous driving-related model adaptation and transfer.

- 3DTrans supports Multi-domain Dataset Fusion (MDF) for 3D Object Detection, enabling existing 3D models to learn effectively from multiple off-the-shelf 3D datasets (updated in Sep. 2022).

- 3DTrans supports Unsupervised Domain Adaptation (UDA) for 3D Object Detection, for deploying a well-trained source model to an unlabeled target domain (updated in July 2022).

- 3DTrans supports self-learning on unlabeled point clouds and new data augmentation operations (such as Random Object Scaling (ROS) and Statistical Normalization (SN)), which can achieve a performance boost based on the dataset prior of object-size statistics.

- 3DTrans was initialized based on the OpenPCDet-v0.5.2 version (updated in May 2022).

🚀 We are actively updating this repository. In the future, 3DTrans will support more cross-dataset fusion solutions (including domain attention and mixture-of-experts) and more low-cost data sampling strategies, with the aim of boosting the generalization ability and adaptability of existing state-of-the-art models. 🚀

We expect this repository will inspire the research of 3D model generalization since it will push the limits of perceptual performance. 🗼

- For the ADA module, add a sequence-level data selection policy (to meet the requirements of the practical annotation process).

- Provide experimental findings for AD-related 3D pre-training (our ongoing research, which currently achieves promising pre-training results on downstream tasks by exploiting large-scale unlabeled data in the ONCE dataset using 3DTrans).

- Rapid Target-domain Adaptation: 3DTrans can boost a 3D perception model's adaptability to an unseen domain using only unlabeled data. For example, ST3D as supported by the 3DTrans repository achieves state-of-the-art model transfer performance on many adaptation tasks, further boosting the transferability of detection models. In addition, 3DTrans develops several new UDA techniques to address different types of domain shift (such as LiDAR-induced shifts or object-size-induced shifts), including Pre-SN, Post-SN, and range-map downsampling retraining.

- Annotation-saving Target-domain Transfer: 3DTrans can select the most informative subset of an unseen domain and label it at minimum cost. For example, 3DTrans develops Bi3D, which selects partial-yet-important target data and labels them at minimum cost, achieving a good trade-off between high performance and low annotation cost. In addition, 3DTrans has integrated several typical transfer learning techniques into the 3D object detection pipeline, namely TQS, CLUE, SN, ST3D, Pseudo-labeling, SESS, and Mean-Teacher, to support autonomous driving-related model transfer.

- Joint Training on Multiple 3D Datasets: 3DTrans can perform the multi-dataset 3D object detection task. For example, 3DTrans develops Uni3D for multi-dataset 3D object detection, which enables current 3D baseline models to learn effectively from multiple off-the-shelf 3D datasets, boosting the reusability of 3D data from different autonomous driving manufacturers.

- Multi-dataset Support: 3DTrans provides a unified interface of dataloader, data augmentor, and data processor for multiple public benchmarks, including Waymo, nuScenes, ONCE, Lyft, and KITTI, which makes it easier to study the transferability and generality of 3D perception models across datasets. In addition, to reduce the domain gaps between different manufacturers and obtain generalizable representations, 3DTrans has integrated typical unlabeled pre-training techniques that give current 3D baseline models a better parameter initialization. For example, we integrate PointContrast and SESS to support the point cloud-based pre-training task.

- Extensibility for Multiple Models: 3DTrans gives a baseline model the ability to perform safe cross-domain/dataset transfer and multi-dataset joint training. Without major changes to the code or the 3D model structure, a single-dataset 3D baseline model can be adapted to an unseen domain or dataset using 3DTrans.

- 3DTrans is developed based on the OpenPCDet codebase and can be easily integrated with models developed using the OpenPCDet repository. Thanks for their valuable open-sourcing!

You may refer to INSTALL.md for the installation of 3DTrans.

- Please refer to Readme for Datasets to prepare the dataset and convert the data into the 3DTrans format. 3DTrans also supports reading and writing data from Ceph Petrel-OSS; please refer to Readme for Datasets for more details.

- Please refer to Readme for UDA to understand the problem definition of UDA and to perform the UDA adaptation process.

- Please refer to Readme for ADA to understand the problem definition of ADA and to perform the ADA adaptation process.

- Please refer to Readme for SSDA to understand the problem definition of SSDA and to perform the SSDA adaptation process.

- Please refer to Readme for MDF to understand the problem definition of MDF and to perform the MDF joint-training process.

We cannot provide the Waymo-related pretrained models due to the Waymo Dataset License Agreement, but you can achieve similar performance by training with the corresponding configs.

Here, we report the cross-dataset (Waymo-to-KITTI) adaptation results using the BEV/3D AP performance as the evaluation metric. Please refer to Readme for UDA for experimental results of more cross-domain settings.

- All LiDAR-based models are trained with 4 NVIDIA A100 GPUs and are available for download.

- For Waymo dataset training, we train the model using 20% data.

- The domain adaptation time is measured with 4 NVIDIA A100 GPUs and PyTorch 1.8.1.

- Pre-SN means that we perform the SN (statistical normalization) operation while pre-training the source-only model.

- Post-SN means that we perform the SN (statistical normalization) operation during the adaptation stage.
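As a rough illustration of the idea behind SN, the sketch below shifts every source-domain box size by the gap between the target and source mean sizes, so that the source labels match the target object-size statistics. It is a minimal sketch under stated assumptions, not the 3DTrans implementation: the `Box` type, the function names, and the numbers in any call are hypothetical, and the step of rescaling the points that fall inside each box is omitted.

```python
from dataclasses import dataclass
from statistics import fmean
from typing import List, Tuple

Size = Tuple[float, float, float]  # (length, width, height) in meters


@dataclass(frozen=True)
class Box:
    """Hypothetical 3D box annotation: center, size, and heading angle."""
    center: Tuple[float, float, float]
    size: Size
    heading: float


def mean_size(boxes: List[Box]) -> Size:
    """Per-dimension mean object size over a list of boxes."""
    return tuple(fmean(b.size[i] for b in boxes) for i in range(3))


def normalize_sizes(source_boxes: List[Box], target_mean: Size) -> List[Box]:
    """Shift each source box size by (target mean - source mean).

    Centers and headings are left untouched; only the size statistics move.
    """
    source_mean = mean_size(source_boxes)
    delta = tuple(t - s for t, s in zip(target_mean, source_mean))
    return [
        Box(b.center, tuple(v + d for v, d in zip(b.size, delta)), b.heading)
        for b in source_boxes
    ]
```

Under the definitions above, Pre-SN would apply a transform of this kind while the source-only model is trained, and Post-SN would apply it during the adaptation stage.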

| Model | training time | Adaptation | Car@R40 | download |

|---|---|---|---|---|

| PointPillar | ~7.1 hours | Source-only with SN | 74.98 / 49.31 | - |

| PointPillar | ~0.6 hours | Pre-SN | 81.71 / 57.11 | model-57M |

| PV-RCNN | ~23 hours | Source-only with SN | 69.92 / 60.17 | - |

| PV-RCNN | ~23 hours | Source-only | 74.42 / 40.35 | - |

| PV-RCNN | ~3.5 hours | Pre-SN | 84.00 / 74.57 | model-156M |

| PV-RCNN | ~1 hours | Post-SN | 84.94 / 75.20 | model-156M |

| Voxel R-CNN | ~16 hours | Source-only with SN | 75.83 / 55.50 | - |

| Voxel R-CNN | ~16 hours | Source-only | 64.88 / 19.90 | - |

| Voxel R-CNN | ~2.5 hours | Pre-SN | 82.56 / 67.32 | model-201M |

| Voxel R-CNN | ~2.2 hours | Post-SN | 85.44 / 76.78 | model-201M |

| PV-RCNN++ | ~20 hours | Source-only with SN | 67.22 / 56.50 | - |

| PV-RCNN++ | ~20 hours | Source-only | 67.68 / 20.82 | - |

| PV-RCNN++ | ~2.2 hours | Post-SN | 86.86 / 79.86 | model-193M |
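The `Car@R40` column is not defined in this README. Assuming it refers to average precision computed over 40 recall positions, as used by the KITTI benchmark, the metric can be sketched as follows. The function name and the input format (a list of `(recall, precision)` pairs) are hypothetical and chosen only for illustration.

```python
from typing import Sequence, Tuple


def average_precision_r40(pr_points: Sequence[Tuple[float, float]]) -> float:
    """Interpolated AP over the 40 recall levels 1/40, 2/40, ..., 1.

    pr_points holds (recall, precision) pairs from a precision-recall curve.
    At each recall level, the interpolated precision is the best precision
    achieved at any recall greater than or equal to that level (0 if none).
    """
    recall_levels = [i / 40 for i in range(1, 41)]
    total = 0.0
    for level in recall_levels:
        reachable = [p for r, p in pr_points if r >= level]
        total += max(reachable, default=0.0)
    return total / len(recall_levels)
```

The tables report this kind of score as a percentage, once for BEV boxes and once for 3D boxes.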

Here, we report the Waymo-to-KITTI adaptation results using the BEV/3D AP performance. Please refer to Readme for ADA for experimental results of more cross-domain settings.

- All LiDAR-based models are trained with 4 NVIDIA A100 GPUs and are available for download.

- For Waymo dataset training, we train the model using 20% data.

- The domain adaptation time is measured with 4 NVIDIA A100 GPUs and PyTorch 1.8.1.

| Model | training time | Adaptation | Car@R40 | download |

|---|---|---|---|---|

| PV-RCNN | ~23h@4 A100 | Source Only | 67.95 / 27.65 | - |

| PV-RCNN | ~1.5h@2 A100 | Bi3D (1% annotation budget) | 87.12 / 78.03 | Model-58M |

| PV-RCNN | ~10h@2 A100 | Bi3D (5% annotation budget) | 89.53 / 81.32 | Model-58M |

| PV-RCNN | ~1.5h@2 A100 | TQS | 82.00 / 72.04 | Model-58M |

| PV-RCNN | ~1.5h@2 A100 | CLUE | 82.13 / 73.14 | Model-50M |

| PV-RCNN | ~10h@2 A100 | Bi3D+ST3D | 87.83 / 81.23 | Model-58M |

| Voxel R-CNN | ~16h@4 A100 | Source Only | 64.87 / 19.90 | - |

| Voxel R-CNN | ~1.5h@2 A100 | Bi3D (1% annotation budget) | 88.09 / 79.14 | Model-72M |

| Voxel R-CNN | ~6h@2 A100 | Bi3D (5% annotation budget) | 90.18 / 81.34 | Model-72M |

| Voxel R-CNN | ~1.5h@2 A100 | TQS | 78.26 / 67.11 | Model-72M |

| Voxel R-CNN | ~1.5h@2 A100 | CLUE | 81.93 / 70.89 | Model-72M |
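To make the notion of an annotation budget concrete, the sketch below picks the highest-scoring fraction of target frames for labeling. It is a generic budgeted top-k selection under stated assumptions, not the Bi3D, TQS, or CLUE criterion: the per-frame informativeness scores are taken as given, and the function name and frame IDs are hypothetical.

```python
import math
from typing import Dict, List


def select_for_annotation(scores: Dict[str, float], budget: float) -> List[str]:
    """Return the frame IDs to label, given scores and a budget fraction.

    scores maps a frame ID to a (hypothetical) informativeness score;
    budget is the fraction of frames that may be annotated, e.g. 0.01 for 1%.
    """
    if not 0.0 < budget <= 1.0:
        raise ValueError("budget must be in (0, 1]")
    # Round before ceil so float noise such as 2.0000000001 does not add a frame.
    k = max(1, math.ceil(round(budget * len(scores), 9)))
    # Highest score first; ties are broken by frame ID for determinism.
    ranked = sorted(scores, key=lambda frame_id: (-scores[frame_id], frame_id))
    return ranked[:k]
```

With 200 target frames, a 1% budget selects 2 frames and a 5% budget selects 10.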

We report the target domain results on Waymo-to-nuScenes adaptation using the BEV/3D AP performance as the evaluation metric, and Waymo-to-ONCE adaptation using ONCE evaluation metric. Please refer to Readme for SSDA for experimental results of more cross-domain settings.

- For Waymo-to-nuScenes adaptation, we employ 4 NVIDIA A100 GPUs for model training.

- The domain adaptation time is measured with 4 NVIDIA A100 GPUs and PyTorch 1.8.1.

- For Waymo dataset training, we train the model using 20% data.

- second_5%_FT denotes that we use 5% nuScenes training data to fine-tune the Second model.

- second_5%_SESS denotes that we utilize the SESS: Self-Ensembling Semi-Supervised method to adapt our baseline model.

- second_5%_PS denotes that we fine-tune the source-only model on the nuScenes dataset using 5% labeled data, and perform the pseudo-labeling process on the remaining 95% unlabeled nuScenes data.

| Model | training time | Adaptation | Car@R40 | download |

|---|---|---|---|---|

| Second | ~11 hours | source-only(Waymo) | 27.85 / 16.43 | - |

| Second | ~0.4 hours | second_5%_FT | 45.95 / 26.98 | model-61M |

| Second | ~1.8 hours | second_5%_SESS | 47.77 / 28.74 | model-61M |

| Second | ~1.7 hours | second_5%_PS | 47.72 / 29.37 | model-61M |

| PV-RCNN | ~24 hours | source-only(Waymo) | 40.31 / 23.32 | - |

| PV-RCNN | ~1.0 hours | pvrcnn_5%_FT | 49.58 / 34.86 | model-150M |

| PV-RCNN | ~5.5 hours | pvrcnn_5%_SESS | 49.92 / 35.28 | model-150M |

| PV-RCNN | ~5.4 hours | pvrcnn_5%_PS | 49.84 / 35.07 | model-150M |

| PV-RCNN++ | ~16 hours | source-only(Waymo) | 31.96 / 19.81 | - |

| PV-RCNN++ | ~1.2 hours | pvplus_5%_FT | 49.94 / 34.28 | model-185M |

| PV-RCNN++ | ~4.2 hours | pvplus_5%_SESS | 51.14 / 35.25 | model-185M |

| PV-RCNN++ | ~3.6 hours | pvplus_5%_PS | 50.84 / 35.39 | model-185M |
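The pseudo-labeling step behind the `*_5%_PS` rows can be pictured as confidence filtering: the fine-tuned model predicts on unlabeled frames, and only confident detections are kept as training labels. The sketch below shows that filtering step only, under stated assumptions: the `Detection` type, the function name, and the `min_score` threshold of 0.6 are hypothetical and are not taken from the 3DTrans configs.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Detection:
    """Hypothetical model prediction for one object."""
    label: str
    score: float             # detection confidence in [0, 1]
    box: Tuple[float, ...]   # e.g. (x, y, z, length, width, height, heading)


def make_pseudo_labels(
    predictions: Dict[str, List[Detection]],
    min_score: float = 0.6,  # assumed threshold, for illustration only
) -> Dict[str, List[Detection]]:
    """Keep detections with score >= min_score as pseudo-labels.

    Frames with no confident detection are dropped from the result.
    """
    pseudo_labels: Dict[str, List[Detection]] = {}
    for frame_id, detections in predictions.items():
        kept = [d for d in detections if d.score >= min_score]
        if kept:
            pseudo_labels[frame_id] = kept
    return pseudo_labels
```

The model would then be re-trained on the labeled 5% together with the pseudo-labeled frames.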

- For Waymo-to-ONCE adaptation, we employ 8 NVIDIA A100 GPUs for model training.

- PS denotes that we pseudo-label the unlabeled ONCE data and re-train the model on the pseudo-labeled data.

- SESS denotes that we utilize the SESS method to adapt the baseline.

- For ONCE, the IoU thresholds for evaluation are 0.7, 0.3, 0.5 for Vehicle, Pedestrian, Cyclist.

| Model | Training ONCE Data | Methods | Vehicle@AP | Pedestrian@AP | Cyclist@AP | download |

|---|---|---|---|---|---|---|

| Centerpoint | Labeled (4K) | Train from scratch | 74.93 | 46.21 | 67.36 | model-96M |

| Centerpoint_Pede | Labeled (4K) | PS | - | 49.14 | - | model-96M |

| PV-RCNN++ | Labeled (4K) | Train from scratch | 79.78 | 35.91 | 63.18 | model-188M |

| PV-RCNN++ | Small Dataset (100K) | SESS | 80.02 | 46.24 | 66.41 | model-188M |
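The per-class IoU thresholds listed for ONCE can be written down as a small lookup, shown here as a minimal sketch. The threshold values come from the notes above; the function name is hypothetical, and treating a detection whose IoU exactly equals the threshold as a match is an assumption.

```python
# IoU thresholds for ONCE evaluation, as stated in the notes above.
ONCE_IOU_THRESHOLDS = {
    "Vehicle": 0.7,
    "Pedestrian": 0.3,
    "Cyclist": 0.5,
}


def is_true_positive(class_name: str, iou: float) -> bool:
    """Whether a detection matches a ground-truth box of the same class.

    The inclusive comparison (>=) is an assumption made for this sketch.
    """
    return iou >= ONCE_IOU_THRESHOLDS[class_name]
```

The lower thresholds for Pedestrian and Cyclist mean that the same overlap can count as a match for those classes while being rejected for Vehicle.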

Here, we report the Waymo-and-nuScenes consolidation results. The models are jointly trained on the Waymo and nuScenes datasets, and evaluated on Waymo using the mAP/mAPH LEVEL_2 metrics and on nuScenes using the BEV/3D AP. Please refer to Readme for MDF for more results.

- All LiDAR-based models are trained with 8 NVIDIA A100 GPUs and are available for download.

- The multi-domain dataset fusion (MDF) training time is measured with 8 NVIDIA A100 GPUs and PyTorch 1.8.1.

- For Waymo dataset training, we train the model using 20% training data for saving training time.

- PV-RCNN-nuScenes represents that we train the PV-RCNN model only using nuScenes dataset, and PV-RCNN-DM indicates that we merge the Waymo and nuScenes datasets and train on the merged dataset. Besides, PV-RCNN-DT denotes the domain attention-aware multi-dataset training.

| Baseline | MDF Methods | Waymo@Vehicle | Waymo@Pedestrian | Waymo@Cyclist | nuScenes@Car | nuScenes@Pedestrian | nuScenes@Cyclist |

|---|---|---|---|---|---|---|---|

| PV-RCNN-nuScenes | only nuScenes | 35.59 / 35.21 | 3.95 / 2.55 | 0.94 / 0.92 | 57.78 / 41.10 | 24.52 / 18.56 | 10.24 / 8.25 |

| PV-RCNN-Waymo | only Waymo | 66.49 / 66.01 | 64.09 / 58.06 | 62.09 / 61.02 | 32.99 / 17.55 | 3.34 / 1.94 | 0.02 / 0.01 |

| PV-RCNN-DM | Direct Merging | 57.82 / 57.40 | 48.24 / 42.81 | 54.63 / 53.64 | 48.67 / 30.43 | 12.66 / 8.12 | 1.67 / 1.04 |

| PV-RCNN-Uni3D | Uni3D | 66.98 / 66.50 | 65.70 / 59.14 | 61.49 / 60.43 | 60.77 / 42.66 | 27.44 / 21.85 | 13.50 / 11.87 |

| PV-RCNN-DT | Domain Attention | 67.27 / 66.77 | 65.86 / 59.38 | 61.38 / 60.34 | 60.83 / 43.03 | 27.46 / 22.06 | 13.82 / 11.52 |

| Baseline | MDF Methods | Waymo@Vehicle | Waymo@Pedestrian | Waymo@Cyclist | nuScenes@Car | nuScenes@Pedestrian | nuScenes@Cyclist |

|---|---|---|---|---|---|---|---|

| Voxel-RCNN-nuScenes | only nuScenes | 31.89 / 31.65 | 3.74 / 2.57 | 2.41 / 2.37 | 53.63 / 39.05 | 22.48 / 17.85 | 10.86 / 9.70 |

| Voxel-RCNN-Waymo | only Waymo | 67.05 / 66.41 | 66.75 / 60.83 | 63.13 / 62.15 | 34.10 / 17.31 | 2.99 / 1.69 | 0.05 / 0.01 |

| Voxel-RCNN-DM | Direct Merging | 58.26 / 57.87 | 52.72 / 47.11 | 50.26 / 49.50 | 51.40 / 31.68 | 15.04 / 9.99 | 5.40 / 3.87 |

| Voxel-RCNN-Uni3D | Uni3D | 66.76 / 66.29 | 66.62 / 60.51 | 63.36 / 62.42 | 60.18 / 42.23 | 30.08 / 24.37 | 14.60 / 12.32 |

| Voxel-RCNN-DT | Domain Attention | 66.96 / 66.50 | 68.23 / 62.00 | 62.57 / 61.64 | 60.42 / 42.81 | 30.49 / 24.92 | 15.91 / 13.35 |

| Baseline | MDF Methods | Waymo@Vehicle | Waymo@Pedestrian | Waymo@Cyclist | nuScenes@Car | nuScenes@Pedestrian | nuScenes@Cyclist |

|---|---|---|---|---|---|---|---|

| PV-RCNN++-DM | Direct Merging | 63.79 / 63.38 | 55.03 / 49.75 | 59.88 / 58.99 | 50.91 / 31.46 | 17.07 / 12.15 | 3.10 / 2.20 |

| PV-RCNN++-Uni3D | Uni3D | 68.55 / 68.08 | 69.83 / 63.60 | 64.90 / 63.91 | 62.51 / 44.16 | 33.82 / 27.18 | 22.48 / 19.30 |

| PV-RCNN++-DT | Domain Attention | 68.51 / 68.05 | 69.81 / 63.58 | 64.39 / 63.43 | 62.33 / 44.16 | 33.44 / 26.94 | 21.64 / 18.52 |
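For intuition, the Direct Merging baseline in the tables above can be pictured as concatenating both datasets under one shared label space and training a single model on the result. The sketch below shows only that merging step, under stated assumptions: the `Sample` type, the `LABEL_MAP` class mapping, and the function name are hypothetical and do not reproduce the 3DTrans dataloader or its actual class mapping.

```python
from typing import Dict, List, NamedTuple


class Sample(NamedTuple):
    """Hypothetical annotated frame: source dataset, frame ID, object classes."""
    dataset: str
    frame_id: str
    labels: List[str]


# Assumed mapping from dataset-specific class names to a shared label space.
LABEL_MAP: Dict[str, Dict[str, str]] = {
    "waymo": {"Vehicle": "Vehicle", "Pedestrian": "Pedestrian", "Cyclist": "Cyclist"},
    "nuscenes": {"car": "Vehicle", "pedestrian": "Pedestrian", "bicycle": "Cyclist"},
}


def merge_datasets(datasets: Dict[str, List[Sample]]) -> List[Sample]:
    """Concatenate datasets, renaming classes to the shared label space.

    Classes without an entry in LABEL_MAP are dropped from the sample.
    """
    merged: List[Sample] = []
    for name, samples in datasets.items():
        mapping = LABEL_MAP[name]
        for sample in samples:
            unified = [mapping[c] for c in sample.labels if c in mapping]
            merged.append(Sample(name, sample.frame_id, unified))
    return merged
```

In the PV-RCNN and Voxel-RCNN tables above, Direct Merging scores below the corresponding single-dataset baseline on that baseline's own dataset, while the Uni3D and Domain Attention rows recover or exceed those baselines on most columns.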

Based on our research progress on the cross-domain adaptation of multiple autonomous driving datasets, we can utilize the multi-source datasets for performing the pre-training task. Here, we present several unsupervised and self-supervised pre-training implementations (including PointContrast).

- Please refer to Readme for point cloud pre-training to start the journey of 3D perception model pre-training.

- 💪 💪 We are actively exploring the possibility of boosting the 3D pre-training generalization ability. The corresponding code and pre-training checkpoints are coming soon in 3DTrans-v0.2.0.

- 3DTrans supports the sequence-level visualization function Quick Sequence Demo, which continuously displays the prediction results or ground truth of a selected scene.

- Visualization Demo:

- Our code is heavily based on OpenPCDet v0.5.2. Thanks to the OpenPCDet Development Team for their awesome codebase.

- Our pre-training 3D point cloud task is based on the ONCE Dataset. Thanks to the ONCE Development Team for their inspiring data release.

Our Papers:

- Uni3D: A Unified Baseline for Multi-dataset 3D Object Detection

- Bi3D: Bi-domain Active Learning for Cross-domain 3D Object Detection

- UniDA3D: Unified Domain Adaptive 3D Semantic Segmentation Pipeline

Our Team:

- A Team Home for Member Information and Profile, Project Link

- See SUG for exploring the possibilities of adapting a model to multiple unseen domains and studying how to leverage the features' multi-modal information residing in a single dataset. You can also use SUG to obtain generalizable features from a single dataset, which can achieve good results on unseen domains or datasets.

If you find this project useful in your research, please consider citing:

@inproceedings{zhang2023uni3d,

title={Uni3D: A Unified Baseline for Multi-dataset 3D Object Detection},

author={Bo Zhang and Jiakang Yuan and Botian Shi and Tao Chen and Yikang Li and Yu Qiao},

booktitle={CVPR},

year={2023},

}

@inproceedings{yuan2023bi3d,

title={Bi3D: Bi-domain Active Learning for Cross-domain 3D Object Detection},

author={Jiakang Yuan and Bo Zhang and Xiangchao Yan and Tao Chen and Botian Shi and Yikang Li and Yu Qiao},

booktitle={CVPR},

year={2023},

}

@article{fei2023unida3d,

title={UniDA3D: Unified Domain Adaptive 3D Semantic Segmentation Pipeline},

author={Ben Fei and Siyuan Huang and Jiakang Yuan and Botian Shi and Bo Zhang and Weidong Yang and Min Dou and Yikang Li},

journal={arXiv preprint arXiv:2212.10390},

year={2023}

}