Use behavior tree and DMP for upper-level task planning.

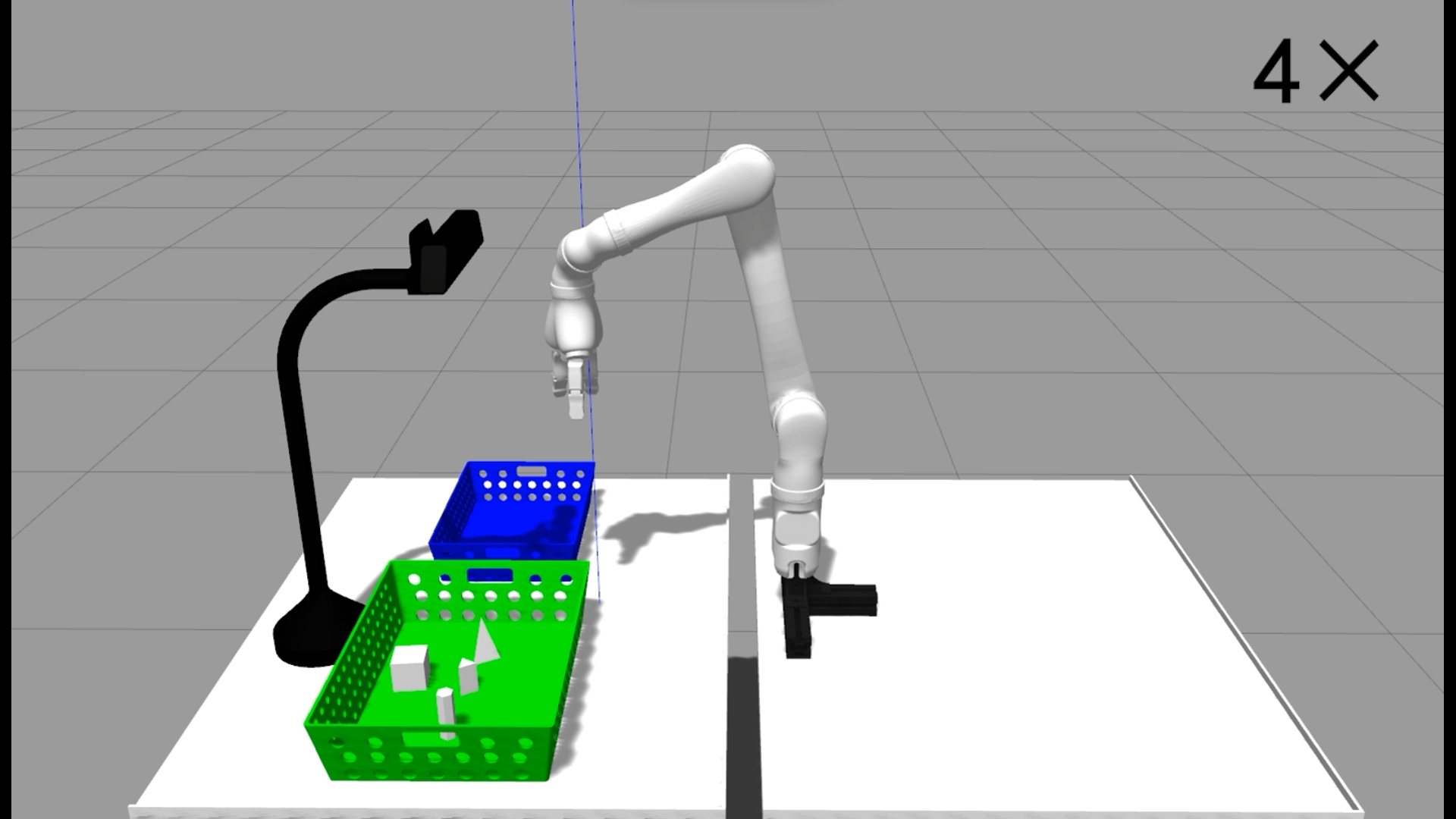

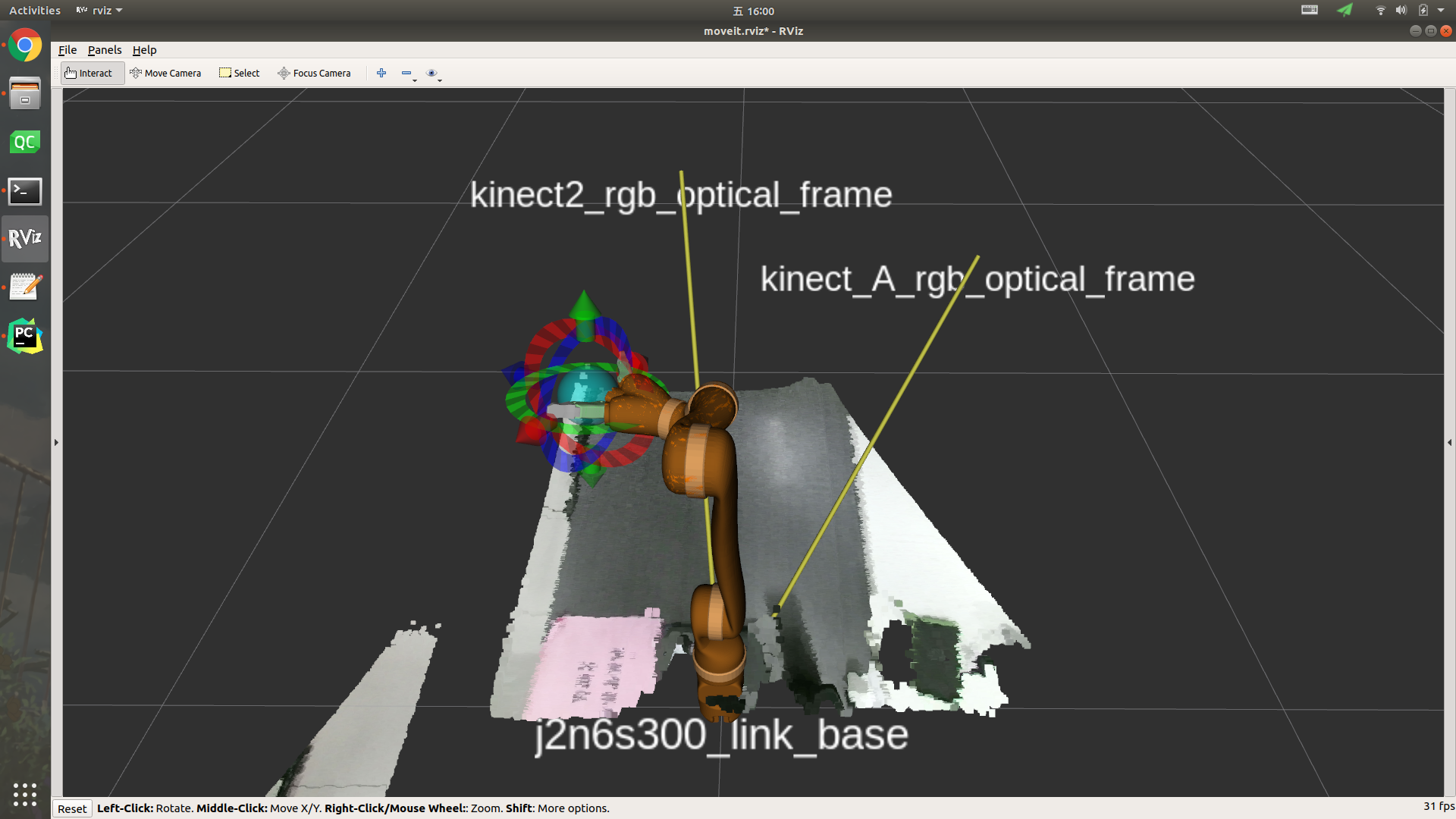

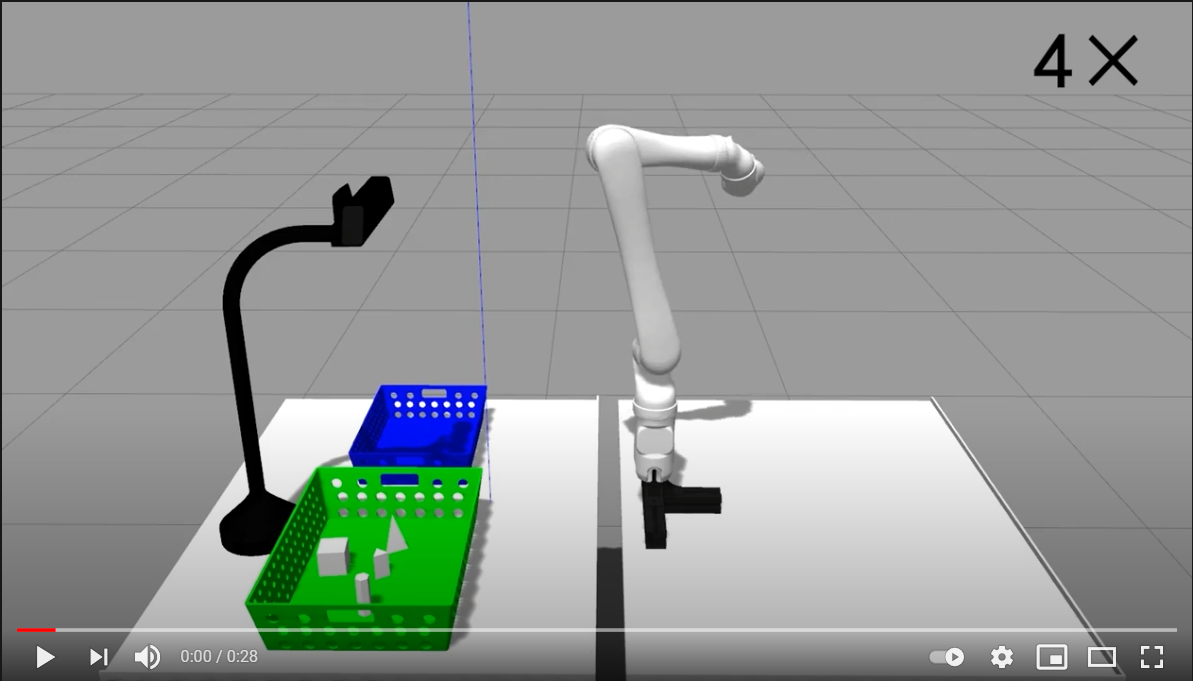

This repository provides a simulation environment for upper-level task planning of the Kinova Jaco2 robot arm, based on dynamic movement primitives (DMP) and behavior trees.

This project was developed on ROS Melodic under Ubuntu 18.04. The simulation environment builds on the JCAR-Competition project by @freeman-1995, with the Kinova Jaco v3 robot arm model replaced by the Kinova Jaco v2 model.

This package was tested on Ubuntu 18.04 with ROS Melodic and an RTX 2060 GPU.

System environment: Point Cloud Library (PCL) 1.8.1, VTK 7.1.1, and OpenCV 4.1.2, all installed from source.

Enable the GPU options when compiling these three libraries.

The package find-object is used for object recognition.

The behavior tree framework plans tasks over the segmented dynamic movement primitives.

Compile the package

- Clone the repository.

git clone https://github.com/Lygggggg/Upper-level-task-planning-of-Jaco-based-on-behavior-tree

cd Upper-level-task-planning-of-Jaco-based-on-behavior-tree

- Go to the package JCAR-Competition-master/robot_grasp and open its CMakeLists.txt. Comment out all the add_executable and target_link_libraries entries.

- Build the workspace with catkin_make.

cd Upper-level-task-planning-of-Jaco-based-on-behavior-tree

catkin_make

- Restore the entries that were commented out earlier.

- Build the workspace with catkin_make again.

catkin_make

Run
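The two-pass build above (comment out the targets, build, restore, build again) could be scripted roughly as follows. This is only a sketch, not part of the repository: the function names `comment_targets` and `two_pass_build` are hypothetical, and it assumes every `add_executable` or `target_link_libraries` call ends on the first line that contains `)`.

```shell
#!/bin/sh
# Sketch of the two-pass build; helper names are illustrative only.
# Assumption: each CMake call ends on the first line that contains ")".

# Print FILE with every add_executable(...) and target_link_libraries(...)
# call commented out, including calls that span several lines.
comment_targets() {
  awk '
    /^[ \t]*(add_executable|target_link_libraries)[ \t]*\(/ { in_call = 1 }
    in_call { print "#" $0; if ($0 ~ /\)/) in_call = 0; next }
    { print }
  ' "$1"
}

# Two-pass build. $1 is the catkin workspace root, $2 the package directory
# that holds CMakeLists.txt (both supplied by the caller).
two_pass_build() {
  ws=$1
  pkg=$2
  cp "$pkg/CMakeLists.txt" "$pkg/CMakeLists.txt.bak" || return 1
  comment_targets "$pkg/CMakeLists.txt.bak" > "$pkg/CMakeLists.txt"
  (cd "$ws" && catkin_make)
  first_pass=$?
  # Restore the original file even if the first pass failed.
  mv "$pkg/CMakeLists.txt.bak" "$pkg/CMakeLists.txt"
  [ "$first_pass" -eq 0 ] || return "$first_pass"
  (cd "$ws" && catkin_make)
}
```

After sourcing the script, a call would take the workspace root and the path to the robot_grasp package as its two arguments. Keeping a backup copy instead of un-commenting lines in place means the original CMakeLists.txt is restored byte for byte.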

- Start the Gazebo simulation environment.

roslaunch robot_grasp START_Gazebo.launch

- Start Rviz.

roslaunch robot_grasp START_Moveit.launch

- Load the object to be grabbed into the Gazebo simulation environment.

roslaunch robot_grasp load_aruco_object.launch

# or

roslaunch robot_grasp loadall_object_part1.launch

# load_aruco_object.launch : loads the QR code box;

# loadall_object_part1.launch : loads some of the objects.

- Start the find-object package for object recognition, and select the object to obtain its position.

roslaunch find_object_2d find_object_3d_simu.launch

- Send the position of the identified object to the ROS parameter server.

rosrun robot_grasp find_and_pub

- Start the behavior tree for object pick and place.

roslaunch behavior_tree_leaves pick_place_tree.launch

# or

roslaunch behavior_tree_leaves new_tree_like_guyue.launch

Simulation experiment video.
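The run steps listed above could be chained in one script instead of being typed into separate terminals. The sketch below is an assumption-laden illustration, not something shipped with this repository: `STEP_DELAY`, `DRY_RUN`, and the function names are hypothetical, the fixed delay between steps is a guess, and it picks loadall_object_part1.launch and pick_place_tree.launch from the alternatives.

```shell
#!/bin/sh
# Sketch: start the run steps in order, each in the background.
# STEP_DELAY (seconds between steps) and DRY_RUN are illustrative settings,
# not options of this repository.
STEP_DELAY=${STEP_DELAY:-10}
DRY_RUN=${DRY_RUN:-0}

# Run one step in the background, or only print it when DRY_RUN=1.
start_step() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@" &
    sleep "$STEP_DELAY"
  fi
}

# Wait for a manual action, or only print a note when DRY_RUN=1.
pause_for_user() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "# pause: $1"
  else
    printf '%s, then press Enter: ' "$1"
    read -r reply
  fi
}

run_pick_place() {
  start_step roslaunch robot_grasp START_Gazebo.launch
  start_step roslaunch robot_grasp START_Moveit.launch
  start_step roslaunch robot_grasp loadall_object_part1.launch
  start_step roslaunch find_object_2d find_object_3d_simu.launch
  # The object has to be selected by hand before its position is published.
  pause_for_user "Select the object in the find-object window"
  start_step rosrun robot_grasp find_and_pub
  start_step roslaunch behavior_tree_leaves pick_place_tree.launch
}
```

Setting `DRY_RUN=1` before calling `run_pick_place` prints the commands in order without starting anything, which is a cheap way to check the sequence first.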

This project exists thanks to all the people who contribute.