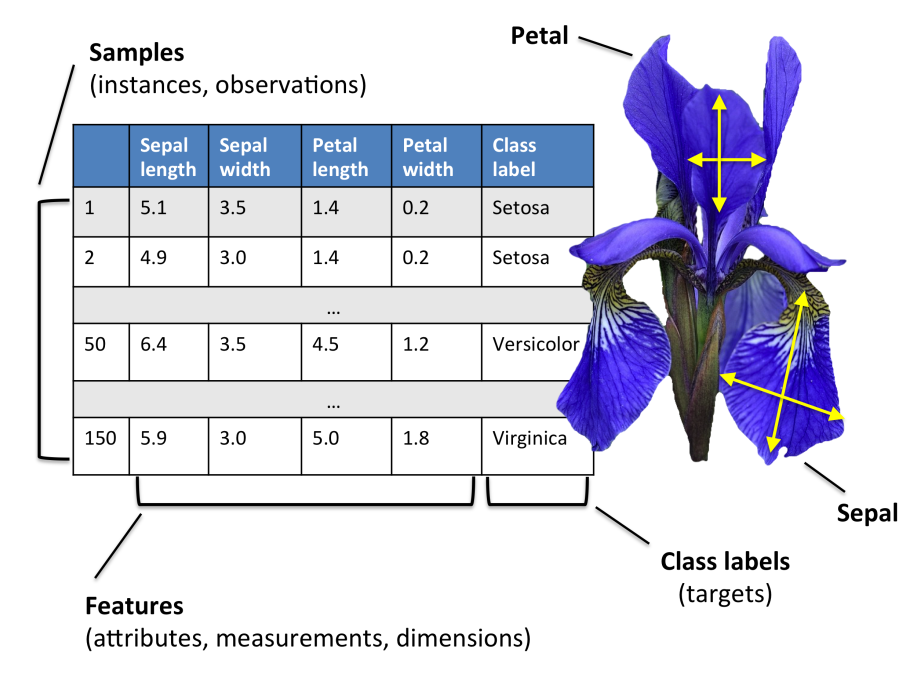

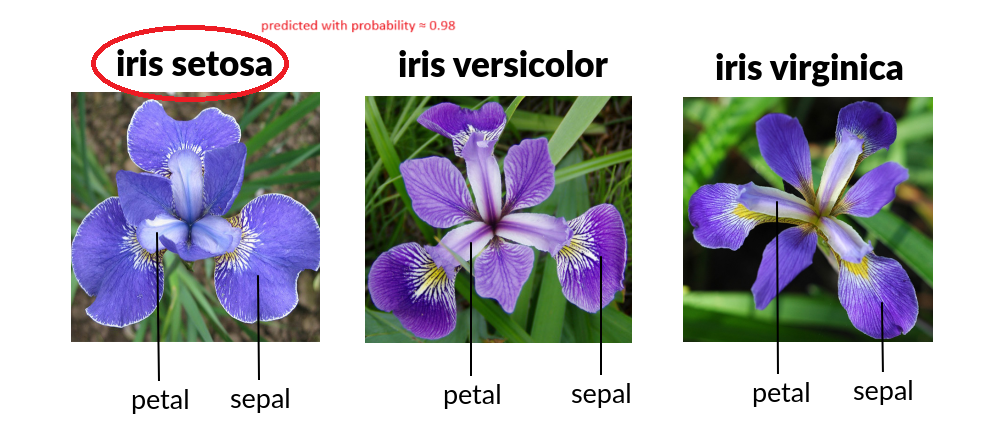

A FastAPI microservice for predicting iris species based on sepal and petal dimensions. This project leverages machine learning to classify iris flowers into their respective species.

To set up the project locally, you can choose between two methods: using Docker or a Python virtual environment.

Using Docker:

- Clone the repository:

      git clone https://github.com/LykourgosS/iris-ml-model-microservice.git
      cd iris-ml-model-microservice

- Build the Docker image:

      docker build -t iris-ml-model-microservice .

- Run the Docker container:

      docker run -d -p 8000:8000 iris-ml-model-microservice

This will start the server at http://localhost:8000. You can then access the API documentation at http://localhost:8000/docs.

Using a Python virtual environment:

- Clone the repository:

      git clone https://github.com/LykourgosS/iris-ml-model-microservice.git
      cd iris-ml-model-microservice

- Create a virtual environment (optional but recommended):

      python -m venv venv
      source venv/bin/activate  # On Windows use `venv\Scripts\activate`

- Install the required dependencies:

      pip install -r requirements.txt

- Train the model and save it as `LRClassifier.pkl`:

      python app/generate-model.py

- Start the FastAPI server:

      uvicorn app.main:app --reload

This will also start the server at http://localhost:8000, and you can access the API documentation at http://localhost:8000/docs.
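The training step above can be sketched roughly as follows. This is a minimal sketch, not the contents of `app/generate-model.py`: it assumes a logistic regression classifier (suggested by the `LR` in the file name), uses scikit-learn's bundled iris dataset rather than whatever data file the project loads, and the helper name `train_and_save` is hypothetical.

```python
import pickle
from pathlib import Path

from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression


def train_and_save(model_path: str = "LRClassifier.pkl") -> Path:
    """Fit a classifier on the iris data and pickle it (hypothetical helper)."""
    iris = load_iris()
    # Use string labels so the model predicts species names directly.
    # Assumption: the "Iris-" prefix mirrors the label format in the sample response.
    labels = [f"Iris-{iris.target_names[t]}" for t in iris.target]

    # Feature order: sepal length, sepal width, petal length, petal width.
    model = LogisticRegression(max_iter=1000)
    model.fit(iris.data, labels)

    path = Path(model_path)
    with path.open("wb") as f:
        pickle.dump(model, f)
    return path


if __name__ == "__main__":
    saved = train_and_save()
    with saved.open("rb") as f:
        model = pickle.load(f)
    sample = [[5.1, 3.5, 1.4, 0.2]]
    # predict gives the species name; predict_proba gives per-class probabilities.
    print(model.predict(sample)[0], model.predict_proba(sample).max())
```

Taking the highest value from `predict_proba` is one plausible way to obtain a `probability` field alongside the predicted label; the project may compute it differently.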

Once the server is running (via Docker or virtual environment), you can interact with the API at http://localhost:8000, as mentioned above.
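Before sending requests, it can help to confirm that the server is actually accepting connections, especially right after `docker run -d`. The sketch below polls the documentation URL using only the standard library; the function name `wait_for_server` and its retry settings are illustrative assumptions, not part of the project.

```python
import time
import urllib.error
import urllib.request


def wait_for_server(url: str = "http://localhost:8000/docs",
                    attempts: int = 10, delay: float = 0.5) -> bool:
    """Return True once `url` answers with HTTP 200, False if it never does."""
    for _ in range(attempts):
        try:
            with urllib.request.urlopen(url, timeout=2) as resp:
                if resp.status == 200:
                    return True
        except (urllib.error.URLError, OSError):
            pass  # server is not accepting connections yet
        time.sleep(delay)
    return False


if __name__ == "__main__":
    print("server ready" if wait_for_server() else "server not reachable")
```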

- Endpoint: `/predict`

- Method: `POST`

- Request Body:

      {
        "sepal_length": <float>,
        "sepal_width": <float>,
        "petal_length": <float>,
        "petal_width": <float>
      }

- Response:

      {
        "prediction": <string>,
        "probability": <float>
      }
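On the client side, the request and response shapes above could be mirrored with small typed containers. This is a sketch using only the standard library; the class names `IrisMeasurement` and `Prediction` are hypothetical and do not come from the project, which may define its own models.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class IrisMeasurement:
    """Request body for /predict: four float fields."""
    sepal_length: float
    sepal_width: float
    petal_length: float
    petal_width: float

    def to_json(self) -> str:
        # Field names match the keys the endpoint expects.
        return json.dumps(asdict(self))


@dataclass(frozen=True)
class Prediction:
    """Response body from /predict."""
    prediction: str
    probability: float

    @classmethod
    def from_json(cls, raw: str) -> "Prediction":
        data = json.loads(raw)
        return cls(prediction=str(data["prediction"]),
                   probability=float(data["probability"]))


if __name__ == "__main__":
    print(IrisMeasurement(5.1, 3.5, 1.4, 0.2).to_json())
    print(Prediction.from_json('{"prediction": "Iris-setosa", "probability": 0.97}'))
```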

Here's an example of how to make a request to the /predict endpoint:

- Using `curl`:

      curl -X POST "http://localhost:8000/predict" -H "Content-Type: application/json" -d '{
        "sepal_length": 5.1,
        "sepal_width": 3.5,
        "petal_length": 1.4,
        "petal_width": 0.2
      }'

- Using Python:

      import requests

      new_measurement = {
          "sepal_length": 5.1,
          "sepal_width": 3.5,
          "petal_length": 1.4,
          "petal_width": 0.2
      }

      response = requests.post('http://127.0.0.1:8000/predict', json=new_measurement)
      print(response.content)

And here is the response:

    {
      "prediction": "Iris-setosa",
      "probability": 0.9793734738197287
    }

This project requires the following Python packages:

- pandas

- numpy

- pickle

- scikit-learn

- FastAPI

- Uvicorn

You can find the complete list of dependencies with their versions in the requirements.txt file.

Note: `pickle` is part of the Python standard library, so it does not need to be installed separately.
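To compare the active environment against `requirements.txt`, the installed versions of these packages can be listed with the standard library. This is a small sketch; the helper name `installed_versions` is hypothetical, and the package names are the PyPI distribution names for the list above.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_versions(packages):
    """Map each distribution name to its installed version, or None if missing."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


if __name__ == "__main__":
    # pickle is omitted because it ships with Python itself.
    names = ["pandas", "numpy", "scikit-learn", "fastapi", "uvicorn"]
    for name, ver in installed_versions(names).items():
        print(f"{name}: {ver or 'not installed'}")
```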

This project is licensed under the GNU General Public License v3.0 - see the LICENSE file for details.

This project is based on the following blog post:

Special thanks to the author for their guide on deploying a machine learning model as a microservice.