Please refer to the paper for more details.

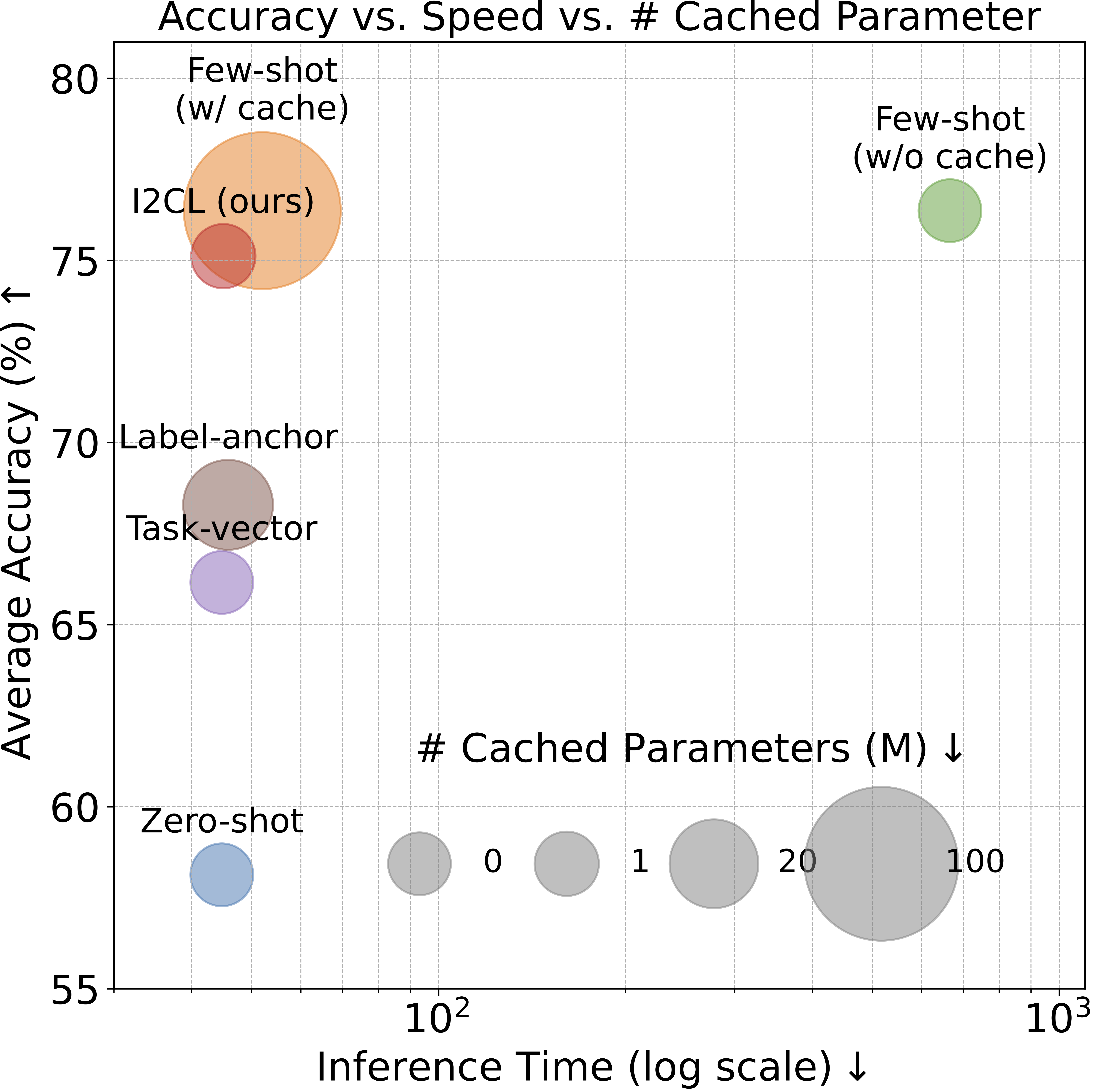

Implicit In-context Learning (I2CL) absorbs a tiny set of demonstration examples in the activation space, diverging from standard In-context Learning (ICL) that prefixes demonstration examples in the token space. As a result, I2CL bypasses the limitation of the context window size.

I2CL is extremely efficient in terms of both computation and memory usage. It achieves few-shot (i.e., ICL) performance with approximately zero-shot cost at inference.
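
To make the cost comparison concrete, the sketch below counts the prompt tokens a model has to process at inference. It only illustrates the token-space argument; the demonstration and query lengths are made-up numbers and `prompt_tokens` is a hypothetical helper, not part of the repository.

```python
def prompt_tokens(query_tokens, demo_tokens=()):
    """Number of tokens in the prompt: demonstrations (if any) plus the query."""
    return sum(demo_tokens) + query_tokens


# Hypothetical lengths, chosen only for illustration.
demo_lengths = [40] * 5   # five demonstrations of 40 tokens each
query_length = 30

icl_prompt = prompt_tokens(query_length, demo_lengths)  # demonstrations prefixed as tokens
zero_shot_prompt = prompt_tokens(query_length)           # query only

print(icl_prompt)        # 230
print(zero_shot_prompt)  # 30
```

Because I2CL keeps the demonstrations out of the token space, its inference prompt has the zero-shot length, and it does not grow with the number of demonstrations.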

I2CL is robust to the selection and order of demonstration examples. It performs well even with deficient demonstration examples, which can severely degrade the performance of ICL.

I2CL introduces a set of scalar values that act as task-IDs. These task-IDs effectively indicate the task similarity and can be leveraged to perform transfer learning across tasks.
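
As a rough illustration of how scalar task-IDs could indicate task similarity, the sketch below compares two sets of scalars with cosine similarity. The README does not state which similarity measure is used, so cosine similarity is an assumption here, and the task names and values are made up.

```python
import numpy as np


def task_similarity(task_id_a, task_id_b):
    """Cosine similarity between two task-IDs (assumed measure, not from the source)."""
    a = np.asarray(task_id_a, dtype=float)
    b = np.asarray(task_id_b, dtype=float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def most_similar_task(target, candidates):
    """Return the name of the candidate task whose task-ID is closest to the target."""
    return max(candidates, key=lambda name: task_similarity(target, candidates[name]))


# Hypothetical task-IDs: one scalar per coefficient, values invented for illustration.
task_ids = {
    "task_a": [0.10, 0.02, -0.05, 0.30],
    "task_b": [0.12, 0.01, -0.04, 0.28],
    "task_c": [-0.20, 0.25, 0.10, -0.01],
}

others = {name: tid for name, tid in task_ids.items() if name != "task_a"}
print(most_similar_task(task_ids["task_a"], others))  # task_b
```

For transfer learning, a similarity score like this could be used to pick which existing tasks to start from when a new task arrives.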

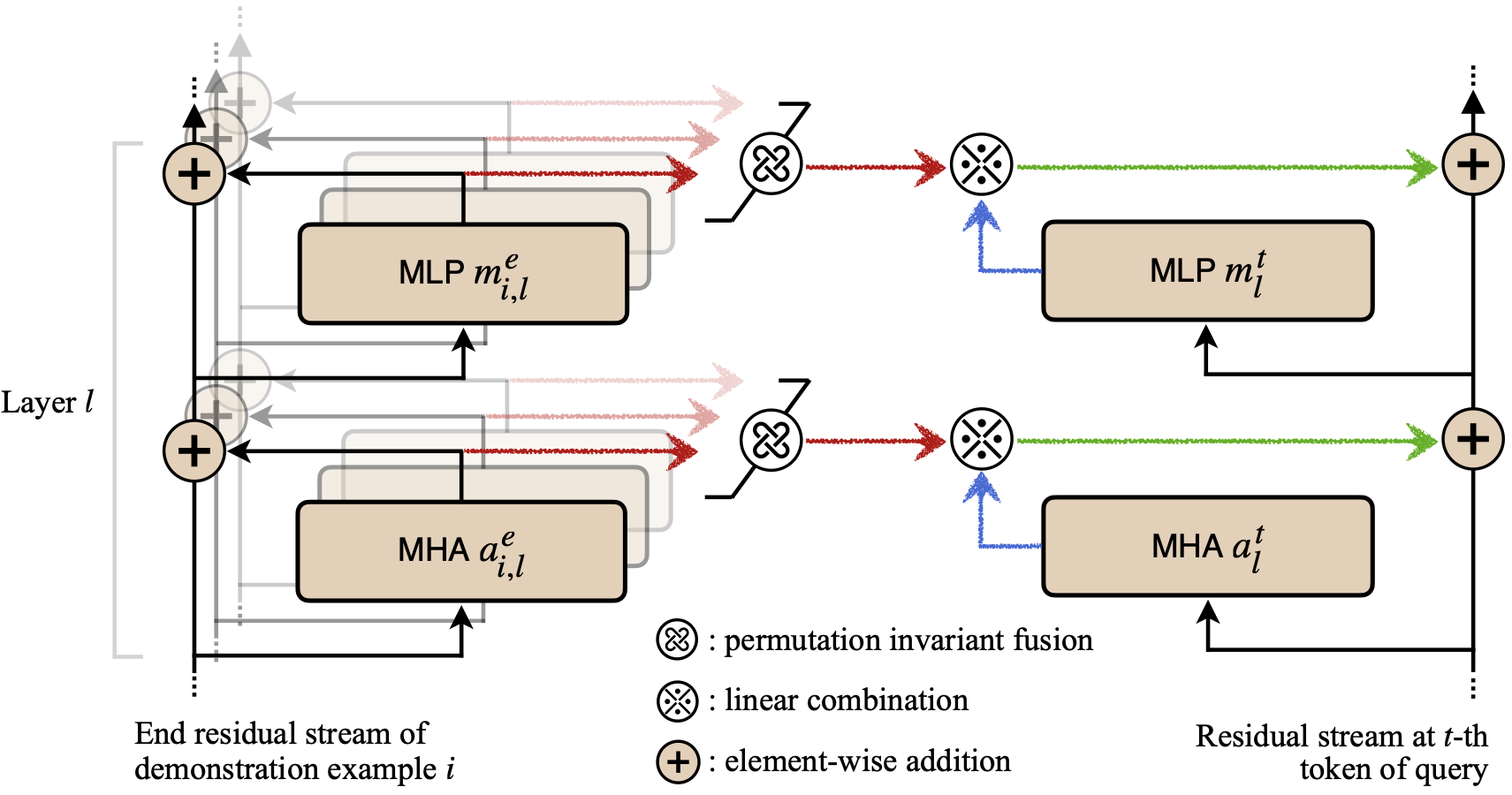

I2CL substantiates a two-stage workflow. A context vector is first generated by condensing the demonstration examples independently. Then a linear combination of the context vector and activation from the query is injected back into the residual streams. The effectiveness of I2CL suggests a potential two-stage workflow for ICL.
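
The two stages can be sketched with plain NumPy arrays standing in for model activations. This is a minimal sketch under stated assumptions, not the repository's implementation: condensing by simple averaging, one pair of scalars per layer, and the names `condense_demonstrations`, `inject`, `lam`, and `beta` are all assumptions made for illustration.

```python
import numpy as np


def condense_demonstrations(demo_activations):
    """Stage 1: condense demonstrations into one context vector per layer.

    demo_activations has shape (num_demos, num_layers, hidden_size). Each
    demonstration is encoded independently, and averaging is assumed here
    as the condensing operation.
    """
    return np.asarray(demo_activations, dtype=float).mean(axis=0)


def inject(query_activations, context_vectors, lam, beta):
    """Stage 2: per-layer linear combination of context vector and query activation."""
    lam = np.asarray(lam, dtype=float)[:, None]    # shape (num_layers, 1)
    beta = np.asarray(beta, dtype=float)[:, None]  # shape (num_layers, 1)
    return lam * context_vectors + beta * query_activations


rng = np.random.default_rng(0)
num_demos, num_layers, hidden_size = 5, 4, 8

# Random stand-ins for activations that a real model would produce.
demos = rng.normal(size=(num_demos, num_layers, hidden_size))
query = rng.normal(size=(num_layers, hidden_size))

context = condense_demonstrations(demos)
lam = np.full(num_layers, 0.1)   # hypothetical weights on the context vector
beta = np.ones(num_layers)       # hypothetical weights on the query activation
mixed = inject(query, context, lam, beta)

print(mixed.shape)  # (4, 8)
```

With averaging as the condensing step, the context vector does not change when the demonstrations are reordered, and setting `lam` to zero and `beta` to one recovers the unmodified query activations.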

- Clone the Repository:

      git clone https://github.com/LzVv123456/I2CL
      cd I2CL

- Create a Conda Environment:

  Create a new conda environment to avoid conflicts with existing packages.

      conda create --name i2cl_env python=3.8
      conda activate i2cl_env

- Install Dependencies:

  Use `pip` to install the required libraries listed in the `requirements.txt` file.

      pip install -r requirements.txt

To use the code, follow these steps:

- Navigate to the I2CL Folder (skip this step if you are already inside it):

      cd I2CL

- Run I2CL:

  To run I2CL, execute the following command:

      python run_i2cl.py

- Run Comparable Methods:

  To run other comparable methods, use the following commands:

      python run_soft_prompt.py
      python run_task_vector.py
      python run_label_anchor.py

- Apply I2CL to Unseen Demonstrations or Perform Transfer Learning:

  First, run `run_i2cl.py`. Then, in the configuration files for `run_i2cl_infer.py` and `run_i2cl_transfer_learning.py`, set the target path to the output result folder of `run_i2cl.py`. Finally, execute the following commands:

      python run_i2cl_infer.py
      python run_i2cl_transfer_learning.py

- Configuration and Ablation Studies:

  For ablation studies and other configurations, please refer to `configs/config_i2cl.py` and the corresponding code files for more details.
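
The ordering constraint in the steps above (`run_i2cl.py` has to finish before the inference and transfer-learning scripts can use its output folder) can be captured in a small driver. This is a hypothetical convenience script, not part of the repository; it defaults to a dry run that only prints the commands, and it does not edit the configuration files, so the target path still has to be set by hand before the follow-up scripts are actually run.

```python
import subprocess
import sys

# Script names are taken from the steps above; the driver itself is hypothetical.
FIRST_SCRIPT = "run_i2cl.py"
FOLLOW_UP_SCRIPTS = ["run_i2cl_infer.py", "run_i2cl_transfer_learning.py"]


def build_commands(python=sys.executable):
    """Return the commands in the order they must run: run_i2cl.py first."""
    return [[python, script] for script in [FIRST_SCRIPT, *FOLLOW_UP_SCRIPTS]]


def run_all(commands, dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    for command in commands:
        print(" ".join(command))
        if not dry_run:
            # check=True stops the sequence if a script exits with an error.
            subprocess.run(command, check=True)


run_all(build_commands())  # dry run: prints the three commands without executing them
```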

Please don't hesitate to send an email to zhuowei.li@cs.rutgers.edu if you have any questions.

This project is licensed under the MIT License - see the LICENSE file for details.

I would also like to acknowledge the repositories that inspired and contributed to this work:

If you found this work useful for your research, feel free to star ⭐ the repo or cite the following paper:

    @misc{li2024implicit,
      title={Implicit In-context Learning},
      author={Zhuowei Li and Zihao Xu and Ligong Han and Yunhe Gao and Song Wen and Di Liu and Hao Wang and Dimitris N. Metaxas},
      year={2024},
      eprint={2405.14660},
      archivePrefix={arXiv},
      primaryClass={cs.LG}
    }