Aibek Alanov*,

Vadim Titov*,

Dmitry Vetrov

*Equal contribution

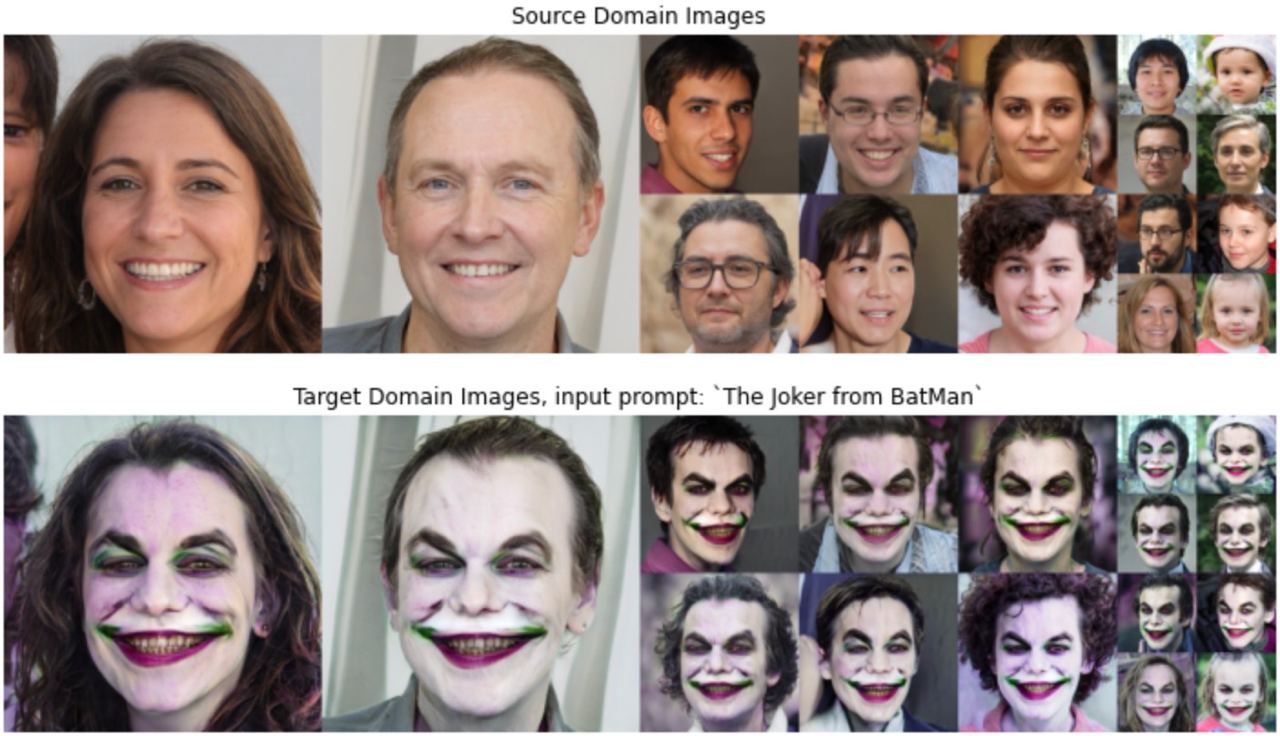

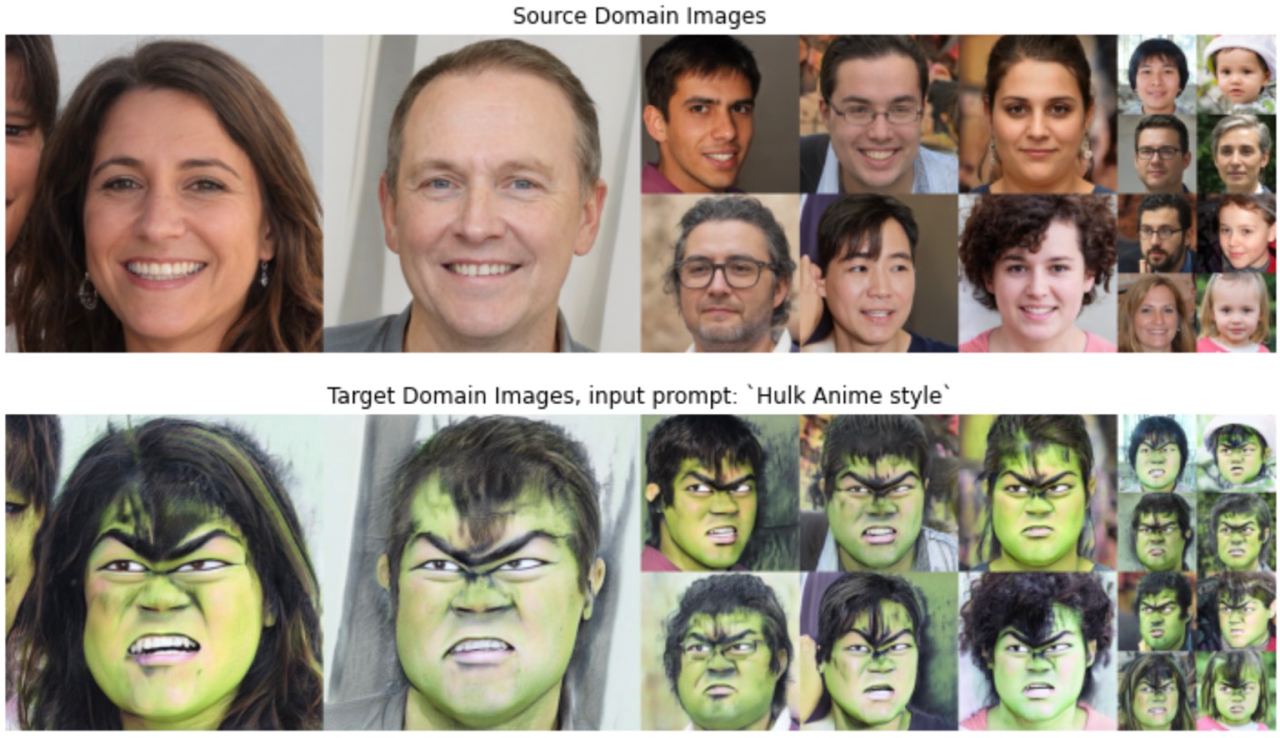

Abstract: The domain adaptation framework for GANs has made great progress in recent years as the main successful approach to training contemporary GANs when training data is very limited. In this work, we significantly improve this framework by proposing an extremely compact parameter space for fine-tuning the generator. We introduce a novel domain-modulation technique that optimizes only a 6-thousand-dimensional vector instead of the 30 million weights of StyleGAN2 to adapt to a target domain. We apply this parameterization to state-of-the-art domain adaptation methods and show that it has almost the same expressiveness as the full parameter space. Additionally, we propose a new regularization loss that considerably enhances the diversity of the fine-tuned generator. Inspired by the reduced size of the optimized parameter space, we consider the problem of multi-domain adaptation of GANs, i.e., the setting in which the same model can adapt to several domains depending on the input query. We propose HyperDomainNet, a hypernetwork that predicts our parameterization given the target domain. We empirically confirm that it can successfully learn a number of domains at once and may even generalize to unseen domains.

The repository implements the domain-modulation technique and the HyperDomainNet from the paper "HyperDomainNet: Universal Domain Adaptation for Generative Adversarial Networks".

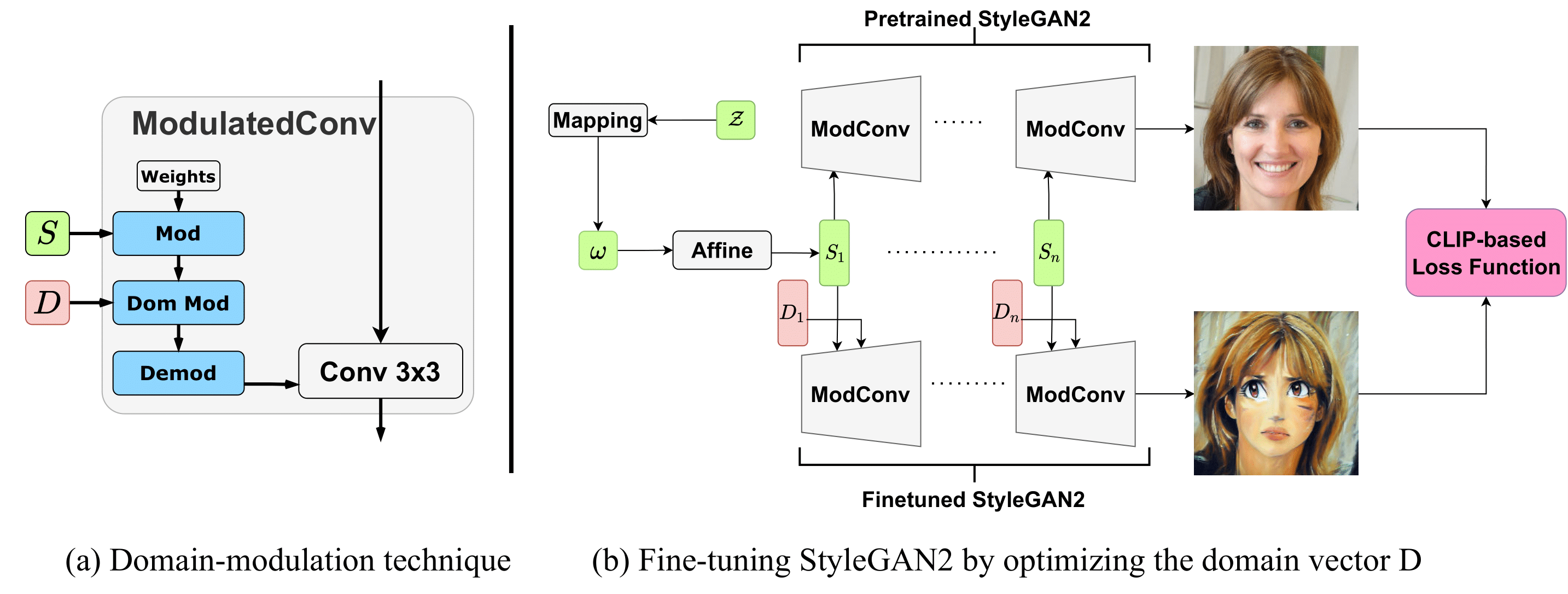

The domain-modulation technique significantly reduces the number of trainable parameters required for domain adaptation of StyleGAN2, from 30 million weights to a single 6-thousand-dimensional domain vector. The overall idea of this mechanism is illustrated in the following diagram:

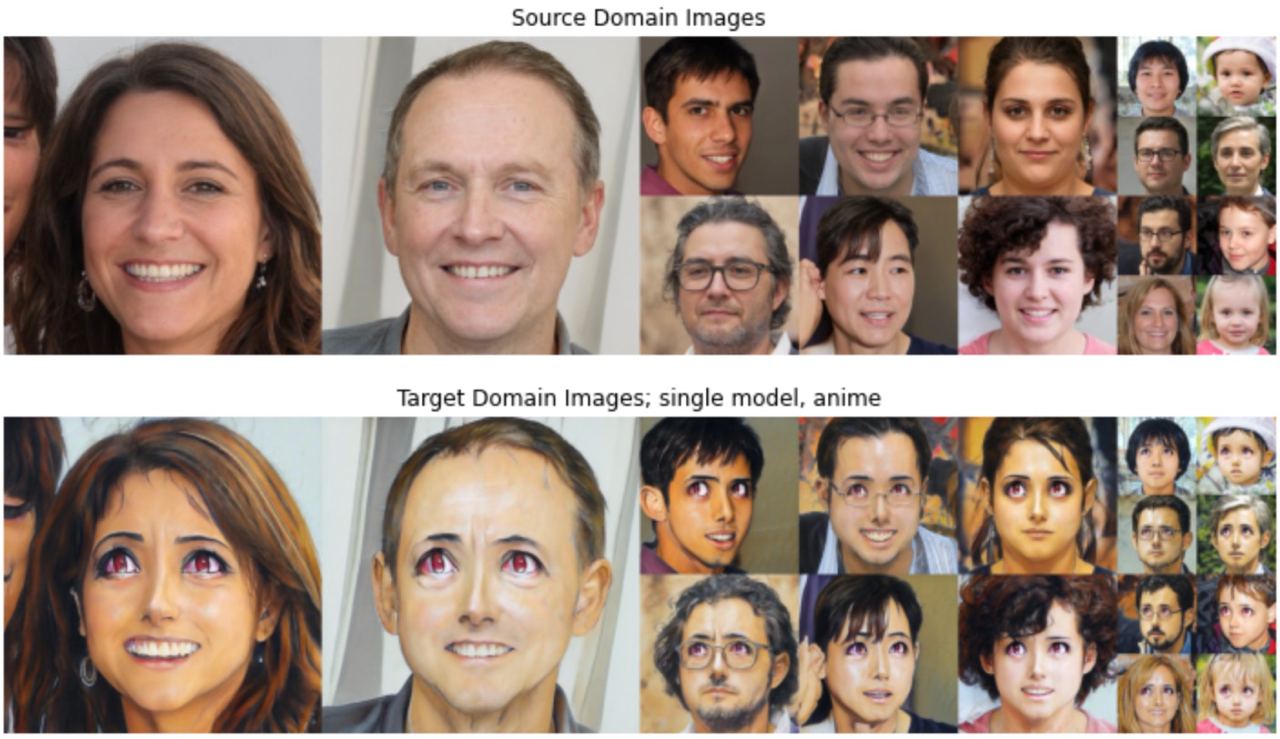

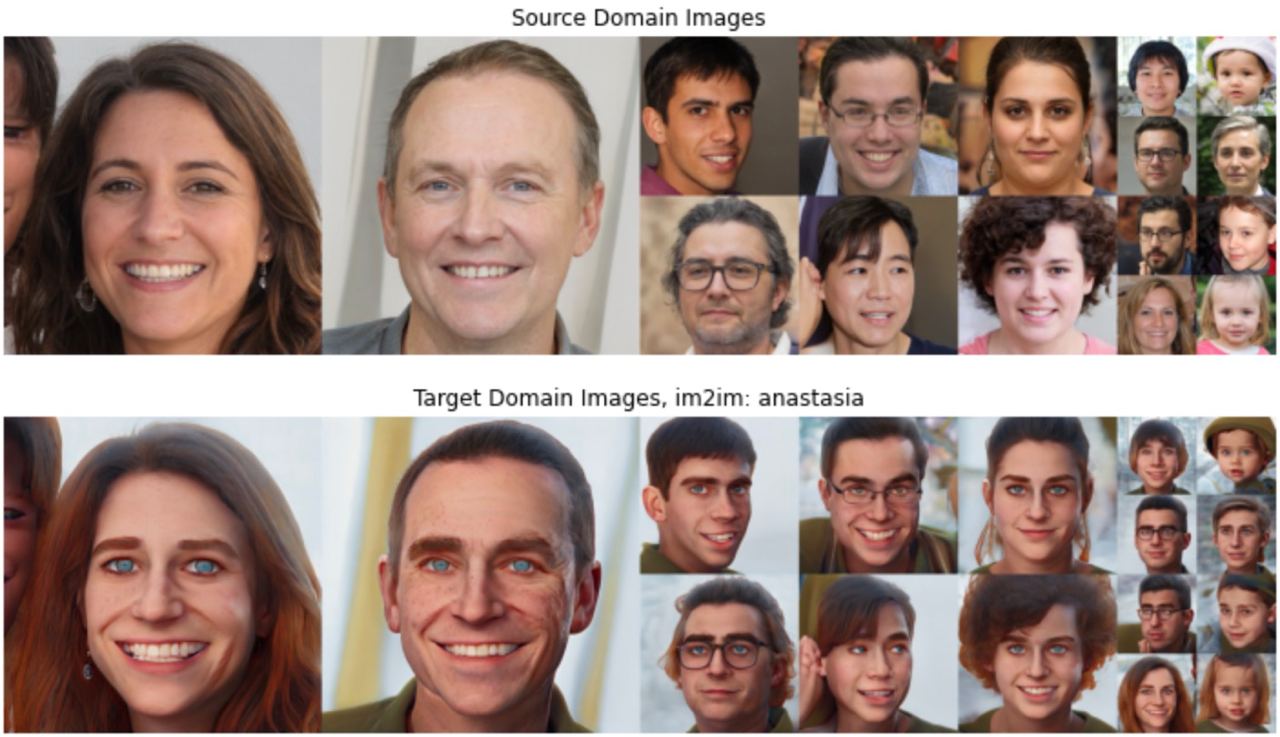
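As a rough illustration of the idea, the sketch below assumes (following the paper's description) that the domain vector rescales the per-channel style coefficients of a modulated convolution. Plain Python lists stand in for tensors, demodulation is omitted, and the names `modulate_weights`, `style`, and `domain` are hypothetical; they do not come from the repository.

```python
def modulate_weights(weights, style, domain=None):
    """Scale each input channel i of a weight matrix by style[i] * domain[i].

    weights: list of rows, one row per output channel, one column per input channel.
    style:   per-input-channel style coefficients produced by the frozen generator.
    domain:  trainable per-channel domain vector; None means "no adaptation".
    """
    if domain is None:
        domain = [1.0] * len(style)  # identity: reproduces the source-domain generator
    if len(domain) != len(style):
        raise ValueError("domain and style must have the same length")
    scale = [s * d for s, d in zip(style, domain)]
    return [[w * c for w, c in zip(row, scale)] for row in weights]


# Toy layer: 2 output channels, 3 input channels (values are made up).
weights = [[1.0, 2.0, 3.0],
           [4.0, 5.0, 6.0]]
style = [0.5, 1.0, 2.0]

source = modulate_weights(weights, style)                    # frozen generator
adapted = modulate_weights(weights, style, [2.0, 1.0, 0.5])  # domain-adapted

trainable = len(style)                     # only the domain vector is optimized
frozen = sum(len(row) for row in weights)  # convolution weights stay untouched
```

Even in this toy layer the domain vector has fewer entries than the weight matrix; the gap grows with layer size, which is where the reduction from millions of weights to a few thousand values comes from.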

The technique is implemented for two types of adaptation setups:

- text-driven single domain adaptation

- image2image domain adaptation.

You can play with these setups in the Colab notebook we set up for you:

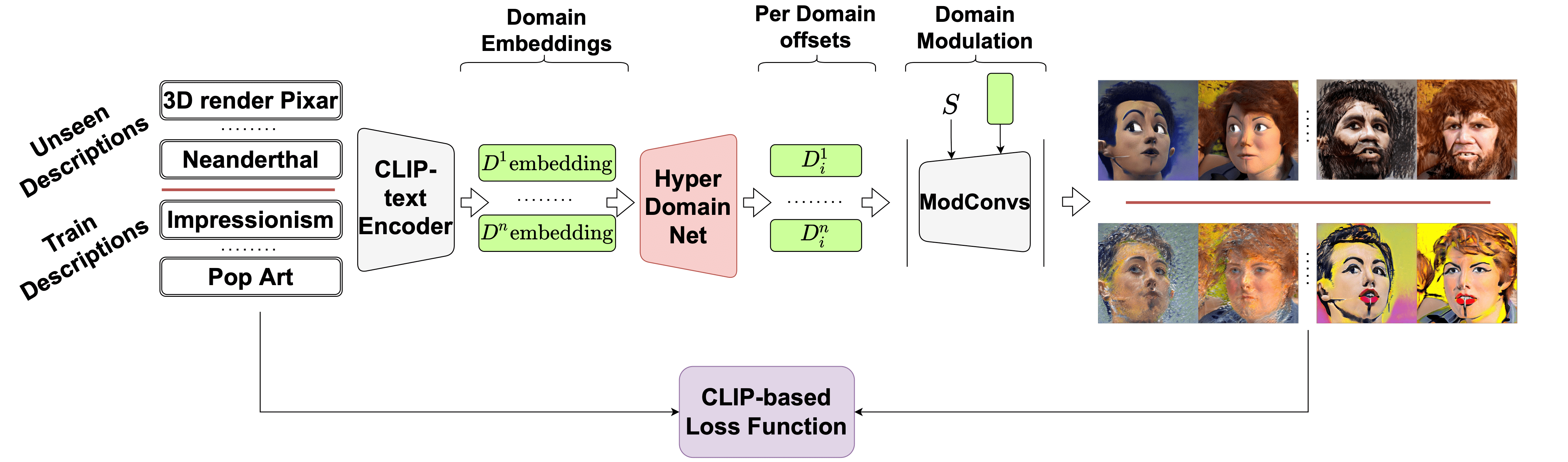

Inspired by the reduced size of the optimized parameter space, we propose HyperDomainNet, which predicts our parameterization given the target domain. The following diagram demonstrates its structure and how it can be trained:

There are two setups for the HyperDomainNet:

- HyperDomainNet for any textual description

- HyperDomainNet for any given image (would be improved in future research).
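Conceptually, a hypernetwork is a network whose output is the parameters of another network. The sketch below illustrates this with a single linear layer that maps a domain embedding (for example, a text or image embedding of the target domain) to a domain vector. The architecture, the `1 + offset` parameterization, and all names are assumptions made for illustration; the actual HyperDomainNet is defined in the repository and the paper.

```python
class TinyHyperNet:
    """Toy hypernetwork: domain embedding -> domain vector (one linear layer)."""

    def __init__(self, weight):
        # weight[i][j] maps embedding component j to domain-vector channel i.
        self.weight = weight

    def __call__(self, embedding):
        # Predict an offset around 1.0, so a zero embedding yields the identity
        # domain vector (all ones) and leaves the generator unchanged.
        return [1.0 + sum(w * e for w, e in zip(row, embedding)) for row in self.weight]


hyper = TinyHyperNet(weight=[[0.5, 0.0],
                             [0.0, -0.25],
                             [1.0, 1.0]])

# Two made-up domain embeddings: the same model serves both domains.
domain_a = hyper([1.0, 0.0])
domain_b = hyper([0.0, 2.0])
identity = hyper([0.0, 0.0])
```

The point of the design is that adding a new domain changes only the input embedding, not the hypernetwork or the generator weights.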

You can also play with the HyperDomainNet in our colab notebook.

15/10/2022 Initial version

For all the methods described in the paper, it is required to have:

- Anaconda

- PyTorch >=1.7.1

- Packages from requirements.txt

The code relies on the rosinality PyTorch implementation of StyleGAN2. Some parts of the StyleGAN implementation were modified so that the whole implementation is native PyTorch.

In addition to the requirements above, a pretrained StyleGAN2 generator is needed; the download.py script will attempt to download it.

All base requirements can be installed via:

conda install --yes -c pytorch pytorch=1.7.1 torchvision cudatoolkit=<CUDA_VERSION>

pip install -r requirements.txt

Here, we provide the code for training.

In general, training can be launched with the following command:

python main.py exp.config={config_name}
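The `exp.config={config_name}` argument reads as a dotted override: the key `config` inside a group `exp`. The sketch below shows how such `key.sub=value` arguments could be folded into a nested dictionary. It illustrates the general pattern only and is not the repository's actual parser; `parse_overrides` and the example values `my_config` and `demo_run` are hypothetical.

```python
def parse_overrides(args):
    """Turn ['a.b=1', 'a.c=x'] into {'a': {'b': '1', 'c': 'x'}} (values stay strings)."""
    config = {}
    for arg in args:
        dotted_key, sep, value = arg.partition("=")
        if not sep or not dotted_key:
            raise ValueError(f"expected key=value, got {arg!r}")
        *parents, leaf = dotted_key.split(".")
        node = config
        for name in parents:
            node = node.setdefault(name, {})  # create intermediate groups on demand
        node[leaf] = value
    return config


# Placeholder values; substitute the name of a real config.
overrides = parse_overrides(["exp.config=my_config", "exp.name=demo_run"])
```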

config_dir: configs
config: config_name.yaml
project: WandbProjectName
tags:
  - tag1
  - tag2
name: WandbRunName
seed: 0
root: ./
notes: empty notes
step_save: 20  # model dump frequency
trainer: trainer_name

iter_num: 400  # number of training iterations
batch_size: 4
device: cuda:0
generator: stylegan2
patch_key: cin_mult
phase: mapping  # StyleGAN2 part which is fine-tuned, only used when patch_key=original
source_class: Photo  # description of source domain
target_class: 3D Render in the Style of Pixar  # description of target domain
auto_layer_k: 16
auto_layer_iters: 0  # number of iterations for adaptive corresponding StyleGAN2 layer freeze
auto_layer_batch: 8
mixing_noise: 0.9

visual_encoders:  # CLIP encoders that are used for CLIP-based losses
  - ViT-B/32
  - ViT-B/16
loss_funcs:
  - loss_name1
  - loss_name2
loss_coefs:
  - loss_coef1
  - loss_coef2
g_reg_every: 4  # StyleGAN2 regularization coefficient (not recommended to change)
optimizer:
  weight_decay: 0.0
  lr: 0.01
  betas:
    - 0.9
    - 0.999

log_every: 10  # loss logging step
log_images: 20  # images logging step
truncation: 0.7  # truncation during images logging
num_grid_outputs: 1  # number of logging grids

is_on: false
start_from: false
step_backup: 100000
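The `loss_funcs` and `loss_coefs` lists are presumably paired by position, so that each named loss is scaled by the coefficient at the same index. A minimal sketch of that pairing follows, with placeholder loss names and made-up values; the real loss implementations live in the repository, and `weighted_total` is a hypothetical helper.

```python
def weighted_total(loss_values, loss_funcs, loss_coefs):
    """Combine named loss values into one scalar using position-matched coefficients."""
    if len(loss_funcs) != len(loss_coefs):
        raise ValueError("loss_funcs and loss_coefs must have the same length")
    return sum(coef * loss_values[name] for name, coef in zip(loss_funcs, loss_coefs))


# Placeholder names mirroring the config template; not real loss identifiers.
loss_funcs = ["loss_name1", "loss_name2"]
loss_coefs = [1.0, 0.5]
loss_values = {"loss_name1": 2.0, "loss_name2": 4.0}  # made-up per-iteration values

total = weighted_total(loss_values, loss_funcs, loss_coefs)
```

Checking the two lengths up front catches a config where a loss was added without its coefficient.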

When training ends, model checkpoints can be found in local_logged_exps/. Each ckpt_name.pt can be run for inference with the helper class Inferencer in core/utils/example_utils.
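To see which checkpoints a run produced, a small helper like the one below can list every `.pt` file under the log directory. The internal layout of local_logged_exps/ is not described here, so the sketch searches recursively; `find_checkpoints` is a hypothetical name, not part of the repository.

```python
from pathlib import Path


def find_checkpoints(log_root="local_logged_exps"):
    """Return all .pt files under log_root, sorted by path (empty list if it is missing)."""
    root = Path(log_root)
    if not root.is_dir():
        return []
    return sorted(root.rglob("*.pt"))


for ckpt in find_checkpoints():
    print(ckpt)
```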

Here, we provide the code for using pretrained checkpoints for inference.

Pretrained models for various stylizations are provided.

Please refer to download.py and run it with the flag --load_type=checkpoints from the root directory.

Downloaded checkpoints structure:

root/
  checkpoints/
    td_checkpoints/
      ...
    im2im_checkpoints/
      ...
    mapper_20_td.pt
    mapper_large_resample_td.pt
    mapper_base_im2im.pt

Every model except mapper_base_im2im.pt can be run with Inferencer; mapper_base_im2im.pt requires Im2ImInferencer.

Given a pretrained checkpoint for a certain target domain, one can edit a given image.

This can be done through the examples/inference_playground.ipynb notebook.

The core functions are:

- mixing_noise (latent code generation)

- Inferencer (checkpoint processor)
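For orientation, the sketch below mirrors how style-mixing noise is typically sampled in StyleGAN2 training code: with probability `prob` two latent batches are drawn (to be mixed across layers), otherwise a single one. It uses plain Python lists instead of tensors, and the signature is an assumption; the repository's `mixing_noise` may differ.

```python
import random


def make_noise(batch, latent_dim, rng):
    """Sample a batch of standard-normal latent codes as nested lists."""
    return [[rng.gauss(0.0, 1.0) for _ in range(latent_dim)] for _ in range(batch)]


def mixing_noise(batch, latent_dim, prob, rng=random):
    """Return two latent batches with probability prob, otherwise a single one."""
    if prob > 0 and rng.random() < prob:
        return [make_noise(batch, latent_dim, rng), make_noise(batch, latent_dim, rng)]
    return [make_noise(batch, latent_dim, rng)]


rng = random.Random(0)  # seeded for reproducibility
never_mixed = mixing_noise(batch=4, latent_dim=512, prob=0.0, rng=rng)
always_mixed = mixing_noise(batch=4, latent_dim=512, prob=1.0, rng=rng)
```

With `prob=0.9`, as in the training config, most batches would use two latent codes.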

The setup is the same as in the Inference section.

Given a pretrained checkpoint for a certain target domain, one can edit a given image.

An editing playground can be found in the examples/editing_playground.ipynb notebook and in Google Colab.

Here, we provide the code for evaluation based on CLIP metrics.

Before evaluation, models must be obtained in one of the two ways described above: trained from scratch or downloaded as pretrained checkpoints.

A checkpoint for a certain target domain can then be evaluated through the examples/evaluation.ipynb notebook.
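CLIP-based metrics generally come down to cosine similarity between embeddings, for example between an image embedding and the embedding of the target text. The exact metrics computed in the notebook are not described here, so the following is only a sketch of that building block, with made-up vectors standing in for real CLIP embeddings.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)


image_emb = [3.0, 4.0]  # stand-in for a CLIP image embedding
text_emb = [4.0, 3.0]   # stand-in for a CLIP text embedding
score = cosine_similarity(image_emb, text_emb)
```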

The main idea builds on the one-shot (text-driven, image2image) methods StyleGAN-NADA and MindTheGap.

To edit real images, we inverted them into StyleGAN's latent space using ReStyle.

If you use this code for your research, please cite our paper:

@article{alanov2022hyperdomainnet,

title={Hyperdomainnet: Universal domain adaptation for generative adversarial networks},

author={Alanov, Aibek and Titov, Vadim and Vetrov, Dmitry P},

journal={Advances in Neural Information Processing Systems},

volume={35},

pages={29414--29426},

year={2022}

}