Official repository for our paper "McEval: Massively Multilingual Code Evaluation"

🏠 Home Page • 📊 Benchmark Data • 📚 Instruct Data • 🏆 Leaderboard

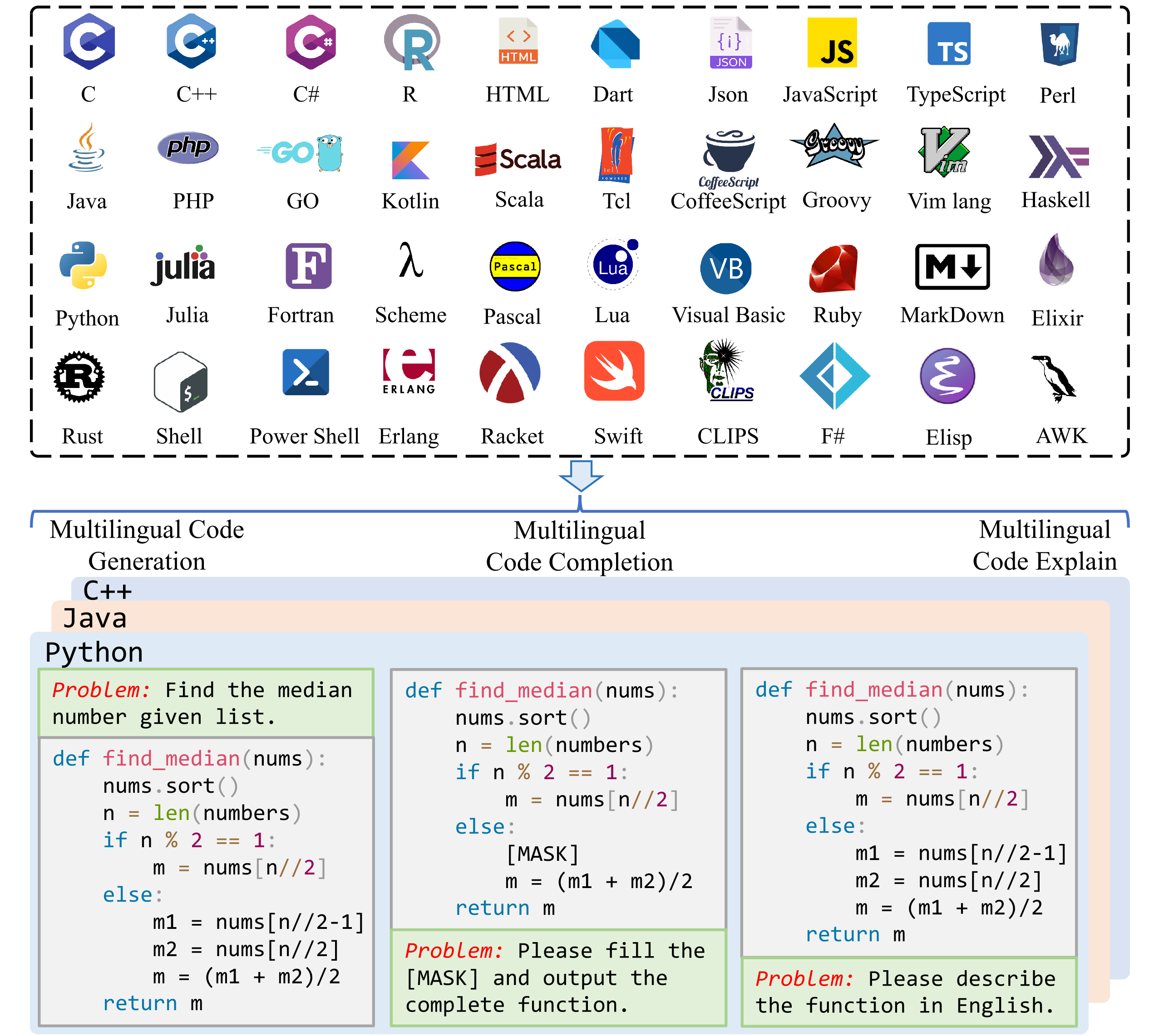

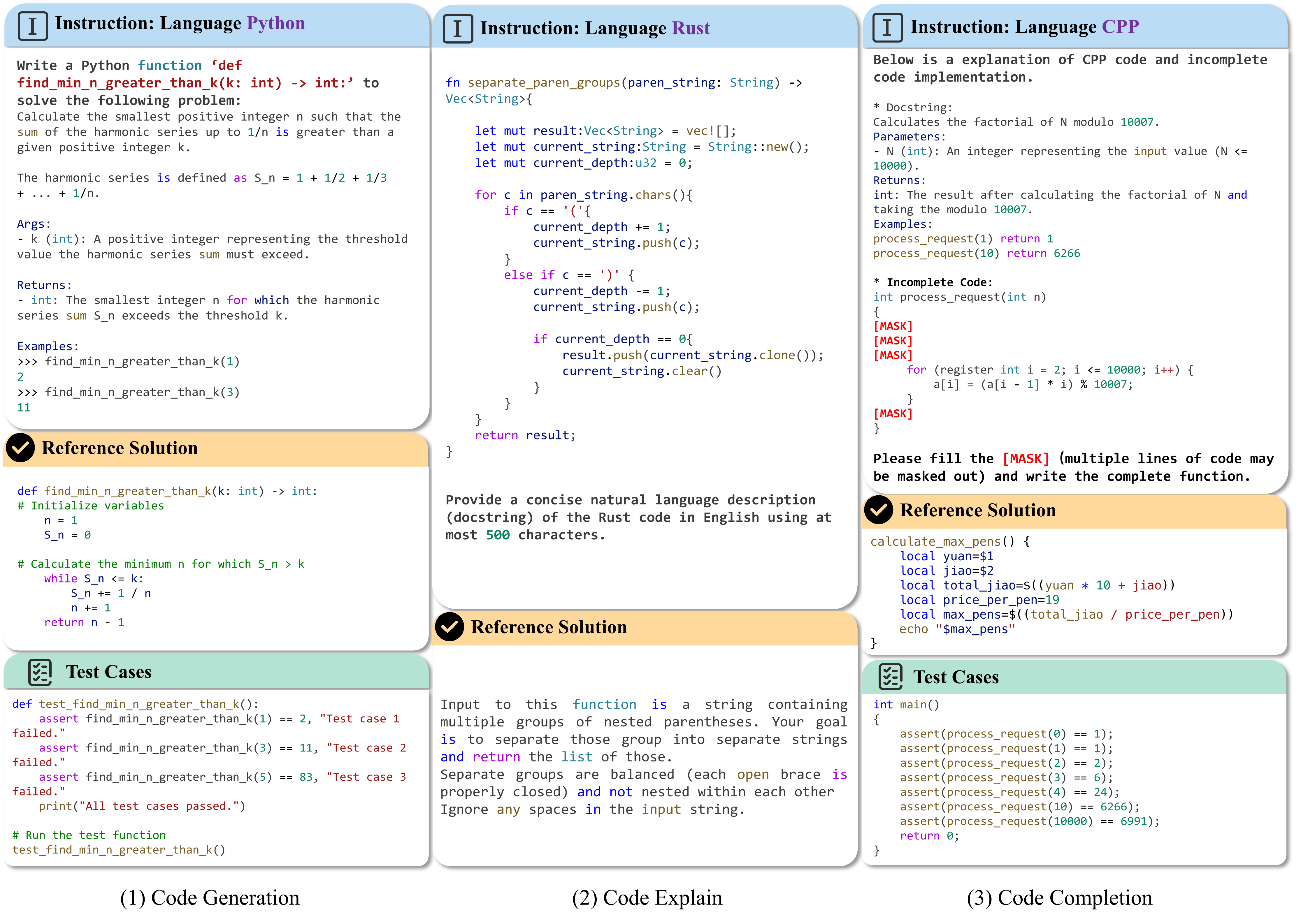

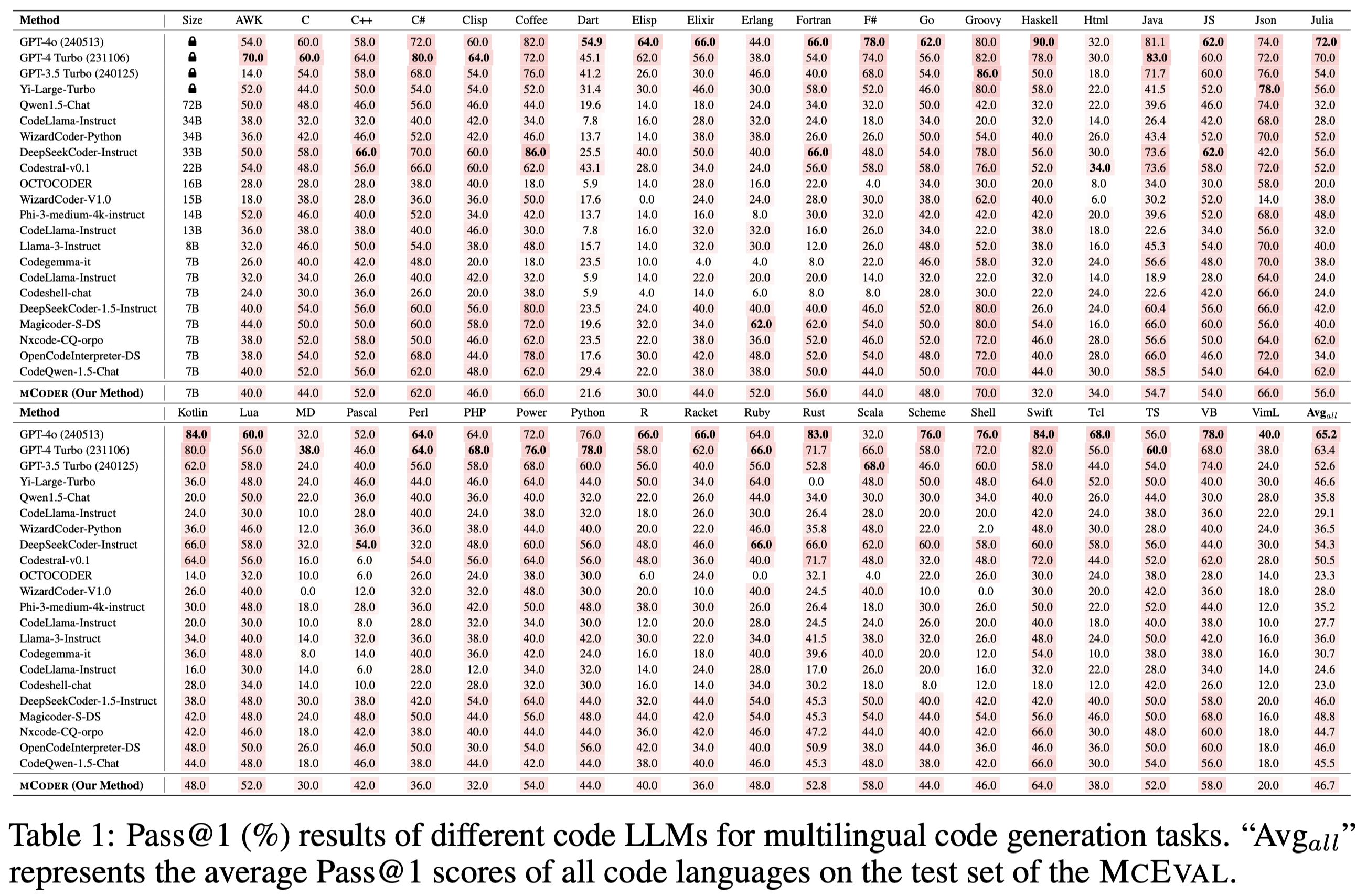

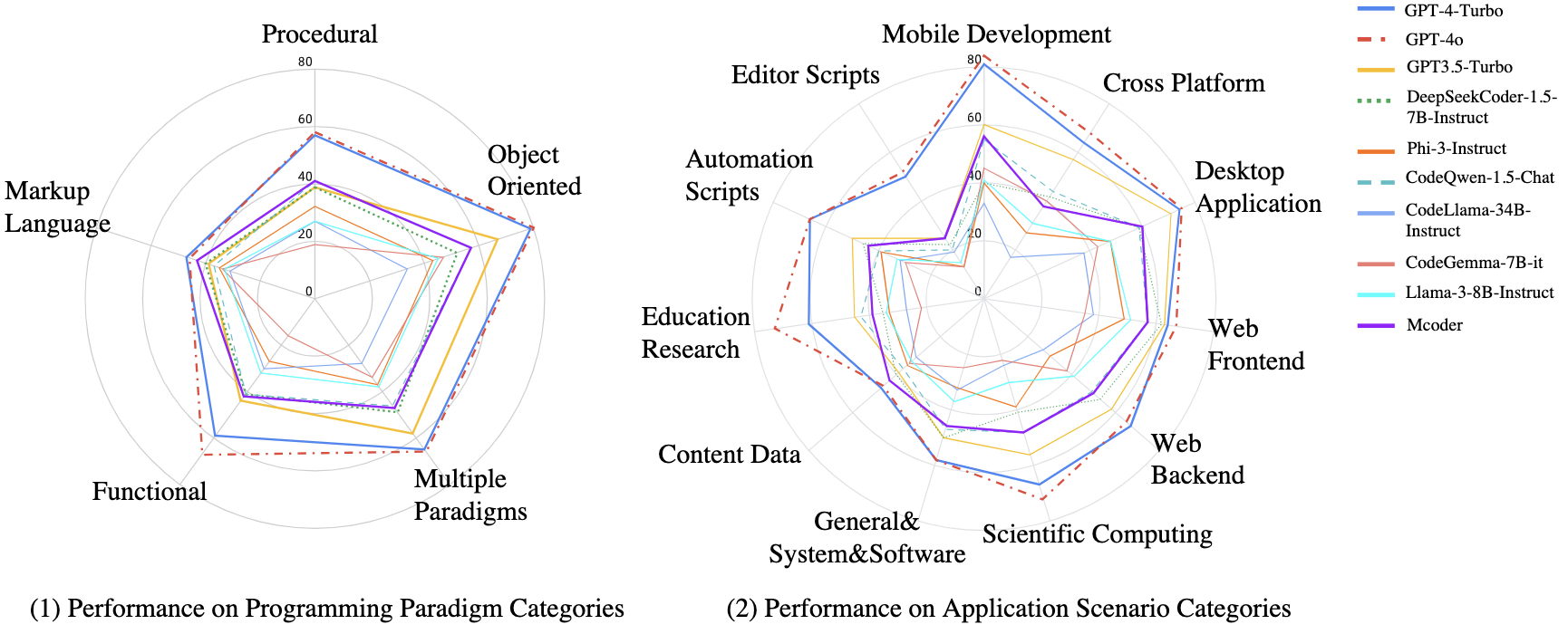

McEval is a massively multilingual code benchmark covering 40 programming languages with 16K test samples, which substantially pushes the limits of code LLMs in multilingual scenarios.

Furthermore, we curate McEval-Instruct, a massively multilingual instruction corpus.

Refer to our paper for more details.

Refer to our 🏆 Leaderboard for more results.

| Dataset | Download |
|---|---|
| McEval Evaluation Dataset | 🤗 HuggingFace |
| McEval-Instruct | 🤗 HuggingFace |

Runtime environments for the different programming languages can be found in Environments.

We recommend using Docker for evaluation. We provide a Docker image with all the necessary environments pre-installed.

Pull the image directly from Docker Hub or from the Aliyun Docker registry:

```bash
# Docker Hub:
docker pull multilingualnlp/mceval

# Aliyun Docker Hub:
docker pull registry.cn-hangzhou.aliyuncs.com/mceval/mceval:v1
```

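Then start a container from the image (replace `<image-name>` with the image you pulled) and attach to it:

```bash
docker run -it -d --restart=always --name mceval_dev --workdir / <image-name> /bin/bash
docker attach mceval_dev
```

We provide model inference code with both PyTorch and vLLM implementations.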

For PyTorch inference, taking the generation task as an example:

```bash
cd inference
bash scripts/inference_torch.sh
```

For vLLM inference, again taking the generation task as an example:

```bash
cd inference
bash scripts/run_generation_vllm.sh
```

🛎️ Please prepare the model's inference results in the following format for use in the evaluation step.

(1) Folder Structure. Place the data in the following folder structure, with one file holding the test results for each language:

```
evaluate_model_name/
- CPP.jsonl
- Python.jsonl
- Java.jsonl
...
```

You can use the split_result.py script to split the inference results into these per-language files.
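Conceptually, the split only groups records by language. The Python sketch that follows illustrates this under stated assumptions; it is not the repository's split_result.py. It assumes that each record's `task_id` has the form `"<Lang>/<index>"` (as in the `"Lang/1"` placeholder of the record format) and that the `<Lang>` prefix matches the desired file name, e.g. `CPP.jsonl`. The function name `split_by_language` is hypothetical.

```python
import json
import os
import tempfile
from collections import defaultdict
from typing import Dict, List


def split_by_language(input_path: str, save_dir: str) -> Dict[str, int]:
    """Hypothetical helper (not the repository's split_result.py).

    Reads a combined JSONL file and writes one <Lang>.jsonl file per language
    into save_dir. Assumes every record's "task_id" looks like "<Lang>/<index>",
    e.g. "Python/1", and that <Lang> is the desired file name.
    Returns the number of records written per language.
    """
    groups: Dict[str, List[dict]] = defaultdict(list)
    with open(input_path, encoding="utf-8") as f:
        for line in f:
            line = line.strip()
            if not line:
                continue  # tolerate blank lines in the input
            record = json.loads(line)
            lang = record["task_id"].split("/", 1)[0]  # "Python/1" -> "Python"
            groups[lang].append(record)

    os.makedirs(save_dir, exist_ok=True)
    for lang, records in groups.items():
        with open(os.path.join(save_dir, f"{lang}.jsonl"), "w", encoding="utf-8") as out:
            for record in records:
                # One JSON object per line; keep non-ASCII source code readable.
                out.write(json.dumps(record, ensure_ascii=False) + "\n")
    return {lang: len(records) for lang, records in groups.items()}


if __name__ == "__main__":
    # Demo on a tiny combined file with made-up records.
    with tempfile.TemporaryDirectory() as tmp:
        combined = os.path.join(tmp, "combined.jsonl")
        with open(combined, "w", encoding="utf-8") as f:
            for task_id in ["Python/1", "CPP/1", "Python/2"]:
                f.write(json.dumps({"task_id": task_id, "raw_generation": ["..."]}) + "\n")
        print(split_by_language(combined, os.path.join(tmp, "evaluate_model_name")))
        # prints {'Python': 2, 'CPP': 1}
```

Grouping in memory keeps the sketch simple; for very large result files, appending to per-language file handles while streaming would avoid holding all records at once. To run the repository's own script instead: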

```bash
python split_result.py --split_file <inference_result> --save_dir <save_dir>
```

(2) File Format. Each line in a language's file has the following format; the raw_generation field contains the generated code. More examples can be found in Evaluate Data Format Examples.

```json
{
  "task_id": "Lang/1",
  "prompt": "",
  "canonical_solution": "",
  "test": "",
  "entry_point": "",
  "signature": "",
  "docstring": "",
  "instruction": "",
  "raw_generation": ["<Generated Code>"]
}
```

To run the evaluation, taking the generation task as an example:

```bash
cd eval
bash scripts/eval_generation.sh
```

We have open-sourced the training code for Mcoder, using CodeQwen1.5 and DeepSeek-Coder as base models.

We will make the model weights of Mcoder available for download soon.

More examples can be found in Examples.

This code repository is licensed under the MIT License. Use of the McEval data is subject to the CC-BY-SA-4.0 license.

If you find our work helpful, please cite it as follows:

```bibtex
@article{mceval,
  title={McEval: Massively Multilingual Code Evaluation},
  author={Chai, Linzheng and Liu, Shukai and Yang, Jian and Yin, Yuwei and Jin, Ke and Liu, Jiaheng and Sun, Tao and Zhang, Ge and Ren, Changyu and Guo, Hongcheng and others},
  journal={arXiv e-prints},
  pages={arXiv--2406},
  year={2024}
}
```