The following ranking results are produced by ViT-B:

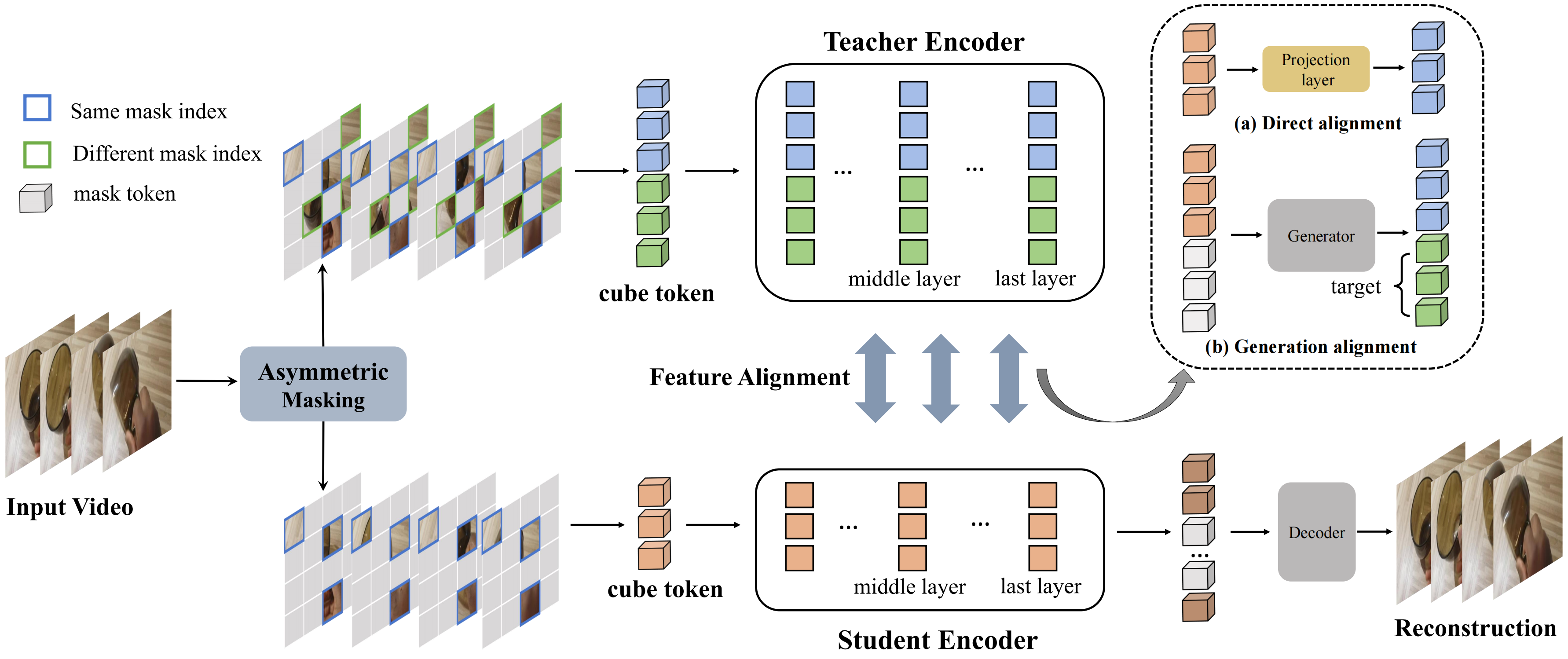

Asymmetric Masked Distillation for Pre-Training Small Foundation Models

Zhiyu Zhao, Bingkun Huang, Sen Xing, Gangshan Wu, Yu Qiao, and Limin Wang

Nanjing University, Shanghai AI Lab

[2024.3.27] Code and models have been released!

[2024.2.29] Code and models will be released in the following days.

[2024.2.27] AMD is accepted by CVPR 2024! 🎉🎉🎉

| Method | Extra Data | Backbone | Resolution | #Frames x Clips x Crops | Top-1 | Top-5 |
|---|---|---|---|---|---|---|
| AMD | no | ViT-S | 224x224 | 16x2x3 | 70.2 | 92.5 |
| AMD | no | ViT-B | 224x224 | 16x2x3 | 73.3 | 94.0 |

| Method | Extra Data | Backbone | Resolution | #Frames x Clips x Crops | Top-1 | Top-5 |
|---|---|---|---|---|---|---|
| AMD | no | ViT-S | 224x224 | 16x5x3 | 80.1 | 94.5 |
| AMD | no | ViT-B | 224x224 | 16x5x3 | 82.2 | 95.3 |

| Method | Extra Data | Extra Label | Backbone | #Frames x Sample Rate | mAP |
|---|---|---|---|---|---|
| AMD | Kinetics-400 | ✗ | ViT-B | 16x4 | 29.9 |
| AMD | Kinetics-400 | ✓ | ViT-B | 16x4 | 33.5 |

| Method | Extra Data | Backbone | UCF101 | HMDB51 |
|---|---|---|---|---|
| AMD | Kinetics-400 | ViT-B | 97.1 | 79.6 |

| Method | Extra Data | Backbone | Resolution | Top-1 |
|---|---|---|---|---|
| AMD | no | ViT-S | 224x224 | 82.1 |
| AMD | no | ViT-B | 224x224 | 84.6 |

Please follow the instructions in INSTALL.md.

Please follow the instructions in DATASET.md for data preparation.

Pre-training instructions are in PRETRAIN.md.

Fine-tuning instructions are in FINETUNE.md.

We provide pre-trained and fine-tuned models in MODEL_ZOO.md.

This project is built upon VideoMAEv2 and MGMAE. Thanks to the contributors of these great codebases.

If you find this repository useful, please use the following BibTeX entry for citation.

    @misc{zhao2023amd,
      title={Asymmetric Masked Distillation for Pre-Training Small Foundation Models},
      author={Zhiyu Zhao and Bingkun Huang and Sen Xing and Gangshan Wu and Yu Qiao and Limin Wang},
      year={2023},
      eprint={2311.03149},
      archivePrefix={arXiv},
      primaryClass={cs.CV}
    }