VideoMAE for Action Detection (NeurIPS 2022 Spotlight) [arXiv]

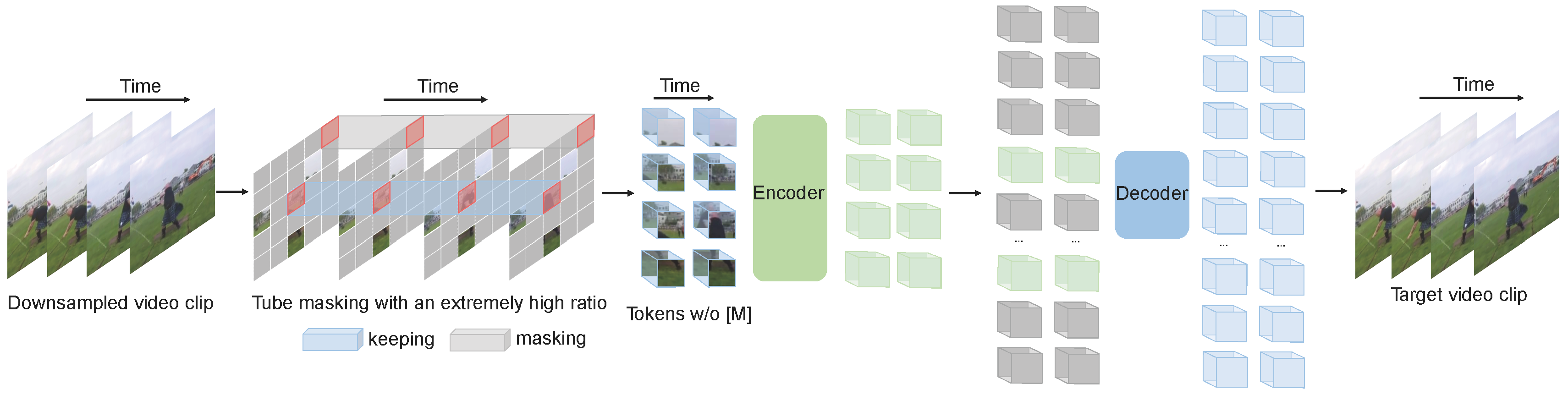

VideoMAE: Masked Autoencoders are Data-Efficient Learners for Self-Supervised Video Pre-Training

Zhan Tong, Yibing Song, Jue Wang, Limin Wang

Nanjing University, Tencent AI Lab

This repo contains the code and scripts needed to reproduce the action detection results of VideoMAE. The pre-training code is available in the original VideoMAE repo.

[2023.1.16] Code and pre-trained models are available now!

| Method | Extra Data | Extra Label | Backbone | #Frames × Sample Rate | mAP (AVA v2.2) |
|---|---|---|---|---|---|
| VideoMAE | Kinetics-400 | ✗ | ViT-S | 16x4 | 22.5 |
| VideoMAE | Kinetics-400 | ✓ | ViT-S | 16x4 | 28.4 |
| VideoMAE | Kinetics-400 | ✗ | ViT-B | 16x4 | 26.7 |
| VideoMAE | Kinetics-400 | ✓ | ViT-B | 16x4 | 31.8 |
| VideoMAE | Kinetics-400 | ✗ | ViT-L | 16x4 | 34.3 |
| VideoMAE | Kinetics-400 | ✓ | ViT-L | 16x4 | 37.0 |
| VideoMAE | Kinetics-400 | ✗ | ViT-H | 16x4 | 36.5 |
| VideoMAE | Kinetics-400 | ✓ | ViT-H | 16x4 | 39.5 |
| VideoMAE | Kinetics-700 | ✗ | ViT-L | 16x4 | 36.1 |
| VideoMAE | Kinetics-700 | ✓ | ViT-L | 16x4 | 39.3 |
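The "16x4" setting in the table means 16 frames taken every 4th raw frame, a nominal window of 16 × 4 = 64 raw frames. The sketch below shows one way to compute such indices. It assumes the clip is centered on the annotated keyframe and that out-of-range indices are clamped to the video boundaries. Both are illustrative assumptions, and the helper `sample_clip_indices` is hypothetical. The repo's own data loader may center or pad clips differently.

```python
from typing import List, Optional


def sample_clip_indices(
    center: int,
    num_frames: int = 16,
    sample_rate: int = 4,
    total_frames: Optional[int] = None,
) -> List[int]:
    """Hypothetical helper: frame indices for a `num_frames x sample_rate` clip.

    Assumption: the clip is centered on `center` (e.g. an annotated keyframe).
    """
    half = num_frames // 2
    # Step `sample_rate` raw frames between consecutive sampled frames.
    indices = [center + (i - half) * sample_rate for i in range(num_frames)]
    if total_frames is not None:
        # Assumed boundary handling: clamp to [0, total_frames - 1],
        # which repeats the first/last frame near the video edges.
        indices = [min(max(idx, 0), total_frames - 1) for idx in indices]
    return indices


if __name__ == "__main__":
    # A 16x4 clip around frame 100 spans frames 68, 72, ..., 128.
    print(sample_clip_indices(100))
    # Near the start of a 300-frame video, early indices are clamped to 0.
    print(sample_clip_indices(10, total_frames=300))
```

If the video runs at 30 fps (an assumption here), a 64-frame window covers roughly 2.1 s of context around each keyframe.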

Please follow the instructions in INSTALL.md.

Please follow the instructions in DATASET.md for data preparation.

Fine-tuning instructions are in FINETUNE.md.

We provide pre-trained and fine-tuned models in MODEL_ZOO.md.

Zhan Tong: tongzhan@smail.nju.edu.cn

Thanks to Lei Chen for support. This project is built upon MAE-pytorch, BEiT and AlphAction. Thanks to the contributors of these great codebases.

The majority of this project is released under the CC-BY-NC 4.0 license, as found in the LICENSE file. Portions of the project are available under separate license terms: pytorch-image-models is licensed under the Apache 2.0 license, and BEiT is licensed under the MIT license.

If you find this project helpful, please consider leaving a star ⭐️ and citing our paper:

@inproceedings{tong2022videomae,
  title={Video{MAE}: Masked Autoencoders are Data-Efficient Learners for Self-Supervised Video Pre-Training},
  author={Zhan Tong and Yibing Song and Jue Wang and Limin Wang},
  booktitle={Advances in Neural Information Processing Systems},
  year={2022}
}

@article{videomae,
  title={VideoMAE: Masked Autoencoders are Data-Efficient Learners for Self-Supervised Video Pre-Training},
  author={Tong, Zhan and Song, Yibing and Wang, Jue and Wang, Limin},
  journal={arXiv preprint arXiv:2203.12602},
  year={2022}
}