Source: DataFlair

The goal of this project is to use a convolutional neural network (CNN) to classify images of cats and dogs. How I proceeded and what results I achieved is described in my blog post: Computer Vision - Convolutional Neural Network

- Introduction

- Software Requirements

- Getting Started

- Folder Structure

- Running the Jupyter Notebook

- Project Results

- Link to the Publication

- Authors

- Project Motivation

- References

For this repository I wrote a preprocessing.py file that automatically shuffles the provided image data and splits it into training, validation and test sets. This is followed by model training using a CNN. Saving the best model and recording all important metrics during training are also fully automatic. The repository is intended as a best-practice guide to building a binary image classifier and bringing it to production.
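The shuffle-and-split step can be sketched roughly as follows. This is a minimal illustration using only the standard library, not the actual contents of the preprocessing file; the function name `split_class_folder`, the 60/20/20 split and the fixed seed are assumptions made for the example.

```python
import random
import shutil
from pathlib import Path


def split_class_folder(src_dir, dest_root, class_name,
                       val_frac=0.2, test_frac=0.2, seed=42):
    """Shuffle the files in src_dir and copy them into
    dest_root/{train,validation,test}/class_name.

    Hypothetical helper: the fractions and the seed are illustrative.
    """
    files = sorted(p for p in Path(src_dir).iterdir() if p.is_file())
    random.Random(seed).shuffle(files)  # seeded, so the split is reproducible

    n_val = int(len(files) * val_frac)
    n_test = int(len(files) * test_frac)
    parts = {
        "validation": files[:n_val],
        "test": files[n_val:n_val + n_test],
        "train": files[n_val + n_test:],
    }

    for part, part_files in parts.items():
        target = Path(dest_root) / part / class_name
        target.mkdir(parents=True, exist_ok=True)
        for f in part_files:
            shutil.copy2(f, target / f.name)  # copy, leave the originals in place

    # Number of files that ended up in each part
    return {part: len(part_files) for part, part_files in parts.items()}


# Example call (assumes a local "cats" folder exists):
# split_class_folder("cats", "cats_and_dogs", "cats")
```

Calling the helper once per class (`cats`, then `dogs`) would produce a `cats_and_dogs/train`, `validation` and `test` layout with one subfolder per class.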

Required libraries:

- Python 3.x

- Scikit-Learn

- Keras

- TensorFlow

- Numpy

- Pandas

- Matplotlib

- OpenCV

Please run `pip install -r requirements.txt` to install them.
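After installing, a quick check like the one below can confirm that the libraries are importable. It is only a sketch: the helper `missing_modules` is hypothetical, and the list uses import names, which differ from the library names above in two cases (Scikit-Learn is imported as `sklearn`, OpenCV as `cv2`).

```python
from importlib.util import find_spec

# Import names for the libraries listed above (assumed mapping:
# Scikit-Learn -> sklearn, OpenCV -> cv2).
REQUIRED_MODULES = ["sklearn", "keras", "tensorflow", "numpy",
                    "pandas", "matplotlib", "cv2"]


def missing_modules(names):
    """Return the module names that cannot be found in this environment."""
    return [name for name in names if find_spec(name) is None]


# Example call: an empty list means every library was found.
# print(missing_modules(REQUIRED_MODULES))
```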

- Make sure Python 3 is installed.

- Clone the repository and navigate to the project's root directory in the terminal

- Download the cats dataset. Unzip the folder and place the images in the `cats` folder of the cloned repository. If the folder does not exist yet, please create it.

- Download the dogs dataset. Unzip the folder and place the images in the `dogs` folder of the cloned repository. If the folder does not exist yet, please create it.

- Start the notebook `Computer Vision - CNN for binary Classification.ipynb`.
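Before starting the notebook, the image folders can be checked with a few lines of Python. This is a hedged sketch: `check_layout` is a hypothetical helper, and the set of accepted file extensions is an assumption.

```python
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}  # assumed image formats


def check_layout(root=".", folders=("cats", "dogs")):
    """Create missing class folders and count the images in each one."""
    counts = {}
    for name in folders:
        folder = Path(root) / name
        folder.mkdir(parents=True, exist_ok=True)  # create the folder if absent
        counts[name] = sum(
            1 for p in folder.iterdir()
            if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
        )
    return counts


# Example call from the project's root directory:
# print(check_layout())
```

A count of zero for either class indicates that the dataset has not been unpacked into the right folder yet.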

The current folder structure should look like this:

    C:.
    │   Computer Vision - CNN.ipynb
    │   preprocessing_CNN.py
    │
    ├───cats
    ├───dogs
    └───test_pictures

Import all necessary libraries and execute the train-validation-test-split function.

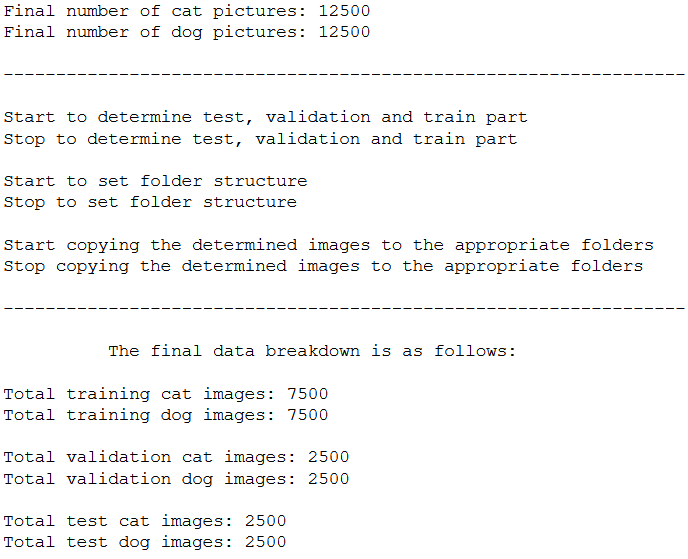

Here is the output of the function:

Execute all remaining lines of code in the notebook.

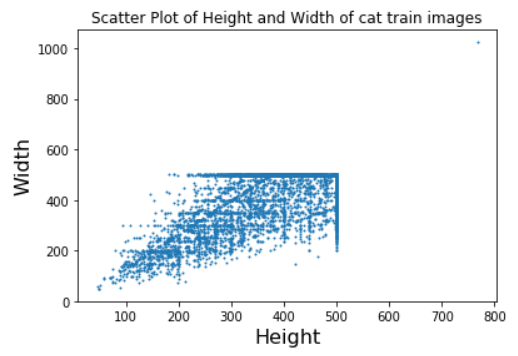

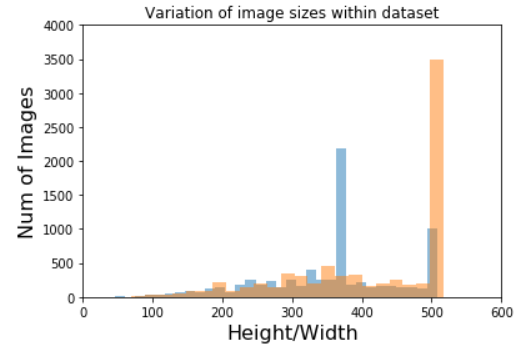

Descriptive statistics

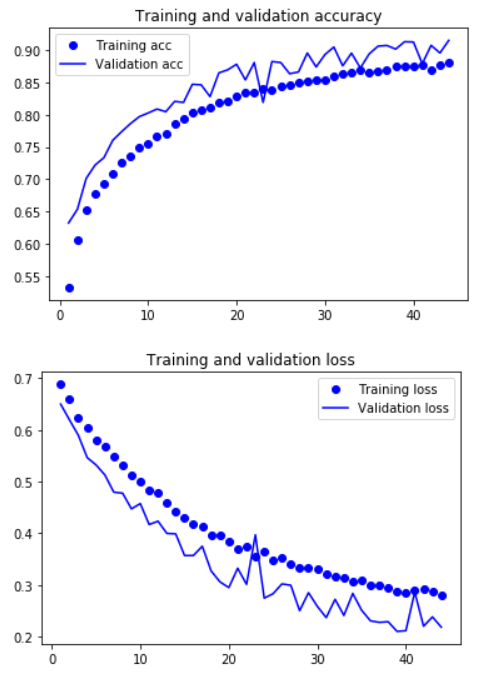

Model Evaluation

Final Folder Structure

The final folder structure should now look like this:

    C:.
    │   Computer Vision - CNN.ipynb
    │   preprocessing_CNN.py
    │
    ├───cats
    ├───cats_and_dogs
    │   ├───test
    │   │   ├───cats
    │   │   └───dogs
    │   ├───train
    │   │   ├───cats
    │   │   └───dogs
    │   └───validation
    │       ├───cats
    │       └───dogs
    ├───ckpt_1_simple_CNN
    │       Cats_Dogs_CNN_4_Conv_F32_64_128_128_epoch_30.h5
    │       class_assignment_df_Cats_Dogs_CNN_4_Conv_F32_64_128_128_epoch_30.csv
    │       history_df_Cats_Dogs_CNN_4_Conv_F32_64_128_128_epoch_30.csv
    │
    ├───ckpt_2_CNN_with_augm
    │       Cats_Dogs_CNN_4_Conv_F32_64_128_128_epoch_60_es.h5
    │       class_assignment_df_Cats_Dogs_CNN_4_Conv_F32_64_128_128_epoch_60_es.csv
    │       history_df_Cats_Dogs_CNN_4_Conv_F32_64_128_128_epoch_60_es.csv
    │       img_height.pkl
    │       img_width.pkl
    │
    ├───dogs
    └───test_pictures
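The `img_height.pkl` and `img_width.pkl` files suggest that the image dimensions used during training are stored next to the model, so that new images can be resized the same way at prediction time. A minimal sketch of that idea with the standard `pickle` module is shown below; the helper names are hypothetical and the notebook may store the values differently.

```python
import pickle


def save_value(value, path):
    """Serialize a single Python value (e.g. an image height) to disk."""
    with open(path, "wb") as fh:
        pickle.dump(value, fh)


def load_value(path):
    """Load a value previously written by save_value."""
    with open(path, "rb") as fh:
        return pickle.load(fh)


# Example calls (150 is a placeholder, not the size used in this project):
# save_value(150, "ckpt_2_CNN_with_augm/img_height.pkl")
# img_height = load_value("ckpt_2_CNN_with_augm/img_height.pkl")
```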

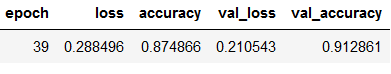

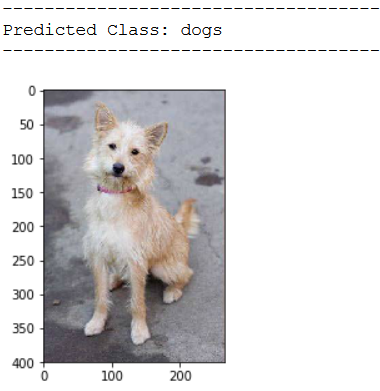

The best model achieved a validation accuracy of over 91%.
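A figure like this can be read off the training history. Keras exposes per-epoch metrics as a dictionary of lists (`History.history`); the sketch below finds the best epoch in such a dictionary and writes it to a CSV file similar to the `history_df_*.csv` files above. The helper names are hypothetical, the metric key `val_accuracy` is an assumption (older Keras versions use `val_acc`), and the numbers are made up for illustration.

```python
import csv


def best_epoch(history, metric="val_accuracy"):
    """Return (1-based epoch, value) for the highest value of `metric`."""
    values = history[metric]
    idx = max(range(len(values)), key=values.__getitem__)
    return idx + 1, values[idx]


def save_history_csv(history, path):
    """Write one row per epoch and one column per metric."""
    names = list(history)
    with open(path, "w", newline="") as fh:
        writer = csv.writer(fh)
        writer.writerow(["epoch", *names])
        rows = zip(*(history[n] for n in names))
        for epoch, row in enumerate(rows, start=1):
            writer.writerow([epoch, *row])


# Made-up values for illustration only, not results from this project.
toy_history = {
    "accuracy": [0.60, 0.72, 0.80],
    "val_accuracy": [0.58, 0.75, 0.71],
}
print(best_epoch(toy_history))  # (2, 0.75)
```

In the toy data the validation accuracy peaks in epoch 2 and drops afterwards, which is the situation where keeping only the best checkpoint pays off.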

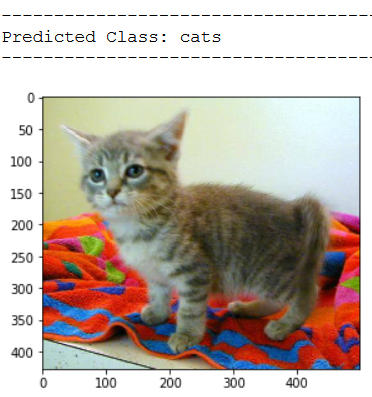

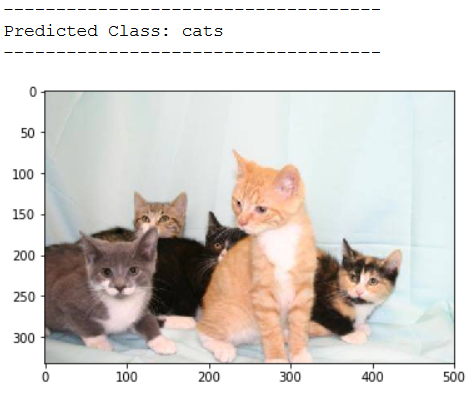

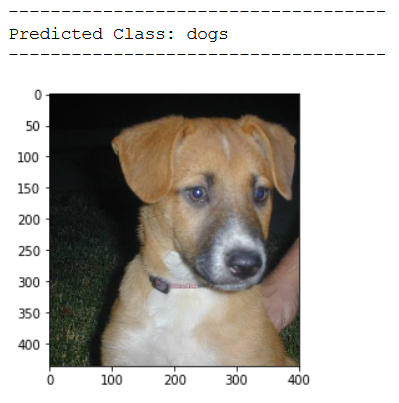

Here are a few more test predictions:

Here is the link to my blog post: Computer Vision - Convolutional Neural Network.

If this repository/publication helped you, you are welcome to read other blog posts I wrote on the topic of Computer Vision:

- Classification of Dog-Breeds using a pre-trained CNN model

- CNN with TFL and Fine-Tuning for Multi-Label Classification

- CNN with TFL and Fine-Tuning

- CNN with Transfer Learning for Multi-Label Classification

- CNN with Transfer Learning

- CNN for Multi-Label Classification

- Automate The Boring Stuff

I've been blogging since 2018 on my homepages about all sorts of topics related to Machine Learning, Data Analytics, Data Science and much more. You are welcome to visit them:

I also publish individual interesting sections from my publications in separate repositories to make their access even easier.

The content of the entire repository was created using the following sources:

Chollet, F. (2018). Deep learning with Python (Vol. 361). New York: Manning.