This repository is a landing page for the article: Szymon Maksymiuk, Alicja Gosiewska, and Przemysław Biecek. Landscape of R packages for eXplainable Artificial Intelligence. It contains the data and code necessary to reproduce all analyses presented in the paper.

Find a short introduction to this work in this talk presented at WhyR 2020.

The growing availability of data and computing power fuels the development of predictive models. To ensure that such models function safely and effectively, we need methods for exploration, debugging, and validation. New methods and tools for this purpose are being developed within the eXplainable Artificial Intelligence (XAI) subdomain of machine learning. In this work, (1) we present a taxonomy of methods for model explanations, (2) we identify and compare 27 packages available in R for XAI analysis, (3) we present example applications of particular packages, and (4) we discuss recent trends in XAI. The article is primarily devoted to the tools available in R, but since Python code is easy to integrate, we also show examples for the most popular Python libraries.

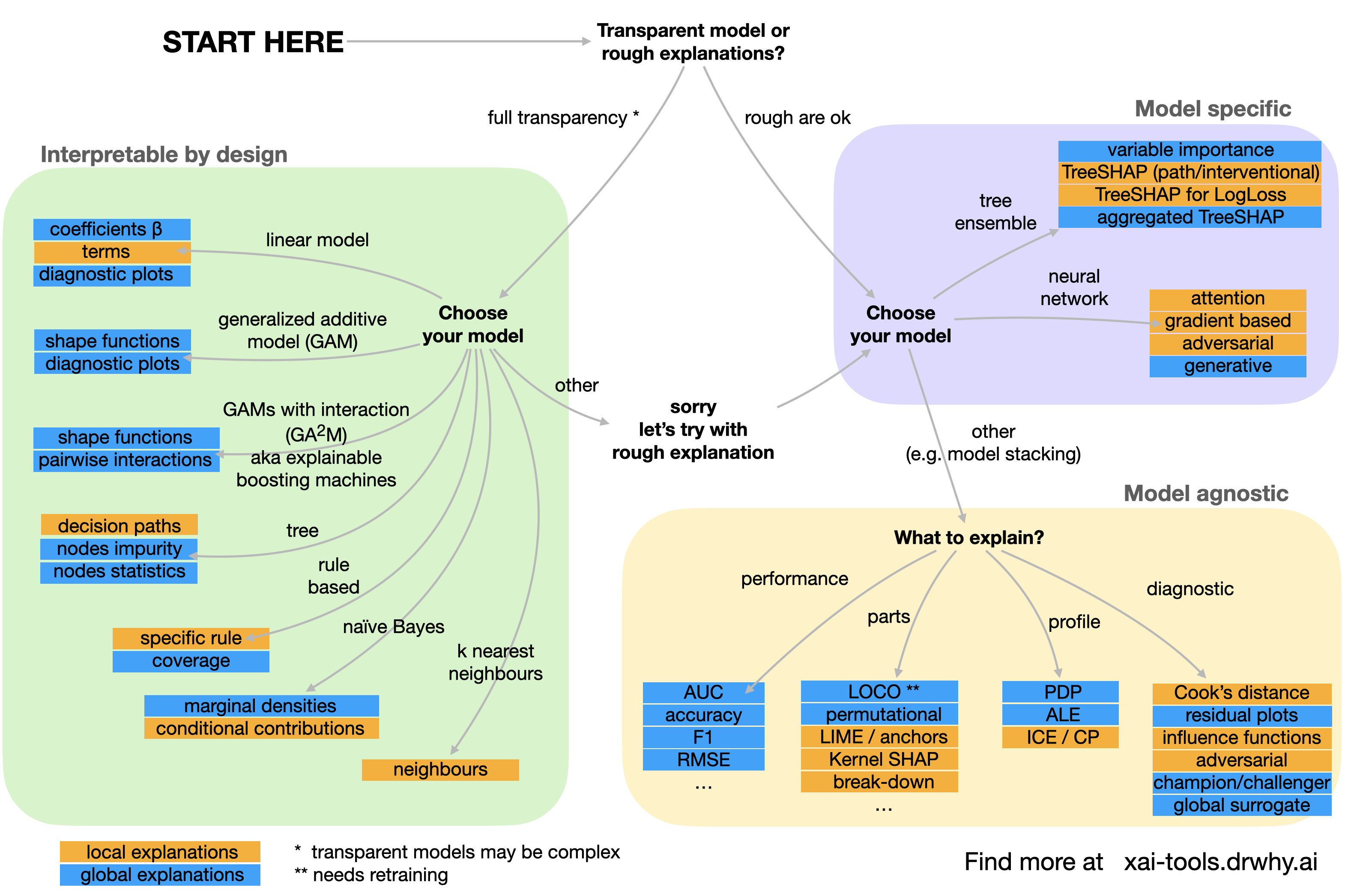

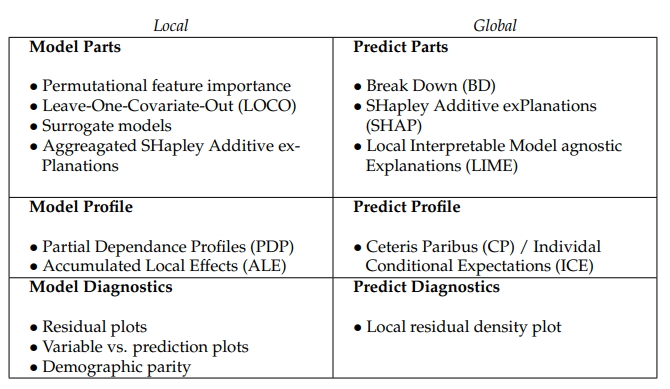

An important part of our article is a discussion of taxonomies for explanations of predictive machine learning models. We divide them into three branches, i.e., models interpretable by design, model-specific explanations, and model-agnostic explanations. A visualization of this division is shown in the accompanying figure.

Furthermore, model-specific and model-agnostic explanations were subdivided according to the XAI taxonomy we propose as a new way to categorize predictive model explanations.

While working on the article, we created a markdown document with a usage example for every compared package. The packages are listed below. As a teaser, figures used in the article are also presented.

ALEplot

arules

auditor

ctree

DALEX

DALEXtra

EIX

ExplainPrediction

fairness

fastshap

flashlight

forestmodel

fscaret

glmnet

iBreakDown

ICEbox

iml

ingredients

kknn

naivebayes

lime

live

mcr

modelDown

modelStudio

pdp

randomForestExplainer

shapper

smbinning

survxai

vip

vivo

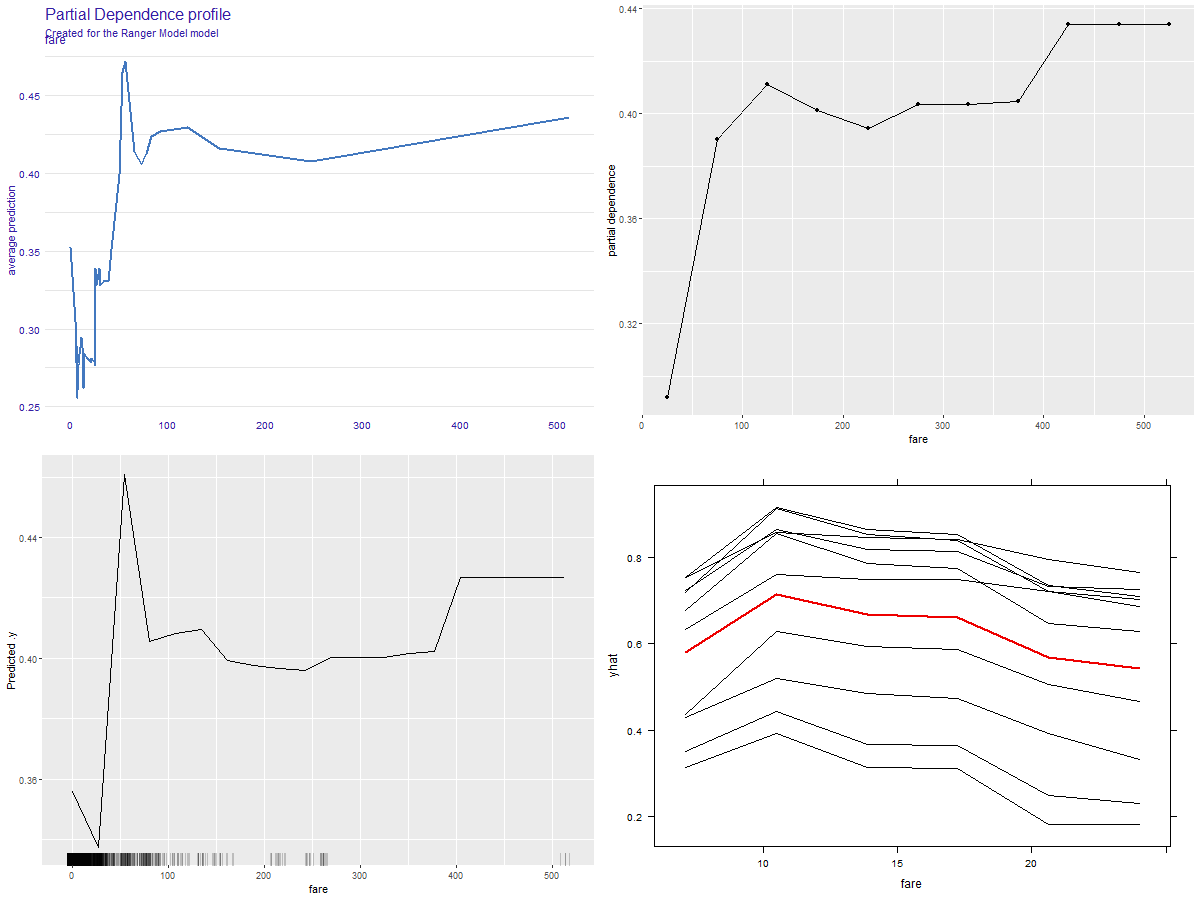

Partial Dependence Profiles for the fare variable from the Titanic data set, generated with DALEX (top-left), flashlight (top-right), iml (bottom-left), and pdp (bottom-right). The profiles differ because each package evaluates them on a different grid of points.
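The effect of the grid can be reproduced without any of the packages. The following Python sketch computes a partial dependence profile by hand, as the average prediction after fixing one feature at each grid value, and evaluates it on two grids: equally spaced points and quantiles of the observed values. The model, the data, and the helper names (`toy_model`, `uniform_grid`, `quantile_grid`) are hypothetical and chosen only for illustration; we do not claim that any of the compared packages uses either grid.

```python
from statistics import mean, quantiles

# Made-up rows (illustrative only, NOT the Titanic data): "fare" is
# right-skewed, with one very large value.
data = [
    {"fare": 7.0, "age": 20}, {"fare": 8.0, "age": 30},
    {"fare": 10.0, "age": 40}, {"fare": 15.0, "age": 50},
    {"fare": 26.0, "age": 20}, {"fare": 40.0, "age": 30},
    {"fare": 80.0, "age": 40}, {"fare": 500.0, "age": 50},
]


def toy_model(row):
    # Hypothetical stand-in for a fitted model: the prediction jumps
    # by 0.5 once fare exceeds 30 and decreases slightly with age.
    return 0.2 + (0.5 if row["fare"] > 30 else 0.0) - 0.002 * row["age"]


def partial_dependence(model, rows, feature, grid):
    """PD(z): mean prediction after setting `feature` to z in every row."""
    return [(z, mean([model({**row, feature: z}) for row in rows])) for z in grid]


def uniform_grid(values, n):
    # n equally spaced points from the smallest to the largest observed value
    lo, hi = min(values), max(values)
    step = (hi - lo) / (n - 1)
    return [lo + i * step for i in range(n)]


def quantile_grid(values, n):
    # n interior quantiles of the observed values, so grid points
    # concentrate where the data are dense
    return quantiles(values, n=n + 1)


fares = [row["fare"] for row in data]
uniform_profile = partial_dependence(toy_model, data, "fare", uniform_grid(fares, 5))
quantile_profile = partial_dependence(toy_model, data, "fare", quantile_grid(fares, 5))

for name, profile in [("uniform", uniform_profile), ("quantile", quantile_profile)]:
    print(name, [(round(z, 2), round(pd, 2)) for z, pd in profile])
```

With a right-skewed variable such as fare, the equally spaced grid places only its first point below the jump at 30, so the profile shows a single step somewhere between 7 and 130. The quantile grid spends most of its points in the dense lower range and locates the jump between about 20 and 40. Same model, same data, visibly different curves.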

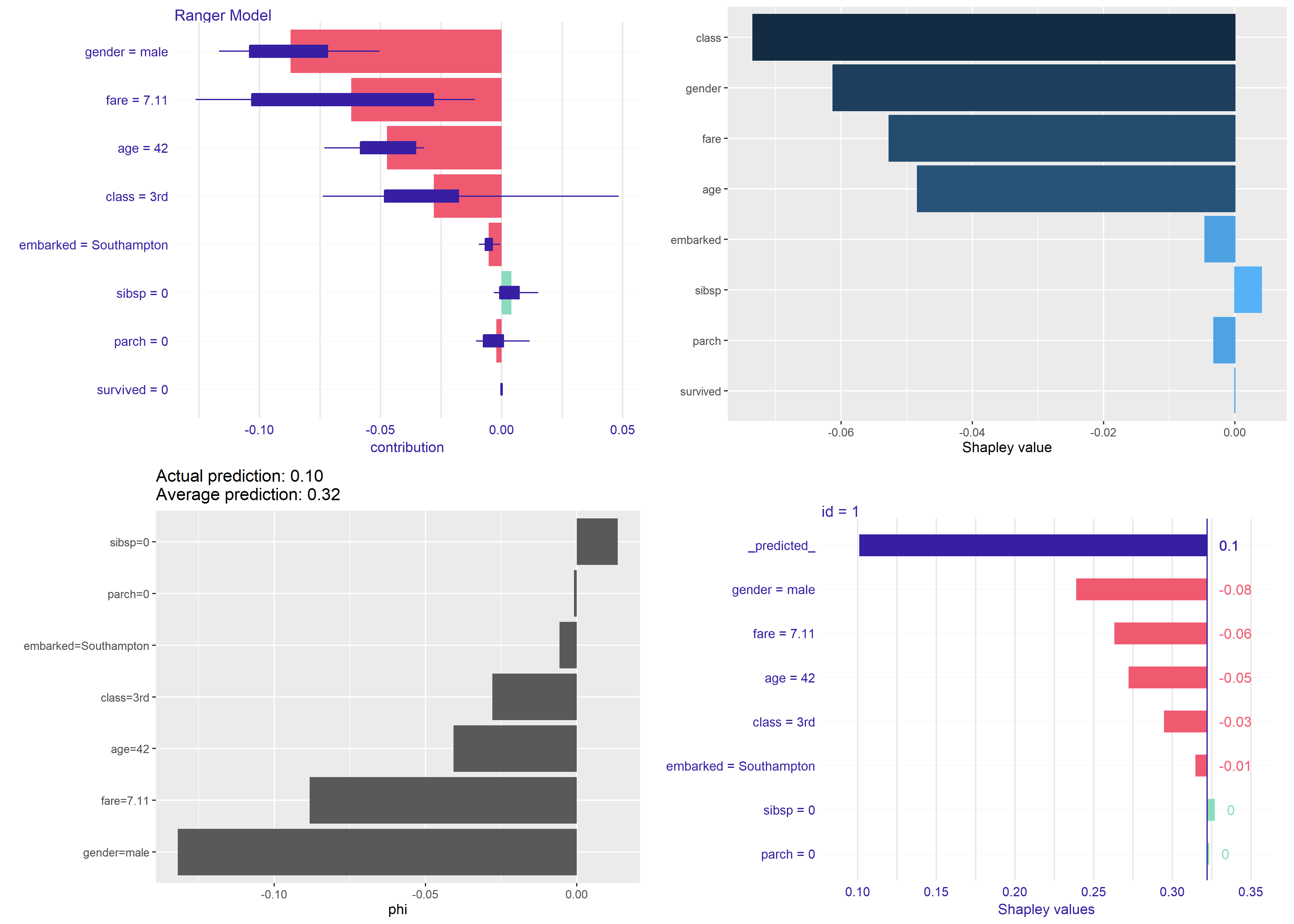

Contribution plots based on Shapley values for the same observation from the Titanic data set, generated with DALEX (top-left), flashlight (top-right), iml (bottom-left), and pdp (bottom-right).
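For intuition about what such contribution plots estimate, here is a minimal Python sketch that computes exact Shapley values for a single observation of a toy three-feature model. It enumerates every coalition of features and fills in absent features from a small background sample (an "interventional" value function). The model, the background rows, and the observation are hypothetical. Practical implementations generally rely on approximations instead, because exact enumeration grows exponentially with the number of features; differences in the estimator and in the background data are plausible reasons why the plots above do not match exactly.

```python
from itertools import combinations
from math import factorial
from statistics import mean

# Made-up background rows and observation (illustrative only).
background = [
    {"fare": 7.0, "age": 20, "female": 0},
    {"fare": 80.0, "age": 40, "female": 1},
    {"fare": 15.0, "age": 50, "female": 0},
    {"fare": 26.0, "age": 30, "female": 1},
]
observation = {"fare": 80.0, "age": 30, "female": 1}
features = list(observation)


def toy_model(row):
    # Hypothetical model: additive terms plus a female-by-high-fare interaction
    bonus = 0.1 * row["female"] if row["fare"] > 30 else 0.0
    return 0.1 + 0.4 * row["female"] + 0.002 * row["fare"] - 0.003 * row["age"] + bonus


def value(coalition):
    # v(S): average prediction when the features in S take the observation's
    # values and all other features keep each background row's values
    fixed = {f: observation[f] for f in coalition}
    return mean([toy_model({**row, **fixed}) for row in background])


def shapley_values():
    # phi_j = sum over S not containing j of |S|!(n-|S|-1)!/n! * (v(S+j) - v(S))
    n = len(features)
    phi = {}
    for j in features:
        others = [f for f in features if f != j]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                total += weight * (value(subset + (j,)) - value(subset))
        phi[j] = total
    return phi


phi = shapley_values()
baseline = mean([toy_model(row) for row in background])
prediction = toy_model(observation)

for name, contribution in phi.items():
    print(f"{name:>6}: {contribution:+.4f}")
# Efficiency property: contributions add up to prediction minus baseline
print("sum matches:", abs(sum(phi.values()) - (prediction - baseline)) < 1e-9)
```

Because age enters the toy model additively, its contribution reduces to the coefficient times the gap between the observed age and the background mean, while the interaction term is shared between fare and female.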

Versions of all packages used are stored in the renv.lock file and can be restored with the renv package.

We did our best to show the entire range of implemented explanations. Please note that the examples may be incomplete. If you think something is missing, feel free to open a pull request in the XAI-tools repository.

A preprint of this work is available at https://arxiv.org/abs/2009.13248.

To cite our work, please use the following BibTeX entry:

@article{xailandscape,
  title={Landscape of R packages for eXplainable Artificial Intelligence},
  author={Szymon Maksymiuk and Alicja Gosiewska and Przemyslaw Biecek},
  year={2020},
  journal={arXiv},
  eprint={2009.13248},
  archivePrefix={arXiv},
  primaryClass={cs.LG},
  URL={https://arxiv.org/abs/2009.13248}
}