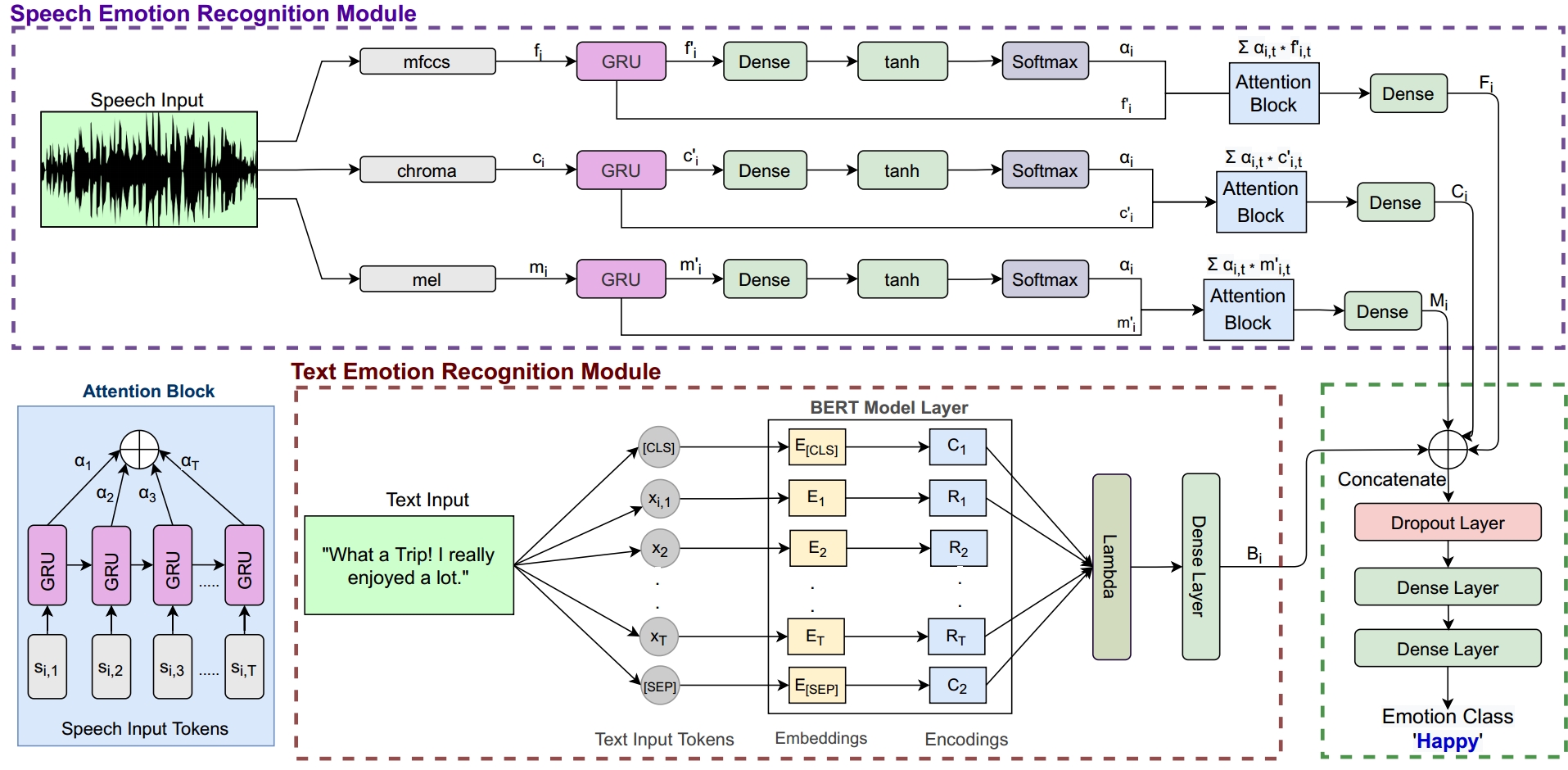

Towards the Explainability of Multimodal Speech Emotion Recognition

Implementation of the paper (Interspeech 2021). The paper has been accepted; its full text will be shared after publication.

Puneet Kumar, Vishesh Kaushik, and Balasubramanian Raman

Setup and Dependencies

- Install the Anaconda or Miniconda distribution and create a conda environment with Python 3.6+.

- Install the requirements using the following command:

pip install -r Requirements.txt

- Download glove.6B.zip, unzip it, and keep the contents in the glove.6B folder.

- Download the required datasets.
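Once glove.6B.zip is unzipped, the embeddings are plain text files with one token per line followed by its vector components. The snippet below is a minimal sketch of reading such a file with the standard library only. The helper name `load_glove` and the choice of `glove.6B.100d.txt` are assumptions for illustration; the notebooks in this repository may load a different dimension or use their own loader.

```python
from pathlib import Path
from typing import Dict, List


def load_glove(path: Path) -> Dict[str, List[float]]:
    """Parse a GloVe text file: each line is a token followed by its vector."""
    embeddings: Dict[str, List[float]] = {}
    with open(path, encoding="utf-8") as handle:
        for line in handle:
            parts = line.rstrip().split(" ")
            if len(parts) < 2:
                continue  # skip blank or malformed lines
            embeddings[parts[0]] = [float(value) for value in parts[1:]]
    return embeddings


if __name__ == "__main__":
    # Assumed file; the archive also ships other dimensions.
    glove_file = Path("glove.6B") / "glove.6B.100d.txt"
    if glove_file.exists():
        vectors = load_glove(glove_file)
        dim = len(next(iter(vectors.values())))
        print(f"Loaded {len(vectors)} vectors of dimension {dim}")
    else:
        print(f"{glove_file} not found; download and unzip glove.6B.zip first")
```

Running the script from the repository root is a quick way to confirm that the glove.6B folder is in the expected place before starting the preprocessing step.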

Steps to run the Code

- For IEMOCAP Dataset:

Run Data_Preprocess(IEMOCAP).ipynb in Jupyter Notebook, then

Run Training(IEMOCAP).ipynb in Jupyter Notebook, then

Run Analysis(IEMOCAP).ipynb in Jupyter Notebook.

OR

Run main_IEMOCAP.py in the terminal/command-line using the following command:

python main_IEMOCAP.py --epoch=100

- For MSP-IMPROV Dataset:

Run Data_Preprocess(IMPROV).ipynb in Jupyter Notebook, then

Run Training(IMPROV).ipynb in Jupyter Notebook, then

Run Analysis(IMPROV).ipynb in Jupyter Notebook.

OR

Run main_IMPROV.py in the terminal/command-line using the following command:

python main_IMPROV.py --epoch=100

- For RAVDESS Dataset:

Run Data_Preprocess(RAVDESS).ipynb in Jupyter Notebook, then

Run Training(RAVDESS).ipynb in Jupyter Notebook, then

Run Analysis(RAVDESS).ipynb in Jupyter Notebook.

OR

Run main_RAVDESS.py in the terminal/command-line using the following command:

python main_RAVDESS.py --epoch=100

Saving Model Checkpoints

By default, the code saves the model checkpoints in the log-1 folder.
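After a training run, it can be handy to see what was written to the log-1 folder and which file is the most recent. The following is a minimal sketch using only the standard library. The helper names `list_files_by_mtime` and `latest_file` are hypothetical, and since the checkpoint file naming is not stated here, the sketch lists every file rather than filtering by extension.

```python
from pathlib import Path
from typing import List, Optional


def list_files_by_mtime(folder: Path) -> List[Path]:
    """Return all regular files under `folder` (recursively), oldest first."""
    files = [p for p in folder.rglob("*") if p.is_file()]
    return sorted(files, key=lambda p: p.stat().st_mtime)


def latest_file(folder: Path) -> Optional[Path]:
    """Return the most recently modified file, or None if the folder is empty."""
    files = list_files_by_mtime(folder)
    return files[-1] if files else None


if __name__ == "__main__":
    log_dir = Path("log-1")  # default folder name stated in this README
    if log_dir.is_dir():
        for path in list_files_by_mtime(log_dir):
            print(path)
        print("Most recent:", latest_file(log_dir))
    else:
        print("log-1 not found; run one of the training steps first")
```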

TensorBoard Logging

The TensorBoard log files are saved in the log-1 folder. They can be viewed using the following command:

tensorboard --logdir "log-1"