Code for the paper "Deep Metric and Hash-Code Learning for Content-Based Retrieval of Remote Sensing Images", accepted at the IEEE International Geoscience and Remote Sensing Symposium (IGARSS), held in Valencia, Spain, in July 2018,

and for its journal extension, "Metric-Learning-Based Deep Hashing Network for Content-Based Retrieval of Remote Sensing Images", accepted at IEEE Geoscience and Remote Sensing Letters (GRSL).

- Python 2.7

- Tensorflow GPU 1.2.0

- Scipy 1.1.0

- Pillow 5.1.0

N.B. The code has only been tested with Python 2.7 and Tensorflow GPU 1.2.0. Higher versions of the software have not been tested.

In our paper we have experimented with two remote sensing benchmark archives - UC Merced Data Set (UCMD) and AID.

First, download the UCMD or the AID dataset and save it to disk. For UCMD, the parent folder will contain 21 sub-folders, one per category, each with 100 images. For AID, the parent folder will contain 30 sub-folders holding 10,000 images in total.
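
Before extracting features, it can help to confirm that the downloaded archive matches the layout described above. The following is a minimal sketch, not part of this repository: the function names and the list of image extensions are assumptions made for illustration.

```python
import os

# Assumed set of image extensions; adjust to match the downloaded archive.
IMAGE_EXTENSIONS = (".tif", ".tiff", ".jpg", ".jpeg", ".png")


def count_images_per_class(parent_dir):
    """Return {class_name: image_count} for every sub-folder of parent_dir."""
    counts = {}
    for name in sorted(os.listdir(parent_dir)):
        class_dir = os.path.join(parent_dir, name)
        if not os.path.isdir(class_dir):
            continue  # skip stray files in the parent folder
        counts[name] = sum(
            1 for f in os.listdir(class_dir)
            if f.lower().endswith(IMAGE_EXTENSIONS)
        )
    return counts


def check_layout(parent_dir, expected_classes, expected_total):
    """True if the folder has the expected number of classes and images."""
    counts = count_images_per_class(parent_dir)
    return (len(counts) == expected_classes
            and sum(counts.values()) == expected_total)
```

For UCMD one would call `check_layout(path, 21, 2100)` (21 sub-folders of 100 images each), and for AID `check_layout(path, 30, 10000)`.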

N.B.: Code for each data set is provided in a separate folder.

Next, download the pre-trained InceptionV3 Tensorflow model from this link.

To extract the feature representations from a pre-trained model:

$ python extract_features.py \
    --model_dir=your/localpath/to/models \
    --images_dir=your/localpath/to/images/parentfolder \
    --dump_dir=dump_dir/

To prepare the training and the testing set:

$ python dataset_generator.py --train_test_split=0.6
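
To illustrate what a `train_test_split` of 0.6 means, here is a minimal sketch of a per-class split that sends 60% of each category to training and the rest to testing. It is not the code in `dataset_generator.py`: whether the script splits per class or over the whole archive is not stated here, and the function name, the `seed` parameter, and the input format are assumptions.

```python
import random


def split_per_class(paths_by_class, train_fraction=0.6, seed=0):
    """Split each class separately into train and test lists.

    paths_by_class: dict mapping a class label to a list of image paths
    (hypothetical input format). Returns two lists of (path, label) pairs.
    """
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train, test = [], []
    for label in sorted(paths_by_class):
        paths = sorted(paths_by_class[label])
        rng.shuffle(paths)
        cut = int(round(train_fraction * len(paths)))
        train += [(p, label) for p in paths[:cut]]
        test += [(p, label) for p in paths[cut:]]
    return train, test
```

Under this assumption, a UCMD category of 100 images would contribute 60 training and 40 test images.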

To train the network, using the same train_test_split value as above:

$ python trainer.py \
    --HASH_BITS=32 --ALPHA=0.2 --BATCH_SIZE=90 \
    --ITERS=10000 --train_test_split=0.6
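
The role of `ALPHA` is not described in this README. Assuming it is the margin of a standard triplet loss, as is common in metric learning, the sketch below shows how such a margin enters the loss for a single triplet. This is an illustration under that assumption, not the loss implemented in `trainer.py`, and the function names are hypothetical.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def triplet_loss(anchor, positive, negative, alpha=0.2):
    """Standard triplet loss for one (anchor, positive, negative) triplet.

    The loss is zero once the negative is farther from the anchor than
    the positive by at least the margin alpha (assumed meaning of ALPHA).
    """
    gap = squared_distance(anchor, positive) - squared_distance(anchor, negative)
    return max(0.0, gap + alpha)
```

With this form, a larger margin demands a wider separation between same-class and different-class embeddings before a triplet stops contributing to the loss.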

To evaluate the performance and save the retrieved samples:

$ python eval.py --k=20 --interval=10
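
As a rough picture of retrieval with binary hash codes, the sketch below ranks database items by Hamming distance to a query code and computes precision over the top `k` results. It assumes that `--k` is the number of retrieved images per query; the metric and the ranking used by `eval.py` are not specified here, the `--interval` flag is not modelled, and all function names are hypothetical.

```python
def hamming_distance(code_a, code_b):
    """Number of positions at which two equal-length bit codes differ."""
    return sum(1 for a, b in zip(code_a, code_b) if a != b)


def retrieve_top_k(query_code, database_codes, k=20):
    """Indices of the k database codes closest to the query.

    sorted() is stable, so ties keep their database order.
    """
    order = sorted(
        range(len(database_codes)),
        key=lambda i: hamming_distance(query_code, database_codes[i]),
    )
    return order[:k]


def precision_at_k(query_label, retrieved_labels):
    """Fraction of retrieved items that share the query's label."""
    if not retrieved_labels:
        return 0.0
    hits = sum(1 for label in retrieved_labels if label == query_label)
    return hits / float(len(retrieved_labels))
```

A typical use would be to call `retrieve_top_k` for each test code against the training codes, look up the labels of the returned indices, and average `precision_at_k` over all queries.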

Train and test splits for UCMD are provided here

If you find this code useful for your research, please cite our IGARSS paper or our GRSL paper:

@inproceedings{roy2018deep,
  title={Deep metric and hash-code learning for content-based retrieval of remote sensing images},
  author={Roy, Subhankar and Sangineto, Enver and Demir, Beg{\"u}m and Sebe, Nicu},
  booktitle={IGARSS 2018-2018 IEEE International Geoscience and Remote Sensing Symposium},
  pages={4539--4542},
  year={2018},
  organization={IEEE}
}

@article{roy2019metric,
  title={Metric-learning based deep hashing network for content based retrieval of remote sensing images},
  author={Roy, Subhankar and Sangineto, Enver and Demir, Beg{\"u}m and Sebe, Nicu},
  journal={arXiv preprint arXiv:1904.01258},
  year={2019}
}

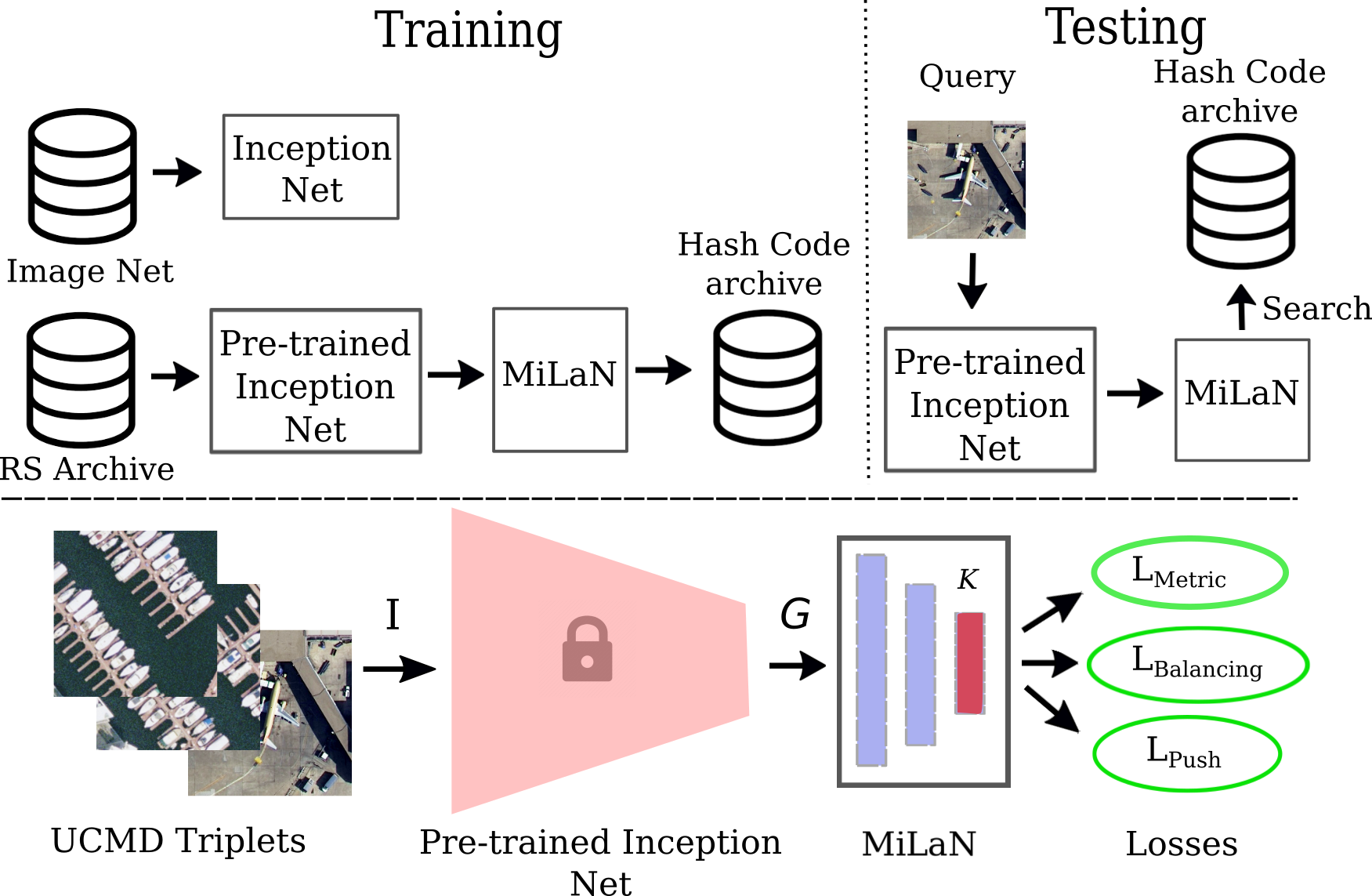

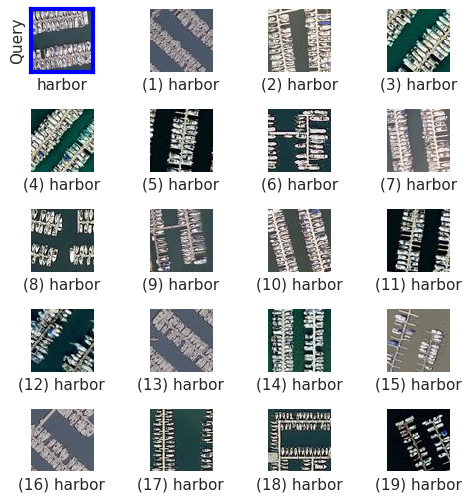

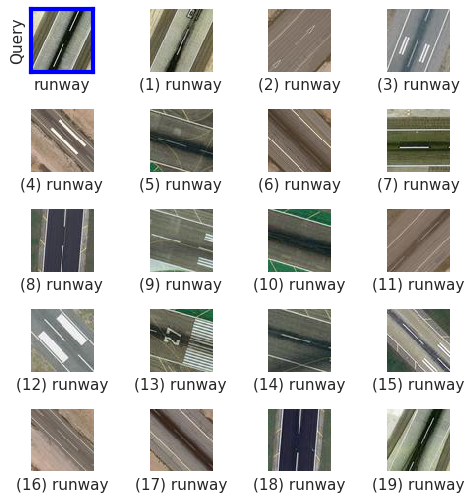

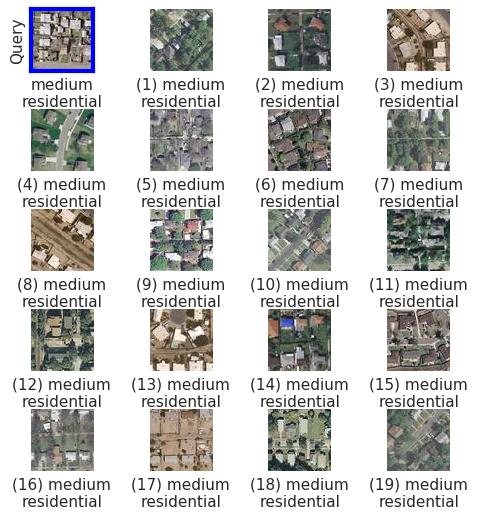

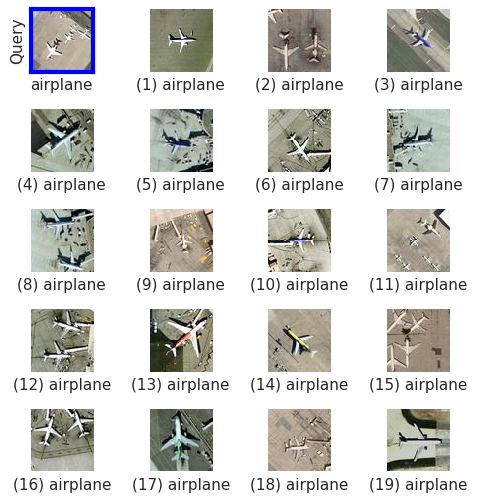

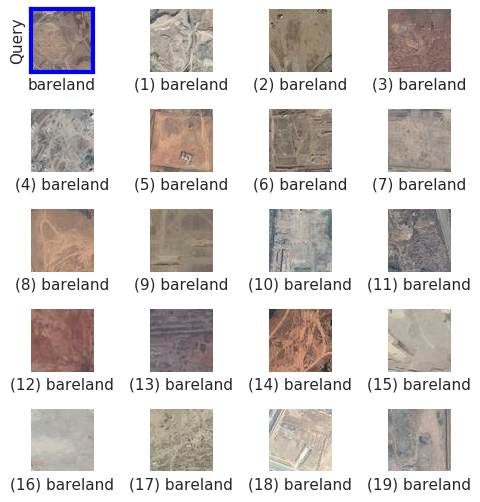

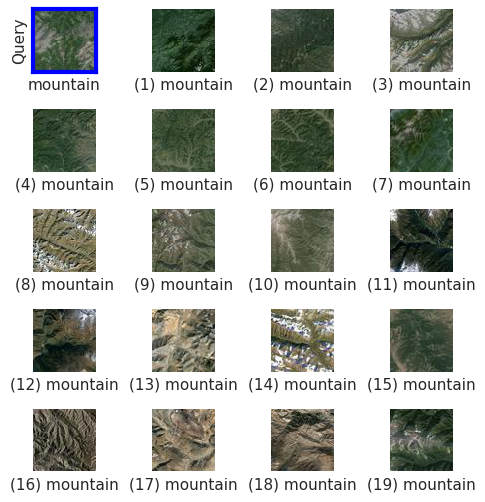

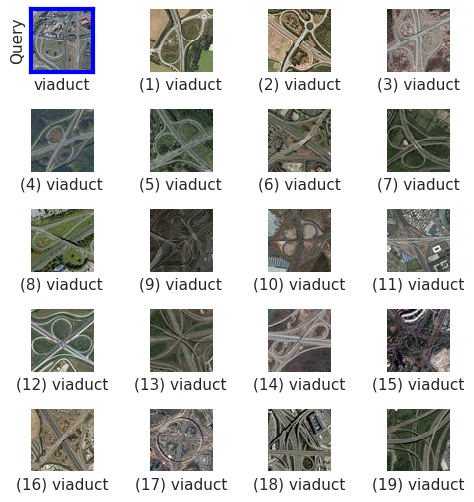

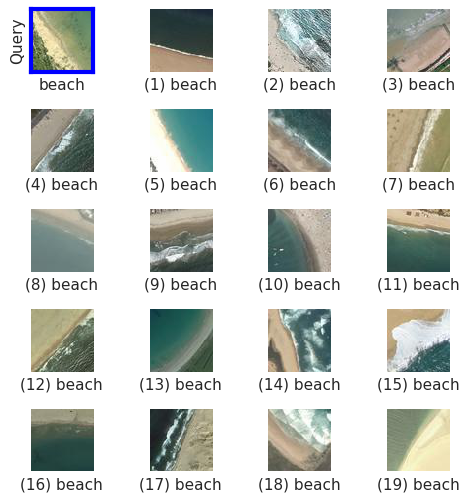

Retrieval results for some sample query images. Each query is enclosed in a blue box. The retrieved images are sorted in decreasing order of semantic similarity to the query image. The top 19 results are displayed.

Query categories shown: Harbour, Runway, Medium Residential, Airplane, Bareland, Mountains, Viaduct, Beach.