Text Generation with Efficient (Soft) Q-Learning

Han Guo, Bowen Tan, Zhengzhong Liu, Eric P. Xing, Zhiting Hu

Please see requirements.txt and Dockerfile for detailed dependencies. The major ones include

- `python` 3.8 or later (for type annotations and f-strings)
- `pytorch==1.8.1`
- `transformers==4.5.1`

Note: if you ever encounter issues regarding hydra, consider downgrading it.

To build the Docker image, run the following script.

```bash
DOCKER_BUILDKIT=1 docker build \
    -t ${TAG} \
    -f Dockerfile .
```

- Install the master branch of `texar` (and a few other dependencies) via `bash scripts/install_dependencies.sh`.
- Install GEM-metrics. We use the version at commit `2693f3439547a40897bc30c2ab70e27e992883c0`. Note that some dependencies might override the `transformers` version.

- Most of the data are available at https://huggingface.co/datasets.
- We use `nltk==3.5` in data preprocessing.

```bash
python run_experiments.py \
    translation.task_name="entailment.snli_entailment_1_sampled" \
    translation.training_mode="sql-mixed" \
    translation.save_dir=${USER_SPECIFIED_SAVE_DIR} \
    translation.num_epochs=101 \
    translation.top_k=50 \
    translation.reward_shaping_min=-50 \
    translation.reward_shaping_max=50 \
    translation.reward_name="entailment3" \
    translation.warmup_training_mode="sql-offpolicy" \
    translation.warmup_num_epochs=5
```

Details

- Maximum decoding length set to `10`
- Decoder position embedding length set to `65`
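The `reward_shaping_min` and `reward_shaping_max` options suggest that raw rewards are rescaled into the range [-50, 50] before training. The following is a minimal sketch of such a linear rescaling, not the repository's code. It assumes raw rewards lie in [0, 100], which this README does not confirm; the function name `shape_reward` and its `old_min`/`old_max` parameters are hypothetical.

```python
def shape_reward(reward, old_min=0.0, old_max=100.0, new_min=-50.0, new_max=50.0):
    """Linearly map a reward from [old_min, old_max] to [new_min, new_max].

    The raw range [0, 100] is an assumption made for illustration only.
    """
    if old_max <= old_min:
        raise ValueError("old_max must be greater than old_min")
    # Position of the reward inside the old range, as a fraction.
    fraction = (reward - old_min) / (old_max - old_min)
    # Same relative position inside the new range.
    return new_min + fraction * (new_max - new_min)


if __name__ == "__main__":
    for raw in (0.0, 25.0, 100.0):
        print(raw, "->", shape_reward(raw))
```

Under these assumptions a midpoint raw reward maps to zero, so below-average outputs receive negative rewards and above-average outputs receive positive ones.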

```bash
python run_experiments.py \
    translation.task_name="attack.mnli" \
    translation.training_mode="sql-mixed" \
    translation.save_dir=${USER_SPECIFIED_SAVE_DIR} \
    translation.num_epochs=51 \
    translation.top_k=50 \
    translation.num_batches_per_epoch=1000 \
    translation.reward_shaping_min=-50 \
    translation.reward_shaping_max=50 \
    translation.reward_name="entailment2"
```

Details

- Decoder position embedding length set to `75` (MNLI)
- Change `rewards = (rewards + 10 * nll_reward + 100) / 2`
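To make the arithmetic of the reward expression above concrete, here is a small sketch that applies it to scalar values. The names `rewards` and `nll_reward` come from the expression itself; the wrapper `combine_rewards` and the example numbers are hypothetical, and the actual code most likely operates on tensors rather than Python floats.

```python
def combine_rewards(rewards, nll_reward):
    """Apply the expression from the Details list to scalar inputs.

    `rewards` is the task reward and `nll_reward` a likelihood-based term;
    their exact scales are not specified in this README.
    """
    return (rewards + 10 * nll_reward + 100) / 2


if __name__ == "__main__":
    # Hypothetical values chosen only to illustrate the arithmetic.
    print(combine_rewards(80.0, -2.0))
```

With a task reward of 80 and an `nll_reward` of -2, the result is (80 - 20 + 100) / 2 = 80.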

```bash
python run_experiments.py \
    translation.task_name="prompt.gpt2_mixed" \
    translation.training_mode="sql-mixed" \
    translation.save_dir=${USER_SPECIFIED_SAVE_DIR} \
    translation.num_epochs=501 \
    translation.num_batches_per_epoch=100 \
    translation.reward_shaping_min=-50 \
    translation.reward_shaping_max=50 \
    translation.top_k=50 \
    translation.reward_name="gpt2-topic" \
    translation.warmup_training_mode="sql-offpolicy" \
    translation.warmup_num_epochs=100
```

Details

- For different token lengths, remember to change `max_length`.
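The commands above set `translation.top_k=50`. Assuming this option denotes the usual top-k truncation of the decoder's output distribution (the README does not spell this out), the idea can be sketched in plain Python as follows. The helper names are hypothetical and the repository's implementation will differ.

```python
import math
import random


def top_k_filter(logits, k):
    """Keep the k largest logits and set all others to -inf.

    Ties at the threshold are all kept, so slightly more than k entries
    may survive when logits are equal.
    """
    if k <= 0 or k >= len(logits):
        return list(logits)
    threshold = sorted(logits, reverse=True)[k - 1]
    return [x if x >= threshold else -math.inf for x in logits]


def softmax(logits):
    """Numerically stable softmax; -inf entries get probability 0."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sample_top_k(logits, k, rng=random):
    """Sample a token index from the distribution restricted to the top k."""
    probs = softmax(top_k_filter(logits, k))
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]


if __name__ == "__main__":
    toy_logits = [1.0, 3.0, 2.0, 0.5]  # hypothetical 4-token vocabulary
    print(sample_top_k(toy_logits, k=2, rng=random.Random(0)))
```

Restricting sampling to the highest-scoring tokens keeps exploration within plausible continuations, which matters when the vocabulary is large.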

```bash
python run_experiments.py \
    translation.task_name="standard.e2e" \
    translation.training_mode="sql-mixed" \
    translation.save_dir=${USER_SPECIFIED_SAVE_DIR} \
    translation.num_epochs=201 \
    translation.reward_shaping_min=-50 \
    translation.reward_shaping_max=50 \
    translation.reward_name="bleu"
```

This directory contains configurations for models as well as data. Notably, `configs/data` lists task-specific configurations such as file paths, and `configs/models` lists model configurations, all in the texar format. `configs/config.yaml` lists configurations in the hydra format. Please update the paths and other settings to match your own setup.
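Each experiment command in this README passes hydra-style `key=value` overrides under the `translation` group. When launching many runs, it can help to build these arguments programmatically. The sketch below only assembles the argument list; the helper names `build_overrides` and `build_command` and the `runs/e2e` save directory are hypothetical, and nothing here is part of the repository.

```python
import shlex


def build_overrides(options, group="translation"):
    """Turn a dict of options into hydra-style `group.key=value` strings."""
    return [f"{group}.{key}={value}" for key, value in options.items()]


def build_command(options, script="run_experiments.py"):
    """Return the full argument list, suitable for subprocess.run."""
    return ["python", script] + build_overrides(options)


if __name__ == "__main__":
    options = {
        "task_name": "standard.e2e",
        "training_mode": "sql-mixed",
        "save_dir": "runs/e2e",  # hypothetical path
        "num_epochs": 201,
        "reward_shaping_min": -50,
        "reward_shaping_max": 50,
        "reward_name": "bleu",
    }
    # shlex.join (Python 3.8+) renders a copy-pasteable shell command.
    print(shlex.join(build_command(options)))
```

Passing the list directly to `subprocess.run` avoids shell quoting issues, since each override is already a single argument.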

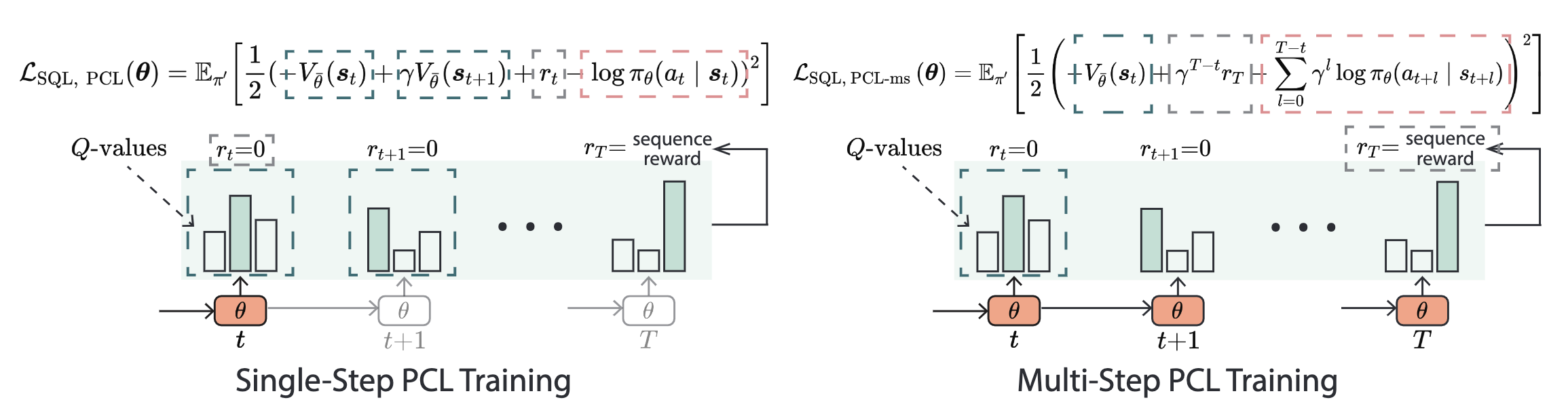

This directory contains the core components of the soft Q-learning algorithm for text generation.
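For orientation, the two basic quantities of soft Q-learning can be sketched in a few lines: the soft value V(s) = τ log Σ_a exp(Q(s, a) / τ) and the induced policy π(a | s) = exp((Q(s, a) - V(s)) / τ). The code below is a plain-Python illustration over a list of Q-values for one state. It is not the code in this directory, and the function names and the `temperature` parameter are assumptions made for the example.

```python
import math


def soft_value(q_values, temperature=1.0):
    """Soft state value: temperature * log-sum-exp of Q / temperature."""
    m = max(q_values)  # subtract the max for numerical stability
    total = sum(math.exp((q - m) / temperature) for q in q_values)
    return m + temperature * math.log(total)


def soft_policy(q_values, temperature=1.0):
    """Policy induced by the Q-values: a softmax over Q / temperature."""
    v = soft_value(q_values, temperature)
    return [math.exp((q - v) / temperature) for q in q_values]


if __name__ == "__main__":
    q = [1.0, 2.0, 3.0]  # hypothetical Q-values for three candidate tokens
    print(soft_value(q))
    print(soft_policy(q))
```

In a text generation setting, the Q-values for a state would typically be the model's per-token outputs at one decoding step, so the policy is a softmax over the vocabulary.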

This directory contains the core components of the models and GEM-metrics.