Live Demo: https://bookstore-dynamo.macrometadev.workers.dev/

Macrometa-Cloudflare Bookstore Demo App is a full-stack e-commerce web application that creates a storefront (and backend) for customers to shop for "fictitious" books.

Originally based on the AWS bookstore template app (https://github.com/aws-samples/aws-bookstore-demo-app), this demo replaces all of the AWS services listed below:

- AWS DynamoDB
- AWS Neptune (Graphs)
- AWS ElasticSearch (Search)
- AWS Lambda
- AWS Kinesis

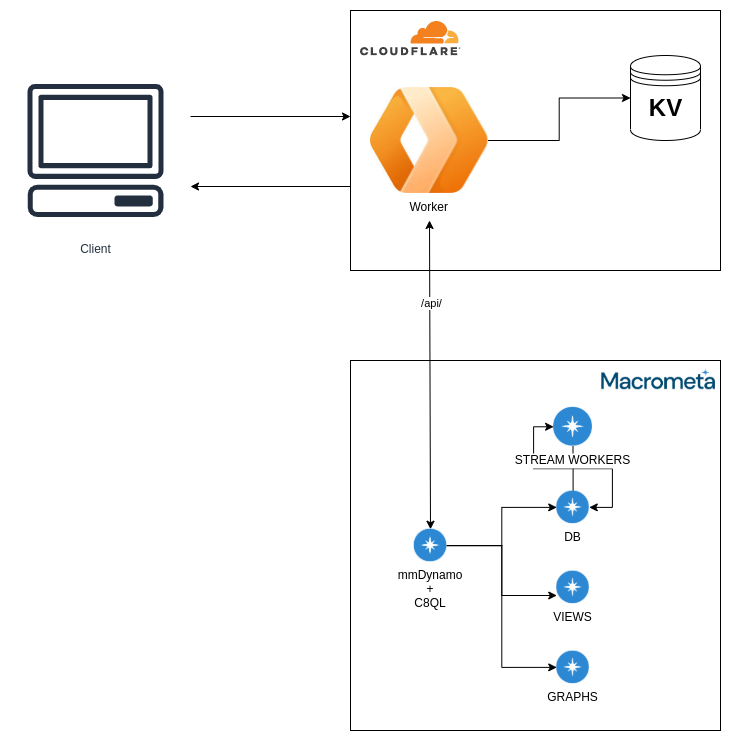

This demo uses Macrometa's geo-distributed data platform, which provides a K/V store, a DynamoDB-compatible document database, a graph database, streams, and stream processing, along with Cloudflare edge workers for globally distributed functions as a service.

Unlike typical cloud platforms such as AWS, where the backend stack runs in a single region, Macrometa and Cloudflare let you build stateful distributed microservices that run concurrently in hundreds of regions around the world. The application logic runs in Cloudflare's low-latency function-as-a-service runtime on Cloudflare PoPs and makes stateful data requests to the closest Macrometa region. End-to-end P90 latency is < 55 ms from almost everywhere in the world.

As a user of the demo, you can browse and search for books, look at recommendations and best sellers, manage your cart, checkout, view your orders, and more.

| Federation | Fabric | Email |
|---|---|---|
| Play | book_store_dynamo | demo@macrometa.io |

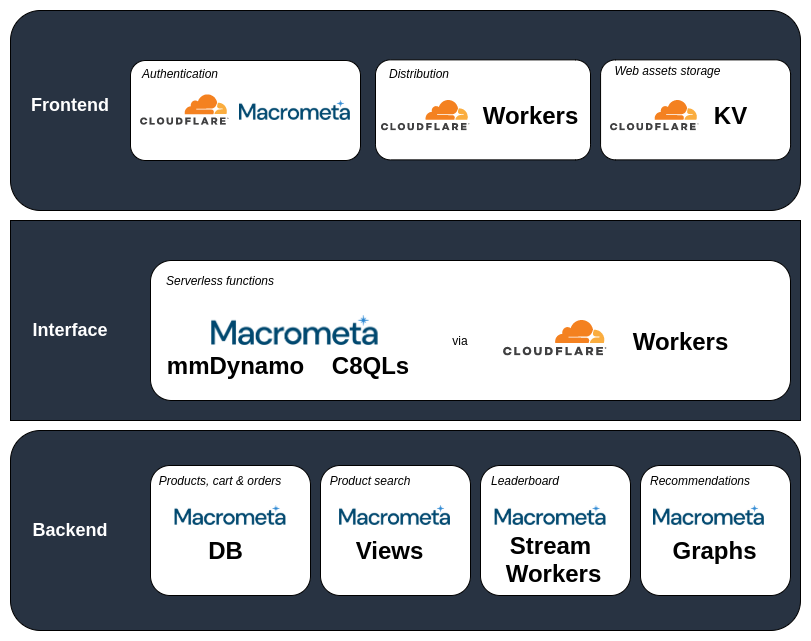

- The frontend is a Reactjs application hosted on Cloudflare.
- Web assets are stored in Cloudflare's KV store.

The core of the backend infrastructure consists of the Macrometa Document store (DB), Macrometa Edge store (DB), Macrometa Views (search), Macrometa Stream Workers, Macrometa Graphs, and Cloudflare workers. Cloudflare workers issue calls to the Macrometa dynamo driver (mmDynamo) or run C8QL queries to talk to the GDN.

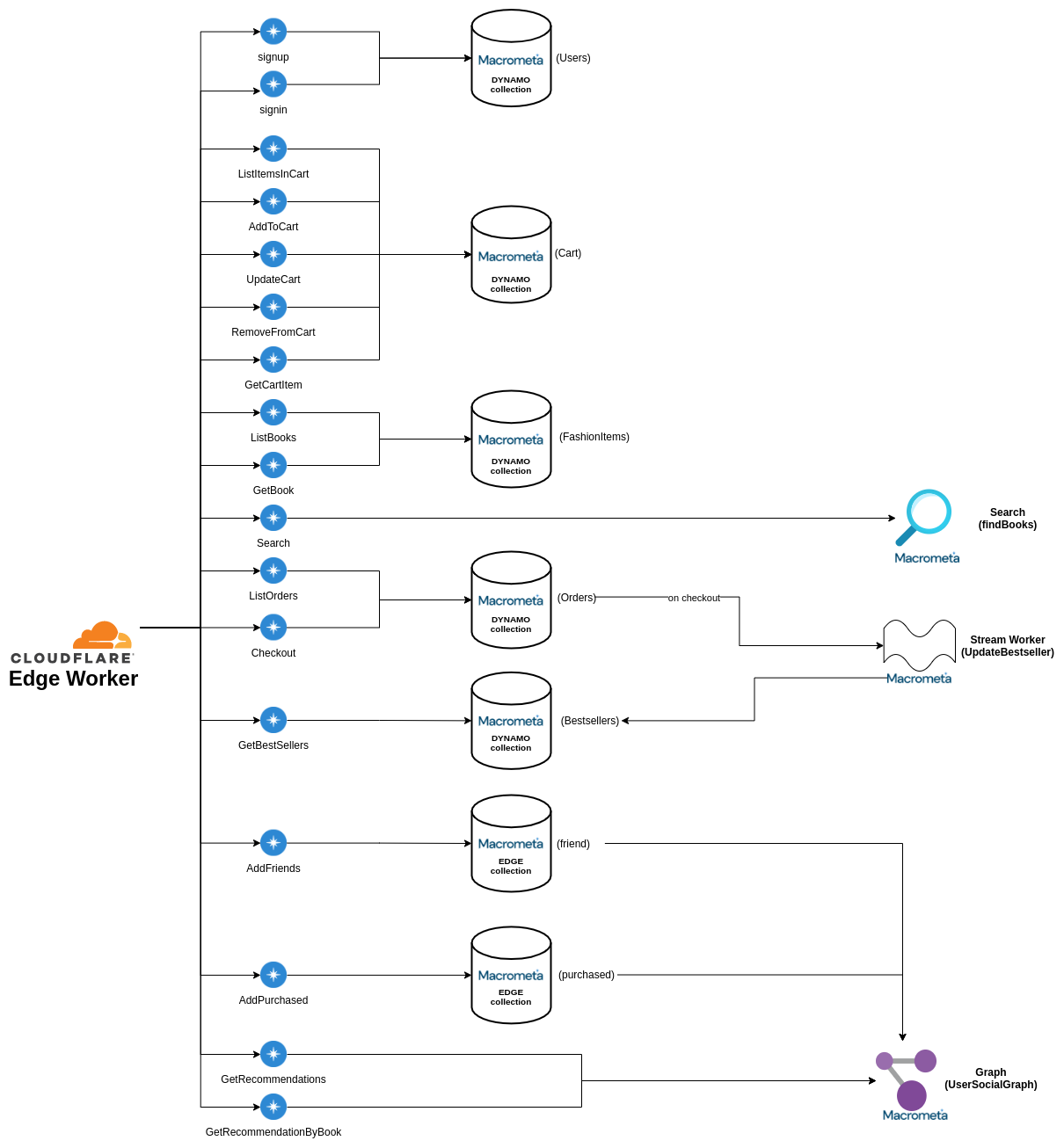

The application uses the Macrometa GDN document store to hold all the data for books, orders, the checkout cart, and users. When new purchases or new users are added, the corresponding Macrometa Edge collection is also updated. These Edge collections, together with the Document collections acting as vertices, are used by Macrometa Graphs to generate recommendations for users. When new purchases are added, Macrometa Stream Workers also update the best sellers collection (BestsellersTable) in real time, and the best sellers leaderboard is generated from that collection.

Catalog, Cart, Orders:

This is implemented using the dynamo collections functionality in Macrometa GDN.

| Entity | Collection Name | Collection Type | Comment |
|---|---|---|---|
| Catalog | BooksTable | dynamo | Collection of the available books. |
| Cart | CartTable | dynamo | Books customers have added to their cart. |
| Orders | OrdersTable | dynamo | Past orders of a customer. |
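As a rough illustration of how a worker might read from one of these collections, the sketch below builds a DynamoDB-style `GetItem` request for BooksTable. The helper name `buildGetBookRequest`, the base URL, the `/dynamo` path suffix, and the `apikey` authorization scheme are assumptions made for this example; only the payload shape and the `x-amz-target` value follow the standard DynamoDB low-level API.

```javascript
// Hypothetical helper: builds (but does not send) a DynamoDB-style GetItem request.
// baseUrl, the "/dynamo" suffix and the auth scheme are placeholders, not confirmed values.
function buildGetBookRequest(baseUrl, apiKey, bookId) {
  return {
    url: `${baseUrl}/dynamo`,
    method: "POST",
    headers: {
      "content-type": "application/x-amz-json-1.0",
      // Standard DynamoDB low-level API target for GetItem.
      "x-amz-target": "DynamoDB_20120810.GetItem",
      authorization: `apikey ${apiKey}`, // assumed auth scheme
    },
    body: JSON.stringify({
      TableName: "BooksTable",
      Key: { bookId: { S: bookId } }, // bookId is the partition key of BooksTable
    }),
  };
}

const bookRequest = buildGetBookRequest("https://gdn.example.invalid", "my-key", "book-1");
```

The resulting object could be passed to `fetch(bookRequest.url, bookRequest)` inside a worker; the actual payloads used by the demo live in `payloadGenerator.js`.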

Recommendations:

This is implemented using the graphs functionality in Macrometa GDN. Each node in the graph is a vertex, and the links connecting the nodes are edges. Both vertex and edge collections are dynamo collections. Edge collections additionally require two mandatory indexes, `_from` and `_to`.

| Entity | Collection Name | Collection Type | Comment |
|---|---|---|---|
| Friends | friend | edge | Edge collection to capture friend relations. |
| Purchase | purchased | edge | Edge collection to capture purchases. |
| Users | UsersTable | vertex | Dynamo collection of available users. |
| Catalog | BooksTable | vertex | Collection of the available books. |
| Social | UserSocialGraph | graph | User social graph. |
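To make the vertex and edge model more concrete, here is a small in-memory sketch of the idea behind the recommendations: follow `friend` edges in either direction from a user, then follow `purchased` edges outward to books. The function names `makeEdge` and `recommend` are hypothetical, and the real traversal is done by Macrometa Graphs, not by application code like this.

```javascript
// Edge documents link two vertices through the _from and _to fields,
// each holding an id of the form "<collection>/<key>".
function makeEdge(fromCollection, fromKey, toCollection, toKey) {
  return { _from: `${fromCollection}/${fromKey}`, _to: `${toCollection}/${toKey}` };
}

// In-memory stand-in for: friends of a user (any direction), then books they purchased (outbound).
function recommend(userId, friendEdges, purchasedEdges) {
  const friends = new Set();
  for (const edge of friendEdges) {
    if (edge._from === userId) friends.add(edge._to);
    if (edge._to === userId) friends.add(edge._from);
  }
  const books = new Set(); // a Set removes duplicates, like RETURN DISTINCT
  for (const edge of purchasedEdges) {
    if (friends.has(edge._from)) books.add(edge._to);
  }
  return [...books];
}

const friendEdges = [makeEdge("UsersTable", "alice", "UsersTable", "bob")];
const purchasedEdges = [
  makeEdge("UsersTable", "bob", "BooksTable", "b1"),
  makeEdge("UsersTable", "bob", "BooksTable", "b1"),
  makeEdge("UsersTable", "carol", "BooksTable", "b2"),
];
const recommended = recommend("UsersTable/alice", friendEdges, purchasedEdges);
```

In this example only `BooksTable/b1` is recommended to alice, because carol is not connected to her by a `friend` edge.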

Search:

Search is implemented using the views functionality in Macrometa GDN. Search matches on the name, author, or category of a book in BooksTable using phrase matching.

| Entity | Collection Name | Collection Type | Comment |
|---|---|---|---|
| Find | findBooks | view | The view which is queried for search. |
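The following sketch illustrates what phrase matching means in practice: the words of the search phrase must appear next to each other and in order in one of the searched fields. It is a simplified in-memory approximation with hypothetical function names; the `text_en` analyzer used by the view applies further normalization (such as stemming) that is not modeled here.

```javascript
// Simplified tokenizer: lowercase, then split on anything that is not a letter or digit.
function tokenize(text) {
  return String(text).toLowerCase().split(/[^a-z0-9]+/).filter(Boolean);
}

// True when the tokens of `phrase` occur contiguously and in order within `text`.
function phraseMatch(text, phrase) {
  const haystack = tokenize(text);
  const needle = tokenize(phrase);
  if (needle.length === 0) return false;
  for (let i = 0; i + needle.length <= haystack.length; i++) {
    if (needle.every((token, j) => haystack[i + j] === token)) return true;
  }
  return false;
}

// Keeps the books whose name, author, or category contains the phrase.
function searchBooks(books, phrase) {
  return books.filter((book) =>
    ["name", "author", "category"].some((field) => phraseMatch(book[field] ?? "", phrase))
  );
}

const sampleBooks = [
  { name: "The Great Gatsby", author: "F. Scott Fitzgerald", category: "Fiction" },
  { name: "Gatsby the Great", author: "Someone Else", category: "Parody" },
];
const hits = searchBooks(sampleBooks, "great gatsby");
```

Here only the first book matches, since the second contains both words but not as a contiguous phrase.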

Top Sellers List:

This is implemented using the streams and stream processing functionality in Macrometa.

| Entity | Name | Type | Comment |
|---|---|---|---|
| BestSeller | UpdateBestseller | stream worker | Stream worker to process orders and update best sellers in realtime. |
| BestSeller | BestsellersTable | dynamo | Collection to store best sellers. |
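The aggregation performed for the leaderboard can be pictured with a few lines of plain JavaScript. This is only an in-memory sketch of the idea (a running quantity per `bookId`, then a descending sort with a limit); the names `applyOrder` and `topSellers` are hypothetical, and in the demo this work is done by the UpdateBestseller stream worker and the GetBestSellers query.

```javascript
// Same rule as the stream worker's getBookQuantity: add to the previous total if there is one.
function getBookQuantity(prevQuantity, nextQuantity) {
  return prevQuantity ? prevQuantity + nextQuantity : nextQuantity;
}

// Applies one order to an in-memory bestsellers map (bookId -> total quantity sold).
function applyOrder(bestsellers, order) {
  for (const { bookId, quantity } of order.books) {
    bestsellers.set(bookId, getBookQuantity(bestsellers.get(bookId), quantity));
  }
  return bestsellers;
}

// Returns the top entries by quantity, highest first.
function topSellers(bestsellers, limit = 20) {
  return [...bestsellers.entries()]
    .map(([bookId, quantity]) => ({ bookId, quantity }))
    .sort((a, b) => b.quantity - a.quantity)
    .slice(0, limit);
}

const totals = new Map();
applyOrder(totals, { books: [{ bookId: "a", quantity: 2 }, { bookId: "b", quantity: 1 }] });
applyOrder(totals, { books: [{ bookId: "b", quantity: 3 }] });
const leaderboard = topSellers(totals, 1);
```

After the two orders above, book `b` has a total of 4 and leads the board, while book `a` stays at 2.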

Indexes:

Create indexes on the collections for the corresponding attributes:

| Collection | Partition Key | Sort Key |
|---|---|---|
| BestsellersTable | bookId | - |
| CartTable | cartId | - |
| BooksTable | bookId | - |
| friend | N/A | N/A |
| OrdersTable | orderId | orderDate |
| UsersTable | customer | - |

Below is the list of APIs in use.

Books (Macrometa Dynamo Store DB)

- GET /books (ListBooks)

- GET /books/{:id} (GetBook)

Cart (Macrometa Dynamo Store DB)

- GET /cart (ListItemsInCart)

- POST /cart (AddToCart)

- PUT /cart (UpdateCart)

- DELETE /cart (RemoveFromCart)

- GET /cart/{:bookId} (GetCartItem)

Orders (Macrometa Dynamo Store DB)

- GET /orders (ListOrders)

- POST /orders (Checkout)

Best Sellers (Macrometa Dynamo Store DB)

- GET /bestsellers (GetBestSellers)

Recommendations (Macrometa Graphs)

- GET /recommendations (GetRecommendations)

- GET /recommendations/{bookId} (GetRecommendationsByBook)

Search (Macrometa Views)

- GET /search (Search)
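A regex-based dispatcher for routes like these could look roughly like the sketch below. The route table covers only a subset of the list, the `/api` prefix and the function name `matchRoute` are assumptions for this example, and the demo's own router in `router.js` may be structured differently.

```javascript
// Route table: [HTTP method, path pattern, operation name taken from the list above].
const routes = [
  ["GET", /^\/api\/books\/?$/, "ListBooks"],
  ["GET", /^\/api\/books\/([^/]+)\/?$/, "GetBook"],
  ["GET", /^\/api\/cart\/?$/, "ListItemsInCart"],
  ["POST", /^\/api\/cart\/?$/, "AddToCart"],
  ["GET", /^\/api\/cart\/([^/]+)\/?$/, "GetCartItem"],
  ["POST", /^\/api\/orders\/?$/, "Checkout"],
  ["GET", /^\/api\/search\/?$/, "Search"],
];

// Returns the first matching operation and its captured path parameters, or null.
function matchRoute(method, pathname) {
  for (const [routeMethod, pattern, operation] of routes) {
    const found = routeMethod === method ? pattern.exec(pathname) : null;
    if (found) return { operation, params: found.slice(1) };
  }
  return null; // no API route matched
}

const matched = matchRoute("GET", "/api/books/42");
```

A `null` result is the natural place to fall back to serving a static asset.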

The Cloudflare workers communicate with Macrometa GDN through Dynamo tables and C8QL queries.

GetCartItem:

    FOR item IN CartTable
        FILTER item.customerId == @customerId AND item.bookId == @bookId
        RETURN item

AddFriends:

    LET otherUsers = (FOR users IN UsersTable FILTER users._key != @username RETURN users)
    FOR user IN otherUsers
        INSERT { _from: CONCAT("UsersTable/", @username), _to: CONCAT("UsersTable/", user._key) } INTO friend

Checkout:

    LET items = (FOR item IN CartTable FILTER item.customerId == @customerId RETURN item)
    LET books = (
        FOR item IN items
            FOR book IN BooksTable
                FILTER book._key == item.bookId
                RETURN { bookId: book._key, author: book.author, category: book.category, name: book.name, price: book.price, rating: book.rating, quantity: item.quantity }
    )
    INSERT { _key: @orderId, orderId: @orderId, customerId: @customerId, books: books, orderDate: @orderDate } INTO OrdersTable
    FOR item IN items REMOVE item IN CartTable

AddPurchased:

    LET order = FIRST(FOR order IN OrdersTable FILTER order._key == @orderId RETURN { customerId: order.customerId, books: order.books })
    LET customerId = order.customerId
    LET userId = FIRST(FOR user IN UsersTable FILTER user.customerId == customerId RETURN user._id)
    LET books = order.books
    FOR book IN books
        INSERT { _from: userId, _to: CONCAT("BooksTable/", book.bookId) } INTO purchased

GetBestSellers:

    FOR bestseller IN BestsellersTable
        FOR book IN BooksTable
            FILTER bestseller._key == book._key
            SORT bestseller.quantity DESC
            LIMIT 20
            RETURN book

GetRecommendations:

    LET userId = FIRST(FOR user IN UsersTable FILTER user.customerId == @customerId RETURN user._id)
    FOR user IN ANY userId friend
        FOR books IN OUTBOUND user purchased
            RETURN DISTINCT books

GetRecommendationsByBook:

    LET userId = FIRST(FOR user IN UsersTable FILTER user.customerId == @customerId RETURN user._id)
    LET bookId = CONCAT("BooksTable/", @bookId)
    FOR friendsPurchased IN INBOUND bookId purchased
        FOR user IN ANY userId friend
            FILTER user._key == friendsPurchased._key
            RETURN user

Search:

    FOR doc IN findBooks
        SEARCH PHRASE(doc.name, @search, "text_en") OR PHRASE(doc.author, @search, "text_en") OR PHRASE(doc.category, @search, "text_en")
        SORT BM25(doc) DESC
        RETURN doc

Search functionality is powered by Macrometa Views. The view is saved as findBooks with the config below:

    {
      "links": {
        "BooksTable": {
          "analyzers": ["text_en"],
          "fields": {},
          "includeAllFields": true,
          "storeValues": "none",
          "trackListPositions": false
        }
      },
      "primarySort": []
    }

The best seller leaderboard is built from BestsellersTable, which is updated with each new purchase via the UpdateBestseller stream worker:

    @App:name("UpdateBestseller")
    @App:description("Updates BestsellersTable when a new order comes in the OrdersTable")

    -- Adds the incoming quantity to the previous quantity, if one exists.
    define function getBookQuantity[javascript] return int {
        const prevQuantity = arguments[0];
        const nextQuantity = arguments[1];
        let newQuantity = nextQuantity;
        if (prevQuantity) {
            newQuantity = prevQuantity + nextQuantity;
        }
        return newQuantity;
    };

    @source(type='c8db', collection='OrdersTable', @map(type='passThrough'))
    define stream OrdersTable (_json string);

    @sink(type='c8streams', stream='BestsellerIntermediateStream', @map(type='json'))
    define stream BestsellerIntermediateStream (bookId string, quantity int);

    @store(type = 'c8db', collection='BestsellersTable')
    define table BestsellersTable (_key string, bookId string, quantity int);

    -- Emit one event per book in each new order.
    select json:getString(jsonElement, '$.bookId') as bookId, json:getInt(jsonElement, '$.quantity') as quantity
    from OrdersTable#json:tokenizeAsObject(_json, "$.books[*]")
    insert into BestsellerIntermediateStream;

    -- Update the running total per book, inserting the row if it does not exist yet.
    select next.bookId as _key, next.bookId as bookId, getBookQuantity(prev.quantity, next.quantity) as quantity
    from BestsellerIntermediateStream as next
    left outer join BestsellersTable as prev
    on next.bookId == prev._key
    update or insert into BestsellersTable
    set BestsellersTable.quantity = quantity, BestsellersTable._key = _key, BestsellersTable.bookId = bookId
    on BestsellersTable._key == _key;

- Book images are stored in Cloudflare KV under `Book_IMAGES`.
- The root of the repo contains the code for the UI, which is written in Reactjs.
- The `workers-site` folder contains the backend part. It is responsible both for serving the web assets and for making calls to Macrometa GDN.
- Calls with `/api/` are treated as calls that want to communicate with Macrometa GDN; all others are understood to be calls for the web assets.
- `handleEvent` (`index.js`) gets the request and calls the appropriate handler based on the regex of the request, with the help of a simple router function defined in `router.js`.
- `payloadGenerator.js` contains the queries (dynamo and C8QL). C8QLs are executed by calling the Macrometa GDN `/cursor` API. The `bind variables` in the body of the request are the parameters to the queries. Dynamo collection calls are executed through the `/dynamo` endpoint.
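To show how a query and its bind variables travel together, the sketch below wraps the GetCartItem query in a cursor-style request body. The helper name `buildCursorRequest`, the base URL, the exact URL path, and the `apikey` authorization scheme are assumptions for illustration; the `query` and `bindVars` body fields are the part that carries the C8QL text and its parameters.

```javascript
// Hypothetical helper: builds (but does not send) a request for the cursor API.
function buildCursorRequest(baseUrl, apiKey, query, bindVars) {
  return {
    url: `${baseUrl}/cursor`, // assumed path; the worker's real URL may carry a longer prefix
    method: "POST",
    headers: {
      "content-type": "application/json",
      authorization: `apikey ${apiKey}`, // assumed auth scheme
    },
    // Each @name in the query is filled from the bindVars entry of the same name.
    body: JSON.stringify({ query, bindVars }),
  };
}

const getCartItemQuery =
  "FOR item IN CartTable FILTER item.customerId == @customerId " +
  "AND item.bookId == @bookId RETURN item";

const cursorRequest = buildCursorRequest("https://gdn.example.invalid", "my-key", getCartItemQuery, {
  customerId: "c1",
  bookId: "b1",
});
```

Keeping the parameters in `bindVars` rather than concatenating them into the query string avoids quoting problems and query injection.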

There are multiple ways to install the workers CLI. The official docs recommend installing it via npm or cargo.

The binary can also be installed manually; details can be found here under the Manual Install section. I personally use the binaries.

It is advisable to install npm via nvm to avoid issues when installing global packages. Additional details can be found in their GitHub repo.

We will need the Macrometa API token to configure the CLI. Please sign up for a Macrometa account to get the token, or, if you already have an account, create your own by following the docs here.

Run `wrangler config` and enter the above API token when prompted. More details can be found here.

`wrangler.toml` already has the configurations.

Provide a `C8_API_KEY` with a correct API key before proceeding.

`vars` provides the environment variables used in the workers themselves. They include:

- `DC_LIST`: for stream app init
- `C8_URL`: GDN federation URL
- `C8_API_KEY`: API key of the tenant being used
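A small guard like the one below can catch a missing variable before any request is made. It is a sketch, not part of the demo: the function name `missingVars` is hypothetical, and it assumes the three variables are exposed to the worker as plain strings.

```javascript
// The three variables described in the list above.
const REQUIRED_VARS = ["DC_LIST", "C8_URL", "C8_API_KEY"];

// Returns the names of required variables that are missing or blank.
function missingVars(env) {
  return REQUIRED_VARS.filter((name) => {
    const value = env[name];
    return typeof value !== "string" || value.trim() === "";
  });
}

const problems = missingVars({ C8_URL: "https://gdn.example.invalid", C8_API_KEY: " " });
```

In this example `problems` lists `DC_LIST` (absent) and `C8_API_KEY` (blank), which a worker could report as a configuration error.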

Make sure to run `npm i` in the project's root to install the necessary dependencies.

If there are changes to the UI code, first run `npm run build` to build the UI; otherwise you can proceed directly to publishing.

Run `wrangler publish` to deploy your worker along with the static files used by the UI.

Once the worker is deployed, execute the following curl:

    curl 'https://bookstore-dynamo.macrometadev.workers.dev/api/init' \
      -H 'authority: bookstore.macrometadev.workers.dev' \
      -H 'sec-ch-ua: "Chromium";v="86", "\"Not\\A;Brand";v="99", "Google Chrome";v="86"' \
      -H 'x-customer-id: null' \
      -H 'sec-ch-ua-mobile: ?0' \
      -H 'user-agent: Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.75 Safari/537.36' \
      -H 'content-type: text/plain;charset=UTF-8' \
      -H 'accept: */*' \
      -H 'origin: https://bookstore-dynamo.macrometadev.workers.dev' \
      -H 'sec-fetch-site: same-origin' \
      -H 'sec-fetch-mode: cors' \
      -H 'sec-fetch-dest: empty' \
      -H 'referer: https://bookstore-dynamo.macrometadev.workers.dev/signup' \
      -H 'accept-language: en-GB,en-US;q=0.9,en;q=0.8' \
      -H 'cookie: __cfduid=de7d15f3918fe96a07cf5cedffdecba081601555750' \
      --data-binary '{}' \
      --compressed

This will create all the collections and dummy data for you.

Note: Data is populated only if the collection or stream app is not already present. If it already exists, the dummy data is not created, even if the collection is empty. To have the curl populate a collection, delete the collection first.
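The rule in the note can be pictured as follows. This is only an illustration with a hypothetical function name, `planInit`; the actual initialisation logic lives in `init.js`.

```javascript
// For each wanted resource, decide whether init would create and seed it.
// `existing` is the set of collection or stream app names already present on the tenant.
function planInit(wanted, existing) {
  return wanted.map((name) => ({
    name,
    // Existence alone decides: an existing but empty collection is still skipped.
    action: existing.has(name) ? "skip" : "create-and-seed",
  }));
}

const plan = planInit(["BooksTable", "CartTable"], new Set(["CartTable"]));
```

Here BooksTable would be created and seeded, while the existing CartTable would be left untouched regardless of its contents.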

- Now log in to the tenant and activate the stream app.
- Edit and save the view with the correct data if it was not initialised properly. Details can be found in `init.js`.