Creating a perfect README has always been a crucial part of any project. It's the first impression developers and collaborators get from your repository. However, crafting a well-structured, detailed, and visually appealing README takes time and effort. That's why I decided to automate this process using CrewAI and LLaMA 3 - 70B.

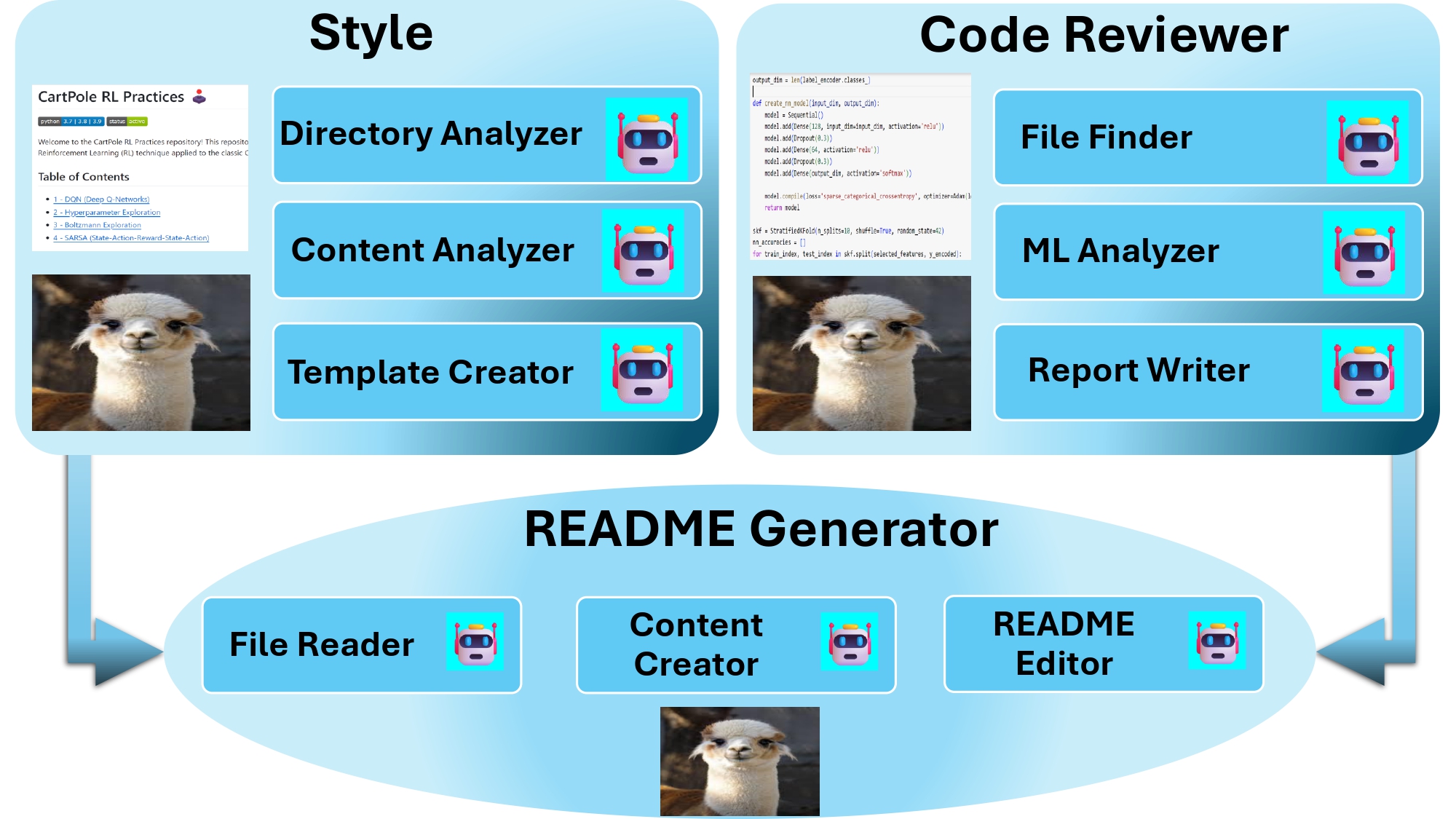

The core of this system revolves around three collaborative crews:

- Style Crew: This crew analyzes a sample README that reflects my preferred style, such as the one from RL Practices - DQN.

- Code Crew: This crew digs deep into the project code, like in Readahead Optimization using ML Models, extracting key information to ensure the README is accurate and thorough.

- README Generator Crew: Combining insights from the Style and Code Crews, this team writes the final README, complete with structure, details, and creative touches.

The project overview diagram shows how the three crews work together, processing inputs such as sample READMEs and source code, powered by CrewAI and the LLaMA 3 - 70B model.

With this setup, I've automated the task of producing high-quality, personalized READMEs that match my style preferences, making the process faster, more consistent, and more fun.

This project uses the LLaMA 3 - 70B language model to analyze and generate natural language content. To make the project accessible to all users, everything is implemented in Google Colab. You can run the entire pipeline from any device, regardless of its computational power, and see the results in real time.

Simply click the Colab badge to start:

LLaMA 3 - 70B offers a good balance between capability and cost for natural language tasks. More powerful models such as GPT-4, Claude, and PaLM 2 are available, but LLaMA 3 - 70B performs well on the free tier, so the automation works without expensive paid APIs.

To get started with the LLM, we integrate it with LangChain and CrewAI, which allow us to break down complex README generation tasks into smaller, manageable steps. Follow these simple steps to set up the environment:

- Install the required libraries:

  ```bash
  pip install --upgrade langchain langchain_core crewai langchain_groq
  ```

- Set up your GROQ API key by adding it to your environment variables:

  ```python
  import os

  os.environ["GROQ_API_KEY"] = "your-key-here"
  ```

- Initialize the LLM with the LLaMA 3 - 70B model:

  ```python
  from langchain_groq import ChatGroq

  # Setting up the LLM (GROQ_LLM)
  GROQ_LLM = ChatGroq(
      api_key=os.getenv("GROQ_API_KEY"),
      model="llama3-70b-8192",
  )
  ```

This setup allows you to fully automate the README generation process without worrying about infrastructure or heavy computation.
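A key typed directly into a shared notebook is easy to leak, and a missing key tends to surface only as an opaque API error later on. The following is a minimal sketch, assuming a helper named `require_env` (hypothetical, not part of LangChain, CrewAI, or Groq), that fails fast with a clear message when the variable is not set:

```python
import os


def require_env(name: str) -> str:
    """Return the value of an environment variable or raise a clear error."""
    value = os.environ.get(name, "").strip()
    if not value:
        raise RuntimeError(
            f"{name} is not set; export it or set os.environ[{name!r}] first."
        )
    return value
```

Calling `require_env("GROQ_API_KEY")` before the LLM is initialized would return the key for use as the `api_key` argument, or stop the notebook early if the key is absent.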

To automate the creation of the README, the project divides tasks among three specialized crews, each responsible for a distinct aspect of the documentation process and each made up of three agents. Here's a breakdown:

The Style Crew ensures that the generated README matches the preferred style by analyzing a sample README provided by the user. In this case, we used the README from RL Practices - DQN.

- Directory Analyzer: Scans the directories to locate the README.md files for analysis.

- Content Analyzer: Reads and extracts the style, structure, and key elements from the sample README.

- Template Creator: Based on the analysis, this agent generates a reusable README template, which serves as a skeleton for the final output.
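The three agents above could be wired into a sequential crew roughly as follows. This is a sketch, not the project's actual code: the goal and expected-output wording is hypothetical (the original prompts are not shown here), `build_style_crew` is an illustrative name, and whether a `ChatGroq` object can be passed directly as `llm` depends on the installed CrewAI version.

```python
# Hypothetical role descriptions; the project's real prompts are not shown here.
STYLE_AGENT_SPECS = [
    {
        "role": "Directory Analyzer",
        "goal": "Locate README.md files in the sample repository.",
        "expected_output": "A list of README.md paths.",
    },
    {
        "role": "Content Analyzer",
        "goal": "Extract style, structure, and key elements from the sample README.",
        "expected_output": "Notes on headings, tone, and formatting.",
    },
    {
        "role": "Template Creator",
        "goal": "Turn the analysis into a reusable README template.",
        "expected_output": "A Markdown template with bracketed placeholders.",
    },
]


def build_style_crew(llm):
    """Build a sequential crew from STYLE_AGENT_SPECS (requires crewai)."""
    # Imported lazily so the specs above can be inspected without crewai installed.
    from crewai import Agent, Crew, Process, Task

    agents, tasks = [], []
    for spec in STYLE_AGENT_SPECS:
        agent = Agent(
            role=spec["role"],
            goal=spec["goal"],
            backstory=f"You are the {spec['role']} of the Style Crew.",
            llm=llm,
            allow_delegation=False,
        )
        agents.append(agent)
        tasks.append(
            Task(
                description=spec["goal"],
                expected_output=spec["expected_output"],
                agent=agent,
            )
        )
    # Sequential process: tasks run one after another in the listed order.
    return Crew(agents=agents, tasks=tasks, process=Process.sequential)
```

With the LLM from the setup steps, `build_style_crew(GROQ_LLM).kickoff()` would run the three tasks in order. Keeping the specs as plain data makes it easy to reuse the same builder for the other crews.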

This is an excerpt from the first part of the template that the Style Crew generated based on the preferred style:

# Introduction

This repository provides a comprehensive implementation of [Project Name], a [brief description of the project]. The project aims to [briefly describe the project's objective].

## Setup

### Prerequisites

* List of prerequisites, e.g., Python version, libraries, etc.

* Installation instructions, e.g., pip install, etc.

### Environment Setup

* Instructions for setting up the environment, e.g., creating a virtual environment, etc.

## Implementing Key Components

### [Component 1]

* Brief description of the component

* Code snippet or example

* Explanation of the component's functionality

### [Component 2]

* Brief description of the component

* Code snippet or example

* Explanation of the component's functionality

## Results and Performance Analysis

### [Result 1]

* Description of the result, including any visual aids, e.g., images, tables, etc.

* Analysis of the result, including any relevant metrics or statistics

### [Result 2]

* Description of the result, including any visual aids, e.g., images, tables, etc.

* Analysis of the result, including any relevant metrics or statistics

## Summary

This project provides a comprehensive implementation of [Project Name], demonstrating [key findings or achievements]. The results show [briefly describe the results], highlighting the effectiveness of [key components or techniques].

For the full style template found by the Style Crew, you can check the style_found_output.txt in the asset directory.
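The bracketed placeholders in the template are what later stages need to fill in. As a rough illustration, they can be enumerated with a regular expression. The function name `find_placeholders` is hypothetical, and the pattern is an assumption that would also match the text part of Markdown links such as `[text](url)`.

```python
import re
from typing import List

# Matches bracketed placeholders such as [Project Name] or [Component 1].
PLACEHOLDER = re.compile(r"\[([^\[\]]+)\]")


def find_placeholders(template: str) -> List[str]:
    """Return unique placeholder names in order of first appearance."""
    seen = {}
    for name in PLACEHOLDER.findall(template):
        seen.setdefault(name.strip(), None)
    return list(seen)


sample = (
    "# Introduction\n"
    "This repository provides a comprehensive implementation of "
    "[Project Name], a [brief description of the project].\n"
    "### [Component 1]\n"
)
print(find_placeholders(sample))
# ['Project Name', 'brief description of the project', 'Component 1']
```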

The Code Crew dives into the project's codebase to extract technical details that need to be documented in the README. This crew ensures that important components like algorithms, functions, and models are clearly described.

- File Finder: Identifies relevant code files and passes them to the next agent for analysis.

- ML Analyzer: Reads and analyzes machine learning methods and their implementation in the code.

- Report Writer: Generates a structured report based on the analysis, which is then included in the README.

For instance, in Readahead Optimization using ML Models, the Code Crew extracted critical details about the training process, models used, and performance metrics.
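The File Finder step is essentially a filtered directory walk. A minimal sketch of that idea with the standard library is shown below. The suffix list, the skipped directory names, and the function name `find_code_files` are assumptions for illustration, not the project's actual tool.

```python
from pathlib import Path
from typing import Iterable, List

# Assumed defaults; adjust to the project being documented.
CODE_SUFFIXES = {".py", ".ipynb"}
SKIP_DIRS = {".git", "__pycache__", "venv", "env", "node_modules"}


def find_code_files(root: str, suffixes: Iterable[str] = CODE_SUFFIXES) -> List[Path]:
    """Return code files under root, sorted, skipping SKIP_DIRS directories."""
    wanted = {s.lower() for s in suffixes}
    root_path = Path(root)
    found = []
    for path in root_path.rglob("*"):
        # Only look at directory parts below root, so root's own name is ignored.
        rel_parts = path.relative_to(root_path).parts
        if any(part in SKIP_DIRS for part in rel_parts[:-1]):
            continue
        if path.is_file() and path.suffix.lower() in wanted:
            found.append(path)
    return sorted(found)
```

The resulting list of paths is what would be handed to the next agent for analysis. Filtering early keeps virtual environments and version-control metadata out of the LLM's context.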

The README Generator Crew is responsible for compiling the insights from both the Style Crew and Code Crew into a polished, professional README.

- File Reader: Reads the output from the Style and Code Crews to merge content.

- Content Merger: Combines the style template with the technical content to produce a coherent and structured README draft.

- README Editor: Finalizes the draft by adding creative touches like emojis, formatting enhancements, and technical precision.
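In the project the merge is performed by the LLM-backed agents. To make the idea concrete, here is a deterministic sketch of the same step: filling bracketed template placeholders from a dictionary of extracted facts. The function name `fill_template` and the dictionary keys are hypothetical.

```python
import re
from typing import Dict

# Matches bracketed placeholders such as [Project Name].
PLACEHOLDER = re.compile(r"\[([^\[\]]+)\]")


def fill_template(template: str, values: Dict[str, str]) -> str:
    """Replace [Name] placeholders that have a value; leave the rest untouched."""

    def substitute(match):
        key = match.group(1).strip()
        # Unknown placeholders stay visible so an editor can spot the gap.
        return values.get(key, match.group(0))

    return PLACEHOLDER.sub(substitute, template)


template = "# [Project Name]\n\nThe project aims to [objective].\n"
report = {"Project Name": "Readahead Optimization using ML Models"}
print(fill_template(template, report))
```

Leaving unmatched placeholders in place, rather than deleting them, makes missing information easy to find during the final editing pass.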

Here’s an example of the README generated by CrewAI:

Final README Content: Here is the rewritten README file:

# Optimizing Readahead Feature of Linux Page Cache using Machine Learning 📊💻

This repository provides a comprehensive implementation of optimizing the Readahead feature of the Linux Page Cache under varying workloads using machine learning techniques.

## Setup 💻

### Prerequisites

* Python version: 3.x

* Libraries: scikit-learn, numpy, pandas, etc.

* Installation instructions: `pip install -r requirements.txt`

### Environment Setup

* Create a virtual environment: `python -m venv env`

* Activate the virtual environment: `source env/bin/activate`

## Implementing Machine Learning Components 🤖

### Feature Importance Analysis

* Brief description: Random Forest Classifier was used to analyze feature importance, and non-important features were removed.

* Code snippet or example: [Insert code snippet]

* Explanation of the component's functionality: This component is used to identify the most important features that affect the Readahead size.

### Dimensionality Reduction

* Brief description: T-SNE was used to visualize the data in 2D.

* Code snippet or example: [Insert code snippet]

* Explanation of the component's functionality: This component is used to reduce the dimensionality of the data and visualize it in 2D.

### Model Training 🚀

* **Neural Network**
  + Brief description: MLPClassifier was used with hidden layers of 64 and 32 neurons.
  + Code snippet or example: [Insert code snippet]
  + Explanation of the component's functionality: This component is used to train a neural network model to classify workload types and suggest optimal Readahead sizes.

* **Decision Tree**
  + Brief description: DecisionTreeClassifier was used.
  + Code snippet or example: [Insert code snippet]
  + Explanation of the component's functionality: This component is used to train a decision tree model to classify workload types and suggest optimal Readahead sizes.

* **Random Forest**
  + Brief description: RandomForestClassifier was used with 100 estimators.
  + Code snippet or example: [Insert code snippet]
  + Explanation of the component's functionality: This component is used to train a random forest model to classify workload types and suggest optimal Readahead sizes.

## Results and Performance Analysis 📊

### Model Comparison

| Model | Accuracy | Notes |
|----------------|----------|--------------------------------------------------------------------|
| Decision Tree | 100.00% | Simple, interpretable, perfect accuracy |
| Neural Network | 99.85% | High accuracy, complex model with slight variability in precision |
| Random Forest | 100.00% | Combines multiple trees for perfect accuracy and generalization |

### Performance Comparison

* The results show that both the Decision Tree and Random Forest models achieved perfect accuracy, while the Neural Network model had a slightly lower accuracy.

## Summary 📚

This project provides a comprehensive implementation of optimizing the Readahead feature of the Linux Page Cache under varying workloads using machine learning techniques, demonstrating the effectiveness of machine learning techniques in optimizing the Readahead feature under varying workloads. The results show that the Random Forest model stands out for its combination of accuracy and interpretability, making it a strong candidate for real-time systems that require dynamic adjustment of Readahead sizes based on current workloads.

Final README saved to: FINAL_README.md

For the complete output, check out the file FINAL_README.md.

CrewAI effectively automates the creation of high-quality, structured documentation with minimal manual effort. LLaMA 3 - 70B keeps the pipeline efficient while still producing usable content, though more powerful LLMs like GPT-4 or PaLM 2 could improve the analysis of larger, more complex projects.

For technical challenges like Readahead Optimization, CrewAI proves that well-maintained, comprehensive documentation can be generated efficiently without sacrificing quality.