Welcome to the TextSumWatermark repository, dedicated to watermarking large language models. This project is inspired by the paper "A Watermark for Large Language Models", with custom enhancements guided by Professor Yue Dong.

- We have expanded the codebase to support summarization models such as T5 and Flan, in addition to decoder-only models.

- We've introduced evaluation capabilities, allowing you to assess different models' performance on datasets like CNN_DailyMail and Xsum.

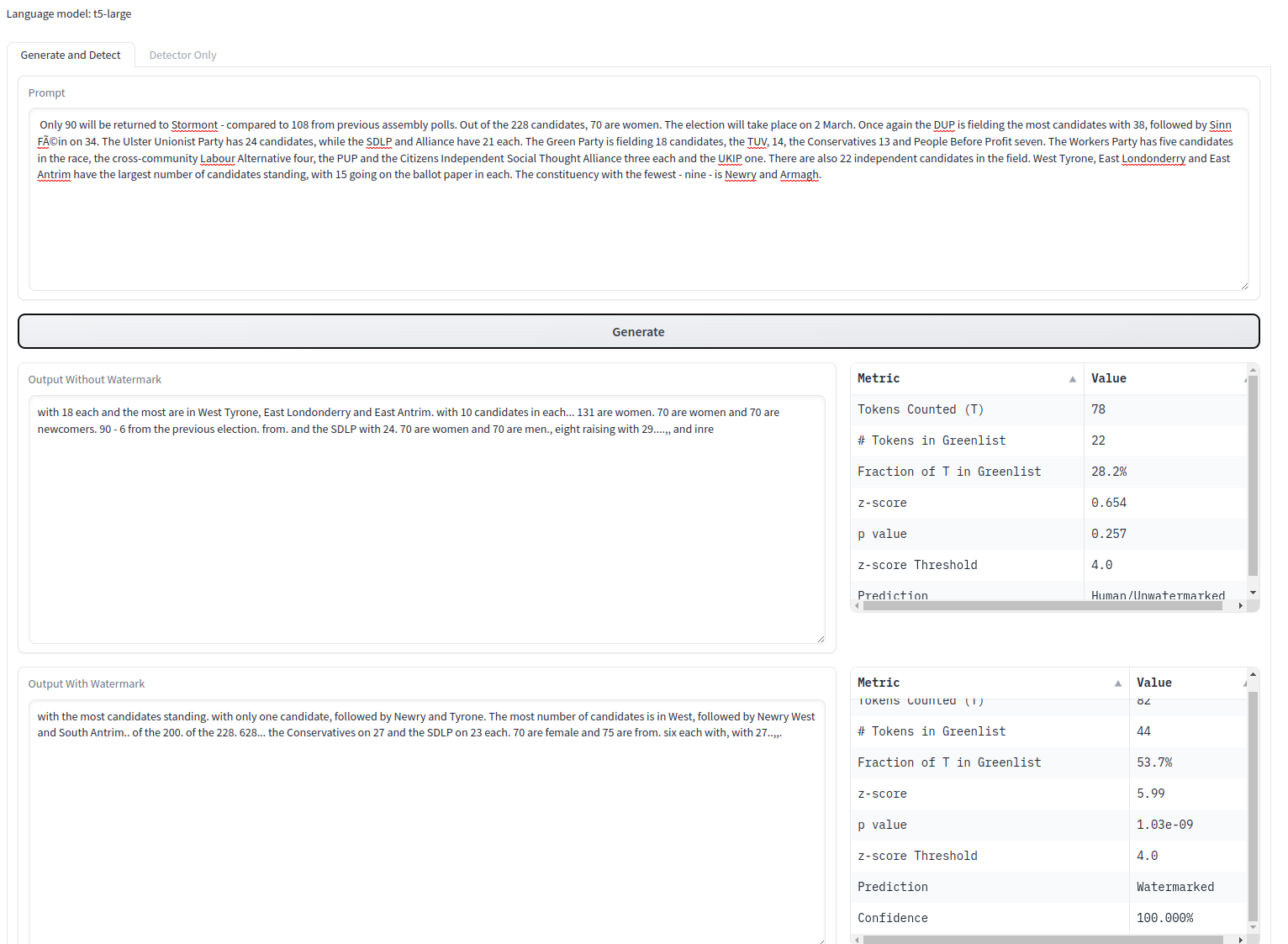

- We've integrated a Gradio demo with the latest models.

- To provide a deeper understanding of the watermarking approach, we've added a script to generate statistical result plots.

The core idea is to insert a subtle but detectable watermark into the generations of a language model. This is done by biasing the model's next token predictions during generation towards a small "greenlist" of candidate tokens determined by the prefix context. The watermark detector then analyzes text and checks if an abnormally high fraction of tokens fall within these expected greenlists, allowing it to distinguish watermarked text from human-written text.
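The generation side of this idea can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the code in `watermark_processor.py`: the function names, the toy vocabulary size, the seeding key, and the random "model" logits are all hypothetical stand-ins.

```python
import math
import random

def greenlist_ids(prev_token: int, vocab_size: int, gamma: float, key: int = 15485863) -> set[int]:
    """Pick int(gamma * vocab_size) token ids, seeded by the previous token.

    The key is an arbitrary constant chosen for this sketch.
    """
    rng = random.Random(key * prev_token)
    perm = list(range(vocab_size))
    rng.shuffle(perm)
    return set(perm[: int(gamma * vocab_size)])

def bias_logits(logits: list[float], green: set[int], delta: float) -> list[float]:
    """Add delta to the logit of every greenlist token."""
    return [x + delta if i in green else x for i, x in enumerate(logits)]

def sample(logits: list[float], rng: random.Random) -> int:
    """Sample a token id from softmax(logits)."""
    m = max(logits)
    weights = [math.exp(x - m) for x in logits]
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]

def generate(n_tokens: int, vocab_size: int = 50, gamma: float = 0.25,
             delta: float = 2.0, seed: int = 0, start_token: int = 1) -> list[int]:
    """Generate tokens from a toy model whose logits are random noise."""
    rng = random.Random(seed)
    tokens = [start_token]
    for _ in range(n_tokens):
        logits = [rng.gauss(0.0, 1.0) for _ in range(vocab_size)]  # stand-in for a real model
        green = greenlist_ids(tokens[-1], vocab_size, gamma)
        tokens.append(sample(bias_logits(logits, green, delta), rng))
    return tokens

def green_fraction(tokens: list[int], vocab_size: int = 50, gamma: float = 0.25) -> float:
    """Fraction of tokens that fall in the greenlist implied by their predecessor."""
    hits = sum(
        tokens[i] in greenlist_ids(tokens[i - 1], vocab_size, gamma)
        for i in range(1, len(tokens))
    )
    return hits / (len(tokens) - 1)

watermarked = generate(200, delta=2.0)
plain = generate(200, delta=0.0)  # delta = 0 disables the bias
print("green fraction (watermarked):", green_fraction(watermarked))
print("green fraction (plain):", green_fraction(plain))
```

Because the greenlist depends only on the previous token and a fixed key, a detector can recompute it without access to the model. In this toy setup the watermarked sequence should show a green fraction well above `gamma`, while the unbiased one should stay close to it.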

The repository contains the following key files:

- `watermark_processor.py`: Implements the `WatermarkLogitsProcessor` class, which inserts the watermark during generation, and the `WatermarkDetector` class for analyzing text.

- `utils.py`: Utility functions for loading models, datasets, and running the interactive demo.

- `normalizers.py`: Text normalizers to defend against textual attacks against the watermark detector.

- `config.yaml`: Configuration file for model and watermark parameters.

- `main.py`: Main script to run watermark insertion and detection on a dataset.

- `gradio_demo.py`: Script to launch an interactive web demo of watermarking using Gradio.

- `homoglyphs.py`: Helper code for managing homoglyph replacements.

- `README`: This file.

To run TextSumWatermark effectively, please ensure that you have the following prerequisites in place:

- Python Version: We recommend using Python version 3.9 or higher.

- You'll also need to install the necessary libraries. You can do this by running the following command:

pip install -r requirements.txt

These libraries include PyTorch and Transformers, which are essential for the operation of this codebase. Once you have the prerequisites set up, you're ready to dive into watermarking large language models.

There are two main ways to use the code:

The main.py script allows watermarking and detection on an input dataset specified in the config file:

python main.py

This will load the model, dataset, and parameters from config.yaml, run generation with and without watermarks on the dataset, and perform detection on the outputs, saving results to results.csv.

To launch a live demo:

python gradio_demo.py

This will launch a Gradio web interface allowing interactive prompt generation and watermark detection with parameters adjustable via the UI.

The key parameters controlling the watermarking scheme are:

- `gamma`: Fraction of tokens to assign to the greenlist at each step. Lower values make the watermark stronger.

- `delta`: Amount of bias/boost applied to greenlist tokens during generation. Higher values make the watermark stronger.
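To get a feel for how these two parameters interact, the sketch below computes the probability that the next token is green in an idealized case where the unbiased model distribution is uniform over the vocabulary. That uniformity is an assumption made only for illustration (real model distributions are far from uniform), and `green_mass` is a hypothetical helper, not a function in this repository.

```python
import math

def green_mass(gamma: float, delta: float) -> float:
    """Probability of sampling a green token after adding delta to green logits,
    assuming the unbiased distribution is uniform over the vocabulary."""
    boosted = gamma * math.exp(delta)
    return boosted / (boosted + (1.0 - gamma))

for gamma in (0.25, 0.5):
    for delta in (0.0, 2.0, 5.0):
        p = green_mass(gamma, delta)
        # "excess" is how far the green probability rises above the gamma baseline
        print(f"gamma={gamma:.2f} delta={delta:.1f} green mass={p:.3f} excess={p - gamma:.3f}")
```

With `delta = 0` the green probability equals `gamma`, so unwatermarked text hits the greenlist at the baseline rate. Raising `delta` pushes the green probability toward 1, which is what the detector later picks up on.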

The detector can be configured with:

- `z_threshold`: z-score cutoff to call a positive watermark detection.

- `normalizers`: Text normalizations to run during detection, like lowercasing.
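The role of `z_threshold` can be illustrated with the one-proportion z-test described in the watermarking paper: under the null hypothesis of unwatermarked text, each token is green with probability `gamma`. The sketch below assumes the green-token count has already been computed; the function names and the default threshold of 4.0 are illustrative choices, not necessarily those used by `WatermarkDetector`.

```python
import math

def z_score(green_count: int, total: int, gamma: float) -> float:
    """z-statistic for observing green_count green tokens out of total,
    when each token is green with probability gamma under the null hypothesis."""
    expected = gamma * total
    std = math.sqrt(total * gamma * (1.0 - gamma))
    return (green_count - expected) / std

def is_watermarked(green_count: int, total: int,
                   gamma: float = 0.25, z_threshold: float = 4.0) -> bool:
    """Flag text as watermarked when the z-score exceeds the threshold."""
    return z_score(green_count, total, gamma) > z_threshold

# 140 green tokens out of 200 is far above the 50 expected at gamma = 0.25.
print(z_score(140, 200, 0.25), is_watermarked(140, 200))
# 55 out of 200 is within normal variation for unwatermarked text.
print(z_score(55, 200, 0.25), is_watermarked(55, 200))
```

A higher threshold lowers the false-positive rate on human-written text at the cost of missing weaker watermarks, for example on short or heavily edited passages.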

See the paper for detailed discussion of settings and their effect on generation quality and detection accuracy.