Signlingo aims to automate the teaching of sign language and make it more engaging through gamification. Sign-language teachers are scarce compared to the number of learners, and they usually charge for sessions. Our solution attempts to make learning sign language accessible to everyone for free.

Our solution takes input from the webcam, and a custom PyTorch-based convolutional neural network determines whether the user is showing the correct sign.
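The final check in that pipeline can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: it assumes the network emits one raw score (logit) per sign class, and the names `softmax` and `is_correct_sign`, as well as the 0.8 confidence threshold, are hypothetical.

```python
import math


def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def is_correct_sign(logits, target_index, threshold=0.8):
    """Return True when the top prediction is the target sign and is confident enough.

    `threshold` is an assumed value; a real app would tune it.
    """
    probs = softmax(logits)
    predicted = max(range(len(probs)), key=probs.__getitem__)
    return predicted == target_index and probs[predicted] >= threshold
```

Requiring a minimum confidence, rather than only the top class, is one way to avoid rewarding the user when the network is merely guessing.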

- Clone the repository.

- Navigate to the cloned directory and run the following command in a terminal:

python3 main.py

- Boom! You've got your tutor live

- Searched for a suitable dataset to train the neural network.

- Experimented with multiple network architectures, such as ResNet-18 and VGG19, but their inference time was too high for a real-time application.

- Defined a smaller custom network and trained it.

- Once the accuracy was good enough on the validation dataset, we tested the performance on some unseen images.

- Then we tested it out with live webcam feed, and it worked!

- Added a GUI for ease of use, and a level-based system to make the process more engaging.
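To make the trade-off between model size and inference time concrete, here is a hedged sketch of a compact CNN together with a helper that measures per-frame latency. The architecture is not the one used in this project: `SmallSignNet`, the 64x64 grayscale input, the 26 output classes, and `mean_latency_ms` are all assumptions chosen for illustration.

```python
import time

import torch
import torch.nn as nn


class SmallSignNet(nn.Module):
    """Hypothetical compact CNN: two conv blocks, dropout, one linear layer."""

    def __init__(self, num_classes=26):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(1, 16, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(2),  # 64x64 -> 32x32
            nn.Conv2d(16, 32, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(2),  # 32x32 -> 16x16
        )
        self.classifier = nn.Sequential(
            nn.Flatten(),
            nn.Dropout(p=0.5),  # regularization; only active in train mode
            nn.Linear(32 * 16 * 16, num_classes),
        )

    def forward(self, x):
        return self.classifier(self.features(x))


def mean_latency_ms(model, frame, runs=20):
    """Average forward-pass time in milliseconds for a single frame."""
    model.eval()
    with torch.no_grad():
        model(frame)  # warm-up pass, excluded from the timing
        start = time.perf_counter()
        for _ in range(runs):
            model(frame)
        elapsed = time.perf_counter() - start
    return elapsed / runs * 1000.0
```

Calling `mean_latency_ms(SmallSignNet(), torch.zeros(1, 1, 64, 64))` gives a rough per-frame figure on the current machine; the same helper could be pointed at a larger model to compare.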

- PyTorch

- OpenCV

- Tkinter (UI)

- Python threads

- Finding the right training hyperparameters took more time than we expected.

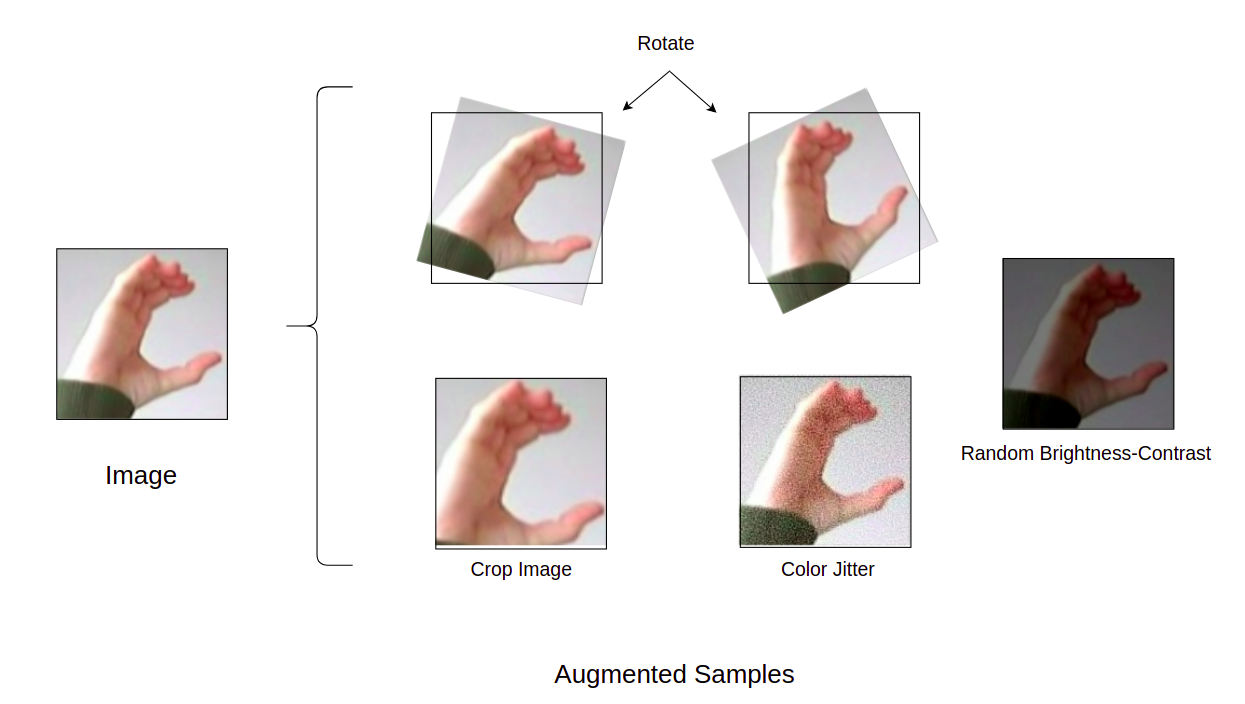

- Making sure the network didnt overfit was challenging. Heavy image augmentation and dropout layers did the trick. The diagram below represents the augmentation techniques we used.

- Getting the GUI to work in harmony with the script was tricky.
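One common pattern for keeping a Tkinter window responsive while a model runs is to do the predictions on a worker thread and let the GUI thread poll a queue with `after`. The sketch below shows that pattern under assumptions; it is not necessarily how this project wires things up, and `prediction_worker`, `poll_predictions`, and the placeholder predictor are hypothetical names.

```python
import queue
import threading
import time


def prediction_worker(predict, out_queue, stop_event, interval=0.05):
    """Run `predict()` in a loop off the GUI thread and queue each result."""
    while not stop_event.is_set():
        out_queue.put(predict())
        time.sleep(interval)


def poll_predictions(root, label, in_queue, delay_ms=50):
    """Called on the GUI thread: show the newest queued result, then reschedule."""
    latest = None
    while True:
        try:
            latest = in_queue.get_nowait()
        except queue.Empty:
            break
    if latest is not None:
        label.config(text=latest)
    root.after(delay_ms, poll_predictions, root, label, in_queue, delay_ms)


def main():
    import tkinter as tk  # imported here so the helpers above work headless

    root = tk.Tk()
    label = tk.Label(root, text="waiting...")
    label.pack()
    results = queue.Queue()
    stop = threading.Event()
    # Placeholder predictor; the real app would run the CNN on a webcam frame.
    worker = threading.Thread(
        target=prediction_worker,
        args=(lambda: "A", results, stop),
        daemon=True,
    )
    worker.start()
    poll_predictions(root, label, results)
    root.mainloop()
    stop.set()
```

Running `main()` opens a small window. Only the GUI thread touches widgets, which sidesteps Tkinter's lack of thread safety.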

- We were surprised when the trained neural network actually worked on a real-life webcam feed.

- The performance of the model was great too, because of its small architecture.

- Learned the importance of inference time when dealing with trained neural networks, especially for real-time applications.

- Having some sort of reward mechanism is important to keep the user engaged, which is why we added the game element.

- We tested the app multiple times, and unknowingly taught sign language to ourselves :)
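The reward mechanism mentioned above could be modeled along these lines. This is only a sketch under assumptions: `LevelTracker`, the points value, and the idea that each level is a non-empty list of signs are hypothetical and not taken from the project's code.

```python
class LevelTracker:
    """Hypothetical progress tracker: each level is a non-empty list of signs."""

    def __init__(self, levels):
        self.levels = levels
        self.level_index = 0
        self.sign_index = 0
        self.score = 0

    @property
    def finished(self):
        return self.level_index >= len(self.levels)

    @property
    def current_sign(self):
        """The sign the user should show next, or None once all levels are done."""
        if self.finished:
            return None
        return self.levels[self.level_index][self.sign_index]

    def record_success(self, points=10):
        """Reward a correct sign and advance; return True if a level was completed."""
        if self.finished:
            return False
        self.score += points
        self.sign_index += 1
        if self.sign_index == len(self.levels[self.level_index]):
            self.level_index += 1
            self.sign_index = 0
            return True
        return False
```

For example, `LevelTracker([["A", "B"], ["C"]])` starts on sign "A", and the GUI could call `record_success()` each time the classifier accepts the user's sign.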