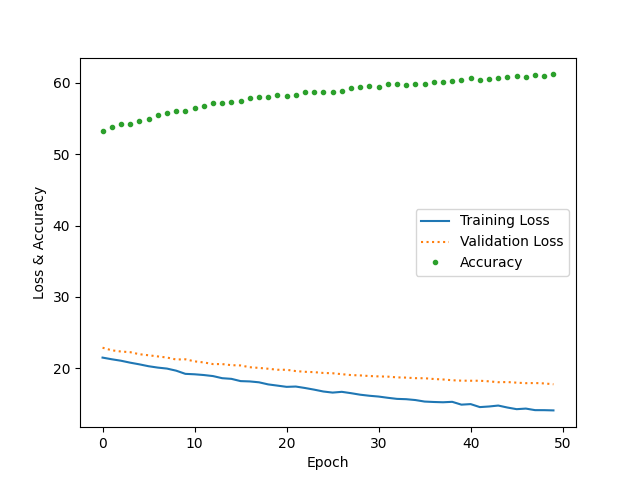

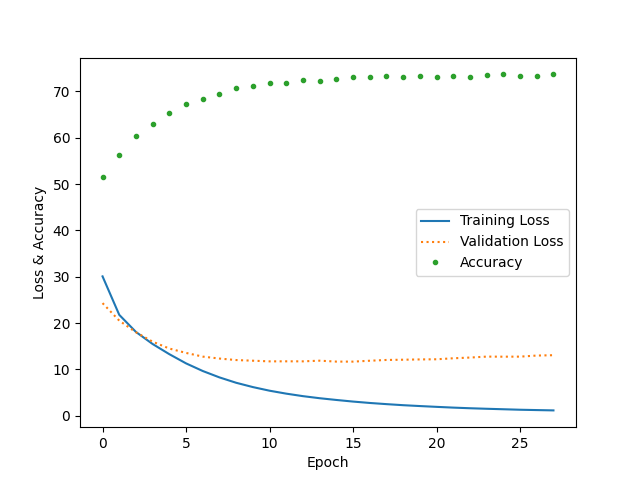

There are two models, both based on the encoder-decoder structure and used for French-English translation.

The first model is an encoder with a simple decoder. The second model adds an attention mechanism and supports two generation methods: greedy search and beam search.
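The difference between the two generation methods can be sketched on a toy example. This is a minimal illustration, not the code of these models: `step_fn`, `TOY`, and `toy_step` are hypothetical names, and the probabilities are made up so that the locally best first token does not lead to the best full sequence.

```python
import math

def beam_search(step_fn, start, end, beam_width, max_len):
    """Return up to `beam_width` hypotheses as (tokens, log_prob), best first.

    step_fn(tokens) is a hypothetical callback that returns
    {next_token: log_prob} given the tokens generated so far.
    """
    beams = [([start], 0.0)]
    for _ in range(max_len):
        candidates = []
        for tokens, score in beams:
            if tokens[-1] == end:
                # Finished hypotheses are carried over unchanged.
                candidates.append((tokens, score))
                continue
            for tok, logp in step_fn(tokens).items():
                candidates.append((tokens + [tok], score + logp))
        # Keep only the highest-scoring hypotheses.
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = candidates[:beam_width]
        if all(tokens[-1] == end for tokens, _ in beams):
            break
    return beams

def greedy_decode(step_fn, start, end, max_len):
    """Greedy search is beam search with a beam width of 1."""
    return beam_search(step_fn, start, end, beam_width=1, max_len=max_len)[0][0]

# Made-up next-token probabilities, keyed by the last generated token.
TOY = {
    "<s>": {"a": 0.6, "b": 0.4},
    "a": {"x": 0.5, "y": 0.5},
    "b": {"z": 0.9, "</s>": 0.1},
}

def toy_step(tokens):
    probs = TOY.get(tokens[-1], {"</s>": 1.0})
    return {tok: math.log(p) for tok, p in probs.items()}

greedy = greedy_decode(toy_step, "<s>", "</s>", max_len=5)
beams = beam_search(toy_step, "<s>", "</s>", beam_width=2, max_len=5)
```

Here greedy search commits to `a` because it is the most probable first token, while a beam of width 2 keeps `b` alive long enough to find the sequence with the higher overall probability. The beam also returns several candidate translations rather than one.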

In the training phase, teacher forcing is applied with a gradually decreasing ratio, so that the model converges quickly early on while the effect of exposure bias is reduced.
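One way to picture a gradually weakening schedule is a ratio that decays over epochs and decides, at each decoding step, whether the decoder is fed the ground-truth token or its own prediction. The sketch below assumes a linear decay from 1.0 to 0.0; the actual schedule used here is not stated, and the function names are hypothetical.

```python
import random

def teacher_forcing_ratio(epoch, num_epochs, start=1.0, end=0.0):
    """Linearly decay the teacher-forcing probability from `start` to `end`.

    The linear shape is an assumption made for illustration.
    """
    if num_epochs <= 1:
        return end
    frac = epoch / (num_epochs - 1)
    return start + (end - start) * frac

def choose_decoder_input(target_token, predicted_token, ratio, rng=random):
    """With probability `ratio`, feed the ground-truth token;
    otherwise feed the model's own previous prediction."""
    return target_token if rng.random() < ratio else predicted_token
```

Early epochs then rely mostly on ground-truth inputs, which speeds up convergence, and later epochs expose the decoder to its own outputs, which is closer to the conditions at inference time.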

So that the zero-padded positions carry no attention weight, only the necessary section of the weights (the part covering real tokens) is taken before the attention is calculated.
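The idea can be illustrated for a single sequence in plain Python. This is a simplified sketch rather than the code of these models: it assumes the raw attention scores and the true (unpadded) length are known, and `masked_attention_weights` is a hypothetical name.

```python
import math

def masked_attention_weights(scores, length):
    """Softmax over the first `length` scores only.

    Positions at index `length` and beyond are treated as padding and get
    weight 0. Assumes 1 <= length <= len(scores).
    """
    valid = scores[:length]                  # slice off the padded tail
    m = max(valid)                           # subtract the max for stability
    exps = [math.exp(s - m) for s in valid]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Pad back to the original length so shapes stay consistent.
    return weights + [0.0] * (len(scores) - length)

weights = masked_attention_weights([1.0, 1.0, 5.0, 5.0], length=2)
```

Even though the padded positions have the largest raw scores in this example, they are excluded before normalization, so all of the weight is distributed over the two real tokens.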

- For long sequences, the attention mechanism can significantly improve model performance.
- Beam search can increase the diversity of results.
- Teacher-forcing can effectively solve the problem of slow convergence and instability.