These are the notes and links to resources that I am putting together while learning AWS. The notes are based on a YouTube playlist. You can check out the lectures with this link (https://www.youtube.com/playlist?list=PLRAV69dS1uWSj3ltu0ym1LwWg4509PZ0N)

To start off, you may be wondering what the cloud is and how it differs from on-premise infrastructure, which means running your own physical servers at a location you manage. Let's discuss it:

Let's discuss the points given in the above image:

Since we set up our own physical servers in on-premise infrastructure, it requires a high up-front investment, first to find a place for the servers and then to purchase them. The upside is that the resources are deployed in-house, which means you have complete control over the data. One major issue with this infrastructure, however, is that it is very hard to scale because it depends on physical servers. Imagine having to purchase new servers every time demand grows while also maintaining the old ones, which is just as important.

As we go through this part, you will find many advantages of using the cloud over physical servers. First, it works on a pay-as-you-go model: we pay according to the storage or technology we need and the number of users we are handling, which is better than making a huge initial investment in physical servers. The deployment does take place on a third-party platform, which can mean losing some authority over your data and may hurt credibility. But one of the biggest advantages of moving to the cloud is scalability and elasticity. You may have seen live streams on different platforms reach 1 to 2 crore (10 to 20 million) viewers. Numbers like these are handled by the flexibility of cloud infrastructure, which lets us scale up when there are more users and scale down when there are fewer viewers, and that is a game changer.
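To make the pay-as-you-go idea concrete, here is a minimal sketch that compares the server-hours you must provision on-premise (sized for the peak, all day) with the server-hours you would actually use in an elastic setup. The traffic numbers, the `users_per_server` capacity, and the function names are all made up for illustration; real pricing depends on many more factors.

```python
import math


def servers_needed(users, users_per_server):
    """Number of servers required to handle a given number of users."""
    return math.ceil(users / users_per_server)


def provisioned_server_hours(hourly_users, users_per_server):
    """On-premise: capacity is fixed at the peak for every hour of the day."""
    peak = servers_needed(max(hourly_users), users_per_server)
    return peak * len(hourly_users)


def used_server_hours(hourly_users, users_per_server):
    """Pay-as-you-go: each hour only needs the servers that hour requires."""
    return sum(servers_needed(users, users_per_server) for users in hourly_users)


# Hypothetical day: 22 quiet hours and a 2-hour live-stream spike.
hourly_users = [1_000] * 22 + [200_000] * 2

print("provisioned:", provisioned_server_hours(hourly_users, 1_000))
print("actually used:", used_server_hours(hourly_users, 1_000))
```

The gap between the two numbers is the idle capacity that on-premise hardware would still have to be bought and maintained for.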

With the above information, you now understand why we are moving towards the cloud, and why sometimes we are not (it depends on your requirements). Now let's discuss the different cloud services:

IaaS provides virtualized computing resources over the internet. It allows users to rent infrastructure components like virtual machines, storage, and networking resources on a pay-per-use basis.

Example: Amazon Web Services (AWS) EC2 (Elastic Compute Cloud).

Explanation: AWS EC2 allows users to launch and manage virtual servers (EC2 instances) in the cloud. Users can choose the instance type, operating system, and other configurations based on their requirements.

PaaS provides a platform allowing customers to develop, run, and manage applications without dealing with the underlying infrastructure. It offers development and deployment environments with built-in tools and services.

Example: Heroku, Netlify, etc.

Explanation: Heroku is a cloud platform that allows developers to deploy, manage, and scale web applications easily. It supports various programming languages and frameworks, providing a seamless deployment experience without worrying about infrastructure management.

SaaS delivers software applications over the internet on a subscription basis. Users can access these applications via a web browser without needing to install or maintain any software locally.

Example: YouTube

Explanation: YouTube exemplifies the SaaS model by providing users with access to a software application over the internet, without requiring installation or maintenance, and by offering continuous updates.

FaaS is a cloud computing service that allows developers to deploy individual functions or pieces of code in the cloud and execute them in response to events or triggers without managing server infrastructure.

Example: AWS Lambda.

Explanation: AWS Lambda allows developers to run code in response to events such as changes to data in Amazon S3 buckets, updates to DynamoDB tables, HTTP requests via Amazon API Gateway, etc. Developers upload their code and Lambda automatically scales and executes it in response to events.
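As a rough illustration of the FaaS model, the sketch below shows what a Python function for Lambda might look like when triggered by an S3 upload. The `(event, context)` handler signature is the usual Python convention for Lambda; the bucket name, the object key, and the trimmed-down `sample_event` are hypothetical and exist only so the function can be tried locally.

```python
def lambda_handler(event, context):
    """Entry point invoked once per event; collects the uploaded objects."""
    uploaded = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        key = record["s3"]["object"]["key"]
        uploaded.append(f"s3://{bucket}/{key}")
    return {"statusCode": 200, "processed": uploaded}


# Local dry run with a hand-written, heavily trimmed S3 event (assumption:
# real events carry many more fields than shown here).
sample_event = {
    "Records": [
        {"s3": {"bucket": {"name": "example-bucket"},
                "object": {"key": "photos/cat.png"}}}
    ]
}

print(lambda_handler(sample_event, None))
```

Notice that there is no server setup anywhere in the code: you only write the function, and the platform decides when and where to run it.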

In cloud computing, control over the cloud refers to the degree of authority and management a user or organization has over their cloud infrastructure and resources. Control can vary based on several factors, including the type of cloud deployment (private, public, hybrid, or multi-cloud) and the level of service provided by the cloud provider. Let's define each type of cloud deployment in the context of control:

Private cloud:

- You own the hardware
- You own the software
- Only you use it
- Security compliance

Public cloud:

- Cloud resources are shared among a group of people
- A cloud service provider is involved
- Control is in the hands of the cloud service provider
- Users access virtual machines through a web-based console

Hybrid cloud:

- Best of both private and public
- Connection through the internet and a shared link

Multi-cloud:

- Use multiple cloud service providers to manage the workload of your organisation, e.g. AWS and Azure together

AWS is an Amazon company.

It is an on-demand cloud.

You pay for what you use.

You are charged for compute, storage, and data transfer.

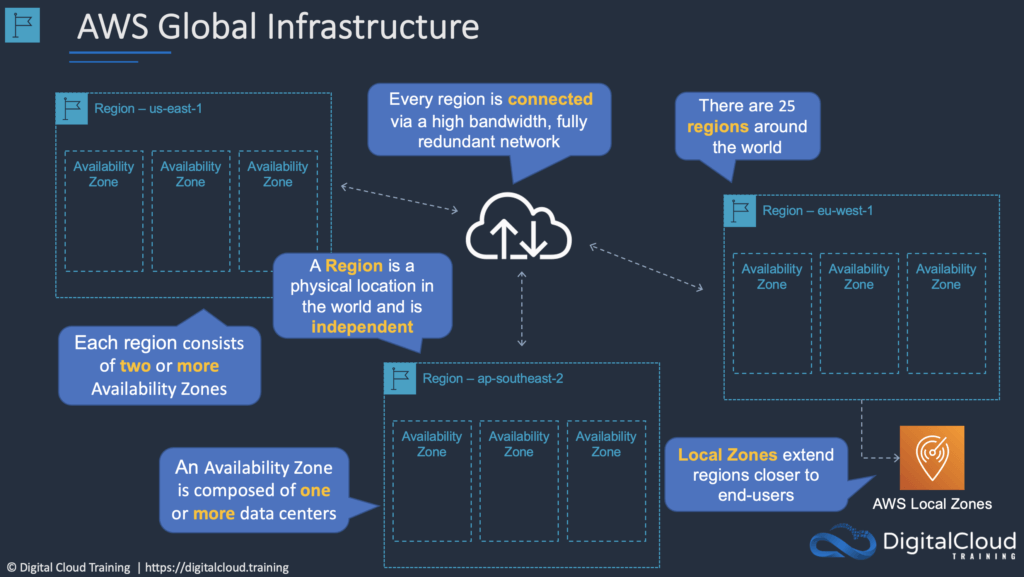

Amazon Web Services (AWS) is known for its extensive and robust global infrastructure, which enables customers to deploy their applications and services with low latency, high availability, and scalability across the globe. Here's a brief overview of the AWS global infrastructure:

- AWS organizes its global network of data centers into regions. A region is a geographical area that consists of multiple Availability Zones (AZs).

- Each region is isolated from other regions to provide fault tolerance and stability. AWS currently has multiple regions worldwide, including regions in North America, South America, Europe, Asia Pacific, and the Middle East.

- An Availability Zone consists of one or more distinct data centers within a region and is engineered to be isolated from failures in other Availability Zones.

- Each AZ is equipped with independent power, cooling, and networking infrastructure to ensure high availability and fault tolerance.

- By deploying resources across multiple AZs within a region, customers can achieve redundancy and improve the resilience of their applications.

- In addition to regions and Availability Zones, AWS has a global network of edge locations that are used for content delivery and caching through the Amazon CloudFront service.

- Edge locations are distributed worldwide and are strategically located to deliver content to end-users with low latency and high data transfer speeds.

- AWS operates a high-speed global network backbone that interconnects its regions and edge locations.

- This network backbone ensures fast and reliable communication between AWS services, regions, and edge locations, enabling customers to transfer data and access resources efficiently.

- AWS Direct Connect provides dedicated network connections between customer data centers, office locations, or colocation environments and AWS.

- By using AWS Direct Connect, customers can establish private and secure connections to AWS, bypassing the public internet, and achieve predictable network performance and reduced latency.

- AWS has introduced Local Zones and Wavelength Zones to bring AWS services closer to end-users and specific geographic locations.

- Local Zones are extensions of AWS regions that are located closer to metropolitan areas, enabling customers to run latency-sensitive applications with single-digit millisecond latency.

- Wavelength Zones are deployed at the edge of telecommunication networks and are optimized for ultra-low latency applications, such as mobile edge computing and 5G-enabled services.
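To illustrate the point above about deploying across multiple AZs for redundancy, here is a small sketch that spreads instances over zones round-robin and then checks which ones survive if a single zone fails. The instance names and the helper functions are hypothetical; the zone names follow the usual region-plus-letter pattern but are used here only as labels, and no AWS API is called.

```python
from itertools import cycle


def spread_across_zones(instance_names, zones):
    """Assign instances to zones round-robin so no single zone holds them all."""
    zone_cycle = cycle(zones)
    return {name: next(zone_cycle) for name in instance_names}


def survivors(placement, failed_zone):
    """Instances that keep running when one Availability Zone goes down."""
    return sorted(name for name, zone in placement.items() if zone != failed_zone)


zones = ["ap-south-1a", "ap-south-1b", "ap-south-1c"]
placement = spread_across_zones(["web-1", "web-2", "web-3", "web-4"], zones)

print(placement)
print(survivors(placement, "ap-south-1a"))
```

If all four instances had been placed in one zone, a failure of that zone would take the whole application down; spread out, at least some of them keep serving traffic.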

Identity and Access Management, or simply IAM, is a web service that helps you securely control access to AWS resources. It allows you to manage users, groups, and permissions within your AWS account. Key features of IAM include user management, group management, role-based access, multi-factor authentication, policy management, and access key management. We will look at all of these features in more detail. First, let's understand the IAM architecture.

A user is first assigned to a group and may be given a particular role. The user then gets access to AWS services based on the policies defined for that group. A group is a way to organise users. It is good practice to attach policies to groups rather than to individual users. Roles are not permanently assigned: they are assumed when needed and provide temporary credentials.

The root user has full access to all the services.

The root user is essentially the owner of the account.

Avoid using the root user for everyday tasks, and protect it with multi-factor authentication (MFA).

A new IAM user has no permissions to start with.

You can create up to 5,000 IAM users per AWS account (the default quota).

As discussed earlier, prefer managing permissions through groups.

## Create Groups

Add a policy to the group.

Users get their permissions through the group; the policy itself is written in JSON.

Give each group the least privilege possible.
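Since group permissions are expressed as a JSON policy, here is a minimal sketch of what a least-privilege policy document could look like, built as a Python dictionary and printed as JSON. The bucket name and the helper function are hypothetical; the `Version`, `Statement`, `Effect`, `Action`, and `Resource` keys follow the standard IAM policy layout.

```python
import json


def read_only_bucket_policy(bucket_name):
    """Policy that only allows listing one bucket and reading its objects."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:ListBucket"],
                "Resource": [
                    f"arn:aws:s3:::{bucket_name}",      # the bucket itself
                    f"arn:aws:s3:::{bucket_name}/*",    # objects inside it
                ],
            }
        ],
    }


policy = read_only_bucket_policy("example-reports-bucket")
print(json.dumps(policy, indent=2))
```

The printed JSON is what you would paste into the policy editor when attaching a policy to a group. It grants read access to a single bucket and nothing else, which is the spirit of least privilege.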

In AWS Identity and Access Management (IAM), there are primarily six types of policies:

Identity-based policies are attached to IAM identities (users, groups, or roles) and specify permissions for those identities.

Identity-based policies can be managed policies (either AWS managed or customer managed) or inline policies.

They define what actions are allowed or denied on which AWS resources.

Resource-based policies are attached to AWS resources such as Amazon S3 buckets, Lambda functions, or SQS queues.

Resource-based policies specify who can access the resource and what actions they can perform.

They are defined and managed alongside the resource to which they apply.

Permission boundaries are advanced IAM policies that can be used to set the maximum permissions that an IAM entity (user or role) can have.

A boundary does not grant any permissions on its own; it only limits the permissions that identity-based policies can grant.

Permission boundaries can be used to ensure that IAM entities cannot be granted more permissions than allowed by the boundary.

Service control policies (SCPs) are a feature of AWS Organizations, which is used to centrally manage and enforce security policies across multiple AWS accounts in an organization.

SCPs are attached to organizational units (OUs) or the entire organization and specify the maximum permissions that can be applied to the member accounts.

They are used to set guardrails and restrict what actions and resources are accessible within member accounts.

Access control lists (ACLs) are legacy access control mechanisms that are attached to Amazon S3 buckets and can be used to manage access to the bucket and its contents.

ACLs are not as flexible as IAM policies and are generally not recommended for managing access to S3 buckets unless there are specific requirements.

Session policies are temporary policies that are passed when a session is created, for example when assuming a role using AWS Security Token Service (STS).

They are similar to inline policies, but they only limit the permissions that the session gets from the role's identity-based policies; they do not grant additional permissions.

Session policies are created dynamically and last only for the duration of the session.

These six types of IAM policies provide various mechanisms for controlling access to AWS resources and managing permissions across different AWS services and features.
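The way these policy types combine can be sketched with a deliberately simplified model: an action is allowed only if the identity-based policy allows it, every guardrail that is present (permission boundary, SCP) also allows it, and nothing explicitly denies it. This is an assumption-laden toy, not the real IAM evaluation logic: it ignores resource-based policies, session policies, wildcards, resources, and conditions, and the function name and arguments are hypothetical.

```python
def is_allowed(action, identity_allows, boundary=None, scp=None,
               explicit_denies=frozenset()):
    """Toy policy check on plain action names (no wildcards or resources)."""
    if action in explicit_denies:
        return False          # an explicit deny always wins
    if action not in identity_allows:
        return False          # nothing grants it: implicit deny
    if boundary is not None and action not in boundary:
        return False          # the boundary caps what the identity can get
    if scp is not None and action not in scp:
        return False          # the organization's guardrail caps it too
    return True


identity_allows = {"s3:GetObject", "s3:PutObject", "ec2:StartInstances"}
boundary = {"s3:GetObject", "s3:PutObject"}

print(is_allowed("s3:GetObject", identity_allows, boundary))        # allowed by both
print(is_allowed("ec2:StartInstances", identity_allows, boundary))  # cut off by boundary
```

The second call shows why boundaries and SCPs are described as limits: the identity policy allows `ec2:StartInstances`, but the boundary does not, so the effective answer is no.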

Throughout our discussion, we have talked about some of the best practices for the actions we perform. So let me compile all of them together.

Use multifactor authentication

Do not use root account

Rotate keys

Give the least privileges possible when writing policies

IAM Access Analyzer: a tool to generate least-privilege policies based on access activity. (Not widely used in organisations)

Regularly remove users, roles, permissions, policies, and credentials that are no longer used.

Conditions such as IP range or geolocation can be applied
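The last practice, restricting access with a condition such as an IP range, can be sketched as follows. The statement uses the `NotIpAddress` operator with the `aws:SourceIp` condition key to deny requests from outside an allowed range. The CIDR range is a documentation-only example, and the two helper functions are hypothetical: they only mimic the check locally with Python's `ipaddress` module and are not how AWS evaluates the policy internally.

```python
import ipaddress

# Deny everything unless the request comes from the allowed range.
policy_statement = {
    "Effect": "Deny",
    "Action": "*",
    "Resource": "*",
    "Condition": {"NotIpAddress": {"aws:SourceIp": ["203.0.113.0/24"]}},
}


def source_ip_in_ranges(source_ip, cidr_ranges):
    """True if the address falls inside any of the given CIDR ranges."""
    ip = ipaddress.ip_address(source_ip)
    return any(ip in ipaddress.ip_network(cidr) for cidr in cidr_ranges)


def denied_by_statement(source_ip, statement):
    """Local imitation of the NotIpAddress condition in the statement."""
    allowed_ranges = statement["Condition"]["NotIpAddress"]["aws:SourceIp"]
    return not source_ip_in_ranges(source_ip, allowed_ranges)


print(denied_by_statement("203.0.113.7", policy_statement))   # inside the range
print(denied_by_statement("198.51.100.1", policy_statement))  # outside the range
```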

To get a hands-on feel for IAM, you can watch this YouTube video: (https://www.youtube.com/watch?v=4jLwjylseXA)

Amazon Elastic Compute Cloud (Amazon EC2) provides on-demand, scalable computing capacity in the Amazon Web Services (AWS) Cloud. Using Amazon EC2 reduces hardware costs so you can develop and deploy applications faster. You can use Amazon EC2 to launch as many or as few virtual servers as you need, configure security and networking, and manage storage. You can add capacity (scale up) to handle compute-heavy tasks, such as monthly or yearly processes, or spikes in website traffic. When usage decreases, you can reduce capacity (scale down) again.

In terms of computing, EC2 offers everything a computing device can offer, along with the flexibility of a virtual environment. It also allows users to configure their instances to their requirements, i.e. choose the CPU, memory, and storage according to the needs of the current task. Users can even terminate an instance once its task is complete and it is no longer required. For all these scalable resources, AWS bills you at the end of every month, and the amount depends entirely on your usage.

EC2 allows you to rent virtual computers, and it is one of the easiest ways to provision servers on the AWS Cloud. EC2 has resizable capacity. It offers secure, reliable, high-performance, and cost-effective infrastructure to meet demanding business needs.

Deploying Application: On an EC2 instance, you can deploy applications packaged as .jar, .war, or .ear files without maintaining the underlying physical infrastructure.

Scaling Application: Once you have deployed your web application on an EC2 instance, you can scale it according to demand by scaling the EC2 instances.

Deploying The ML Models: You can train and deploy your ML models on EC2 instances because EC2 offers compute, networking (up to 400 Gbps), and storage services purpose-built to optimize the price performance for ML projects.

Hybrid Cloud Environment: You can deploy your web application on an EC2 instance and connect it to a database that is deployed on on-premises servers.

Cost-Effective: EC2 instances are cost-effective, so you can, for example, deploy a gaming application on them.

Different Amazon EC2 instance types are designed for certain activities. Consider the unique requirements of your workloads and applications when choosing an instance type. This might include needs for computing, memory, or storage.

The AWS EC2 Instance Types are as follows:

General Purpose Instances

Compute Optimized Instances

Memory-Optimized Instances

Storage Optimized Instances

Accelerated Computing Instances
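As a rough aid for matching a workload to one of the categories above, here is a small lookup sketch. The decision rule and function name are made up for illustration, and the instance families listed are only a few well-known examples of each category, not a complete or current catalogue.

```python
# A few example instance families per category (illustrative, not exhaustive).
EXAMPLE_FAMILIES = {
    "general purpose": ["t3", "m5"],
    "compute optimized": ["c5"],
    "memory optimized": ["r5"],
    "storage optimized": ["i3"],
    "accelerated computing": ["p3", "g4dn"],
}


def suggest_category(dominant_resource=None, needs_gpu=False):
    """Pick a category from the workload's dominant need (hypothetical rule)."""
    if needs_gpu:
        return "accelerated computing"
    by_resource = {
        "cpu": "compute optimized",
        "memory": "memory optimized",
        "storage": "storage optimized",
    }
    # Balanced or unknown workloads fall back to general purpose.
    return by_resource.get(dominant_resource, "general purpose")


category = suggest_category("memory")
print(category, EXAMPLE_FAMILIES[category])
```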

EC2 provides its users with a true virtual computing platform, where they can perform various operations and even launch another EC2 instance from within this virtual environment. This can also help with the security of the virtual machines. EC2 lets us not only create the environment but also customize it to our requirements at any point during the life span of the virtual machine. Amazon EC2 comes with a set of default AMI (Amazon Machine Image) options that support various operating systems along with some pre-configured resources such as storage (CPU and memory are set by the instance type you choose). Besides these AMI options, we can also create an AMI that combines default and user-defined configurations. We can store this user-defined AMI for future use, so that next time we do not have to configure a new AMI from scratch; we can simply reuse the stored one when creating a new EC2 machine.

Amazon EC2 offers a wide range of operating systems to choose from when selecting your AMI. Beyond these built-in options, users can also bring their own operating system images and select them as the AMI when launching an EC2 instance. Currently, the following commonly used operating systems are available on the EC2 console:

Amazon Linux

Windows Server

Ubuntu Server

SUSE Linux

Red Hat Linux

AWS EC2 Software

AWS is a leading player in the cloud computing market, in part because of the variety of options available on EC2 for its users. It lets users choose from a wide range of software to run on their EC2 machines. This software is offered through AWS Marketplace on the AWS platform, where packages such as SAP, LAMP, and Drupal are available.

EC2 lets us scale up or scale down as needed, so dynamic workloads can be handled easily. And because of the flexibility of volumes and snapshots, it is highly reliable for its users. Thanks to this scalability, many organizations such as Flipkart and Amazon rely on it whenever very heavy traffic hits their portals.
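The scale-up and scale-down behaviour described above can be sketched with a simple proportional rule: grow or shrink the number of instances so that average CPU usage moves towards a target. This is only an illustrative formula under stated assumptions, not a description of how any AWS scaling service is implemented; the function name, the default limits, and the CPU figures are hypothetical.

```python
import math


def desired_capacity(current_instances, avg_cpu_percent, target_cpu_percent,
                     min_instances=1, max_instances=10):
    """Scale the fleet in proportion to load, clamped to the allowed range."""
    raw = math.ceil(current_instances * avg_cpu_percent / target_cpu_percent)
    return max(min_instances, min(max_instances, raw))


# Traffic spike: 4 instances running hot at 90% CPU, target is 50%.
print(desired_capacity(4, 90, 50))

# Quiet period: the same 4 instances idle at 20% CPU.
print(desired_capacity(4, 20, 50))
```

The clamp matters in practice: the minimum keeps the application available during quiet periods, and the maximum keeps a traffic spike from turning into an unbounded bill.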

In this section, we will be deploying Jenkins on AWS EC2. Feel free to follow along.