nGraph Library

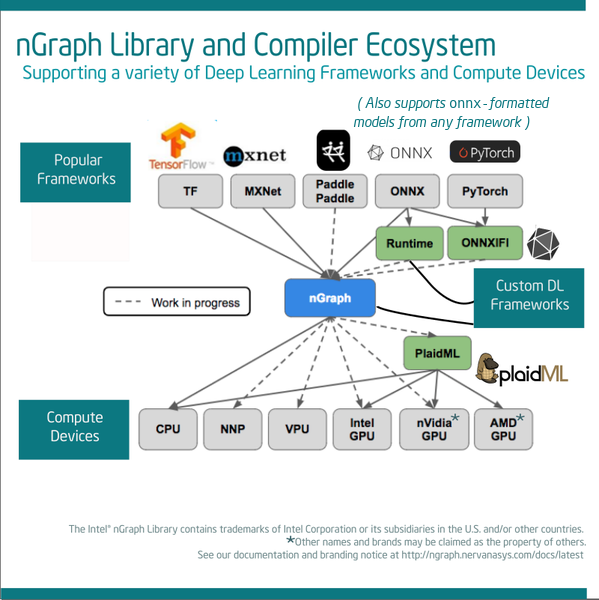

Welcome to the open-source repository for the Intel® nGraph Library. The code base provides a compiler and a runtime suite of tools (APIs) that give developers flexibility in their software design: you can create or customize a scalable solution using any framework while avoiding the device-level hardware lock-in that is common with many AI vendors. A neural network model compiled with nGraph can run on any of our currently supported backends, and it will be able to run on any backend we support in the future with minimal disruption to your model. With nGraph, you can co-evolve your software and hardware capabilities to stay at the forefront of your industry.

The nGraph Compiler is Intel's graph compiler for artificial neural networks. Documentation in this repo describes how you can program any framework to run training and inference computations on a variety of backends, including Intel® Architecture Processors (CPUs), Intel® Nervana™ Neural Network Processors (NNPs), cuDNN-compatible graphics cards (GPUs), custom VPUs like [Movidius], and many others. The default CPU backend also provides an interactive Interpreter mode that you can use to zero in on a deep learning (DL) model and create custom nGraph optimizations that further accelerate training or inference.

nGraph provides both a C++ API for framework developers and a Python API that can run inference on models imported from ONNX.

See the Release Notes for recent changes.

| Framework | Bridge available? | ONNX support? |
|---|---|---|
| TensorFlow* | yes | yes |
| MXNet* | yes | yes |
| PaddlePaddle | yes | yes |
| PyTorch* | no | yes |
| Chainer* | no | yes |
| CNTK* | no | yes |
| Caffe2* | no | yes |

| Backend | Current support | Future support |
|---|---|---|
| Intel® Architecture CPU | yes | yes |
| Intel® Nervana™ Neural Network Processor (NNP) | yes | yes |
| Intel Movidius™ Myriad™ 2 VPUs | coming soon | yes |
| Intel® Architecture GPUs | via PlaidML | yes |
| AMD* GPUs | via PlaidML | yes |
| NVIDIA* GPUs | via PlaidML | some |
| Field Programmable Gate Arrays (FPGA) | no | yes |

Documentation

See our install docs for how to get started.

For this early release, we provide framework integration guides to compile MXNet- and TensorFlow-based projects. If you already have a trained model, we've put together a getting started guide on how to import a deep learning model and start working with the nGraph APIs.

Support

Please submit your questions, feature requests and bug reports via GitHub issues.

How to Contribute

We welcome community contributions to nGraph. If you have an idea for improving the library:

- See the contrib guide for code formatting and style guidelines.

- Share your proposal via GitHub issues.

- Ensure you can build the product and run all the examples with your patch.

- In the case of a larger feature, create a test.

- Submit a pull request.

- Make sure your PR passes all CI tests. Note: our Travis-CI service runs only on a CPU backend on Linux. We will run additional tests in other environments.

- We will review your contribution and may provide feedback if any additional fixes or modifications are necessary. Once accepted, your pull request will be merged into the repository.