Unofficial PyTorch reimplementation of minimal-hand (CVPR2020).

You can also find it on YouTube or bilibili.

This project reimplements the following components:

- Training (DetNet) and Evaluation Code

- Shape Estimation

- Pose Estimation: Instead of the IKNet from the original paper, an analytical inverse kinematics method is used.

Official project link: [minimal-hand]

- 2021/08/22 Many users get errors when creating the environment from the .yaml file; you may refer to here.

- 2021/03/09 Updated `utils/LM.py`: time cost dropped from 12 s/item to 1.57 s/item.

- 2021/03/12 Updated `utils/LM.py`: time cost dropped from 1.57 s/item to 0.27 s/item.

- 2021/03/17 Real-time performance is achieved when using PSO to estimate shape; coming soon.

- 2021/03/20 Added PSO to estimate shape. AUC decreased by about 0.01 on the STB and RHD datasets and increased slightly on the EO and DO datasets. Modified `utils/vis.py` to improve real-time performance.

- 2021/03/24 Fixed some errors in calculating AUC. Updated the 3D PCK AUC difference.

- 2021/06/14 A new method that estimates shape parameters with a fully connected neural network has been added. It was completed by @maitetsu as part of his undergraduate graduation project. Please refer to ShapeNet.md for details. Thanks to @kishan1823 and @EEWenbinWu for pointing out the mistake. There are a few differences between the manopth I used and the official manopth; see issue 11 for more details. manopth/rotproj.py is the modified rotproj.py. This could achieve much faster real-time performance!

- Retrieve the code

git clone https://github.com/MengHao666/Minimal-Hand-pytorch

cd Minimal-Hand-pytorch

- Create and activate the virtual environment with Python dependencies

conda env create --file=environment.yml

conda activate minimal-hand-torch

- Download the MANO model from here and unzip it.

- Create an account by clicking Sign Up and provide your information.

- Download Models and Code (the downloaded file should have the format mano_v*_*.zip). Note that all code and data from this download fall under the MANO license.

- Unzip and copy the content of the models folder into the `mano` folder.

- Your structure should look like this:

    Minimal-Hand-pytorch/
        mano/
            models/
            webuser/
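The layout above can be checked before running anything else. The following is a minimal sketch, assuming `models/` and `webuser/` both sit directly under `mano/` in the project root; `missing_mano_dirs` is a hypothetical helper, not part of this repository.

```python
from pathlib import Path

# Sub-directories expected under the project root (assumed from the layout above).
EXPECTED_DIRS = ("mano/models", "mano/webuser")


def missing_mano_dirs(project_root):
    """Return the expected MANO sub-directories that do not exist yet."""
    root = Path(project_root)
    return [d for d in EXPECTED_DIRS if not (root / d).is_dir()]


if __name__ == "__main__":
    missing = missing_mano_dirs(".")
    if missing:
        print("Missing MANO folders:", ", ".join(missing))
    else:
        print("MANO folder layout looks complete.")
```

Run it from the project root; an empty result means both folders are in place.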

- CMU HandDB part1 ; part2

- Rendered Handpose Dataset

- GANerated Hands Dataset

- STB Dataset, or you can find it here.

  STB_supp: for license reasons, the download link can be found in bihand.

- DO_supp: Google Drive or Baidu Pan (s892)

- EO_supp: Google Drive or Baidu Pan (axkm)

- Create a data directory and extract all the above datasets and additional materials into it.

Now your data folder structure should look like this:

    data/
        CMU/
            hand143_panopticdb/
                datasets/
                ...
            hand_labels/
                datasets/
                ...
        RHD/
            RHD_published_v2/
                evaluation/
                training/
                view_sample.py
                ...
        GANeratedHands_Release/
            data/
            ...
        STB/
            images/
                B1Counting/
                    SK_color_0.png
                    SK_depth_0.png
                    SK_depth_seg_0.png  <-- merged from STB_supp
                    ...
                ...
            labels/
                B1Counting_BB.mat
                ...
        dexter+object/
            calibration/
            bbox_dexter+object.csv
            DO_pred_2d.npy
            data/
                Grasp1/
                    annotations/
                        Grasp13D.txt
                        my_Grasp13D.txt
                        ...
                    ...
                Grasp2/
                    annotations/
                        Grasp23D.txt
                        my_Grasp23D.txt
                        ...
                    ...
                Occlusion/
                    annotations/
                        Occlusion3D.txt
                        my_Occlusion3D.txt
                        ...
                    ...
                Pinch/
                    annotations/
                        Pinch3D.txt
                        my_Pinch3D.txt
                        ...
                    ...
                Rigid/
                    annotations/
                        Rigid3D.txt
                        my_Rigid3D.txt
                        ...
                    ...
                Rotate/
                    annotations/
                        Rotate3D.txt
                        my_Rotate3D.txt
                        ...
                    ...
        EgoDexter/
            preview/
            data/
                Desk/
                    annotation.txt_3D.txt
                    my_annotation.txt_3D.txt
                    ...
                Fruits/
                    annotation.txt_3D.txt
                    my_annotation.txt_3D.txt
                    ...
                Kitchen/
                    annotation.txt_3D.txt
                    my_annotation.txt_3D.txt
                    ...
                Rotunda/
                    annotation.txt_3D.txt
                    my_annotation.txt_3D.txt
                    ...

- All code and data from these downloads fall under their own licenses.

- DO refers to the "dexter+object" dataset; EO refers to the "EgoDexter" dataset.

- `DO_supp` and `EO_supp` are modified from the original ones.

- `DO_pred_2d.npy` contains the 2D predictions from the 2D part of DetNet.

- Some labels of DO and EO are obviously wrong when projected onto the image plane (you can find some examples with the original labels in dexter_object.py or egodexter.py) and should therefore be omitted. This is why `my_{}3D.txt` and `my_annotation.txt_3D.txt` are provided.
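To illustrate what "obviously wrong when projected onto the image plane" can mean, here is a minimal sketch of one possible sanity check. It assumes a pinhole camera and rejects a label when any joint lies behind the camera or projects outside the image. The function names and the intrinsics in the usage note are hypothetical; the criteria actually used to build the `my_*` files may differ.

```python
import numpy as np


def project_points(xyz, K):
    """Project (N, 3) camera-space points to (N, 2) pixel coordinates (pinhole model)."""
    xyz = np.asarray(xyz, dtype=float)
    uvw = xyz @ np.asarray(K, dtype=float).T
    return uvw[:, :2] / uvw[:, 2:3]


def label_is_plausible(xyz, K, width, height):
    """True if every joint is in front of the camera and projects inside the image."""
    xyz = np.asarray(xyz, dtype=float)
    if np.any(xyz[:, 2] <= 0):  # joints at or behind the camera plane
        return False
    uv = project_points(xyz, K)
    inside = (
        (uv[:, 0] >= 0) & (uv[:, 0] < width)
        & (uv[:, 1] >= 0) & (uv[:, 1] < height)
    )
    return bool(inside.all())
```

For example, with illustrative intrinsics `K = [[600, 0, 320], [0, 600, 240], [0, 0, 1]]` and a 640x480 image, a joint at `(0, 0, 500)` passes, while one at `(1000, 0, 500)` projects far outside the frame and fails.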

- my_results: Google Drive or Baidu Pan (2rv7)

- Extract it in the project folder.

- The parameters used in the real-time demo can be found on google_drive or baidu (un06). They were trained together with the loss from Hand-BMC-pytorch.

python demo.py

python demo_dl.py

Run the training code

python train_detnet.py --data_root data/

Run the evaluation code

python train_detnet.py --data_root data/ --datasets_test testset_name_to_test --evaluate --evaluate_id checkpoints_id_to_load

or use my results

python train_detnet.py --checkpoint my_results/checkpoints --datasets_test "rhd" --evaluate --evaluate_id 106

python train_detnet.py --checkpoint my_results/checkpoints --datasets_test "stb" --evaluate --evaluate_id 71

python train_detnet.py --checkpoint my_results/checkpoints --datasets_test "do" --evaluate --evaluate_id 68

python train_detnet.py --checkpoint my_results/checkpoints --datasets_test "eo" --evaluate --evaluate_id 101
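The four evaluation commands above differ only in the dataset name and checkpoint id, so they can be generated from a small table. This is a minimal sketch; the mapping is copied from the commands above, and `build_eval_command` is a hypothetical helper, not part of this repository.

```python
import shlex

# Dataset name -> checkpoint id, copied from the evaluation commands above.
EVAL_IDS = {"rhd": 106, "stb": 71, "do": 68, "eo": 101}


def build_eval_command(dataset, checkpoint_dir="my_results/checkpoints"):
    """Return the argument list that evaluates one test set."""
    return [
        "python", "train_detnet.py",
        "--checkpoint", checkpoint_dir,
        "--datasets_test", dataset,
        "--evaluate",
        "--evaluate_id", str(EVAL_IDS[dataset]),
    ]


if __name__ == "__main__":
    # Print the commands; pass each list to subprocess.run(...) to execute it.
    for name in EVAL_IDS:
        print(shlex.join(build_eval_command(name)))
```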

Run the shape optimization code. This can be very time-consuming when the weight parameter is small.

python optimize_shape.py --weight 1e-5

or use my results

python optimize_shape.py --path my_results/out_testset/

Run the following code, which uses an analytical inverse kinematics method.

python aik_pose.py

or use my results

python aik_pose.py --path my_results/out_testset/
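As a rough illustration of the idea behind analytical inverse kinematics, the sketch below computes the rotation that aligns one bone direction with another using Rodrigues' formula. It shows only this basic building block under simplifying assumptions (a single bone, no twist, no kinematic chain); it is not the code in `utils/AIK.py`, and `rotation_between` is a hypothetical name.

```python
import numpy as np


def rotation_between(a, b, eps=1e-8):
    """Return the 3x3 rotation matrix that maps direction a onto direction b."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    a = a / np.linalg.norm(a)
    b = b / np.linalg.norm(b)
    v = np.cross(a, b)        # rotation axis scaled by sin(angle)
    s = np.linalg.norm(v)     # sin(angle)
    c = float(np.dot(a, b))   # cos(angle)
    if s < eps:
        if c > 0:
            return np.eye(3)  # already aligned
        # Opposite directions: rotate by pi about any axis perpendicular to a.
        helper = np.array([1.0, 0.0, 0.0]) if abs(a[0]) < 0.9 else np.array([0.0, 1.0, 0.0])
        axis = np.cross(a, helper)
        axis /= np.linalg.norm(axis)
        return 2.0 * np.outer(axis, axis) - np.eye(3)
    vx = np.array([[0.0, -v[2], v[1]],
                   [v[2], 0.0, -v[0]],
                   [-v[1], v[0], 0.0]])
    # Rodrigues' formula written in terms of the unnormalised axis v.
    return np.eye(3) + vx + (vx @ vx) * ((1.0 - c) / s**2)
```

Given a template bone direction and the direction between two predicted joints, `rotation_between(template_dir, predicted_dir)` yields a rotation that points the template bone at the prediction. A full solver would additionally resolve the rotation about the bone axis and express each rotation relative to its parent joint.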

Run the following code to see my results

python plot.py --path my_results/out_loss_auc

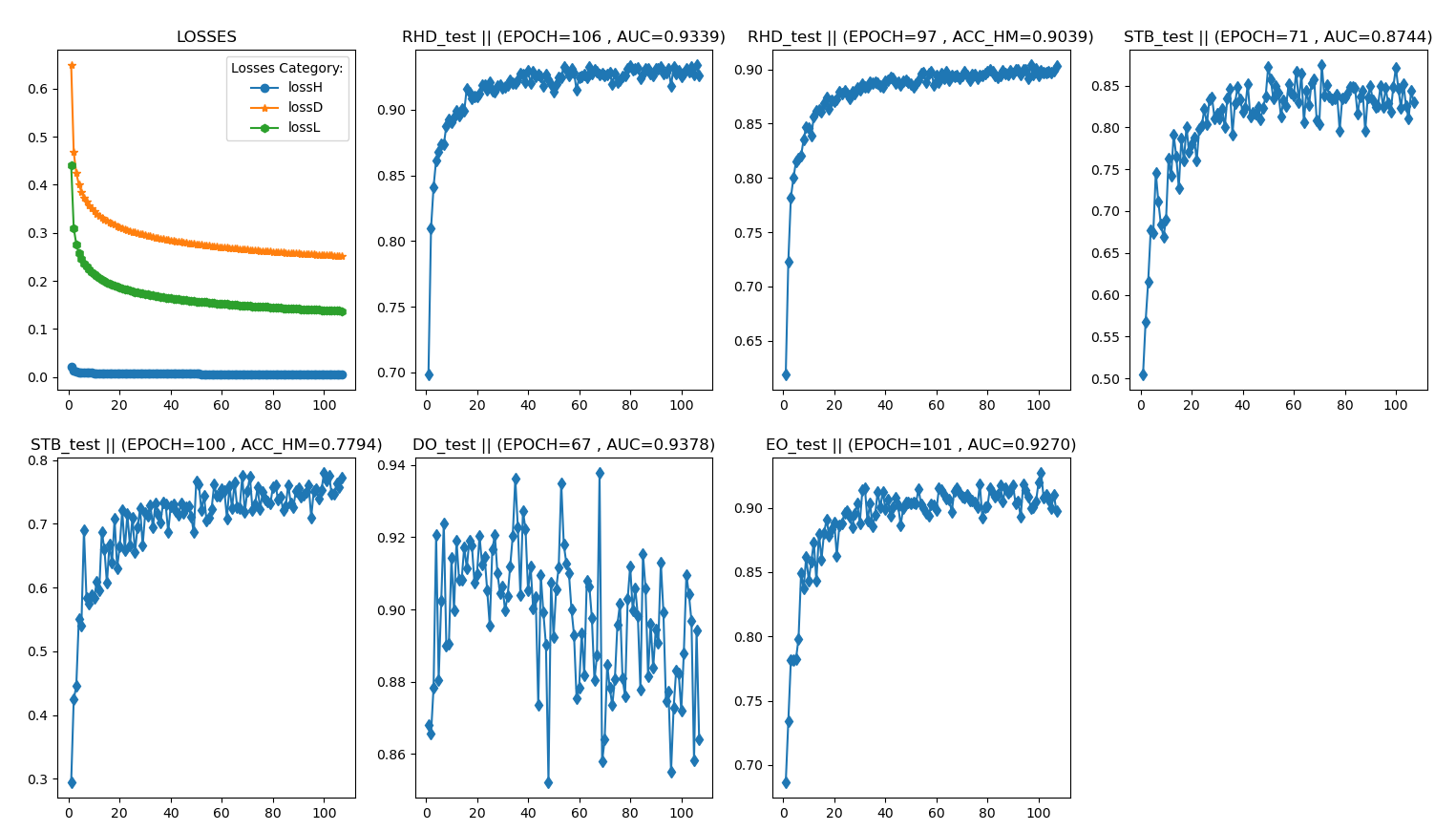

(AUC means 3D PCK, and ACC_HM means 2D PCK)

* means this project

| Dataset | DetNet(paper) | DetNet(*) | DetNet+IKNet(paper) | DetNet+LM+AIK(*) | DetNet+PSO+AIK(*) | DetNet+DL+AIK(*) |

|---|---|---|---|---|---|---|

| RHD | - | 0.9339 | 0.856 | 0.9301 | 0.9310 | 0.9272 |

| STB | 0.891 | 0.8744 | 0.898 | 0.8647 | 0.8671 | 0.8624 |

| DO | 0.923 | 0.9378 | 0.948 | 0.9392 | 0.9342 | 0.9400 |

| EO | 0.804 | 0.9270 | 0.811 | 0.9288 | 0.9277 | 0.9365 |

- Tuning the training parameters carefully, training for longer, using a more complex network, or adding Biomechanical Constraint Losses could further boost accuracy.

- As there is no official open-source code for the original paper, the above comparison is a little rough.
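For readers unfamiliar with the metric in the table, the sketch below shows one common way to compute a 3D PCK curve and its normalised AUC. The 20 to 50 mm threshold range is an assumption taken from common practice in hand pose evaluation, not read from this repository; the evaluation used here lives in `utils/eval/zimeval.py` and may differ in detail. The function names are hypothetical.

```python
import numpy as np


def pck_curve(pred, gt, thresholds):
    """Fraction of joints whose Euclidean error is <= each threshold."""
    diff = np.asarray(pred, dtype=float) - np.asarray(gt, dtype=float)
    err = np.linalg.norm(diff, axis=-1).ravel()
    thresholds = np.asarray(thresholds, dtype=float)
    return (err[None, :] <= thresholds[:, None]).mean(axis=1)


def pck_auc(pred, gt, t_min=20.0, t_max=50.0, steps=100):
    """Area under the PCK curve, normalised so that a perfect result gives 1.0."""
    thresholds = np.linspace(t_min, t_max, steps)
    pck = pck_curve(pred, gt, thresholds)
    # Trapezoidal rule, written out to stay independent of the NumPy version.
    area = np.sum((pck[1:] + pck[:-1]) * np.diff(thresholds)) / 2.0
    return area / (t_max - t_min)
```

`pred` and `gt` are arrays of shape `(..., 3)` in the same unit as the thresholds (millimetres under the assumption above), for example `(num_samples, 21, 3)` for 21 hand joints.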

This is the unofficial PyTorch reimplementation of the paper "Monocular Real-time Hand Shape and Motion Capture using Multi-modal Data" (CVPR 2020).

If you find the project helpful, please star this project and cite the paper:

@inproceedings{zhou2020monocular,
  title={Monocular Real-time Hand Shape and Motion Capture using Multi-modal Data},
  author={Zhou, Yuxiao and Habermann, Marc and Xu, Weipeng and Habibie, Ikhsanul and Theobalt, Christian and Xu, Feng},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  pages={0--0},
  year={2020}
}

- Code of the MANO PyTorch layer was adapted from manopth.

- Code for evaluating the hand PCK and AUC in `utils/eval/zimeval.py` was adapted from hand3d.

- Part of the code for data augmentation, dataset parsing, and utils was adapted from bihand and 3D-Hand-Pose-Estimation.

- Code of the network model was adapted from Minimal-Hand.

- @Mrsirovo for the starter code of `utils/LM.py`; @maitetsu updated it later.

- @maitetsu for the starter code of `utils/AIK.py` and the implementation of the PSO and deep-learning methods for shape estimation.