Taihong Xiao, Jiapeng Hong and Jinwen Ma

Please cite our paper if you find it useful for your research.

    @InProceedings{Xiao_2018_ECCV,
      author = {Xiao, Taihong and Hong, Jiapeng and Ma, Jinwen},
      title = {ELEGANT: Exchanging Latent Encodings with GAN for Transferring Multiple Face Attributes},
      booktitle = {Proceedings of the European Conference on Computer Vision (ECCV)},
      pages = {172--187},
      month = {September},
      year = {2018}
    }

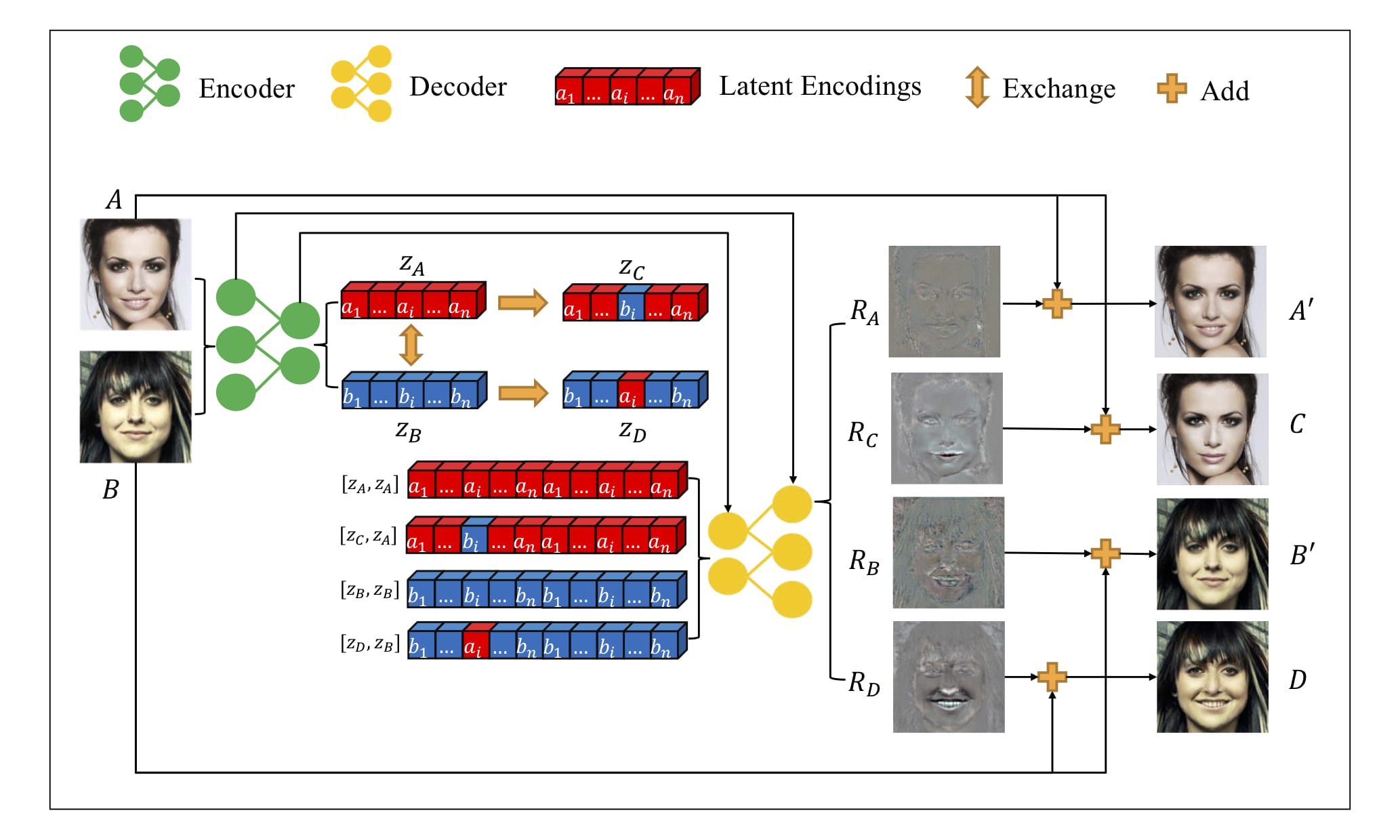

This repo is the PyTorch implementation of our paper. ELEGANT is a novel model for transferring multiple face attributes by exchanging latent encodings. The model framework is shown in the figure above.

- Download the CelebA dataset and unzip it into the `datasets` directory. There are several sources for the CelebA dataset. To check that the downloaded images have the expected size, run `identify datasets/celebA/data/000001.jpg` (from ImageMagick); the size should be 409 x 687 if you are using the same version of the dataset. Also make sure your repo has the following directory tree:

      ├── datasets
      │   └── celebA
      │       ├── data
      │       ├── images.list
      │       ├── list_attr_celeba.txt
      │       └── list_landmarks_celeba.txt

- Run `python preprocess.py`. It takes only a few minutes to preprocess all images. A new directory, `datasets/celebA/align_5p`, will be created.

- Run `python ELEGANT.py -m train -a Bangs Mustache -g 0` to train ELEGANT with respect to two attributes, `Bangs` and `Mustache`, simultaneously. You can experiment with other attributes as well; see `list_attr_celeba.txt` for all available attributes. If you train ELEGANT on more than one GPU, you can increase the batch size accordingly; it is set by the first number of `nchw` in `dataset.py`.

- Run `tensorboard --logdir=./train_log/log --port=6006` to monitor the training process. You can use tag matching to inspect one group of images. For example, if you type `0_04` in the image tag-matching box, a group of 10 images should be displayed together: two original images, four residual images and four generated images. In the notation `0_04`, `0` indicates the first attribute and `04` indicates the 4th group.
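
Before a long training run it may be worth checking the dataset layout and the attribute names passed to `-a`. The stdlib sketch below is a hypothetical helper, not part of the repo: `missing_entries` compares a directory against the tree above, and `unknown_attributes` reads the header of `list_attr_celeba.txt`, assuming the standard CelebA layout (image count on line 1, space-separated attribute names on line 2).

```python
from pathlib import Path

# Hypothetical pre-flight check; not part of the ELEGANT repo.
CELEBA = Path("datasets") / "celebA"
# Entries expected under datasets/celebA, per the directory tree in the README.
EXPECTED_DIRS = ["data"]
EXPECTED_FILES = ["images.list", "list_attr_celeba.txt", "list_landmarks_celeba.txt"]


def missing_entries(root):
    """List the expected directories and files that are absent under `root`."""
    root = Path(root)
    missing = [d for d in EXPECTED_DIRS if not (root / d).is_dir()]
    missing += [f for f in EXPECTED_FILES if not (root / f).is_file()]
    return missing


def unknown_attributes(attr_file, names):
    """Return the requested names that are not columns of list_attr_celeba.txt.

    Assumes the standard CelebA layout: line 1 holds the image count and
    line 2 holds the space-separated attribute names.
    """
    with open(attr_file) as f:
        f.readline()  # skip the image count
        header = f.readline().split()
    return [n for n in names if n not in header]
```

For example, `missing_entries(CELEBA)` and `unknown_attributes(CELEBA / "list_attr_celeba.txt", ["Bangs", "Mustache"])` should both return empty lists on a correctly prepared checkout.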

We provide four testing modes. The parameters for testing are explained below.

- `-a`: The names of all attributes.
- `-r`: The checkpoint (training step) to restore.
- `-g`: The GPU id(s) for testing.
  - Don't add this parameter to your shell command if you don't want to use a GPU for testing.
  - No more than one GPU should be specified during testing, because one image cannot be split across multiple GPUs.
- `--swap`: Swap an attribute of two images.
- `--linear`: Linear interpolation by adding or removing one certain attribute.
- `--matrix`: Matrix interpolation with respect to one or two attributes.
- `--swap_list`: The attribute id(s) for testing.
  - For example, `--swap_list 0` indicates the first attribute.
  - It receives two integers only in the interpolation with respect to two attributes.
  - In other cases, only one integer is required.
- `--input`: The path of the input image that you want to transfer.
- `--target`: The path(s) of the target image(s) used for reference.
  - Only one target image is needed in the `--swap` and `--linear` modes.
  - Three target images are needed in the `--matrix` mode with respect to one attribute.
  - Two target images are required in the `--matrix` mode with respect to two attributes.
- `-s`: The output size for interpolation.
  - One integer is needed in the `--linear` mode.
  - Two integers are required in the `--matrix` mode.
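
The counting rules above are easy to get wrong on the command line. The sketch below restates them as a standalone `argparse` parser plus a checker; it only mirrors the flags documented here and is not the parser in `ELEGANT.py`. `build_parser`, `check_test_args` and the `dest` names are hypothetical, and treating `--swap_list` ids as indices into the `-a` list is our reading of the examples.

```python
import argparse

# Flag spelling used in error messages, keyed by argparse dest.
FLAGS = {"swap_list": "--swap_list", "target": "--target", "size": "-s"}


def build_parser():
    """Hypothetical parser mirroring the documented test flags (not ELEGANT.py's own)."""
    p = argparse.ArgumentParser()
    p.add_argument("-m", dest="mode", default="test")
    p.add_argument("-a", dest="attributes", nargs="+", required=True)
    p.add_argument("-r", dest="restore")
    p.add_argument("-g", dest="gpu", nargs="+", default=[])
    modes = p.add_mutually_exclusive_group(required=True)
    for flag in ("--swap", "--linear", "--matrix"):
        modes.add_argument(flag, action="store_true")
    p.add_argument("--swap_list", type=int, nargs="+", default=[])
    p.add_argument("--input")
    p.add_argument("--target", nargs="+", default=[])
    p.add_argument("-s", dest="size", type=int, nargs="+", default=[])
    return p


def check_test_args(args):
    """Raise ValueError when value counts break the documented rules."""
    if len(args.gpu) > 1:
        raise ValueError("-g: specify at most one GPU for testing")
    if any(i < 0 or i >= len(args.attributes) for i in args.swap_list):
        raise ValueError("--swap_list: ids must index the -a list")
    if args.matrix:
        n = len(args.swap_list)
        if n not in (1, 2):
            raise ValueError("--swap_list: --matrix takes one or two ids")
        # One attribute needs three targets; two attributes need two.
        expected = {"swap_list": n, "target": 3 if n == 1 else 2, "size": 2}
    else:
        # --swap and --linear both take one attribute id and one target.
        expected = {"swap_list": 1, "target": 1}
        if args.linear:
            expected["size"] = 1
    for dest, count in expected.items():
        got = len(getattr(args, dest))
        if got != count:
            raise ValueError(f"{FLAGS[dest]}: expected {count} value(s), got {got}")
```

Checking counts after parsing, rather than with fixed `nargs`, keeps one parser for all four modes, since the required counts depend on which mode flag and how many attribute ids are given.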

We can swap the `Mustache` attribute of two images. Here `--swap_list 1` indicates that the second attribute should be swapped, and `-r 34000` means restoring the model checkpoint saved at step 34000. You can choose the best model by inspecting the quality of the generated images in TensorBoard or in the directory `train_log/img/`.

    python ELEGANT.py -m test -a Bangs Mustache -r 34000 --swap --swap_list 1 --input ./images/goodfellow_aligned.png --target ./images/bengio_aligned.png
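
The mechanism behind `--swap` is exchanging latent encodings, as the paper's title says. The NumPy sketch below illustrates that idea on a single `(C, H, W)` encoding array; it is not the repo's code. It assumes, for illustration only, that each attribute owns an equal slice of the channel axis and the remaining channels carry attribute-irrelevant content. `split_encoding` and `swap_attribute` are hypothetical names, and in the real model the swapped encodings would still have to be decoded into images.

```python
import numpy as np


def split_encoding(z, n_attrs):
    """Split a (C, H, W) encoding into n_attrs attribute blocks plus the rest.

    Illustrative assumption: every attribute owns an equal channel slice and
    the leftover channels hold attribute-irrelevant content.
    """
    per_attr = z.shape[0] // (n_attrs + 1)
    parts = [z[i * per_attr:(i + 1) * per_attr] for i in range(n_attrs)]
    rest = z[n_attrs * per_attr:]
    return parts, rest


def swap_attribute(z_a, z_b, attr, n_attrs):
    """Exchange the block for attribute `attr` between two encodings."""
    parts_a, rest_a = split_encoding(z_a, n_attrs)
    parts_b, rest_b = split_encoding(z_b, n_attrs)
    parts_a[attr], parts_b[attr] = parts_b[attr], parts_a[attr]
    # Reassemble; only the chosen attribute's channels have changed hands.
    new_a = np.concatenate(parts_a + [rest_a], axis=0)
    new_b = np.concatenate(parts_b + [rest_b], axis=0)
    return new_a, new_b
```

With `-a Bangs Mustache`, the call matching `--swap_list 1` in this sketch would be `swap_attribute(z_a, z_b, attr=1, n_attrs=2)`.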

To see the linear interpolation results of adding a mustache to Bengio, run the following command. Here `-s 4` indicates the number of intermediate images.

    python ELEGANT.py -m test -a Bangs Mustache -r 34000 --linear --swap_list 1 --input ./images/bengio_aligned.png --target ./images/goodfellow_aligned.png -s 4
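
One way to picture `--linear` is to blend only the chosen attribute's part of the input encoding toward the target's part. The sketch below makes two assumptions that are ours, not the repo's: the attribute owns an equal channel slice of a `(C, H, W)` encoding, and the `steps` weights (the value of `-s`) are evenly spaced strictly between 0 and 1, so the original and the fully transferred encodings are excluded. `linear_path` is a hypothetical name.

```python
import numpy as np


def linear_path(z_input, z_target, attr, n_attrs, steps):
    """Return `steps` encodings that move one attribute block toward the target.

    Illustrative assumptions: equal channel slices per attribute, and weights
    evenly spaced strictly between 0 and 1.
    """
    per_attr = z_input.shape[0] // (n_attrs + 1)
    sl = slice(attr * per_attr, (attr + 1) * per_attr)
    path = []
    for w in np.linspace(0.0, 1.0, steps + 2)[1:-1]:
        z = z_input.copy()
        # Blend only the chosen attribute's channels; everything else stays put.
        z[sl] = (1 - w) * z_input[sl] + w * z_target[sl]
        path.append(z)
    return path
```

With `steps=4` the weights are 0.2, 0.4, 0.6 and 0.8; removing an attribute instead of adding it corresponds to blending toward a target that lacks it.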

We can also add different kinds of bangs to a single person. Here, `--swap_list 0` indicates that we are dealing with the first attribute, and three target images are provided for reference.

    python ELEGANT.py -m test -a Bangs Mustache -r 34000 --matrix --swap_list 0 --input ./images/ng_aligned.png --target ./images/bengio_aligned.png ./images/goodfellow_aligned.png ./images/jian_sun_aligned.png -s 4 4
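
With three targets, one natural reading of `--matrix` for a single attribute is bilinear interpolation of that attribute's part of the encoding, with the input and the three targets at the four corners of the grid. The sketch below assumes this layout (input top-left, targets top-right, bottom-left and bottom-right), equal channel slices per attribute, and a `-s` grid that includes the corners; none of this is confirmed by the repo, and `bilinear_grid` is a hypothetical name.

```python
import numpy as np


def bilinear_grid(z_input, targets, attr, n_attrs, rows, cols):
    """Fill a rows x cols grid by bilinearly blending one attribute block.

    Hypothetical corner layout: input at top-left, targets at top-right,
    bottom-left and bottom-right. Other channels are copied from the input.
    """
    t1, t2, t3 = targets
    per_attr = z_input.shape[0] // (n_attrs + 1)
    sl = slice(attr * per_attr, (attr + 1) * per_attr)
    grid = []
    for v in np.linspace(0.0, 1.0, rows):        # vertical weight
        row = []
        for u in np.linspace(0.0, 1.0, cols):    # horizontal weight
            z = z_input.copy()
            z[sl] = ((1 - u) * (1 - v) * z_input[sl] + u * (1 - v) * t1[sl]
                     + (1 - u) * v * t2[sl] + u * v * t3[sl])
            row.append(z)
        grid.append(row)
    return grid
```

Bilinear weights sum to one at every cell, so each grid entry is a convex mix of the four corner encodings for that attribute.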

We can transfer two attributes simultaneously by running the following command.

    python ELEGANT.py -m test -a Bangs Mustache -r 34000 --matrix --swap_list 0 1 --input ./images/lecun_aligned.png --target ./images/bengio_aligned.png ./images/goodfellow_aligned.png -s 4 4

The original image gradually acquires the first attribute, `Bangs`, along the vertical direction and the second attribute, `Mustache`, along the horizontal direction.
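
The two-attribute grid can be sketched the same way: the first attribute's part moves toward the first target down the rows, and the second attribute's part moves toward the second target across the columns, matching the vertical and horizontal directions described above. Which target supplies which attribute, the equal channel slices, and the inclusion of the grid corners are assumptions made for illustration; `two_attribute_grid` is a hypothetical name.

```python
import numpy as np


def two_attribute_grid(z_input, z_t1, z_t2, attrs, n_attrs, rows, cols):
    """Blend attrs[0] down the rows and attrs[1] across the columns.

    Illustrative assumptions: equal channel slices per attribute, target 1
    supplies the first attribute, target 2 the second, corners included.
    """
    per_attr = z_input.shape[0] // (n_attrs + 1)
    s1 = slice(attrs[0] * per_attr, (attrs[0] + 1) * per_attr)
    s2 = slice(attrs[1] * per_attr, (attrs[1] + 1) * per_attr)
    grid = []
    for v in np.linspace(0.0, 1.0, rows):        # vertical: first attribute
        row = []
        for u in np.linspace(0.0, 1.0, cols):    # horizontal: second attribute
            z = z_input.copy()
            z[s1] = (1 - v) * z_input[s1] + v * z_t1[s1]
            z[s2] = (1 - u) * z_input[s2] + u * z_t2[s2]
            row.append(z)
        grid.append(row)
    return grid
```

Because the two attributes occupy disjoint channel slices in this sketch, each axis of the grid changes exactly one attribute, which is what makes the row and column directions independent.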