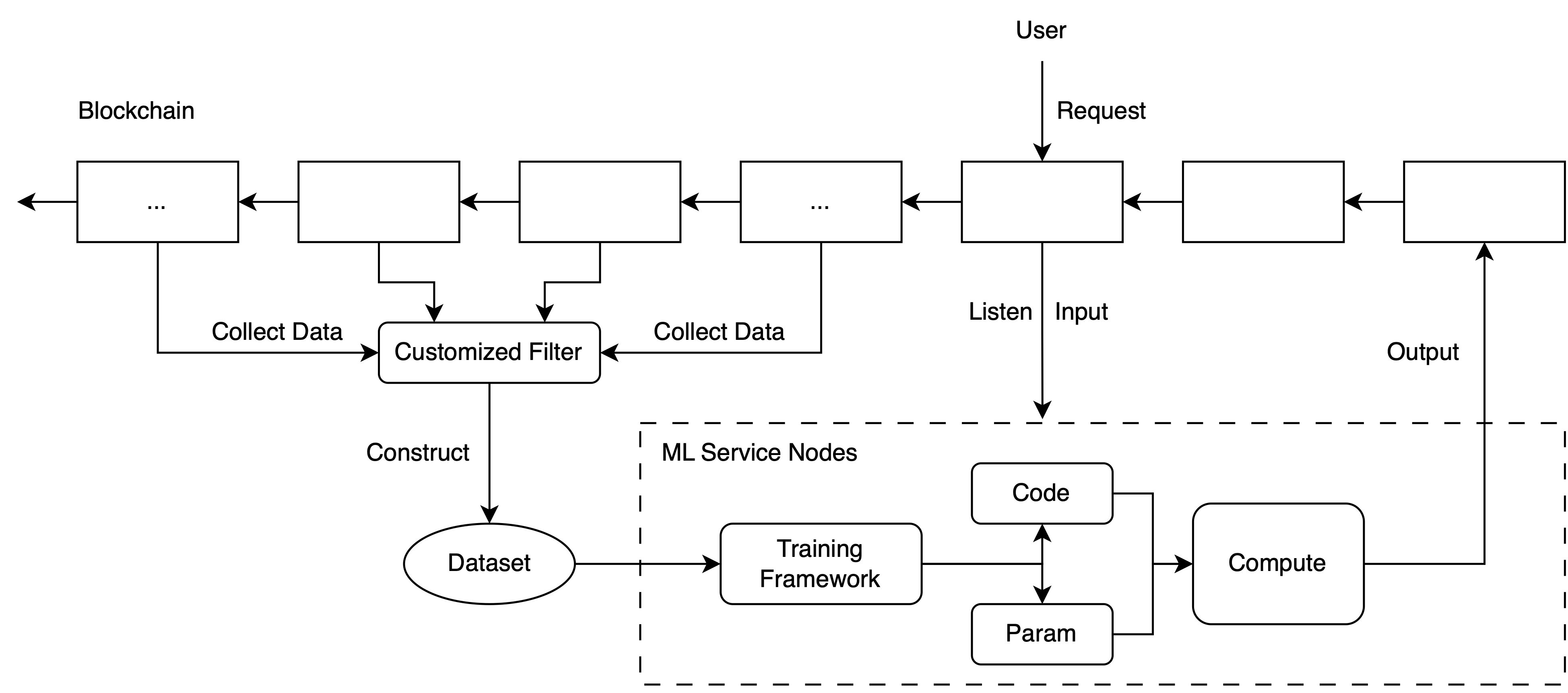

A simple workflow template for developing ML models with on-chain data and for interacting with on-chain contracts using those models (without any proof or verification).

Once ML computation can be brought on chain, many use cases become possible; feel free to brainstorm your own.

Notice: This template only serves as an example and does not contain any ML components; we are waiting for you to fill them in. Of course, you can make any modifications you like or even implement your own workflow.

Bringing machine learning algorithms on chain is difficult because many neural-network-based algorithms require a lot of computation, which is very expensive on the EVM. Users who want to deploy neural network code and model parameters on Ethereum have to pay heavily for smart contract storage and computation. Therefore, this template deploys the neural network code to off-chain devices: a local computer performs the vector computations and sends the results back to the chain.
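To put rough numbers on the storage cost alone, the sketch below estimates the gas needed just to write model weights into contract storage. The figures are assumptions rather than part of this template: each weight occupies a fresh 32-byte slot, a zero-to-nonzero `SSTORE` costs 20,000 gas (EIP-2200), and a block holds about 30 million gas. It ignores transaction overhead, the extra cold-access surcharge from EIP-2929, and all computation, so treat the result as a lower bound.

```python
# Back-of-envelope estimate of the storage gas needed to put model weights on chain.
# Assumptions (not from this template): each weight gets a fresh 32-byte slot,
# a zero-to-nonzero SSTORE costs 20,000 gas (EIP-2200; EIP-2929's cold-access
# surcharge is ignored), and a block holds about 30 million gas.
SSTORE_SET_GAS = 20_000
BLOCK_GAS_LIMIT = 30_000_000


def storage_gas(num_params: int, params_per_slot: int = 1) -> int:
    """Gas to write num_params weights, packing params_per_slot weights per slot."""
    slots = -(-num_params // params_per_slot)  # ceiling division
    return slots * SSTORE_SET_GAS


def blocks_needed(gas: int, block_gas_limit: int = BLOCK_GAS_LIMIT) -> int:
    """Minimum number of completely full blocks that the given gas would occupy."""
    return -(-gas // block_gas_limit)  # ceiling division


if __name__ == "__main__":
    n = 1_000_000  # a small model by deep-learning standards
    gas = storage_gas(n)
    print(f"{n:,} weights, 1 per slot: {gas:,} gas, >= {blocks_needed(gas):,} blocks")
    # Eight 32-bit weights fit in one 256-bit slot; this helps, but not nearly enough.
    packed = storage_gas(n, params_per_slot=8)
    print(f"{n:,} weights, 8 per slot: {packed:,} gas, >= {blocks_needed(packed):,} blocks")
```

Under these assumptions, a million unpacked weights need 20 billion gas (at least 667 full blocks), and packing eight weights per slot still needs 2.5 billion gas (at least 84 full blocks), before a single inference has been run.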

First, to build a machine learning model for blockchain data, you need to construct a dataset. The blockchain provides a large amount of data, including, but not limited to, transactions between users, interactions between users and smart contracts, and interactions between smart contracts. Much of this information can be obtained from the events that smart contracts emit, and collecting them takes only a few lines of code with existing libraries. (Helpful code for fetching historical on-chain data can be found in this notebook.)
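Under the hood, fetching historical events usually comes down to the standard `eth_getLogs` JSON-RPC method. Because many RPC providers limit the block range or result size of a single call, a common pattern is to split the range into windows. The sketch below only builds the request payloads; the function name `get_logs_payloads` and the 10,000-block default window are illustrative assumptions, not part of this template or of any particular provider's documented limits.

```python
import json
from typing import Iterator, List, Optional


def get_logs_payloads(
    address: str,
    from_block: int,
    to_block: int,
    topics: Optional[List[Optional[str]]] = None,
    chunk_size: int = 10_000,
) -> Iterator[dict]:
    """Yield eth_getLogs JSON-RPC requests that together cover [from_block, to_block].

    The 10,000-block default window is an assumption; check your provider's limits.
    """
    if from_block > to_block or chunk_size < 1:
        raise ValueError("need from_block <= to_block and chunk_size >= 1")
    request_id = 1
    start = from_block
    while start <= to_block:
        end = min(start + chunk_size - 1, to_block)  # inclusive window end
        params = {
            "address": address,
            "fromBlock": hex(start),  # JSON-RPC block numbers are hex quantities
            "toBlock": hex(end),
        }
        if topics is not None:
            params["topics"] = topics  # topics[0] filters on the event signature hash
        yield {"jsonrpc": "2.0", "id": request_id, "method": "eth_getLogs", "params": [params]}
        request_id += 1
        start = end + 1


if __name__ == "__main__":
    # Placeholder (all-zero) address; substitute the contract whose events you want.
    contract = "0x" + "00" * 20
    for payload in get_logs_payloads(contract, 5_000_000, 5_025_000):
        print(json.dumps(payload))
```

Each payload can be POSTed to an RPC endpoint, or a library such as web3.py can issue equivalent calls for you; either way, the returned logs still need to be decoded against the contract's ABI before they become dataset rows.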

When you have a dataset, you can train your favorite model to analyze the data.

After you have a model, you can fulfil other users' requests for model computation (or automate the process; there is plenty of room for customization).

As a user, you can use code similar to that in this file to send your request to the coordinator contract. A request includes the input data, the model to run (`modelId`), the callback contract address, and the callback function in that contract.

As a node operator, you can listen for other users' requests and fulfil them with the models they specify: the operator runs the specified model on the given input and sends the output back to the coordinator.
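The operator-side decision logic can be sketched in plain Python. This is a hypothetical illustration: `RequestEvent`, `NodeOperator`, the `modelId`-to-function registry, and the `is_fulfilled_on_chain` callback are names invented here, and the real event fields and types are defined by the contracts in src/contracts. It shows the two checks an operator makes before doing any work: whether the request is already fulfilled, and whether the operator serves the requested model.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Sequence, Set, Tuple

# Hypothetical model type: maps an input vector to an output vector.
Model = Callable[[Sequence[float]], List[float]]


@dataclass(frozen=True)
class RequestEvent:
    """Fields of the coordinator's request event as listed in this README.

    The Python types are assumptions; the real ABI is defined in src/contracts.
    """
    request_id: int
    model_id: int
    input_data: Sequence[float]
    callback_contract: str
    callback_function: str


class NodeOperator:
    def __init__(self, models: Dict[int, Model]) -> None:
        self.models = models  # modelId -> model this operator is willing to run
        self.answered: Set[int] = set()  # requests this operator already answered

    def handle(
        self, event: RequestEvent, is_fulfilled_on_chain: Callable[[int], bool]
    ) -> Optional[Tuple[int, List[float]]]:
        """Return (request_id, output) to send to the coordinator, or None to skip."""
        if event.request_id in self.answered or is_fulfilled_on_chain(event.request_id):
            return None  # already fulfilled, by us or by another operator
        model = self.models.get(event.model_id)
        if model is None:
            return None  # this operator does not serve the requested model
        output = model(event.input_data)
        self.answered.add(event.request_id)
        return event.request_id, output


if __name__ == "__main__":
    operator = NodeOperator({0: lambda xs: [sum(xs)]})  # toy "model" with modelId 0
    event = RequestEvent(1, 0, [1.0, 2.0, 3.0], "0x" + "ab" * 20, "setOutput")
    print(operator.handle(event, is_fulfilled_on_chain=lambda rid: False))  # (1, [6.0])
    print(operator.handle(event, is_fulfilled_on_chain=lambda rid: False))  # None
```

Passing the on-chain check in as a function keeps the logic testable without a node; in a real operator it might query the coordinator, assuming the coordinator exposes each request's fulfilment status.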

In this example, there are two contracts: Coordinator and OutputCollector. Both have been deployed on Sepolia, and you can use the link above to interact with them. The source code for the contracts is in src/contracts.

The Coordinator contract is responsible for managing requests from users. The workflow of the example coordinator is as follows:

- A user calls `makeRequest` to upload a request to the coordinator.

- The coordinator then emits an event containing the information in that request (`requestId`, `modelId`, `input`, `callbackContract`, and `callbackFunction`).

- Node operators continuously listen for this event from the coordinator.

- Once an operator receives the request, it checks whether the request has already been fulfilled. If not, the operator runs the model on that input and generates an output.

- The operator then sends the output back to the coordinator contract.

- The coordinator calls the `callbackFunction` in the `callbackContract` that the user specified in the request.

- Finally, that request is fulfilled.

The example OutputCollector serves as a simple callback contract in this template. Its callback function is `setOutput`. After the coordinator sets the output in this contract, you can use Etherscan to check whether the model's output has been stored there.
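To see the whole request lifecycle in one place, here is an in-memory Python model of the Coordinator and OutputCollector described above. It is a simulation under stated assumptions, not a port of the Solidity source: `makeRequest` and `setOutput` take their names from this README, while `fulfill`, the argument order, and the `setOutput(requestId, output)` signature are hypothetical. The callback contract is a Python object rather than an address, and there is no gas, access control, or proof checking.

```python
from typing import Any, Dict, List


class OutputCollector:
    """Stand-in for the example callback contract; setOutput stores the result."""

    def __init__(self) -> None:
        self.outputs: Dict[int, Any] = {}

    def setOutput(self, request_id: int, output: Any) -> None:  # assumed signature
        self.outputs[request_id] = output


class Coordinator:
    """In-memory model of the request workflow: no gas, access control, or proofs."""

    def __init__(self) -> None:
        self.next_id = 0
        self.requests: Dict[int, Dict[str, Any]] = {}
        self.fulfilled: Dict[int, bool] = {}
        self.events: List[Dict[str, Any]] = []  # stands in for emitted event logs

    def makeRequest(self, model_id: int, input_data: Any,
                    callback_contract: Any, callback_function: str) -> int:
        request_id = self.next_id
        self.next_id += 1
        request = {"requestId": request_id, "modelId": model_id, "input": input_data,
                   "callbackContract": callback_contract,
                   "callbackFunction": callback_function}
        self.requests[request_id] = request
        self.fulfilled[request_id] = False
        self.events.append(dict(request))  # "emit" the request event
        return request_id

    def fulfill(self, request_id: int, output: Any) -> None:  # hypothetical name
        if request_id not in self.requests:
            raise KeyError(f"unknown request {request_id}")
        if self.fulfilled[request_id]:
            raise RuntimeError(f"request {request_id} already fulfilled")
        self.fulfilled[request_id] = True  # update state before the external call
        request = self.requests[request_id]
        callback = getattr(request["callbackContract"], request["callbackFunction"])
        callback(request_id, output)


if __name__ == "__main__":
    coordinator, collector = Coordinator(), OutputCollector()
    rid = coordinator.makeRequest(0, [1.0, 2.0], collector, "setOutput")  # user
    event = coordinator.events[-1]                   # operator observes the event
    output = [sum(event["input"])]                   # operator runs a toy model
    coordinator.fulfill(event["requestId"], output)  # operator submits the output
    print(collector.outputs[rid])                    # [3.0]
```

Marking the request as fulfilled before invoking the callback mirrors the checks-effects-interactions pattern, so a callback that re-enters `fulfill` for the same request is rejected.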

Notice: The functions defined here may have many security problems, but you are free to experiment with the code.

The node operator may be malicious.

How can users verify that the node operator actually trained its models on on-chain data? (This is hard because the interpretability of deep neural networks is itself a difficult problem.)

How can users verify that the node operator actually used the specified input to perform the inference?

How can users verify that the node operator actually used the claimed model parameters or code to perform the inference?

In this example, users know nothing about what the node operator is doing, because the operator does not upload any proof of its computation or storage.

There is related work on using zero-knowledge (ZK) proofs or fraud proofs to prove certain ML computations, but much of it is still under development. We hope to make this approach more secure and computationally feasible in the future.
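The simplest idea behind fraud proofs is that anyone can re-run a claimed computation and compare results. The sketch below illustrates only that idea, under strong assumptions: the model weights are public, the model is deterministic, and the operator publishes a hash commitment to (weights, input, output) alongside its result. The helper names are hypothetical, SHA-256 stands in for the keccak256 hashing an on-chain version would more likely use, and interactive fraud-proof protocols avoid full re-execution by narrowing a dispute down to a single step. Floating-point results can also differ across hardware, which is one reason deterministic re-execution of ML models is hard in practice.

```python
import hashlib
import json
from typing import Callable, List, Sequence

# Hypothetical deterministic model: (weights, input) -> output.
Model = Callable[[Sequence[float], Sequence[float]], List[float]]


def commitment(weights: Sequence[float], input_data: Sequence[float],
               output: Sequence[float]) -> str:
    """SHA-256 over canonical JSON of (weights, input, output)."""
    blob = json.dumps({"w": list(weights), "x": list(input_data), "y": list(output)},
                      sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(blob.encode("utf-8")).hexdigest()


def is_fraudulent(model: Model, weights: Sequence[float], input_data: Sequence[float],
                  claimed_output: Sequence[float], published: str) -> bool:
    """Challenger's check: the commitment must match and re-execution must agree."""
    if commitment(weights, input_data, claimed_output) != published:
        return True  # disclosed data do not match what the operator committed to
    return model(weights, input_data) != list(claimed_output)


def linear(w: Sequence[float], x: Sequence[float]) -> List[float]:
    """Toy model: a single dot product."""
    return [sum(wi * xi for wi, xi in zip(w, x))]


if __name__ == "__main__":
    w, x = [0.5, 2.0], [2.0, 3.0]
    honest = linear(w, x)  # [7.0]
    print(is_fraudulent(linear, w, x, honest, commitment(w, x, honest)))  # False
    forged = [8.0]
    print(is_fraudulent(linear, w, x, forged, commitment(w, x, forged)))  # True
```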