This project tackles the supervised problem of body level classification: given numerical and categorical features describing an individual's health, such as their height, weight, family history, age, and twelve others, the objective is to predict the individual's body level (one of four possible levels).

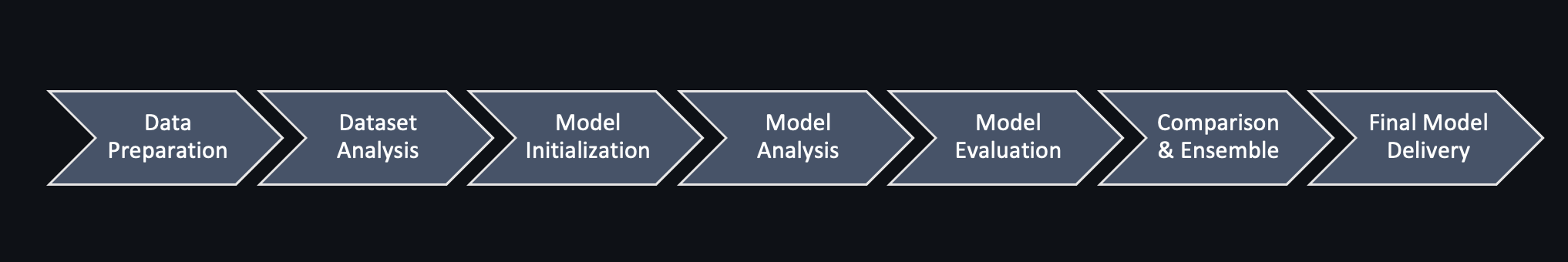

Our solution to this problem uses the following pipeline:

The following is the implied folder structure:

```
.
├── DataFiles
│   ├── dataset.csv
│   ├── train.csv
│   └── val.csv
├── DataPreparation
│   ├── CovarianceAnalysis.py
│   ├── DataPreparation.ipynb
│   └── DataPreparation.py
├── HandleClassImbalance
│   ├── HandleClassImbalance.ipynb
│   └── HandleClassImbalance.py
├── ModelBaselines
│   └── Baseline.ipynb
├── Model Pipelines
│   ├── AdaBoost
│   │   └── Adaboost.ipynb
│   ├── Bagging
│   │   ├── Analysis.ipynb
│   │   └── SVMBagging.ipynb
│   ├── LogisticRegression
│   │   ├── Analysis.ipynb
│   │   └── LogisticRegression.ipynb
│   ├── Perceptron
│   │   ├── Analysis.ipynb
│   │   └── Perceptron.ipynb
│   ├── RandomForest
│   │   ├── Analysis.ipynb
│   │   └── RandomForest.ipynb
│   ├── SVM
│   │   ├── Analysis.ipynb
│   │   └── SVM.ipynb
│   ├── StackingEnsemble
│   │   └── StackingEnsemble.ipynb
│   ├── VotingEnsemble
│   │   └── VotingEnsemble.ipynb
│   ├── ModelAnalysis.py
│   └── ModelVisualization.py
├── ModelScoring
│   └── Pipeline.py
├── References
│   └── ML Project Document.pdf
├── Saved
├── Quests
├── README.md
└── utils.py
```

Install the dependencies with `pip install -r requirements.txt`.

To run any stage of the pipeline, go to that stage's folder; each folder contains a demonstration notebook.

We started by designing and running a dataset analysis pipeline (i.e., studying the target function), which led us to initiate SVM, LR, GNB, RF, Perceptron and AdaBoost models. We then designed a model analysis cycle and applied it to each of these models to study its performance and tune its hyperparameters, feature set, and data preparation choices.

Our best weighted F1 (WF1) scores under 10-times repeated 10-fold cross-validation are as follows (the scores are higher under other cross-validation approaches):

| SVM | Logistic Regression | Random Forest |
|---|---|---|
| 98.65% | 98.38% | 97.63% |

The following visually depicts the SVM model over the most important features.

In the end, however, we did not choose any of these single models; instead, we formed ensembles of them via voting, bagging, and stacking, which yielded the following:

| Bagging SVM | Voting | Stacked Generalization |
|---|---|---|
| 98.37% | 96.5% | 99.12% |

Accordingly, our final model is the stacking ensemble. It scores 99% on the competition's test set; for why it did not rank high on the leaderboard, see the autopsy report.

We illustrate the whole pipeline, including the analysis stages, in the rest of this README. For an extensive overview of the extracted insights and the analysis results for the remaining models, please check the report or the demonstration notebooks herein.

Data preparation involves reading the data and putting it in a suitable form. Beyond reading the data, this stage offers the following options:

- To read specific splits of the data (by default train)

- To read only columns of numerical or categorical types (or both)

- To apply label, one-hot, or frequency encoding to categorical features

- To standardize the data

This module was used to ingest the data for all subsequent models and analysis.
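The options above can be sketched as a single function. This is a minimal sketch, not the project's `DataPreparation.py`: `prepare_data` is a hypothetical name, the target column name `Body_Level` is an assumption, and reading a specific split (e.g. `pd.read_csv("DataFiles/train.csv")`) is left to the caller. The experiment log further below suggests the real reader exposes `split`, `kind`, `standardize`, `selected` and `encode` options, but its exact signature may differ.

```python
import pandas as pd


def prepare_data(df, target="Body_Level", kind="all", encode="onehot", standardize=True):
    """Hypothetical sketch of the preparation options listed above.

    kind: "numerical", "categorical" or "all" (which column types to keep)
    encode: "label", "onehot" or "frequency" (categorical encoding)
    standardize: z-score the numerical columns
    """
    X = df.drop(columns=[target]).copy()
    y = df[target]
    num_cols = X.select_dtypes(include="number").columns.tolist()
    cat_cols = [c for c in X.columns if c not in num_cols]
    if kind == "numerical":
        X, cat_cols = X[num_cols].copy(), []
    elif kind == "categorical":
        X, num_cols = X[cat_cols].copy(), []
    if standardize and num_cols:
        # z-score each numerical column (population standard deviation)
        X[num_cols] = (X[num_cols] - X[num_cols].mean()) / X[num_cols].std(ddof=0)
    if encode == "label":
        # map each category to an integer code (categories sorted)
        for c in cat_cols:
            X[c] = X[c].astype("category").cat.codes
    elif encode == "frequency":
        # replace each category by its relative frequency in the column
        for c in cat_cols:
            X[c] = X[c].map(X[c].value_counts(normalize=True))
    elif encode == "onehot" and cat_cols:
        X = pd.get_dummies(X, columns=cat_cols)
    return X, y
```

Standardization here uses statistics of the frame it is given; in practice the training split's mean and standard deviation would be reused for the validation split.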

To guide model initiation by studying the population and the target function, we performed the following analyses:

| Number of Samples | Number of Features | Number of Classes |
|---|---|---|
| 1180 | 16 | 4 |

| Variable | Gender | H_Cal_Consump | Smoking | Fam_Hist | H_Cal_Burn | Alcohol_Consump | Food_Between_Meals | Transport | Age | Height | Weight | Veg_Consump | Water_Consump | Meal_Count | Phys_Act | Time_E_Dev |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| #Uniques | 2 | 2 | 2 | 2 | 2 | 3 | 4 | 5 | Numerical | Numerical | Numerical | Numerical | Numerical | Numerical | Numerical | Numerical |

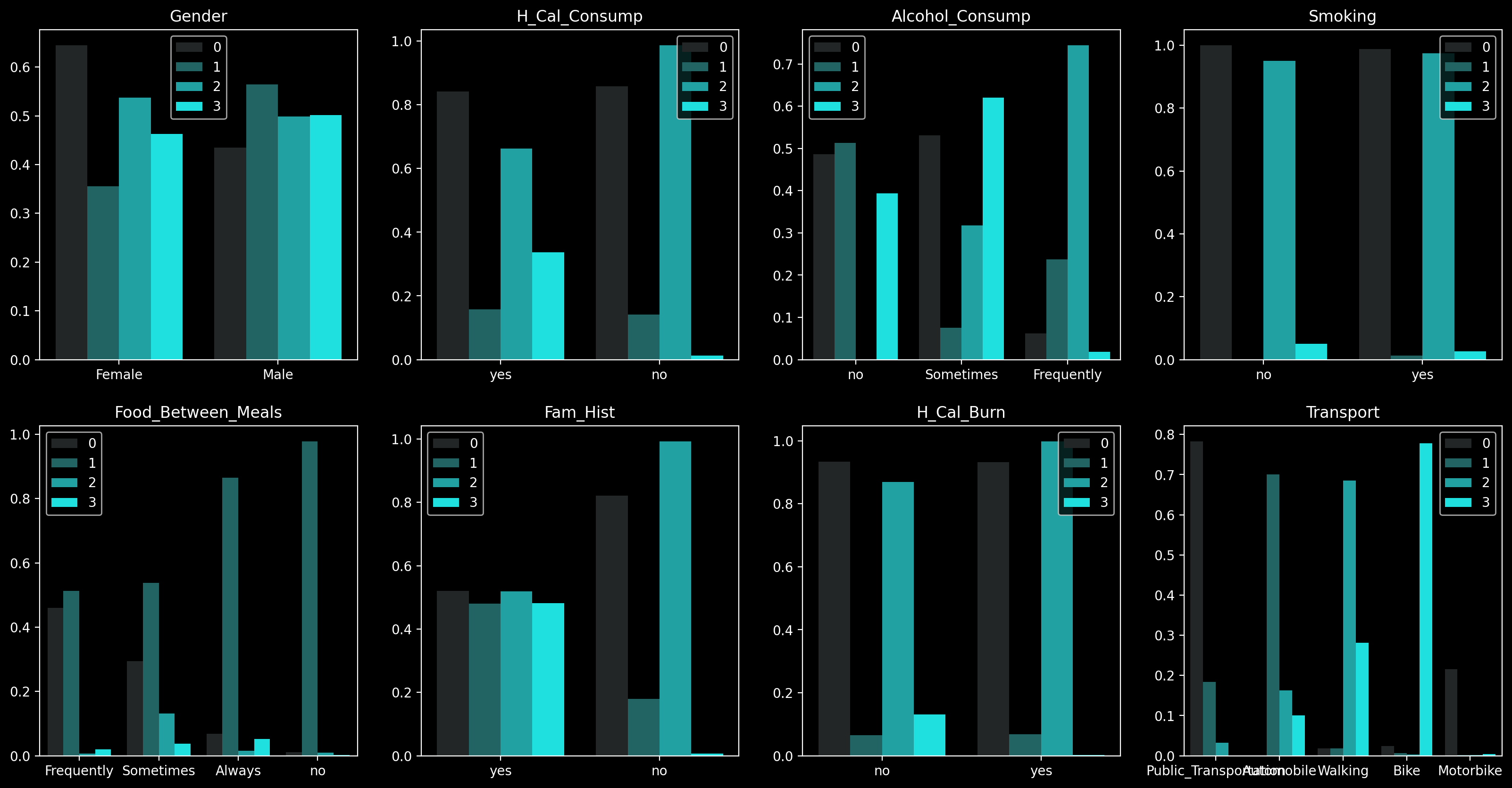

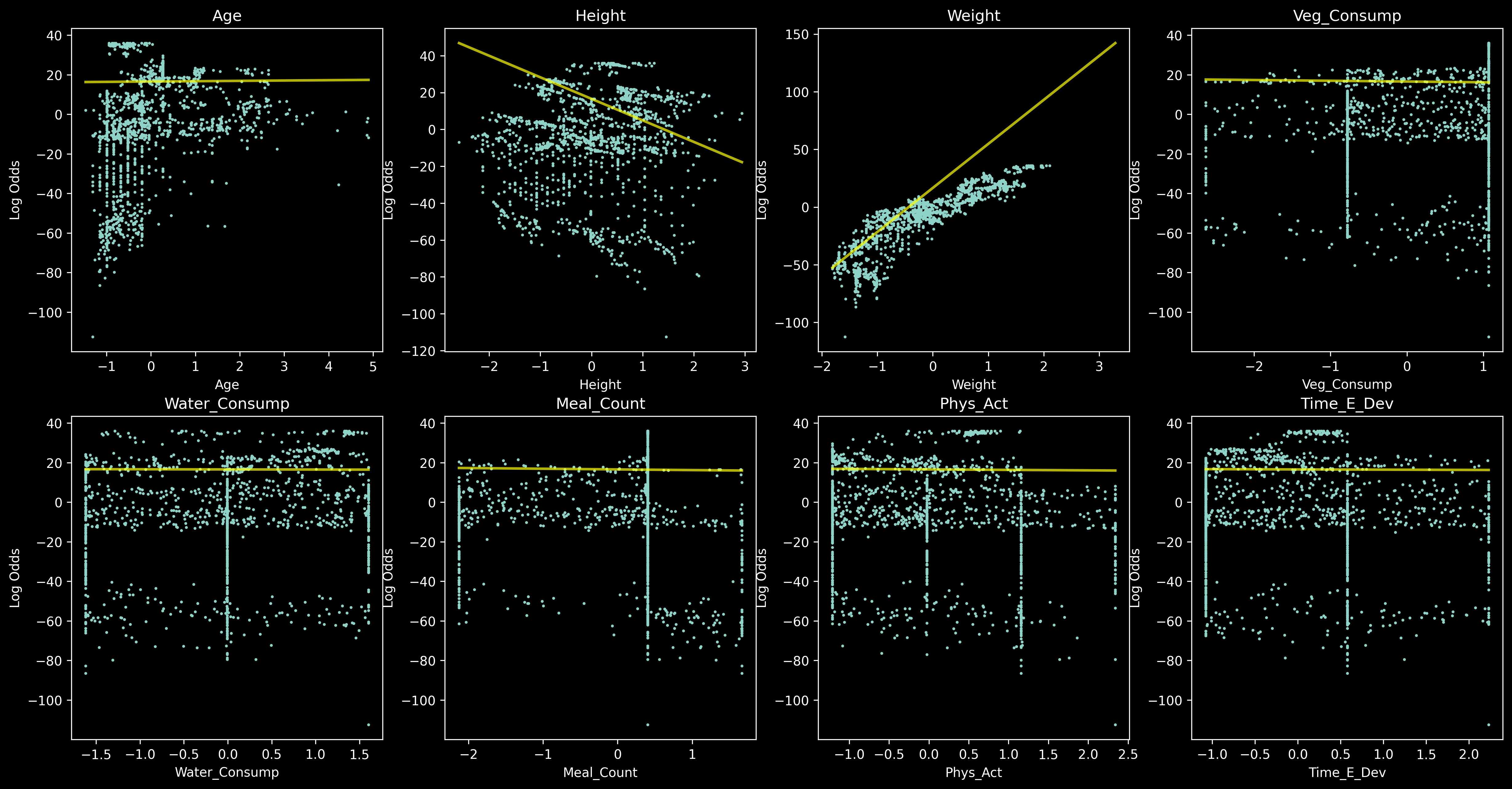

Here, we analyzed the distribution of each variable via a bar chart or a kernel density estimate, depending on whether it is categorical or numerical, respectively.

This stage studies the imbalance between the classes.
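One simple way to quantify class imbalance is to compute each class's share of the samples and the ratio between the largest and smallest class counts. This is a minimal sketch; `class_distribution` is a hypothetical helper, and the majority-to-minority ratio is one common summary, not necessarily the one used in the project.

```python
from collections import Counter


def class_distribution(labels):
    """Return each class's share of the samples and the imbalance ratio
    (majority count / minority count); a ratio of 1.0 means perfectly balanced."""
    counts = Counter(labels)
    total = sum(counts.values())
    shares = {cls: n / total for cls, n in sorted(counts.items())}
    ratio = max(counts.values()) / min(counts.values())
    return shares, ratio
```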

We analyzed the correlations between all pairs of numerical variables using Pearson's correlation coefficient, all pairs of categorical variables using Cramér's V, and all numerical-categorical pairs using Pearson's correlation ratio.
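For numerical pairs, `np.corrcoef` gives Pearson's coefficient directly; the two other measures can be sketched in NumPy as below. This is a minimal sketch, assuming the plain (not bias-corrected) form of Cramér's V; `cramers_v` and `correlation_ratio` are hypothetical names, not functions from `CovarianceAnalysis.py`.

```python
import numpy as np


def cramers_v(x, y):
    """Cramér's V between two categorical sequences (no bias correction)."""
    x_vals, x_idx = np.unique(x, return_inverse=True)
    y_vals, y_idx = np.unique(y, return_inverse=True)
    # contingency table of observed counts
    table = np.zeros((len(x_vals), len(y_vals)))
    np.add.at(table, (x_idx, y_idx), 1)
    n = table.sum()
    # counts expected under independence of x and y
    expected = table.sum(axis=1, keepdims=True) * table.sum(axis=0, keepdims=True) / n
    chi2 = ((table - expected) ** 2 / expected).sum()
    k = min(table.shape) - 1
    return float(np.sqrt(chi2 / (n * k)))


def correlation_ratio(categories, values):
    """Correlation ratio (eta): share of the variance of `values`
    explained by the category means, square-rooted."""
    categories = np.asarray(categories)
    values = np.asarray(values, dtype=float)
    mean = values.mean()
    between = sum(
        values[categories == c].size * (values[categories == c].mean() - mean) ** 2
        for c in np.unique(categories)
    )
    total = ((values - mean) ** 2).sum()
    return float(np.sqrt(between / total))
```

Both measures lie in [0, 1], which keeps them comparable with the absolute value of Pearson's coefficient in a single heatmap.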

Here, the separability of the target is analyzed under all possible pairs of numerical variables.

Here, we study the separability of the target across the categories of each categorical variable.

We automated a generalization check that, given any two of the validation set size, the maximum allowed error, and the probability of violating that error, computes the third. This informed our choice of the number of cross-validation splits (for tuning each model) and of the validation set size (for choosing between models).

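Hoeffding's Inequality states that, for a single fixed hypothesis $g$ evaluated on a validation set of $N$ independent samples and any tolerance $\epsilon > 0$,

$$\mathbb{P}\left[\,\lvert E_{\text{val}}(g) - E_{\text{out}}(g)\rvert > \epsilon\,\right] \le 2e^{-2\epsilon^{2}N}$$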
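The generalization check described above can be sketched by solving the two-sided Hoeffding bound $\delta = 2e^{-2\epsilon^2 N}$ for whichever quantity is missing. This is a minimal sketch, assuming the check uses exactly this bound for one fixed model; `hoeffding_solve` is a hypothetical name.

```python
import math


def hoeffding_solve(n=None, epsilon=None, delta=None):
    """Given any two of N (validation size), epsilon (allowed error) and
    delta (probability of exceeding it) in delta = 2 exp(-2 eps^2 N),
    return the third."""
    if [n, epsilon, delta].count(None) != 1:
        raise ValueError("Provide exactly two of n, epsilon, delta")
    if delta is None:
        return 2 * math.exp(-2 * epsilon ** 2 * n)
    if epsilon is None:
        return math.sqrt(math.log(2 / delta) / (2 * n))
    # smallest integer N that satisfies the bound
    return math.ceil(math.log(2 / delta) / (2 * epsilon ** 2))
```

For example, `hoeffding_solve(epsilon=0.05, delta=0.05)` returns 738: keeping the validation error within 0.05 of the out-of-sample error with 95% confidence needs roughly 738 validation samples.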

We considered two trivial baselines (MostFrequent and UniformRandom) and one nontrivial baseline (Gaussian Naive Bayes) to set the bar for the bias of the models we consider next. We then initiated and analyzed the following models:

- SupportVectorMachines

- LogisticRegression

- Perceptron

- RandomForest

- AdaptiveBoosting
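The two trivial baselines and the WF1 metric used throughout can be sketched in plain Python. This is a minimal sketch; `weighted_f1`, `most_frequent_baseline` and `uniform_random_baseline` are hypothetical names. In scikit-learn, `f1_score(y_true, y_pred, average="weighted")` and `DummyClassifier(strategy="most_frequent")` / `DummyClassifier(strategy="uniform")` provide library equivalents.

```python
import random
from collections import Counter


def weighted_f1(y_true, y_pred):
    """Per-class F1 = 2TP / (2TP + FP + FN), averaged with weights equal to
    each class's share of the true labels (its support)."""
    support = Counter(y_true)
    total = len(y_true)
    score = 0.0
    for cls, n_cls in support.items():
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == cls and p == cls)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != cls and p == cls)
        fn = n_cls - tp
        score += (n_cls / total) * (2 * tp / (2 * tp + fp + fn))
    return score


def most_frequent_baseline(y_train, n):
    """Predict the majority training class for all n samples."""
    majority = Counter(y_train).most_common(1)[0][0]
    return [majority] * n


def uniform_random_baseline(y_train, n, seed=0):
    """Predict a class drawn uniformly at random for each of n samples."""
    rng = random.Random(seed)
    classes = sorted(set(y_train))
    return [rng.choice(classes) for _ in range(n)]
```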

We designed a unified analysis cycle that applies to any of the models, as demonstrated in the report and the notebooks. It consists of the following stages, in no particular order:

| Analysis Stage | Components |

|---|---|

| Model Greetings | Initiating Model and Viewing Hyperparameters |

| Studying the Hyperparameters and their Importance (documentation) | |

| Basic Model Analysis | Testing Model Assumptions (if any) |

| VC Dimension Check for Generalization | |

| Bias Variance Analysis | |

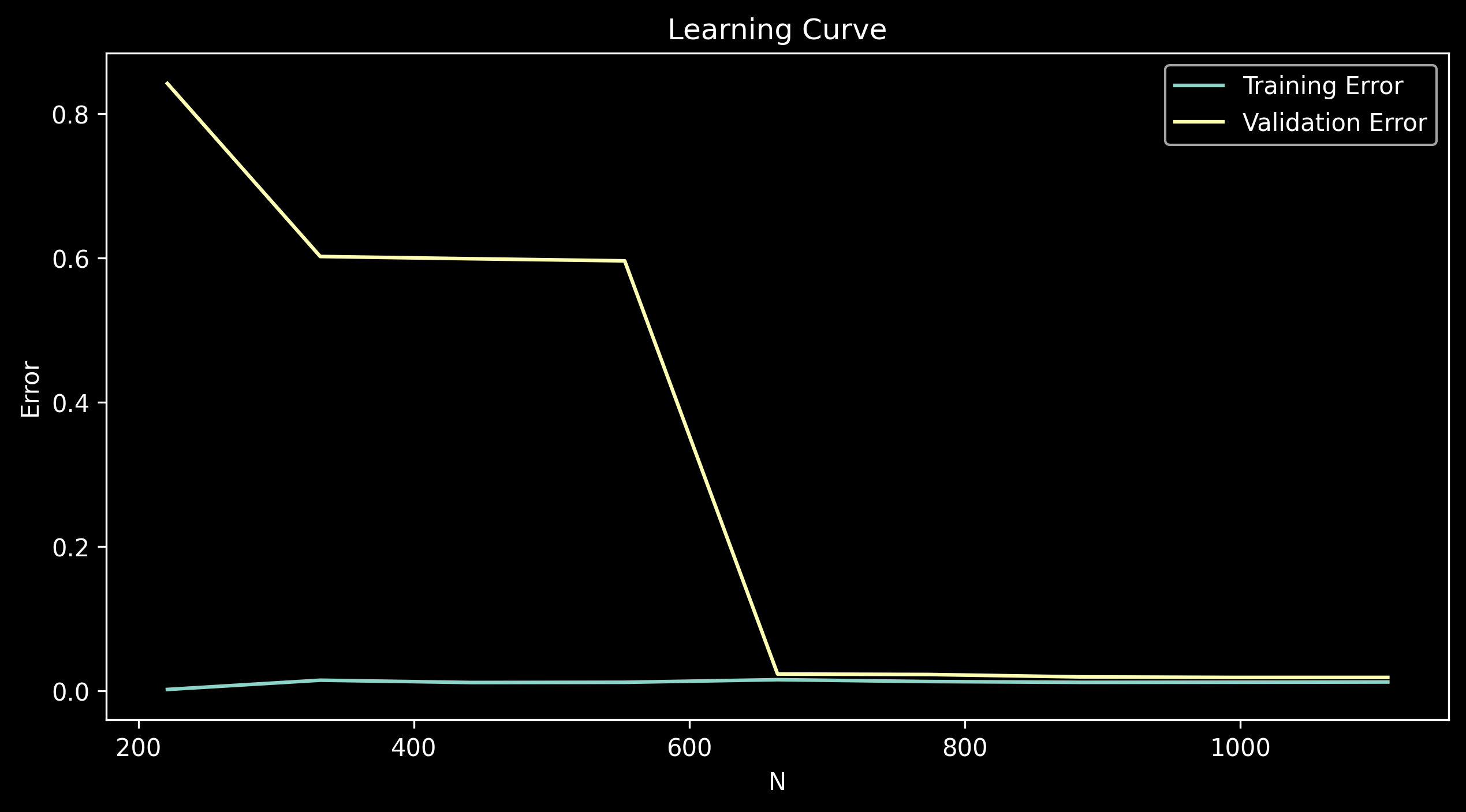

| Learning Curve | |

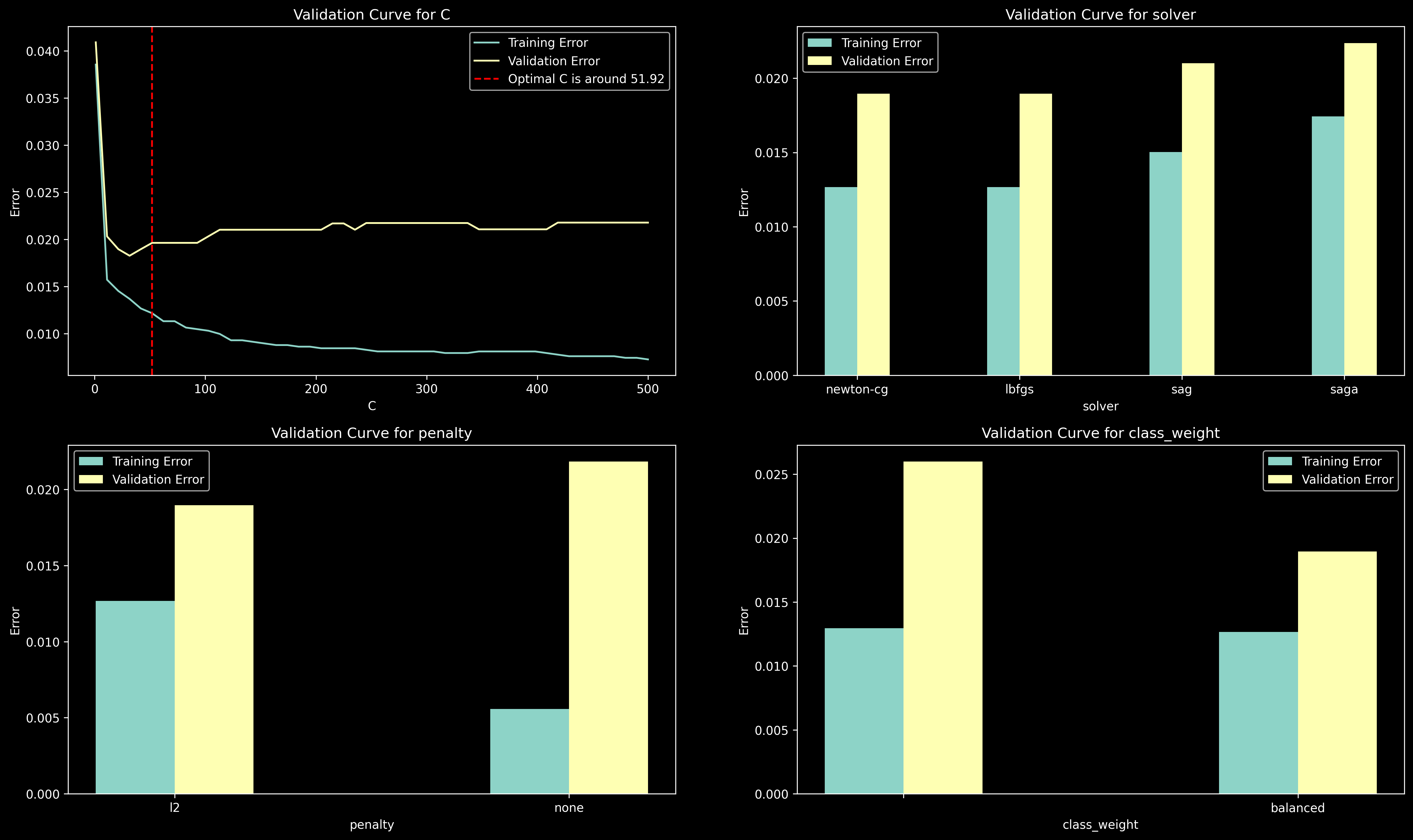

| Hyperparameter Analysis | Validation Curves |

| Hyperparameter Search | |

| Hyperparameter Logging | |

| Feature Analysis | Feature Importance |

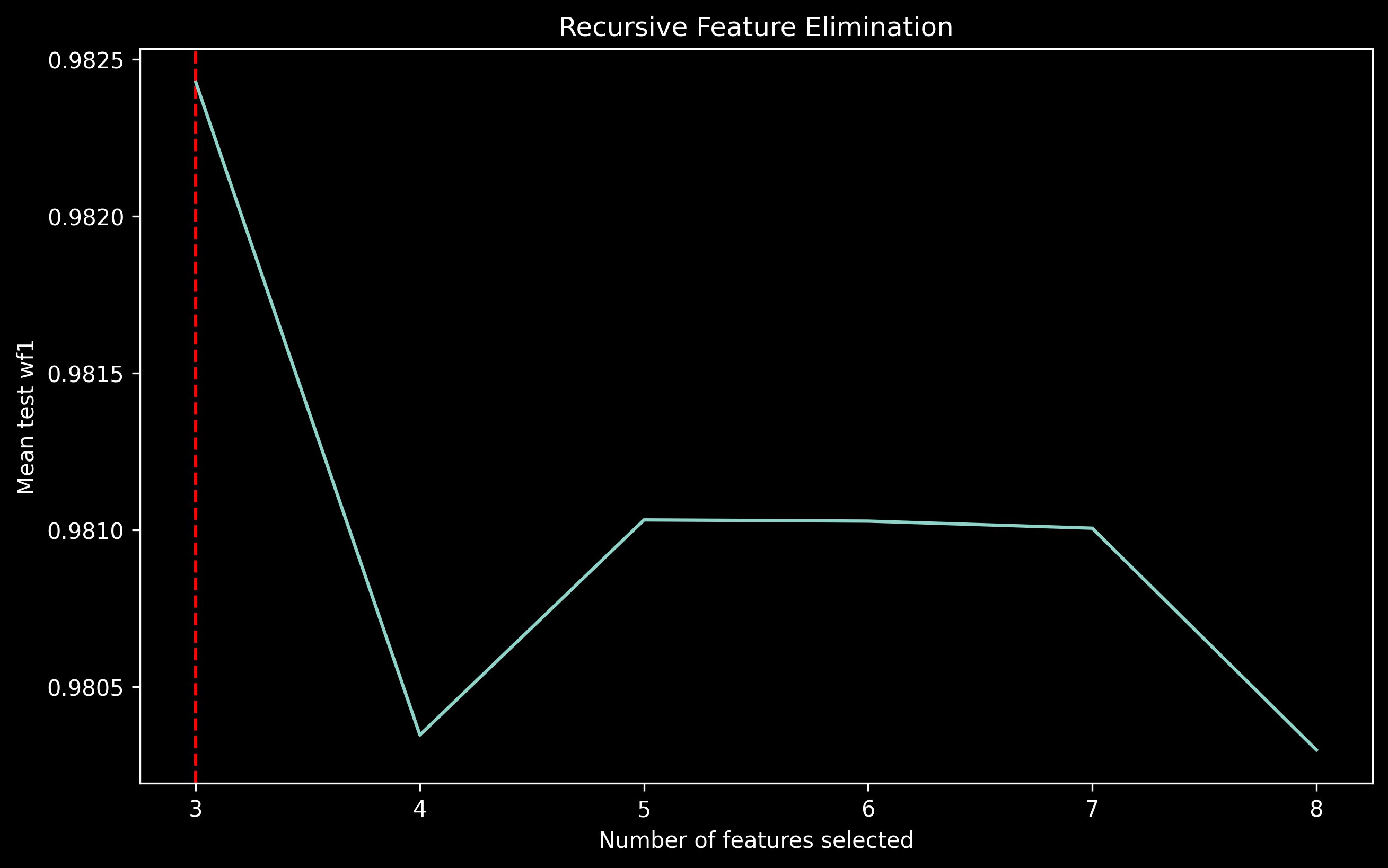

| Recursive Feature Elimination | |

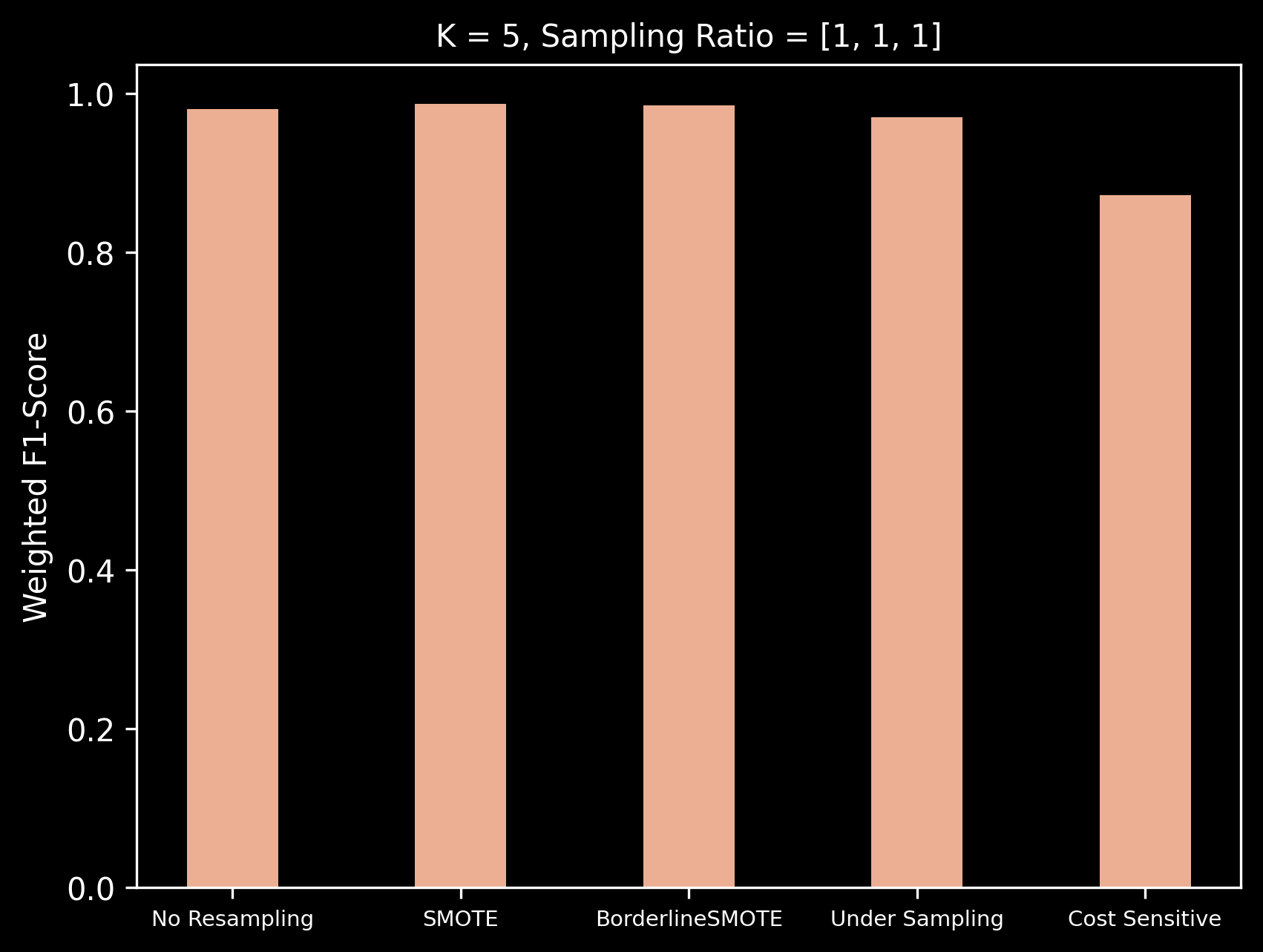

| Class Imbalance Analysis | Analyzing Different Methods |

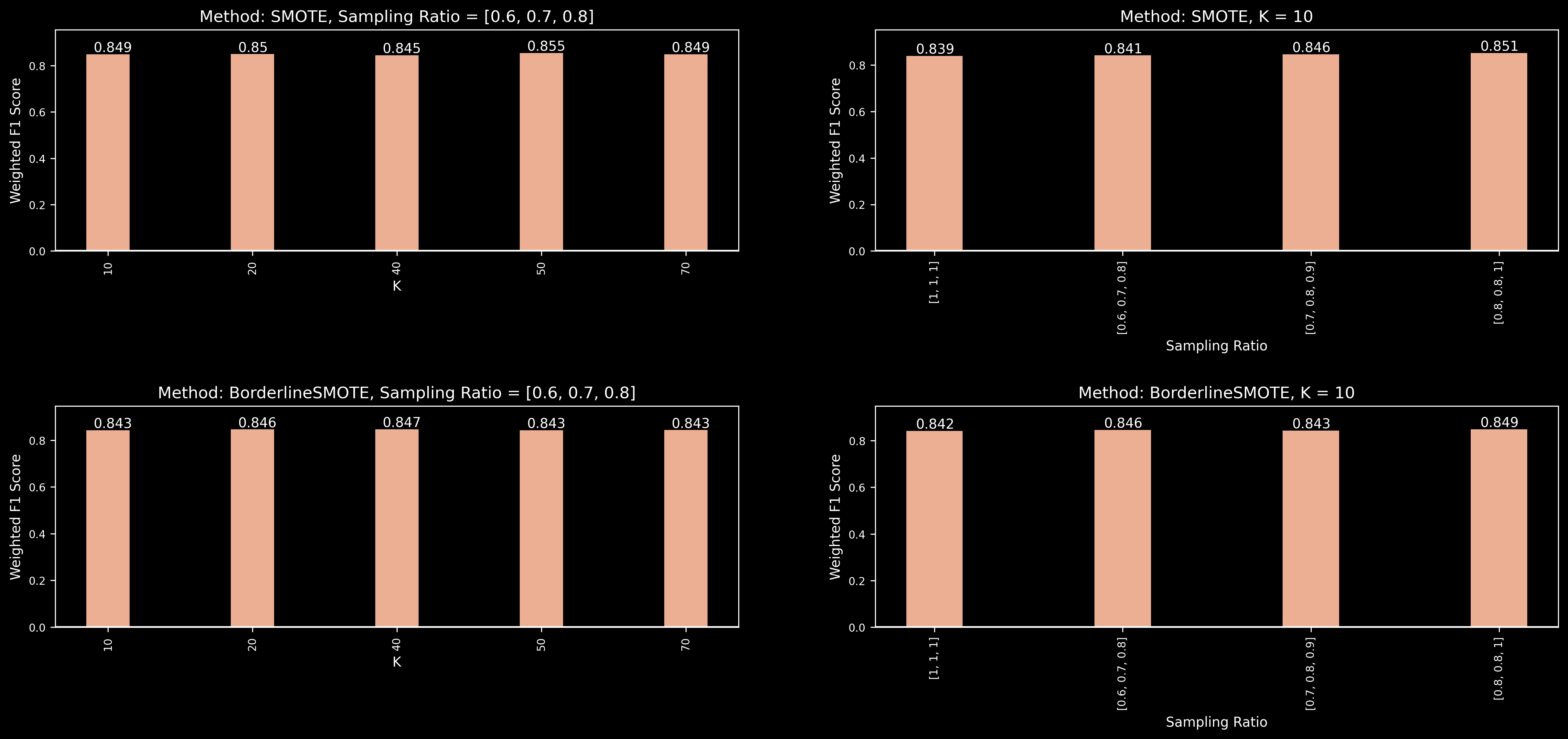

| Analysis Different Hyperparameters |

We demonstrate this cycle for Logistic Regression; for the extracted insights and the other models, see the report or the notebooks.

| C | class_weight | dual | fit_intercept | intercept_scaling | l1_ratio | max_iter | multi_class | n_jobs | penalty | random_state | solver | tol | verbose | warm_start |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 40.074 | balanced | False | True | 1 | None | 100 | multinomial | None | l2 | None | newton-cg | 0.0001 | 0 | False |

The purpose of this stage is to get familiar with the model and its hyperparameters which involved research or reading the documentation.

In this stage, the number of parameters of the model was used to estimate its VC dimension, and hence its generalization ability, via the VC bound rule of thumb.
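The rule of thumb can be sketched in two functions. This is a minimal sketch, assuming that $d_{VC}$ is approximated by the number of free parameters and that the commonly quoted guideline $N \ge 10\,d_{VC}$ is used; both function names are hypothetical, and the parameter count assumes one weight vector plus intercept per class, as scikit-learn stores for multinomial logistic regression.

```python
def multinomial_lr_params(n_features, n_classes):
    """Parameter count of multinomial logistic regression: one weight per
    feature plus one intercept, for each class."""
    return n_classes * (n_features + 1)


def vc_rule_of_thumb(n_samples, d_vc, factor=10):
    """Rule of thumb: generalization is reasonable when N >= factor * d_VC."""
    return n_samples >= factor * d_vc
```

As an illustration only, with the 16 raw features and 4 classes this gives 4 × 17 = 68 parameters, and 1180 samples exceed 10 × 68 = 680. One-hot encoding or feature selection changes `n_features`, so the count should follow the actual prepared data.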

| Train WF1 | Val WF1 | Avoidable Bias | Variance |
|---|---|---|---|
| 0.986 | 0.981 | 0.014 | 0.005 |

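The bias and variance of the model were computed heuristically here, in the style of Andrew Ng: the avoidable bias is the gap between a perfect WF1 of 1 and the training WF1, and the variance is the gap between the training and validation WF1.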
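The table's figures follow from two subtractions. This is a minimal sketch, assuming the optimal (Bayes) WF1 is taken as 1.0; `bias_variance` is a hypothetical helper.

```python
def bias_variance(train_score, val_score, optimal_score=1.0):
    """Heuristic split of the error for a higher-is-better score such as WF1."""
    avoidable_bias = optimal_score - train_score  # distance from the best achievable score
    variance = train_score - val_score            # generalization gap
    return avoidable_bias, variance


bias, var = bias_variance(0.986, 0.981)
print(round(bias, 3), round(var, 3))  # 0.014 0.005
```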

The learning curve indicates the bias of the model and sheds light on whether it would benefit from more data.

Validation curves were used to study the effect of specific hyperparameters on the model's performance (in-sample and out-of-sample error) and to mark the point where the model starts to overfit.
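Given the scores behind a validation curve, the overfitting point can be located as the hyperparameter value where the validation score peaks while the training score keeps rising. This is a minimal sketch; `overfitting_onset` is a hypothetical name and the numbers in the example are illustrative, not results from this project.

```python
def overfitting_onset(param_values, train_scores, val_scores):
    """Locate where a validation curve peaks.

    param_values must be ordered from the simplest to the most complex model
    (for scikit-learn's LogisticRegression, increasing C means weaker
    regularization). Returns the value with the best validation score, after
    which the model starts to overfit, and the train/validation gap there.
    """
    best = max(range(len(param_values)), key=lambda i: val_scores[i])
    return param_values[best], train_scores[best] - val_scores[best]


# Illustrative numbers only, not results from this project.
C_values = [0.01, 0.1, 1, 10, 100]
train_wf1 = [0.80, 0.90, 0.96, 0.99, 1.00]
val_wf1 = [0.79, 0.88, 0.94, 0.93, 0.90]
print(overfitting_onset(C_values, train_wf1, val_wf1))
```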

| C | class_weight | multi_class | penalty | solver | WF1 |
|---|---|---|---|---|---|
| 40.074 | balanced | multinomial | l2 | newton-cg | 0.98104 |

Here we used random search to find an optimal set of hyperparameters.
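Random search itself is short to write down. This is a minimal sketch, assuming each hyperparameter is drawn uniformly from a finite list of candidates; `random_search` is a hypothetical name, and `toy_score` merely stands in for a cross-validated WF1. scikit-learn's `RandomizedSearchCV` is the library equivalent and also accepts continuous distributions (useful for a value such as `C`).

```python
import random


def random_search(space, score_fn, n_iter=20, seed=0):
    """Random search: sample n_iter configurations uniformly from `space`
    (a dict mapping each hyperparameter to its candidate values) and keep the
    one with the highest score_fn(config)."""
    rng = random.Random(seed)
    best_cfg, best_score = None, float("-inf")
    for _ in range(n_iter):
        cfg = {name: rng.choice(values) for name, values in space.items()}
        score = score_fn(cfg)
        if score > best_score:
            best_cfg, best_score = cfg, score
    return best_cfg, best_score


space = {
    "C": [0.1, 1.0, 10.0, 100.0],
    "class_weight": [None, "balanced"],
    "solver": ["lbfgs", "newton-cg"],
}
# Toy objective standing in for cross-validated WF1 (hypothetical).
toy_score = lambda cfg: -abs(cfg["C"] - 10.0) + (cfg["class_weight"] == "balanced")
best_cfg, best_score = random_search(space, toy_score, n_iter=200)
```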

| info | | | | read_data | | | | | LogisticRegression | | | | | | | | | | | metrics | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| time | date | duration | id | split | kind | standardize | selected | encode | class_weight | multi_class | penalty | solver | dual | tol | fit_intercept | intercept_scaling | max_iter | verbose | warm_start | train_wf1 | val_wf1 |
| 16:00:51 | 05/14/23 | 49.76 s | 3 | train | Numerical | True | True | | balanced | multinomial | l2 | newton-cg | False | 0.0 | True | 1 | 100 | 0 | False | 0.985 | 0.9814 |
| 16:19:45 | 05/14/23 | 7.69 s | 4 | all | Numerical | True | | | balanced | multinomial | l2 | newton-cg | False | 0.0 | True | 1 | 100 | 0 | False | 0.991 | 0.9831 |
| 01:48:36 | 05/15/23 | 17.70 s | 6 | all | Numerical | True | | | balanced | multinomial | l2 | newton-cg | False | 0.0 | True | 1 | 100 | 0 | False | 0.986 | 0.9838 |

We logged experiments inside the notebooks using the MLPath library; shown above is a sample of the log table.

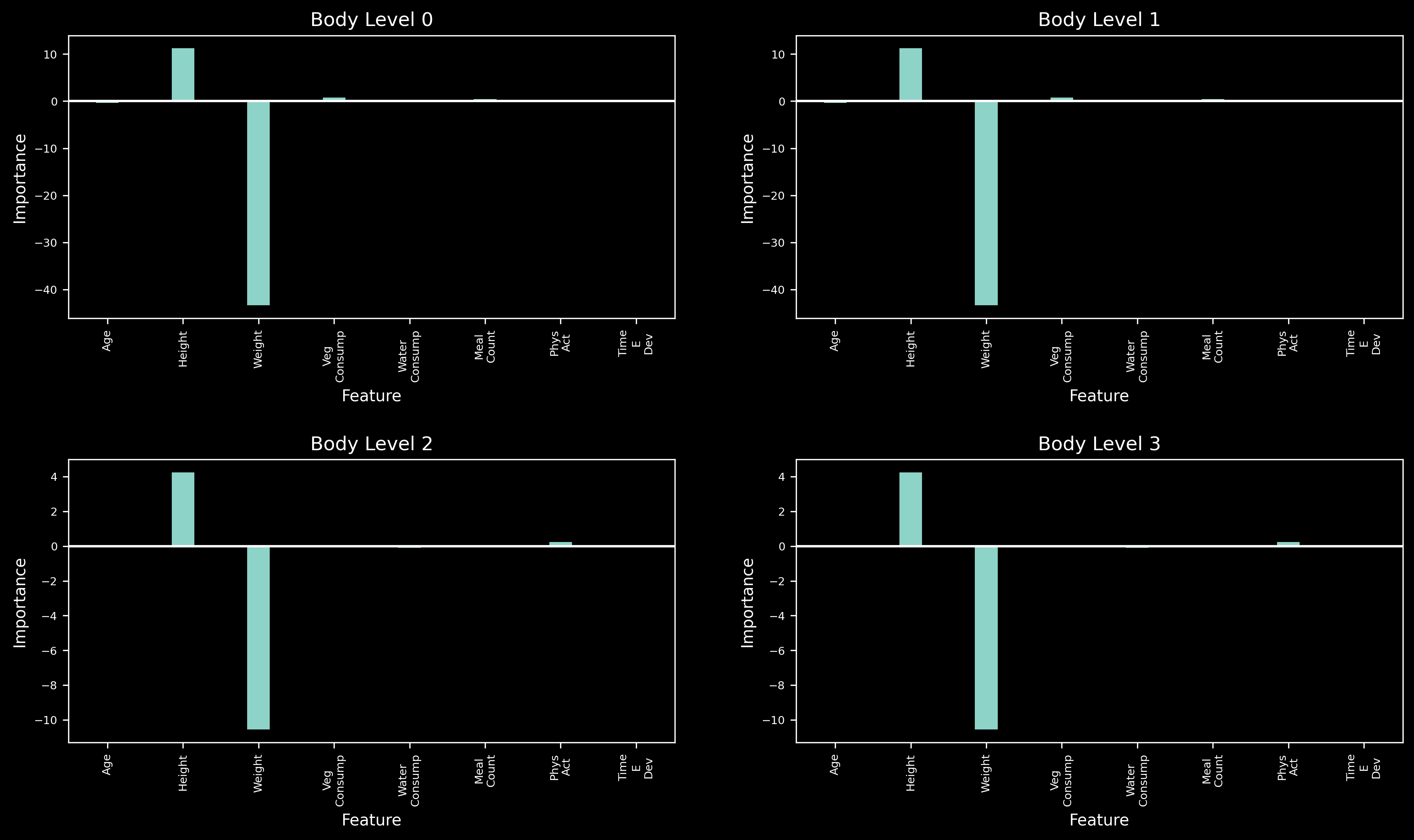

Here, we analyzed the importance of each feature as given by the model's weights.
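For a multinomial linear model, one common way to turn weights into a single importance per feature is the mean absolute weight across classes. This is a minimal sketch, assuming a coefficient matrix of shape `(n_classes, n_features)` as in scikit-learn's `LogisticRegression.coef_` and standardized features (so weights are comparable); `weight_importance` is a hypothetical name, and the project may aggregate the weights differently.

```python
import numpy as np


def weight_importance(coef, feature_names):
    """Importance of each feature as the mean absolute weight across classes,
    returned from least to most important."""
    scores = np.abs(np.asarray(coef, dtype=float)).mean(axis=0)
    order = np.argsort(scores)
    return [(feature_names[i], float(scores[i])) for i in order]
```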

| Veg_Consump | Height | Weight |
|---|---|---|
| 0.32722 | 7.841 | 26.777 |

As suggested by one of Vapnik's papers, a decent feature selection strategy is recursive feature elimination: repeatedly remove the least important feature until a minimum number of features is reached or the metric stops improving.
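The elimination loop can be sketched independently of the model. This is a minimal sketch; `recursive_feature_elimination` is a hypothetical name, and `importance_fn` / `score_fn` stand in for refitting the model and reading its weights or its validation WF1. scikit-learn's `RFE` and `RFECV` (the latter picks the number of features by cross-validation) are library equivalents.

```python
def recursive_feature_elimination(features, importance_fn, score_fn, min_features=1):
    """Repeatedly drop the least important feature until min_features remain
    or dropping it lowers the score.

    importance_fn(features) -> {feature: importance}
    score_fn(features) -> validation score (higher is better)
    """
    current = list(features)
    best_score = score_fn(current)
    while len(current) > min_features:
        importances = importance_fn(current)
        weakest = min(current, key=lambda f: importances[f])
        candidate = [f for f in current if f != weakest]
        candidate_score = score_fn(candidate)
        if candidate_score < best_score:
            break  # removing the weakest feature hurts: stop here
        current, best_score = candidate, candidate_score
    return current, best_score
```

Ties (an equal score with one feature fewer) continue the elimination here, on the assumption that a smaller feature set with the same score is preferable; using `<=` instead would stop at the first non-improvement.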

This stage compares different resampling approaches and class weighting.
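The two families being compared can be sketched as follows. This is a minimal sketch; both function names are hypothetical. The weights follow the formula scikit-learn documents for `class_weight="balanced"`, and the resampler is plain random oversampling (imbalanced-learn's `RandomOverSampler` is a library equivalent); the project may have compared other resampling methods as well.

```python
import random
from collections import Counter


def balanced_class_weights(labels):
    """n_samples / (n_classes * count(class)), the formula scikit-learn uses
    for class_weight="balanced"."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {cls: n / (k * c) for cls, c in counts.items()}


def random_oversample(X, y, seed=0):
    """Duplicate randomly chosen samples of each smaller class until every
    class matches the majority-class count."""
    rng = random.Random(seed)
    counts = Counter(y)
    target = max(counts.values())
    X_out, y_out = list(X), list(y)
    for cls, c in counts.items():
        pool = [x for x, label in zip(X, y) if label == cls]
        for _ in range(target - c):
            X_out.append(rng.choice(pool))
            y_out.append(cls)
    return X_out, y_out
```

Class weighting leaves the data untouched and rescales each sample's loss, whereas oversampling changes the training set itself, so it should only be applied to the training folds and never to the validation data.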

Different hyperparameters within specific resampling approach(es) were analyzed here.

As illustrated above.

We set the following working standards before undertaking the project. If you wish to contribute for any reason, please respect them.

| Essam | Mariem Muhammed | Marim Naser | MUHAMMAD SAAD |
|---|---|---|---|

We have utilized Notion for progress tracking and task assignment among the team.