- Initialize replay memory capacity.
- Initialize the network with random weights.
- For each episode:
  - Initialize the starting state.
  - For each time step:
    - Select an action.
      - Via exploration or exploitation.
    - Execute the selected action in an emulator.
    - Observe the reward and next state.
    - Store the experience in replay memory.
    - Sample a random batch from replay memory.
    - Preprocess the states from the batch.
    - Pass the batch of preprocessed states to the policy network.
    - Calculate the loss between the output Q-values and the target Q-values.
      - Requires a second pass through the network for the next state.
    - Gradient descent updates the weights in the policy network to minimize the loss.

- Select an action.
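The replay-memory and target-calculation steps above can be sketched in plain Python. This is a minimal sketch, not the repo's `agent.py`. The names `Experience`, `ReplayMemory` and `compute_targets` are hypothetical, as are the discount `gamma = 0.95` and the `q_values` callable, which stands in for a forward pass of the network. The target follows the Bellman equation: for a terminal step it is the reward alone. Otherwise it is reward + gamma * max over a' of Q(next_state, a').

```python
import random
from collections import deque
from typing import Callable, Deque, List, NamedTuple, Sequence


class Experience(NamedTuple):
    state: tuple
    action: int
    reward: float
    next_state: tuple
    done: bool


class ReplayMemory:
    """Fixed-capacity buffer (hypothetical); the oldest experiences are dropped when full."""

    def __init__(self, capacity: int) -> None:
        self.buffer: Deque[Experience] = deque(maxlen=capacity)

    def push(self, experience: Experience) -> None:
        self.buffer.append(experience)

    def sample(self, batch_size: int) -> List[Experience]:
        # Uniform random sample without replacement; this reduces the
        # correlation between consecutive time steps within a batch.
        return random.sample(list(self.buffer), batch_size)

    def __len__(self) -> int:
        return len(self.buffer)


def compute_targets(
    batch: Sequence[Experience],
    q_values: Callable[[tuple], List[float]],  # hypothetical stand-in for the network
    gamma: float = 0.95,  # assumed discount factor
) -> List[float]:
    """Bellman targets: r for terminal steps, r + gamma * max_a' Q(s', a') otherwise."""
    targets = []
    for exp in batch:
        if exp.done:
            targets.append(exp.reward)
        else:
            # The "second pass": evaluate the network on the next state.
            targets.append(exp.reward + gamma * max(q_values(exp.next_state)))
    return targets
```

In a full agent, each target would replace the Q-value of the action actually taken in that experience, and the network would then be fitted on the batch.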

Find all the TODOs 🕵️‍♂️

Environment:

- Set rewards (`snake_env.py/calculate_reward()`).
- Define the state space: what is the agent allowed to observe? (`snake_env.py/get_state()`)
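One possible starting point for the environment TODOs is sketched below. It is purely illustrative. The function names mirror the TODOs, but the parameters are assumptions and not the signatures in `snake_env.py`. So are the ±10 reward values and the eight boolean state features (food direction plus current heading). A real state would likely also include danger signals such as walls or the snake's own body.

```python
from typing import Tuple

Point = Tuple[int, int]


def calculate_reward(ate_food: bool, died: bool) -> float:
    """Hypothetical reward scheme; the values are illustrative assumptions."""
    if died:
        return -10.0  # hitting a wall or the snake's own body ends the episode
    if ate_food:
        return 10.0   # the behaviour we want to reinforce
    return 0.0        # neutral step


def get_state(head: Point, food: Point, direction: Point) -> Tuple[bool, ...]:
    """Hypothetical observation: where the food is relative to the head, and the heading."""
    hx, hy = head
    fx, fy = food
    return (
        fx < hx,               # food is to the left
        fx > hx,               # food is to the right
        fy < hy,               # food is above (y grows downward)
        fy > hy,               # food is below
        direction == (-1, 0),  # moving left
        direction == (1, 0),   # moving right
        direction == (0, -1),  # moving up
        direction == (0, 1),   # moving down
    )
```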

Agent:

- Build the neural network model which will estimate the Q-value. (

agent.py/build_model()) - Implement

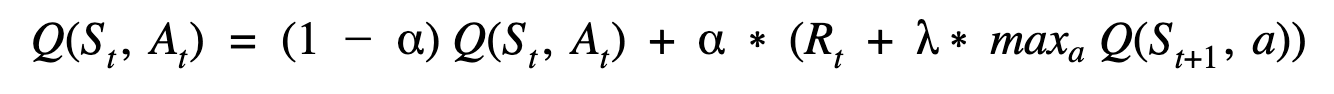

agent.py/get_action()to fetch which action to perfom given a state. Remember to consider exploration vs. explotation. - Implement the Bellman Equation in

agent.py/train_with_experience_replay()to actually train your model from previous (state, action)-pairs. - OPTIONAL - Gradually change exploration vs. explotation by changing (

agent.py/update_exploration_strategy())
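Exploration vs. exploitation is commonly handled with an epsilon-greedy policy plus a decaying epsilon. This is a minimal sketch under stated assumptions. Unlike the repo's `get_action()`, it takes precomputed Q-values instead of a state. The decay factor `0.995` and the floor `0.01` are hypothetical choices.

```python
import random
from typing import Sequence


def get_action(q_values: Sequence[float], epsilon: float, rng: random.Random) -> int:
    """Epsilon-greedy (hypothetical signature): explore with probability epsilon, else exploit."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))  # explore: uniformly random action
    # Exploit: index of the highest Q-value.
    return max(range(len(q_values)), key=lambda a: q_values[a])


def update_exploration_strategy(
    epsilon: float, decay: float = 0.995, min_epsilon: float = 0.01
) -> float:
    """Multiplicative decay toward a floor, so the agent never stops exploring entirely."""
    return max(min_epsilon, epsilon * decay)
```

Calling `update_exploration_strategy` once per episode (or per step) makes the agent mostly random early on and mostly greedy later.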

Stuck? Check out the solution examples in the different git branches.

- Build the neural network model which will estimate the Q-value. (

- Introduce a target network

One of the interesting things about Deep Q-Learning is that the learning process uses two neural networks. These networks have the same architecture but different weights. Every N steps, the weights from the main network are copied to the target network. Using both networks stabilizes learning. The target Q-values are computed with the target network, whose weights stay fixed between copies, so the main network is not chasing a target that moves with every update. In our implementation, the main network weights replace the target network weights every 100 steps. (source)
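The copy-every-N-steps rule can be sketched without a deep-learning framework. In this sketch, the `TargetNetworkSync` class and the dict-of-lists `Weights` type are hypothetical stand-ins for real network weights. With a Keras model, the copy itself would typically be `target_model.set_weights(model.get_weights())`.

```python
import copy
from typing import Dict, List

Weights = Dict[str, List[float]]  # hypothetical stand-in for real network weights


class TargetNetworkSync:
    """Copies the main network's weights into the target network every `sync_every` steps."""

    def __init__(self, main_weights: Weights, sync_every: int = 100) -> None:
        self.main_weights = main_weights
        # Start the target network as an independent copy of the main network.
        self.target_weights = copy.deepcopy(main_weights)
        self.sync_every = sync_every
        self.steps = 0

    def step(self) -> bool:
        """Call once per training step; returns True when a sync happened."""
        self.steps += 1
        if self.steps % self.sync_every == 0:
            # Deep copy so later updates to the main network don't leak into the target.
            self.target_weights = copy.deepcopy(self.main_weights)
            return True
        return False
```

Between syncs, the target weights stay frozen even though the main weights change every step. That frozen state is what keeps the Bellman targets stable.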

Recommended: Python 3.8 downloaded from python.org (on macOS).

You may need to install a TensorFlow version manually, e.g. `pip install https://storage.googleapis.com/tensorflow/mac/cpu/tensorflow-2.4.0-cp38-cp38-macosx_10_14_x86_64.whl` (for Python 3.8).

Solution: Download and install Python from python.org, as this is probably an issue with the Homebrew version.

- `agent.py`: Edit this to implement the meat of the DQN algorithm.
- `environment.py`: Here you can edit the state and the rewards given.
- `play_snake.py`: Play Snake and check that your requirements are in place.
- `train.py`: Train your model.
- `test.py`: Test your saved models, e.g. `python test.py 1043 650`, where 1043 is the id and 650 is the total reward (models are stored in `models/{timestamp}/{total_reward}`).