NUS ME5413 Autonomous Mobile Robotics Final Project, by Group 9. Date: 2024.4.7

Authors: Zhang Yuanbo; Cao Ruoxi; Li Yuze; Wang Xiangyu; Yin Jiaju; Li Qi

Config:

Camera: Intel RealSense D435 + mono

In our first experiment, we trained a YOLOv3-based detector on handwritten digits.

In the final version, we use find_object_2d (template matching) to obtain the pixel coordinates of the target object in the frame, and combine them with depth information to navigate to it.
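Combining a pixel coordinate with a depth reading is commonly done by back-projecting through the pinhole camera model. The following is a minimal sketch of that idea, not the code used in this repo. The function name `pixel_to_camera_point` and the intrinsics `FX`, `FY`, `CX`, `CY` are hypothetical placeholders; real values would come from the camera calibration.

```python
def pixel_to_camera_point(u, v, depth_m, fx, fy, cx, cy):
    """Back-project pixel (u, v) with a depth in meters into the camera optical frame.

    Assumes an ideal pinhole camera with no lens distortion.
    """
    if depth_m <= 0.0:
        raise ValueError("depth must be positive")
    x = (u - cx) * depth_m / fx  # right of the optical axis
    y = (v - cy) * depth_m / fy  # below the optical axis
    z = depth_m                  # along the optical axis
    return (x, y, z)


# Hypothetical intrinsics for a 640x480 image (assumed values, not calibration data).
FX, FY, CX, CY = 600.0, 600.0, 320.0, 240.0

# A target detected 60 px right of the image center, 2 m away.
point = pixel_to_camera_point(380.0, 240.0, 2.0, FX, FY, CX, CY)
print(point)
```

The resulting point is expressed in the camera frame; it still has to be transformed into the navigation frame before it can serve as a goal.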

- System Requirements:
  - Ubuntu 20.04
  - ROS Noetic (Melodic not yet tested)
  - C++11 and above
  - CMake: 3.0.2 and above
- This repo depends on the following standard ROS pkgs:
  - `roscpp`
  - `rospy`
  - `rviz`
  - `std_msgs`
  - `nav_msgs`
  - `geometry_msgs`
  - `visualization_msgs`
  - `tf2`
  - `tf2_ros`
  - `tf2_geometry_msgs`
  - `pluginlib`
  - `map_server`
  - `gazebo_ros`
  - `jsk_rviz_plugins`
  - `jackal_gazebo`
  - `jackal_navigation`
  - `velodyne_simulator`
  - `teleop_twist_keyboard`
  - `find_object_2d`
- And the official `gazebo_models` repository

Other required libraries are located in the src directory.

This repo is a ROS workspace containing three rospkgs:

- `interactive_tools`: customized tools to interact with gazebo and your robot
- `jackal_description`: contains the modified jackal robot model descriptions
- `me5413_world`: the main pkg, containing the gazebo world and the launch files

Note: If you are working on this project, it is encouraged to fork this repository and work on your own fork!

After forking this repo to your own github:

# Clone your own fork of this repo (assuming home here `~/`)

cd

git clone https://github.com/<YOUR_GITHUB_USERNAME>/ME5413_Final_Project.git

cd ME5413_Final_Project

# Install all dependencies

rosdep install --from-paths src --ignore-src -r -y

# Build

catkin_make

# Source

source devel/setup.bash

To properly load the gazebo world, you will need to have the necessary model files in the ~/.gazebo/models/ directory.

There are two sources of models needed:

- The official gazebo models

# Create the destination directory

cd

mkdir -p .gazebo/models

# Clone the official gazebo models repo (assuming home here `~/`)

git clone https://github.com/osrf/gazebo_models.git

# Copy the models into the `~/.gazebo/models` directory

cp -r ~/gazebo_models/* ~/.gazebo/models

- Our customized models

# Copy the customized models into the `~/.gazebo/models` directory

cp -r ~/ME5413_Final_Project/src/me5413_world/models/* ~/.gazebo/models

This command launches Gazebo with the project world:

# Launch Gazebo World together with our robot

roslaunch me5413_world world.launch

After launching Step 0, in the second terminal:

# Launch GMapping

roslaunch me5413_world mapping.launch

After finishing mapping, run the following command in the third terminal to save the map:

# Save the map as `my_map` in the `maps/` folder

roscd me5413_world/maps/

rosrun map_server map_saver -f my_map map:=/map

Once you have completed Step 1 (mapping) and saved your map, quit the mapping process.

Then, in the second terminal:

# Load a map, launch AMCL localizer and time-elastic-band navigation

roslaunch me5413_world teb_navigation.launch

Alternatively, you can try the elastic band planner or the basic planner:

# Other navigation methods, less stable

roslaunch me5413_world eband_navigation.launch

roslaunch me5413_world navigation.launch

To find the desired box (number 2), run the node that does template matching:

roslaunch me5413_world find_object_2d.launch

Then, press the rviz button Vehicle-2 to navigate the robot to the entrance of the package area.

Once the robot has arrived, press the rviz button Box-2 to publish the estimated goal pose and navigate to it.
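A planar goal pose needs an orientation as well as a position, and ROS pose messages store orientation as a quaternion. The sketch below shows the standard yaw-to-quaternion conversion for a rotation about the z axis. It is an illustration only: `make_goal` and its plain-dict layout are hypothetical stand-ins, not this repo's code or a ROS message type.

```python
import math


def yaw_to_quaternion(yaw_rad):
    """Quaternion (x, y, z, w) for a rotation of yaw_rad about the z axis."""
    half = 0.5 * yaw_rad
    return (0.0, 0.0, math.sin(half), math.cos(half))


def make_goal(x, y, yaw_rad, frame_id="map"):
    """Plain-dict stand-in for a stamped goal pose (hypothetical layout)."""
    qx, qy, qz, qw = yaw_to_quaternion(yaw_rad)
    return {
        "frame_id": frame_id,
        "position": {"x": x, "y": y, "z": 0.0},
        "orientation": {"x": qx, "y": qy, "z": qz, "w": qw},
    }


# Example values (assumed): a goal at (4, -2) facing along +y.
goal = make_goal(4.0, -2.0, math.pi / 2)
print(goal["orientation"])
```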

With the depth camera, run this node to do 3D detection:

roslaunch me5413_world find_object_3d.launch

Press the rviz button Box-3 to navigate to box 2 once the depth camera has detected it.

The goal is computed from the depth information by a 3D frame transformation and then published.
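To illustrate what a frame transformation does here, the sketch below moves a detected point from a robot-fixed frame into the map frame using a planar rigid transform. This is a simplified 2D illustration under stated assumptions (x forward, y left, robot pose known in the map frame), not the repo's implementation; in a running ROS system, `tf2` would normally provide the transform. The name `transform_to_map` is hypothetical.

```python
import math


def transform_to_map(point_in_base, robot_pose_in_map):
    """Apply a planar rigid transform: base-frame (x, y) -> map-frame (x, y).

    robot_pose_in_map is (x, y, yaw_rad) of the robot expressed in the map frame.
    """
    px, py = point_in_base
    rx, ry, yaw = robot_pose_in_map
    mx = rx + math.cos(yaw) * px - math.sin(yaw) * py
    my = ry + math.sin(yaw) * px + math.cos(yaw) * py
    return (mx, my)


# Assumed example: robot at (1, 2) facing +y, box detected 3 m straight ahead.
goal_xy = transform_to_map((3.0, 0.0), (1.0, 2.0, math.pi / 2))
print(goal_xy)
```

With these example values the goal lands approximately at (1, 5) in the map frame, up to floating-point rounding.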

- You may use any SLAM algorithm you like, any type:
  - 2D LiDAR
  - 3D LiDAR
  - Vision
  - Multi-sensor
- Verify your SLAM accuracy by comparing your odometry with the published `/gazebo/ground_truth/state` topic (`nav_msgs::Odometry`), which contains the ground truth odometry of the robot.
- You may want to use tools like EVO to quantitatively evaluate the performance of your SLAM algorithm.
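As a rough idea of what a quantitative comparison against ground truth looks like, the sketch below computes the root-mean-square position error between two trajectories. It assumes the samples are already time-aligned and expressed in the same frame, which dedicated tools such as EVO handle for you; the function name `position_rmse` and the sample data are made up for illustration.

```python
import math


def position_rmse(estimated, ground_truth):
    """Root-mean-square position error over time-aligned (x, y) samples."""
    if len(estimated) != len(ground_truth) or not estimated:
        raise ValueError("trajectories must be non-empty and equally long")
    squared = [
        (ex - gx) ** 2 + (ey - gy) ** 2
        for (ex, ey), (gx, gy) in zip(estimated, ground_truth)
    ]
    return math.sqrt(sum(squared) / len(squared))


# Made-up sample trajectories (meters), not measured data.
est = [(0.0, 0.0), (1.0, 0.1), (2.0, -0.1)]
gt = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
print(position_rmse(est, gt))
```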

-

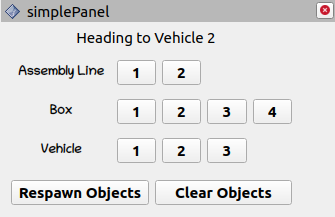

From the starting point, move to the given pose within each area in sequence

- Assembly Line 1, 2

- Random Box 1, 2, 3, 4

- Delivery Vehicle 1, 2, 3

- We have provided a GUI in RVIZ that allows you to click and publish these given goal poses to the `/move_base_simple/goal` topic.
- We also provide four topics (visualized in RVIZ) that compute the real-time pose error between your robot and the selected goal pose:
  - `/me5413_world/absolute/heading_error` (in degrees, wrt `world` frame, `std_msgs::Float32`)
  - `/me5413_world/absolute/position_error` (in meters, wrt `world` frame, `std_msgs::Float32`)
  - `/me5413_world/relative/heading_error` (in degrees, wrt `map` frame, `std_msgs::Float32`)
  - `/me5413_world/relative/position_error` (in meters, wrt `map` frame, `std_msgs::Float32`)
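The error topics report a position error in meters and a heading error in degrees. The sketch below shows one common way to define such quantities for planar poses; it is an illustration of the concept, not necessarily how this repo computes the published values. The function name `pose_error` and the example poses are assumptions.

```python
import math


def pose_error(robot, goal):
    """Return (position_error_m, heading_error_deg) between two (x, y, yaw_rad) poses."""
    dx = goal[0] - robot[0]
    dy = goal[1] - robot[1]
    position_error = math.hypot(dx, dy)
    # Wrap the yaw difference into [-pi, pi) before converting to degrees,
    # so that headings on either side of +/-180 degrees compare correctly.
    dyaw = (goal[2] - robot[2] + math.pi) % (2.0 * math.pi) - math.pi
    return position_error, math.degrees(dyaw)


# Assumed example: robot at the origin heading 170 deg, goal at (3, 4) heading -170 deg.
pos_err, head_err = pose_error(
    (0.0, 0.0, math.radians(170.0)),
    (3.0, 4.0, math.radians(-170.0)),
)
print(pos_err, head_err)
```

The wrap step matters near the ±180 degree boundary: in the example the two headings are only 20 degrees apart, even though their raw difference is -340 degrees.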

You are welcome to contribute to this repo by opening a pull request.

We are following:

The ME5413_Final_Project is released under the MIT License