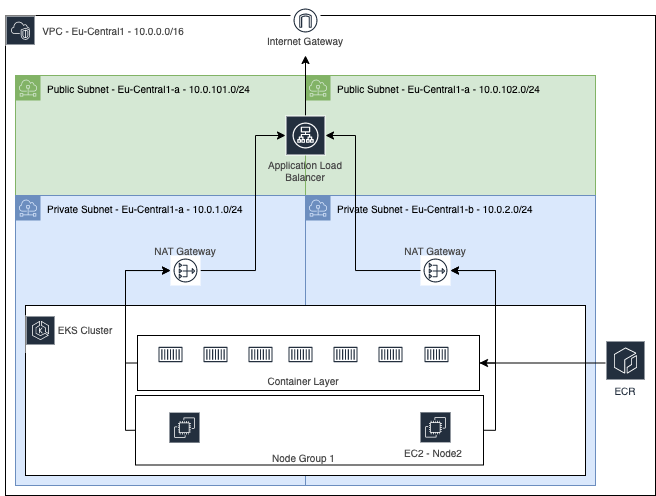

MatchUp is currently moving off Vercel to its own AWS EKS cluster.

This repo contains the following infrastructure:

- AWS VPC

- Two subnets spread across two availability zones in one region

- AWS EKS Cluster

- Single Node group

- Required IAM Roles and Policies

- Kubernetes deployment

- Prometheus Helm Chart

- Grafana Helm Chart

- Karpenter node autoscaling provisioner

- AWS Load balancer controller

- Custom personal application deployment

- Ingress rules for Application Load Balancer (ALB) setup

- Route53

- Hosted zone

- A records

- SSL certificate in AWS Certificate Manager (ACM)

The following variables will need to be configured in your CI/CD pipeline or local environment variables. This repo comes with a simple example pipeline for CircleCI.

AWS_ACCESS_KEY_ID = "sample_ID"

AWS_SECRET_ACCESS_KEY = "sample_key"

The following will need to be configured in your local "terraform.tfvars" file. If using a CI/CD pipeline, add them as environment variables with the TF_VAR_ prefix instead.

GRAFANA_ADMIN = "sample_user"

GRAFANA_PASSWORD = "sample_password"

TF_VAR_GRAFANA_ADMIN = "sample_user"

TF_VAR_GRAFANA_PASSWORD = "sample_password"
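As a rough preflight check, a pipeline step could confirm that all of the variables above are present before Terraform runs. This is only a sketch: `require_env` is a hypothetical helper that is not part of this repo, and the exported values are the sample placeholders from above.

```shell
# require_env is a hypothetical helper, not something this repo provides.
# It returns non-zero if any named variable is unset or empty.
require_env() {
  missing=0
  for name in "$@"; do
    # POSIX-compatible indirect lookup of the variable called $name
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing required variable: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Sample placeholder values; replace them with real credentials.
export AWS_ACCESS_KEY_ID="sample_ID"
export AWS_SECRET_ACCESS_KEY="sample_key"
export TF_VAR_GRAFANA_ADMIN="sample_user"
export TF_VAR_GRAFANA_PASSWORD="sample_password"

require_env AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY \
  TF_VAR_GRAFANA_ADMIN TF_VAR_GRAFANA_PASSWORD
```

Terraform maps `TF_VAR_GRAFANA_ADMIN` to the input variable named `GRAFANA_ADMIN`, so the name after the prefix must match the variable declaration exactly, including case.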

The Terraform backend for this project is configured for S3. The configuration lives in main.tf and must match the settings of your own S3 bucket. More information can be found here. If you would rather use a simple local state file, remove the backend configuration from main.tf entirely.
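If you would rather not edit main.tf for each environment, Terraform also accepts backend settings at init time through `-backend-config`. The sketch below assumes that approach; `init_with_s3_backend` is a hypothetical wrapper, and the bucket, key, and region in the example comment are placeholders rather than values from this repo.

```shell
# Hypothetical wrapper: pass S3 backend settings on the command line.
# Values given here are merged with the backend block in main.tf.
init_with_s3_backend() {
  terraform init \
    -backend-config="bucket=$1" \
    -backend-config="key=$2" \
    -backend-config="region=$3"
}

# Example call (placeholder values):
# init_with_s3_backend my-state-bucket matchup/terraform.tfstate us-east-1
```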

You will need to add the required Helm repos to your local system, or have these commands run before terraform apply in your pipeline setup. Run the following commands:

helm repo add grafana https://grafana.github.io/helm-charts

helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

helm repo add eks https://aws.github.io/eks-charts

helm repo update

For further Helm customization, see the available parameters for Grafana here and Prometheus here. These parameters can be added via set within the Terraform helm_release resource, with examples here and within the kubernetes.tf file.

This deployment uses Karpenter-based horizontal node scaling. If you have never run Spot Instances on your AWS account before, the following command will need to be run via the AWS CLI:

aws iam create-service-linked-role --aws-service-name spot.amazonaws.com
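Creating the role a second time returns an error, so in a pipeline it may help to check for it first. The sketch below assumes the role carries the standard name `AWSServiceRoleForEC2Spot`; `ensure_spot_service_linked_role` is a hypothetical helper, not part of this repo.

```shell
# Hypothetical helper: create the Spot service-linked role only if the
# account does not already have it.
ensure_spot_service_linked_role() {
  if aws iam get-role --role-name AWSServiceRoleForEC2Spot >/dev/null 2>&1; then
    echo "Spot service-linked role already exists"
  else
    aws iam create-service-linked-role --aws-service-name spot.amazonaws.com
  fi
}
```

Note that a failed `get-role` call is treated as "role missing" here, so a permissions or network error would also trigger the create attempt.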

To view the planned deployment run the following command:

terraform plan -out=plan.file

To deploy the infrastructure into your AWS environment, run the following command:

terraform apply "plan.file"

To destroy this infrastructure and start from a clean slate run the following command:

terraform destroy

The Terraform output should include a value called load_balancer_hostname. This is the hostname to use for Grafana access.
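To read that value back after an apply without scanning the full output, something like the following may work. It assumes a Terraform version that supports `terraform output -raw`; `grafana_hostname` is a hypothetical helper name.

```shell
# Hypothetical helper: print only the load balancer hostname from state.
grafana_hostname() {
  terraform output -raw load_balancer_hostname
}

# Example use:
# echo "Grafana is served from: $(grafana_hostname)"
```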

Note that the helm resource in kubernetes.tf requires a minimum AWS CLI version to use the exec plugin to retrieve the cluster access token.

- See the related GitHub issue link

If you get the error "terraform helm cannot reuse a name that is still in use" on a terraform destroy, you will need to run the following commands through kubectl:

- kubectl -n (namespace) get secrets

- For any secret of type helm.sh/release.v1, run the following command:

- kubectl delete secret (SecretName) -n (namespace)

- Rerun terraform destroy
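The manual cleanup above could be scripted roughly as follows. This is a sketch, not a tested part of this repo: `delete_helm_release_secrets` is a hypothetical helper, and it removes every Helm release secret in the given namespace, so only use it when all releases there are meant to be destroyed.

```shell
# Hypothetical helper: delete all secrets of type helm.sh/release.v1
# in one namespace. Usage: delete_helm_release_secrets <namespace>
delete_helm_release_secrets() {
  namespace="$1"
  # -o name prints entries as "secret/<name>", which kubectl delete accepts.
  kubectl -n "$namespace" get secrets \
    --field-selector type=helm.sh/release.v1 -o name |
  while read -r secret; do
    kubectl -n "$namespace" delete "$secret"
  done
}
```

After it finishes, rerun terraform destroy.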