Video captioning is a sequence-to-sequence learning task, and this project approaches it with an encoder-decoder model: it takes a video as input and produces a caption that summarizes the video's content.

Captioning matters because it makes videos more accessible in several ways. An automated video caption generator makes videos easier to find through website search, and it simplifies grouping videos by their content.

While exploring new projects, I came across video captioning and noticed how few reliable resources exist for it. With this project, I aim to make video captioning simpler to implement and more accessible to anyone interested in the field.

This project uses the MSVD dataset, with 1450 videos for training and 100 videos for testing.

To set up the project:

- Clone the repository: `git clone https://github.com/MathurUtkarsh/Video-Captioning-Using-LSTM-and-Keras.git`

- Move into the project directory: `cd Video-Captioning`

- Create the environment: `conda create -n video_caption python=3.7`

- Activate the environment: `conda activate video_caption`

- Install the requirements: `pip install -r requirements.txt`

To use the pre-trained models:

- Add a video to the `data/testing_data/video` folder.

- Run `python predict_realtime.py`.

For quicker results, extract the video's features ahead of time and save them in the `feat` folder within the `testing_data` directory.

To convert a video into features, run `python extract_features.py`.

For local training, run `train.py`. Alternatively, you can use the `Video_Captioning.ipynb` notebook.
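Training minimizes categorical cross-entropy and tracks accuracy, the two quantities plotted in the graphs below. As a rough illustration of what those numbers measure, the sketch below computes both in NumPy for one-hot next-word targets and softmax outputs. It is a simplified stand-in, not Keras's own implementation (which also handles masking and sample weights), and the function names are hypothetical.

```python
import numpy as np


def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean categorical cross-entropy over all (caption, timestep) positions.

    y_true: one-hot target words, shape (..., vocab_size)
    y_pred: softmax probabilities, same shape
    """
    # Clip so log() never sees an exact 0.
    y_pred = np.clip(y_pred, eps, 1.0 - eps)
    # Per position: -log(probability assigned to the correct word).
    per_position = -np.sum(y_true * np.log(y_pred), axis=-1)
    return float(np.mean(per_position))


def categorical_accuracy(y_true, y_pred):
    """Fraction of positions where the most probable word is the target word."""
    hits = np.argmax(y_true, axis=-1) == np.argmax(y_pred, axis=-1)
    return float(np.mean(hits))
```

For targets `[[1, 0, 0], [0, 1, 0]]` and predictions `[[0.7, 0.2, 0.1], [0.5, 0.4, 0.1]]`, the loss is (-ln 0.7 - ln 0.4) / 2 ≈ 0.636 and the accuracy is 0.5, because the second prediction ranks the wrong word first.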

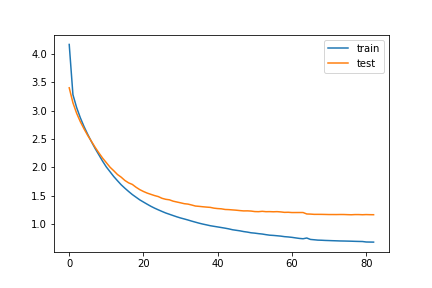

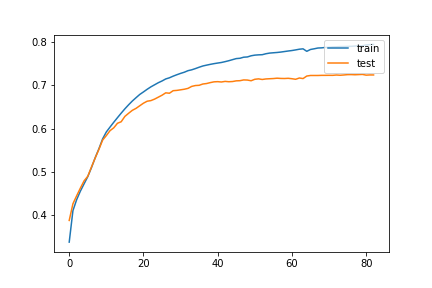

The graphs above plot loss and accuracy against epochs. The loss is categorical cross-entropy, and the metric is accuracy.

The project provides:

- Real-time implementation

- Two search algorithms to choose from, depending on your requirements: beam search and greedy search

Greedy search picks the single most probable word at each step of generating the caption. Beam search instead keeps several candidate partial captions at each timestep (the beam width), ranked by their conditional probabilities, and extends each of them, so it can find a caption that scores higher overall than the one greedy search commits to.

For a more detailed understanding of these search algorithms, you can refer to this informative blog post.
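A minimal, framework-free sketch of both strategies follows. It assumes a hypothetical `step` function that maps a partial caption to next-word probabilities, a role the trained decoder plays in this project; the toy probability table exists only to show a case where the two strategies disagree.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical interface: partial caption -> {next word: probability}.
StepFn = Callable[[Sequence[str]], Dict[str, float]]


def greedy_search(step: StepFn, start: str, end: str, max_len: int) -> List[str]:
    seq = [start]
    for _ in range(max_len):
        probs = step(seq)
        word = max(probs, key=probs.get)  # commit to the single most likely word
        seq.append(word)
        if word == end:
            break
    return seq


def beam_search(step: StepFn, start: str, end: str, max_len: int,
                beam_width: int = 3) -> List[str]:
    # Each beam is (sum of log-probabilities, token sequence).
    beams: List[Tuple[float, List[str]]] = [(0.0, [start])]
    for _ in range(max_len):
        candidates = []
        for score, seq in beams:
            if seq[-1] == end:  # finished captions are carried over unchanged
                candidates.append((score, seq))
                continue
            for word, p in step(seq).items():
                if p > 0:
                    candidates.append((score + math.log(p), seq + [word]))
        # Keep only the beam_width highest-scoring partial captions.
        beams = sorted(candidates, key=lambda c: c[0], reverse=True)[:beam_width]
        if all(seq[-1] == end for _, seq in beams):
            break
    return max(beams, key=lambda c: c[0])[1]


# Toy next-word table (illustrative only); unknown prefixes end the caption.
TOY_TABLE = {
    ("<bos>",): {"a": 0.5, "the": 0.4, "<eos>": 0.1},
    ("<bos>", "a"): {"man": 0.4, "dog": 0.3, "<eos>": 0.3},
    ("<bos>", "the"): {"man": 0.9, "dog": 0.05, "<eos>": 0.05},
}


def toy_step(seq: Sequence[str]) -> Dict[str, float]:
    return TOY_TABLE.get(tuple(seq), {"<eos>": 1.0})
```

Here greedy search commits to "a" and ends with "a man" (probability 0.5 × 0.4 = 0.2), while beam search with a width of 2 keeps "the" alive and finds "the man" (probability 0.4 × 0.9 = 0.36). Because beam scores are sums of log-probabilities, longer captions are penalized; practical implementations often add length normalization.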

- `train.py` contains the model architecture.

- `predict_test.py` checks the predicted results and stores them in a .txt file, along with the time taken for each prediction.

- `predict_realtime.py` generates captions in real time.

- The `model_final` folder contains the trained encoder model, the tokenizer, and the decoder model weights.
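The sketch below shows one common way to build an encoder-decoder like this in Keras, in the style of the classic LSTM seq2seq setup: an encoder LSTM summarizes the (80, 4096) feature sequence into its final states, and a decoder LSTM, initialized with those states, predicts the caption one word at a time. It also splits off a separate encoder model and a single-step decoder model for inference, similar in spirit to the encoder model and decoder weights kept in `model_final`. The hidden size (512) and vocabulary size (1500) are assumed values, and this is not necessarily the exact architecture in `train.py`.

```python
import numpy as np
from tensorflow.keras.layers import Dense, Input, LSTM
from tensorflow.keras.models import Model

NUM_FRAMES = 80      # frames sampled per video (from this README)
FEATURE_DIM = 4096   # VGG16 feature size per frame (from this README)
LATENT_DIM = 512     # assumed LSTM hidden size
VOCAB_SIZE = 1500    # assumed vocabulary size (one-hot word vectors)


def build_models():
    """Return (training_model, encoder_model, decoder_model) for a basic LSTM seq2seq."""
    # Encoder: read the (80, 4096) feature sequence and keep only its final states.
    encoder_inputs = Input(shape=(NUM_FRAMES, FEATURE_DIM))
    encoder_lstm = LSTM(LATENT_DIM, return_state=True)
    _, state_h, state_c = encoder_lstm(encoder_inputs)
    encoder_states = [state_h, state_c]

    # Decoder: consumes the previous words (teacher forcing during training),
    # starting from the encoder's final states.
    decoder_inputs = Input(shape=(None, VOCAB_SIZE))
    decoder_lstm = LSTM(LATENT_DIM, return_sequences=True, return_state=True)
    decoder_dense = Dense(VOCAB_SIZE, activation="softmax")
    decoder_seq, _, _ = decoder_lstm(decoder_inputs, initial_state=encoder_states)
    decoder_outputs = decoder_dense(decoder_seq)

    training_model = Model([encoder_inputs, decoder_inputs], decoder_outputs)
    training_model.compile(optimizer="adam", loss="categorical_crossentropy",
                           metrics=["accuracy"])

    # Inference encoder: video features -> initial decoder states.
    encoder_model = Model(encoder_inputs, encoder_states)

    # Inference decoder: one step at a time, reusing the trained layers.
    step_inputs = Input(shape=(None, VOCAB_SIZE))
    state_h_in = Input(shape=(LATENT_DIM,))
    state_c_in = Input(shape=(LATENT_DIM,))
    step_seq, h_out, c_out = decoder_lstm(step_inputs,
                                          initial_state=[state_h_in, state_c_in])
    step_probs = decoder_dense(step_seq)
    decoder_model = Model([step_inputs, state_h_in, state_c_in],
                          [step_probs, h_out, c_out])
    return training_model, encoder_model, decoder_model
```

At inference time, the encoder model runs once per video, and the decoder model is called repeatedly, feeding back each chosen word and the updated states; that loop is where greedy or beam search plugs in.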

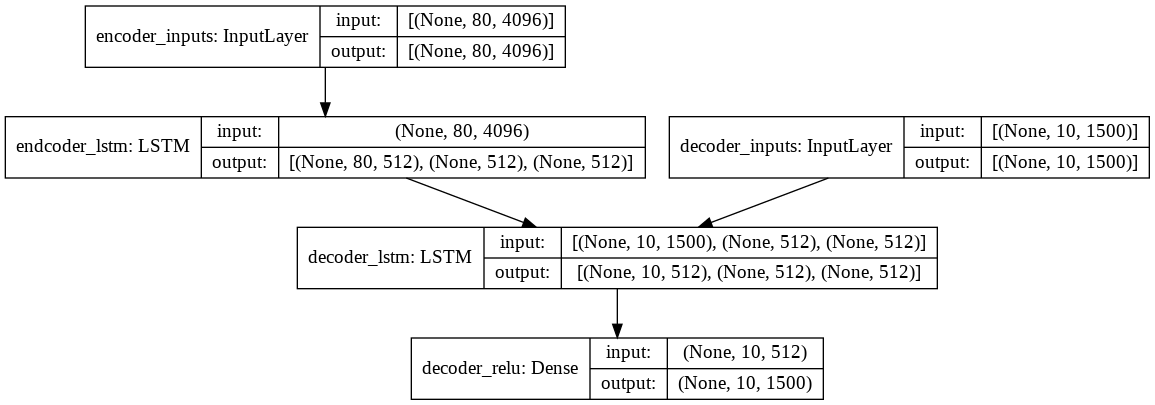

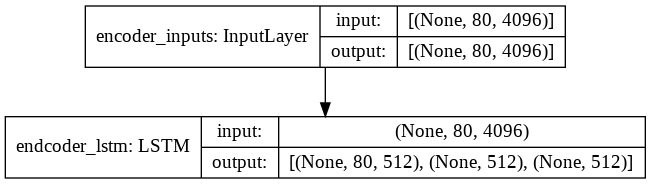

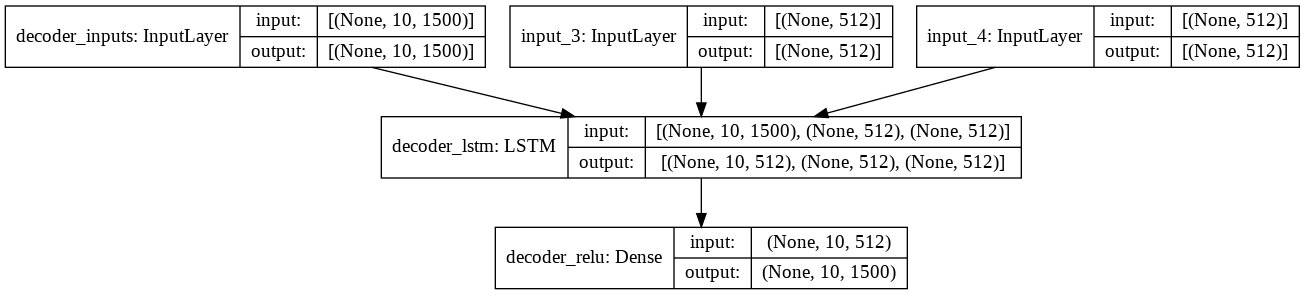

- `features.py` extracts 80 evenly spaced frames from a video and passes each frame through a pre-trained VGG16, giving a 4096-dimensional feature vector per frame. Each video is therefore represented as a NumPy array of shape (80, 4096).

- `config.py` contains all the configuration settings I use.

- `Video_Captioning.ipynb` is the notebook I used to train and build this project.
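The frame-sampling step described above can be sketched as follows. `sample_frame_indices` picks 80 evenly spaced frame indices with `np.linspace`; `extract_fn` is a hypothetical placeholder for the VGG16 feature extractor (in Keras, a 4096-dimensional vector is typically taken from the `fc2` layer of `tensorflow.keras.applications.VGG16` built with `include_top=True`), so the sketch runs without downloading weights. Decoding frames from the video file is left out, and the function names are illustrative rather than taken from `features.py`.

```python
import numpy as np

NUM_FRAMES = 80  # frames per video, as stated above


def sample_frame_indices(total_frames: int, num_samples: int = NUM_FRAMES) -> np.ndarray:
    """Return num_samples frame indices spread evenly over [0, total_frames - 1]."""
    if total_frames <= 0:
        raise ValueError("video has no frames")
    # Evenly spaced positions, rounded to the nearest integer frame index.
    return np.linspace(0, total_frames - 1, num_samples).round().astype(int)


def build_video_features(frames, extract_fn, num_samples: int = NUM_FRAMES) -> np.ndarray:
    """Stack one feature vector per sampled frame into a (num_samples, dim) array.

    frames:     sequence of decoded frames (e.g. H x W x 3 arrays)
    extract_fn: hypothetical stand-in for the CNN; maps one frame to a 1-D vector
    """
    idx = sample_frame_indices(len(frames), num_samples)
    return np.stack([extract_fn(frames[i]) for i in idx])
```

Videos with fewer than 80 frames get repeated indices, so the output shape stays (80, 4096) with a 4096-dimensional extractor.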

- Integrating attention blocks and pretrained embeddings (e.g., GloVe) to enhance the model's comprehension of sentences.

- Exploring other pretrained models designed specifically for video understanding, such as I3D, to improve feature extraction.

- Expanding the model's capability to handle longer videos, as it currently supports only 80 frames.

- Incorporating a user interface (UI) into the project for a more user-friendly experience.

- Using the ChatGPT API from OpenAI to generate more creative and compelling captions.
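For the attention idea in the list above, a minimal NumPy sketch of Luong-style dot-product attention shows the core computation: score each frame's encoder output against the current decoder state, turn the scores into weights with a softmax, and take the weighted sum as a context vector. It assumes the encoder returns one output per frame (in Keras, `LSTM(..., return_sequences=True)`) with the same size as the decoder state; the function names are hypothetical, and wiring the context vector into the decoder is not shown.

```python
import numpy as np


def softmax(x: np.ndarray) -> np.ndarray:
    # Subtract the max for numerical stability before exponentiating.
    e = np.exp(x - np.max(x))
    return e / e.sum()


def dot_product_attention(decoder_state: np.ndarray, encoder_outputs: np.ndarray):
    """Luong-style dot-product attention.

    decoder_state:   (latent_dim,)             current decoder hidden state
    encoder_outputs: (num_frames, latent_dim)  one encoder output per frame
    Returns (context, weights) with shapes (latent_dim,) and (num_frames,).
    """
    scores = encoder_outputs @ decoder_state  # similarity of each frame to the state
    weights = softmax(scores)                 # attention distribution over frames
    context = weights @ encoder_outputs       # weighted sum of frame encodings
    return context, weights
```

Unlike the current fixed summary of all 80 frames, the weights are recomputed at every decoding step, so each generated word can focus on different frames.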