Author: Matthieu OLEKHNOVITCH

This is an unofficial adaptation of Fast AutoAugment to time series data. The original paper targets image data. The code is inspired by the official Fast AutoAugment implementation.

The dataset used is the UCR Time Series Classification Archive. The model used is a ResNet model.
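For readers unfamiliar with the archive, here is a minimal, dependency-free sketch of reading one split. It assumes the tab-separated layout of the 2018 release (one series per row, class label in the first column, files named like `ECG5000_TRAIN.tsv`). The helper name `load_ucr_split` is hypothetical, and this repository may load the data differently.

```python
import csv
import io

def load_ucr_split(lines):
    """Parse one UCR split: column 0 is the class label, the rest is the series."""
    series, labels = [], []
    for row in csv.reader(lines, delimiter="\t"):
        if not row:  # skip blank lines
            continue
        labels.append(int(float(row[0])))
        series.append([float(v) for v in row[1:]])
    return series, labels

# Tiny in-memory stand-in for a real split file (values are made up).
sample = io.StringIO("1\t0.1\t0.2\t0.3\n2\t0.4\t0.5\t0.6\n")
X, y = load_ucr_split(sample)
print(len(X), len(X[0]), y)  # 2 3 [1, 2]
```

With a real file, pass an open file handle instead of the `io.StringIO` object, for example `open("ECG5000_TRAIN.tsv", newline="")` (the path is an assumption).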

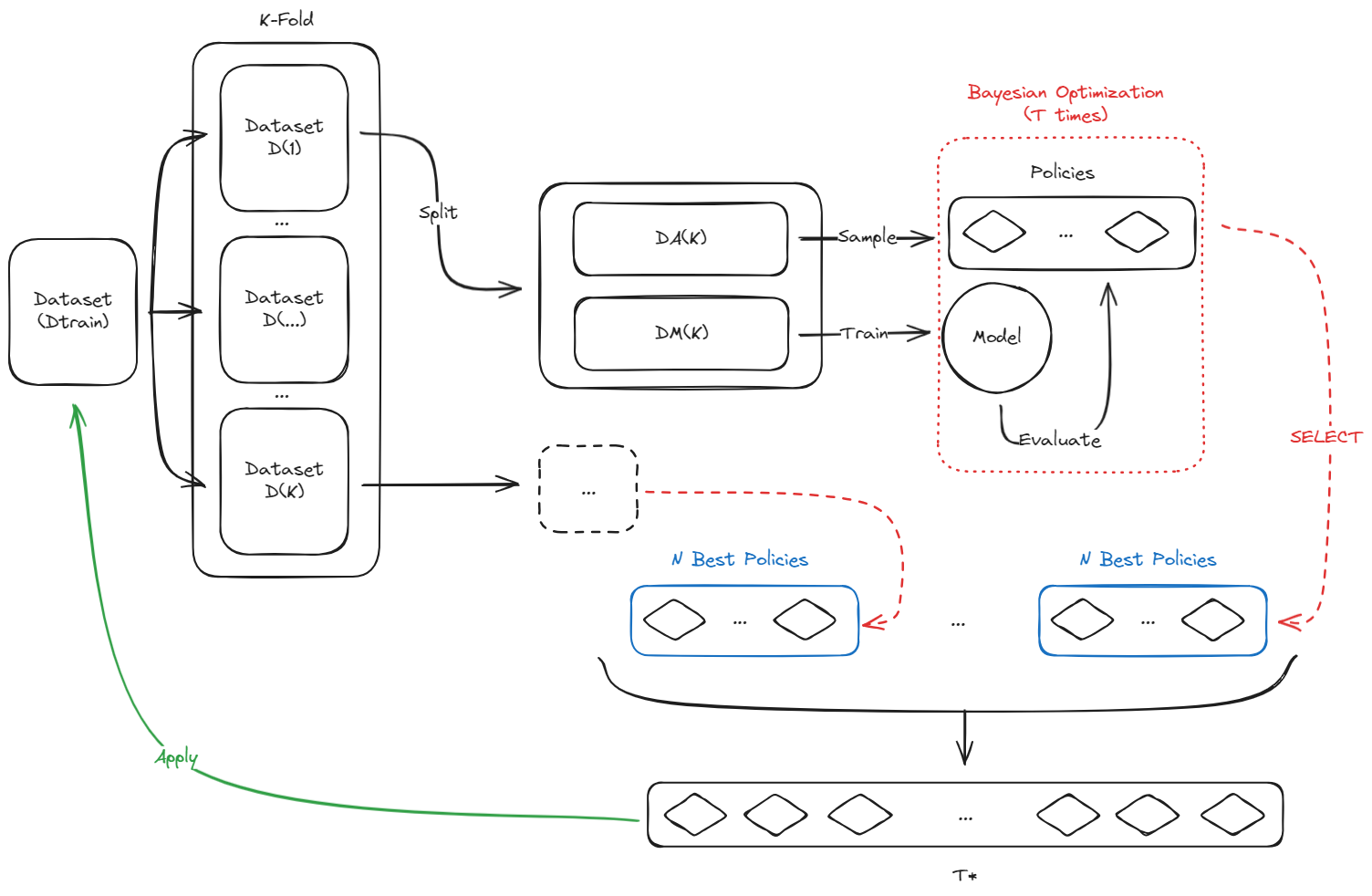

The architecture of the augmented model is as follows:

The transformations used are as follows:

- Identity

- Additive Noise

- Random Crop

- Drift

- Reverse
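As an illustration, the five transformations can be sketched on plain Python lists as below. This is not the repository's code: the function names, the meaning of `magnitude` for each operation, and the choice to model drift as a linear trend are all assumptions. The only part taken from Fast AutoAugment is the sub-policy idea, where each operation is applied with its own probability and magnitude.

```python
import random

def identity(x, magnitude, rng):
    return list(x)

def additive_noise(x, magnitude, rng):
    # Assumption: Gaussian noise whose standard deviation is `magnitude`.
    return [v + rng.gauss(0.0, magnitude) for v in x]

def random_crop(x, magnitude, rng):
    # Assumption: keep a random contiguous window covering (1 - magnitude)
    # of the series, with magnitude in [0, 1]. The output is shorter than the input.
    keep = max(1, round(len(x) * (1.0 - magnitude)))
    start = rng.randint(0, len(x) - keep)
    return list(x[start:start + keep])

def drift(x, magnitude, rng):
    # Assumption: a linear trend from 0 to +/- magnitude over the series.
    slope = magnitude if rng.random() < 0.5 else -magnitude
    last = max(1, len(x) - 1)
    return [v + slope * i / last for i, v in enumerate(x)]

def reverse(x, magnitude, rng):
    return list(reversed(x))

OPS = {
    "identity": identity,
    "additive_noise": additive_noise,
    "random_crop": random_crop,
    "drift": drift,
    "reverse": reverse,
}

def apply_sub_policy(x, sub_policy, rng):
    """Apply each (name, probability, magnitude) operation in order."""
    for name, prob, magnitude in sub_policy:
        if rng.random() < prob:
            x = OPS[name](x, magnitude, rng)
    return list(x)

rng = random.Random(0)
series = [0.0, 1.0, 2.0, 3.0]
print(apply_sub_policy(series, [("reverse", 1.0, 0.0)], rng))  # [3.0, 2.0, 1.0, 0.0]
```

Note that this crop changes the series length, so a model with a fixed input size would need the result resampled or padded back.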

Requirements: Python 3.12

I recommend using uv as the package manager. You can install it with:

    pip install uv

You can then create the environment and install the dependencies with:

    uv venv
    . .venv/bin/activate
    uv pip install -r requirements.txt

Set the device to 'cuda' or 'cpu' if you want to override automatic device detection. Set `is_wandb` to True if you want to log the results to Weights & Biases (W&B). You may have to log in to W&B first:

    wandb login

You can run the FastAA augmentation comparison with:

    python FastAA/main.py --dataset=ECG5000 --compare --runs=5

Other parameters are detailed in the help:

    python FastAA/main.py --help

You can run FastAA augmentation on all datasets with:

    python FastAA/run_full_datasets_exploration.py

Note: this will take a long time to run. You can run it in the background with:

    nohup python FastAA/run_full_datasets_exploration.py &

The results are saved in the data/logs folder as soon as they are computed.

Plot the metrics comparison with the command:

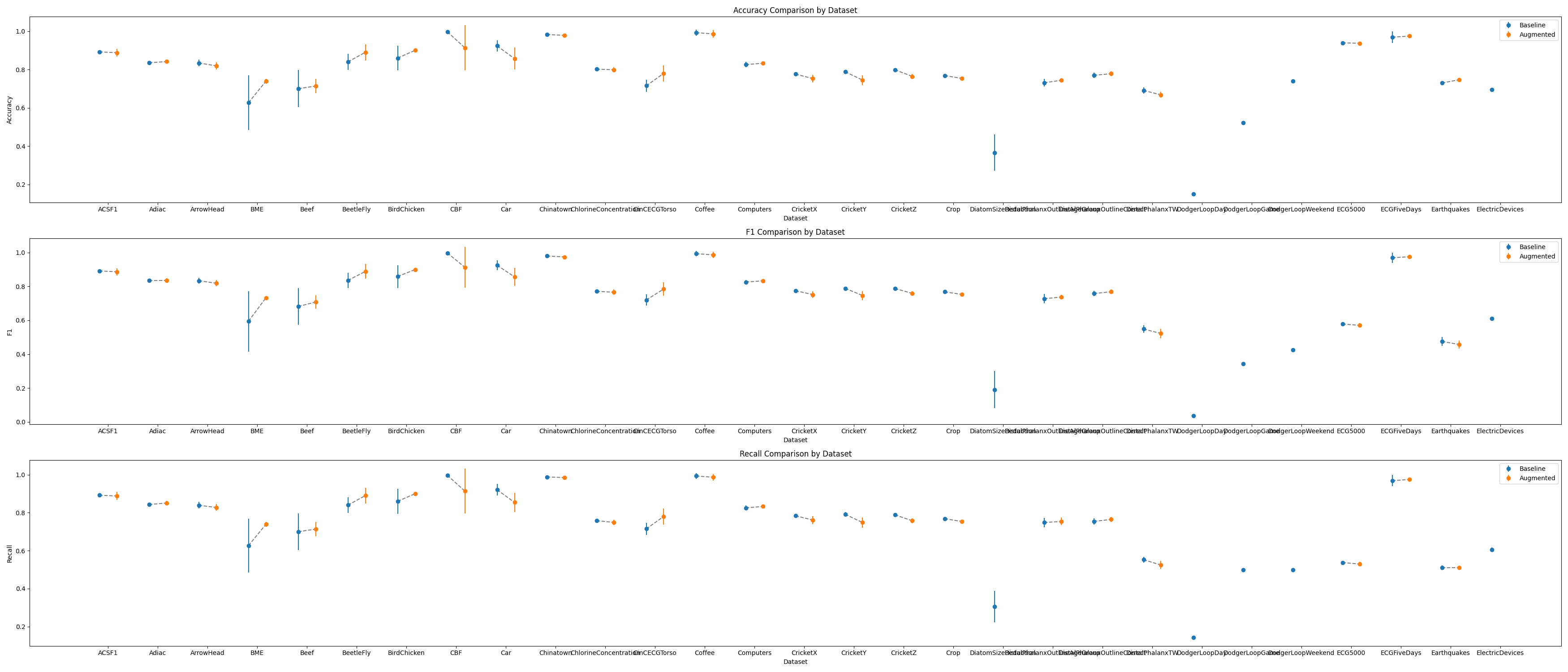

python results/plot_result_table.py And access the last computed results in results/metrics_comparison.png :

FastAA brings significant improvements on some datasets, while others show no improvement or even get worse. We therefore propose to study the impact of each transformation on each dataset, to understand why some datasets benefit and others do not.
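A first step in such a study could be to sort datasets by outcome. The sketch below labels each dataset from the change in mean accuracy across runs. The function name `classify_datasets`, the 0.01 tolerance, and the input format (a dict of per-run accuracies) are assumptions, and the numbers are placeholders rather than measured results.

```python
from statistics import mean

def classify_datasets(baseline, augmented, tolerance=0.01):
    """Label each dataset by the change in mean accuracy across runs."""
    verdicts = {}
    for name in baseline:
        delta = mean(augmented[name]) - mean(baseline[name])
        if delta > tolerance:
            verdicts[name] = "improved"
        elif delta < -tolerance:
            verdicts[name] = "worsened"
        else:
            verdicts[name] = "unchanged"
    return verdicts

# Placeholder accuracies for illustration only, not results from this project.
baseline = {"dataset_a": [0.80, 0.82], "dataset_b": [0.90, 0.90], "dataset_c": [0.70, 0.72]}
augmented = {"dataset_a": [0.86, 0.88], "dataset_b": [0.90, 0.90], "dataset_c": [0.64, 0.66]}
print(classify_datasets(baseline, augmented))
```

With only a few runs per dataset, a fixed tolerance is a crude criterion. Comparing the gap to the run-to-run standard deviation would be a natural refinement.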