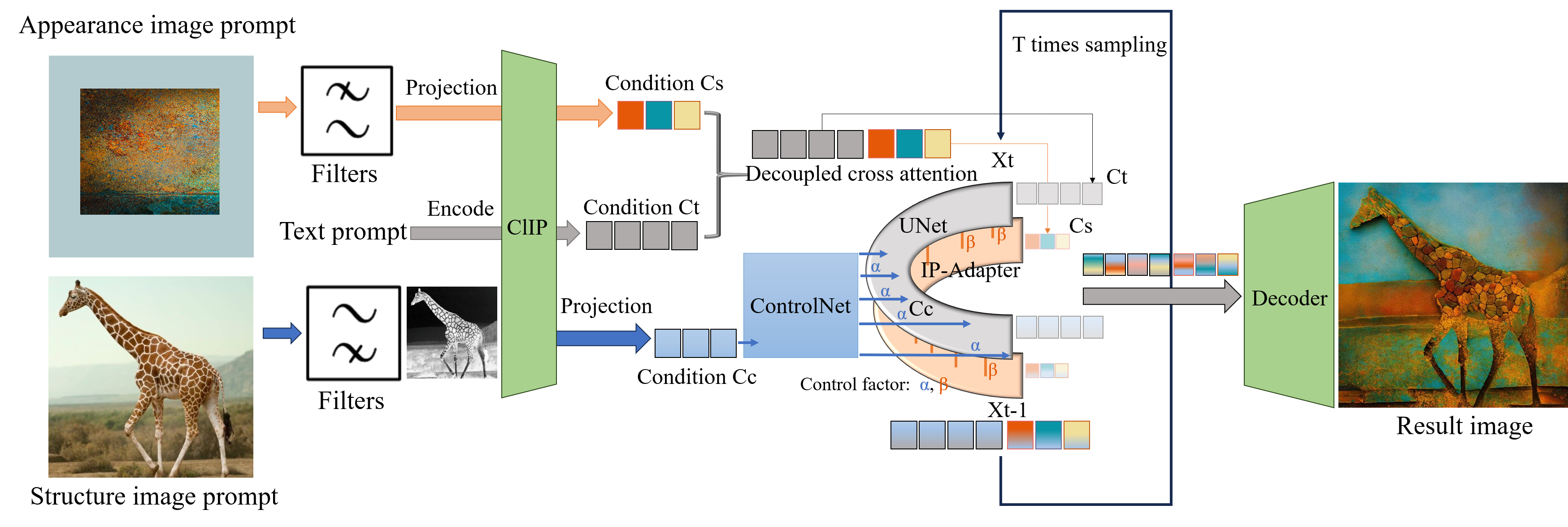

We propose FilterPrompt, an approach that enhances the controllability of diffusion models. It can be applied universally to any diffusion model and lets users adjust how specific image features are represented according to task requirements, enabling more precise and controllable generation. In particular, our experiments show that FilterPrompt optimizes feature correlation, mitigates content conflicts during generation, and strengthens the model's control capability.

- 2024.04.20: The arXiv paper of FilterPrompt is online.

- 2024.05.01: The project page of FilterPrompt is online.

- Release the code.

- Public demo for users to try FilterPrompt online.

- We recommend running this repository using Anaconda.

- NVIDIA GPU with more than 20 GB of available memory

- CUDA and cuDNN (CUDA version ≥ 11.1; we use 11.7)

- Python 3.11.3 (Gradio requires Python 3.8 or higher)

- PyTorch: install the build that matches your CUDA version

- Example (CUDA 11.7):

```bash
pip install torch==2.0.0+cu117 torchvision==0.15.1+cu117 torchaudio==2.0.1+cu117 --extra-index-url https://download.pytorch.org/whl/cu117
```
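The requirements above can be checked with a short script once PyTorch is installed in your environment. This is a minimal sketch, not part of the FilterPrompt repository: the helper names `find_problems` and `probe_environment` are hypothetical, the thresholds simply mirror the list above, and the CUDA/GPU probes assume PyTorch is importable (they are reported as missing otherwise). GPU memory is measured as the free memory on device 0 via `torch.cuda.mem_get_info`, so other processes on that GPU lower the reported value.

```python
import sys

# Thresholds taken from the requirements list (assumed to be hard minimums).
MIN_PYTHON = (3, 8)       # Gradio requires Python >= 3.8
MIN_CUDA = (11, 1)        # CUDA >= 11.1
MIN_GPU_MEMORY_GB = 20    # more than 20 GB of available GPU memory


def find_problems(python_version, cuda_version, gpu_memory_gb):
    """Return human-readable problems; an empty list means all checks passed.

    cuda_version is a string such as "11.7", or None for a CPU-only PyTorch build.
    gpu_memory_gb is the free memory in GB, or None when no CUDA GPU is visible.
    """
    problems = []
    if tuple(python_version[:2]) < MIN_PYTHON:
        problems.append(f"Python {python_version[0]}.{python_version[1]} is older than 3.8")
    if cuda_version is None:
        problems.append("PyTorch was not built with CUDA")
    elif tuple(int(p) for p in cuda_version.split(".")[:2]) < MIN_CUDA:
        problems.append(f"CUDA {cuda_version} is older than 11.1")
    if gpu_memory_gb is None:
        problems.append("no CUDA GPU is visible")
    elif gpu_memory_gb <= MIN_GPU_MEMORY_GB:
        problems.append(
            f"GPU has {gpu_memory_gb:.1f} GB free, need more than {MIN_GPU_MEMORY_GB} GB"
        )
    return problems


def probe_environment():
    """Collect (python_version, cuda_version, free_gpu_memory_gb) for this machine."""
    cuda_version, gpu_memory_gb = None, None
    try:
        import torch

        cuda_version = torch.version.cuda  # None on CPU-only builds
        if torch.cuda.is_available():
            free_bytes, _total_bytes = torch.cuda.mem_get_info(0)
            gpu_memory_gb = free_bytes / 1024**3
    except ImportError:
        pass  # PyTorch not installed: CUDA and GPU are reported as missing
    return sys.version_info, cuda_version, gpu_memory_gb


if __name__ == "__main__":
    issues = find_problems(*probe_environment())
    print("Environment looks OK" if not issues else "\n".join(issues))
```

Splitting the pure check (`find_problems`) from the hardware probe keeps the thresholds easy to adjust and to test without a GPU.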

Inspired by the decoupled cross-attention in IP-Adapter, we apply a similar methodology.

Follow the instructions below to set up the environment required by the code:

- Clone this repo

```bash
git clone --single-branch --branch main https://github.com/Meaoxixi/FilterPrompt.git
```

- Dependencies

All dependencies for defining the environment are provided in `requirements.txt`.

```bash
cd FilterPrompt
conda create --name fp_env python=3.11.3
conda activate fp_env
pip install torch==2.0.0+cu117 torchvision==0.15.1+cu117 torchaudio==2.0.1+cu117 --extra-index-url https://download.pytorch.org/whl/cu117
pip install -r requirements.txt
```

- Download the necessary models from the following links and place them in the relative path `models/`:

| Path | Description |
|---|---|
| `models/` | root path |
| `├── ControlNet/` | Place the pre-trained model of ControlNet |
| `│   ├── control_v11f1p_sd15_depth` | ControlNet_depth |
| `│   └── control_v11p_sd15_softedge` | ControlNet_softEdge |
| `├── IP-Adapter/` | IP-Adapter |
| `│   ├── image_encoder` | image_encoder of IP-Adapter |
| `│   └── other needed configuration files` | |
| `├── sd-vae-ft-mse/` | Place the model of sd-vae-ft-mse |
| `├── stable-diffusion-v1-5/` | Place the model of stable-diffusion-v1-5 |
| `└── Realistic_Vision_V4.0_noVAE/` | Place the model of Realistic_Vision_V4.0_noVAE |
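Before launching the demo, a quick standard-library check can confirm that the folders from the table are in place. This is a hypothetical helper (`missing_model_dirs` is not part of the repository); it assumes every listed entry is a directory and only checks that it exists, not that it holds the right weights or the IP-Adapter configuration files.

```python
from pathlib import Path

# Expected directories under models/, copied from the table (assumed to be folders).
EXPECTED_PATHS = [
    "ControlNet/control_v11f1p_sd15_depth",
    "ControlNet/control_v11p_sd15_softedge",
    "IP-Adapter/image_encoder",
    "sd-vae-ft-mse",
    "stable-diffusion-v1-5",
    "Realistic_Vision_V4.0_noVAE",
]


def missing_model_dirs(root="models"):
    """Return the expected entries that are not existing directories under root."""
    root = Path(root)
    return [rel for rel in EXPECTED_PATHS if not (root / rel).is_dir()]


if __name__ == "__main__":
    missing = missing_model_dirs()
    if missing:
        print("Missing under models/:")
        for rel in missing:
            print("  -", rel)
    else:
        print("All expected model directories are present.")
```

Run it from the repository root (next to `app.py`) so that the relative `models/` path resolves.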

After installing the dependencies and downloading the models, run `python app.py` to launch the Gradio demo. We have designed four task types so that you can explore the application scenarios of FilterPrompt.

If you find FilterPrompt helpful in your research/applications, please cite using this BibTeX:

```bibtex
@misc{wang2024filterprompt,
  title={FilterPrompt: Guiding Image Transfer in Diffusion Models},
  author={Xi Wang and Yichen Peng and Heng Fang and Haoran Xie and Xi Yang and Chuntao Li},
  year={2024},
  eprint={2404.13263},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}
```