Note: I submitted this project too to see how many points it'd get

Staying consistent with fitness routines is challenging, especially for beginners. Gyms can be intimidating, and personal trainers aren't always available.

The Fitness Assistant provides a conversational AI that helps users choose exercises and find alternatives, making fitness more manageable.

This project was implemented for LLM Zoomcamp - a free course about LLMs and RAG.

To see a demo of the project and instructions on how to run it on GitHub Codespaces, check this video:

The Fitness Assistant is a RAG application designed to assist users with their fitness routines.

The main use cases include:

- Exercise Selection: Recommending exercises based on the type of activity, targeted muscle groups, or available equipment.

- Exercise Replacement: Replacing an exercise with suitable alternatives.

- Exercise Instructions: Providing guidance on how to perform a specific exercise.

- Conversational Interaction: Making it easy to get information without sifting through manuals or websites.

The dataset used in this project contains information about various exercises, including:

- Exercise Name: The name of the exercise (e.g., Push-Ups, Squats).

- Type of Activity: The general category of the exercise (e.g., Strength, Mobility, Cardio).

- Type of Equipment: The equipment needed for the exercise (e.g., Bodyweight, Dumbbells, Kettlebell).

- Body Part: The part of the body primarily targeted by the exercise (e.g., Upper Body, Core, Lower Body).

- Type: The movement type (e.g., Push, Pull, Hold, Stretch).

- Muscle Groups Activated: The specific muscles engaged during the exercise (e.g., Pectorals, Triceps, Quadriceps).

- Instructions: Step-by-step guidance on how to perform the exercise correctly.

The dataset was generated using ChatGPT and contains 207 records. It serves as the foundation for the Fitness Assistant's exercise recommendations and instructional support.

You can find the data in data/data.csv.
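To make the record layout concrete, here is a minimal sketch that parses one row with Python's csv module. The column names are an assumption (they mirror the keys of the boosting dictionary shown in the retrieval evaluation part of this README), and the sample row is made up for illustration rather than taken from data/data.csv.

```python
import csv
import io

# Assumed header: the same field names that appear as boosting keys.
SAMPLE_CSV = (
    "exercise_name,type_of_activity,type_of_equipment,body_part,type,"
    "muscle_groups_activated,instructions\n"
    'Push-Ups,Strength,Bodyweight,Upper Body,Push,"Pectorals, Triceps",'
    '"Illustrative placeholder text, not a real record."\n'
)


def read_exercises(handle):
    """Return every CSV row as a dict keyed by column name."""
    return list(csv.DictReader(handle))


records = read_exercises(io.StringIO(SAMPLE_CSV))
print(records[0]["exercise_name"], "->", records[0]["muscle_groups_activated"])
```

If the real file uses the same header, the same function should also work on `open("data/data.csv", newline="")`.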

- Python 3.12

- Docker and Docker Compose for containerization

- Minsearch for full-text search

- Flask as the API interface (see Background for more information on Flask)

- Grafana for monitoring and PostgreSQL as the backend for it

- OpenAI as an LLM

Since we use OpenAI, you need to provide the API key:

- Install `direnv`. If you use Ubuntu, run `sudo apt install direnv` and then `direnv hook bash >> ~/.bashrc`.

- Copy `.envrc_template` into `.envrc` and insert your key there.

- For OpenAI, it's recommended to create a new project and use a separate key.

- Run `direnv allow` to load the key into your environment.
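A quick way to confirm that the key actually reached your environment is a small check like the one below. It is only a sketch: `get_openai_key` is a hypothetical helper, not part of the project, and it assumes the variable is named `OPENAI_API_KEY`, as in the Docker command in this README.

```python
import os


def get_openai_key(env=os.environ):
    """Return the API key, or raise with a hint if it was not loaded."""
    key = env.get("OPENAI_API_KEY", "").strip()
    if not key:
        raise RuntimeError(
            "OPENAI_API_KEY is not set; check .envrc and run `direnv allow`."
        )
    return key
```

Calling `get_openai_key()` at startup fails early with a readable message instead of failing later inside the first LLM call.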

For dependency management, we use pipenv, so you need to install it:

    pip install pipenv

Once installed, you can install the app dependencies:

    pipenv install --dev

Before the application starts for the first time, the database needs to be initialized.

First, run postgres:

    docker-compose up postgres

Then run the db_prep.py script:

    pipenv shell
    cd fitness_assistant
    export POSTGRES_HOST=localhost
    python db_prep.py

To check the content of the database, use pgcli (already installed with pipenv):

    pipenv run pgcli -h localhost -U your_username -d course_assistant -W

You can view the schema using the \d command:

    \d conversations;

And select from this table:

    select * from conversations;

The easiest way to run the application is with docker-compose:

    docker-compose up

If you want to run the application locally, start only postgres and grafana:

    docker-compose up postgres grafana

If you previously started all applications with docker-compose up, you need to stop the app:

    docker-compose stop app

Now run the app on your host machine:

    pipenv shell
    cd fitness_assistant
    export POSTGRES_HOST=localhost
    python app.py

Sometimes you might want to run the application in Docker without Docker Compose, e.g., for debugging purposes.

First, prepare the environment by running Docker Compose as in the previous section.

Next, build the image:

    docker build -t fitness-assistant .

And run it:

    docker run -it --rm \
        --network="fitness-assistant_default" \
        --env-file=".env" \
        -e OPENAI_API_KEY=${OPENAI_API_KEY} \
        -e DATA_PATH="data/data.csv" \
        -p 5000:5000 \
        fitness-assistant

When inserting logs into the database, ensure the timestamps are correct. Otherwise, they won't be displayed accurately in Grafana.

When you start the application, you will see the following in your logs:

    Database timezone: Etc/UTC
    Database current time (UTC): 2024-08-24 06:43:12.169624+00:00
    Database current time (Europe/Berlin): 2024-08-24 08:43:12.169624+02:00
    Python current time: 2024-08-24 08:43:12.170246+02:00
    Inserted time (UTC): 2024-08-24 06:43:12.170246+00:00
    Inserted time (Europe/Berlin): 2024-08-24 08:43:12.170246+02:00
    Selected time (UTC): 2024-08-24 06:43:12.170246+00:00
    Selected time (Europe/Berlin): 2024-08-24 08:43:12.170246+02:00

Make sure the time is correct.

You can change the timezone by replacing TZ in .env.
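The UTC and Europe/Berlin lines in the log describe the same instant, shown in two zones. The sketch below reproduces that relationship with the standard library, using the inserted timestamp from the sample log. It illustrates the check only and is not the project's logging code; `zoneinfo` needs a time zone database on the system (on Windows, usually the `tzdata` package).

```python
from datetime import datetime, timezone
from zoneinfo import ZoneInfo

# Timestamp copied from the "Inserted time (UTC)" line of the sample log.
inserted_utc = datetime(2024, 8, 24, 6, 43, 12, 170246, tzinfo=timezone.utc)

# Same instant, rendered in the local zone used in the sample log.
inserted_berlin = inserted_utc.astimezone(ZoneInfo("Europe/Berlin"))

print(inserted_utc.isoformat(sep=" "))     # 2024-08-24 06:43:12.170246+00:00
print(inserted_berlin.isoformat(sep=" "))  # 2024-08-24 08:43:12.170246+02:00
```

If the two values in your own logs differ by anything other than the zone offset, the clock or the TZ setting is likely off.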

On some systems, specifically WSL, the clock in Docker may get out of sync with the host system. You can check that by running:

    docker run ubuntu date

If the time doesn't match yours, you need to sync the clock:

    wsl
    sudo apt install ntpdate
    sudo ntpdate time.windows.com

Note that the time is in UTC.

After that, start the application (and the database) again.

When the application is running, we can start using it.

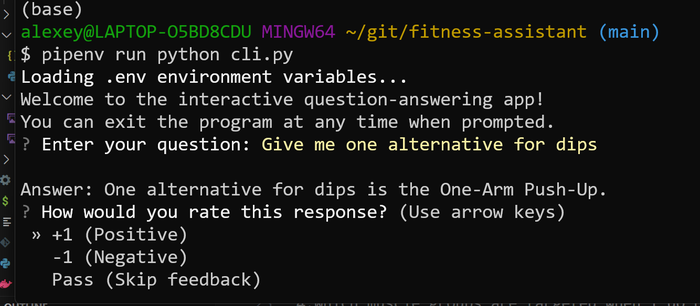

We built an interactive CLI application using questionary.

To start it, run:

    pipenv run python cli.py

You can also make it randomly select a question from our ground truth dataset:

    pipenv run python cli.py --random

When the application is running, you can use requests to send questions. Use test.py for testing it:

    pipenv run python test.py

It will pick a random question from the ground truth dataset and send it to the app.
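For reference, a question can also be sent from Python with only the standard library. This is a sketch, not the contents of test.py (which uses requests): the helper names are hypothetical, and the URL, endpoint, and JSON field follow the curl example in this section.

```python
import json
import urllib.request


def build_question_request(question, base_url="http://localhost:5000"):
    """Build a POST request for the /question endpoint with a JSON body."""
    body = json.dumps({"question": question}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/question",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(question, base_url="http://localhost:5000", timeout=30):
    """Send the question to a running app and return the decoded JSON reply."""
    request = build_question_request(question, base_url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

With the app running, `ask("How do I perform a push-up?")` should return a dictionary containing the answer and a conversation ID.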

You can also use curl for interacting with the API:

    URL=http://localhost:5000
    QUESTION="Is the Lat Pulldown considered a strength training activity, and if so, why?"
    DATA='{
        "question": "'${QUESTION}'"
    }'
    curl -X POST \
        -H "Content-Type: application/json" \
        -d "${DATA}" \
        ${URL}/question

You will see something like the following in the response:

    {
        "answer": "Yes, the Lat Pulldown is considered a strength training activity. This classification is due to it targeting specific muscle groups, specifically the Latissimus Dorsi and Biceps, which are essential for building upper body strength. The exercise utilizes a machine, allowing for controlled resistance during the pulling action, which is a hallmark of strength training.",
        "conversation_id": "4e1cef04-bfd9-4a2c-9cdd-2771d8f70e4d",
        "question": "Is the Lat Pulldown considered a strength training activity, and if so, why?"
    }

Sending feedback:

    ID="4e1cef04-bfd9-4a2c-9cdd-2771d8f70e4d"
    URL=http://localhost:5000
    FEEDBACK_DATA='{
        "conversation_id": "'${ID}'",
        "feedback": 1
    }'
    curl -X POST \
        -H "Content-Type: application/json" \
        -d "${FEEDBACK_DATA}" \
        ${URL}/feedback

After sending it, you'll receive the acknowledgement:

    {
        "message": "Feedback received for conversation 4e1cef04-bfd9-4a2c-9cdd-2771d8f70e4d: 1"
    }

The code for the application is in the fitness_assistant folder:

- `app.py` - the Flask API, the main entrypoint to the application

- `rag.py` - the main RAG logic for retrieving the data and building the prompt

- `ingest.py` - loading the data into the knowledge base

- `minsearch.py` - an in-memory search engine

- `db.py` - the logic for logging the requests and responses to postgres

- `db_prep.py` - the script for initializing the database

We also have some code in the project root directory:

We use Flask for serving the application as an API.

Refer to the "Using the Application" section for examples on how to interact with the application.

The ingestion script is in ingest.py.

Since we use an in-memory database, minsearch, as our knowledge base, we run the ingestion script at the startup of the application.

It's executed inside rag.py when we import it.
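The load-once pattern described above can be sketched with a tiny stand-in index. This is not minsearch and does not reproduce its scoring: `build_index` and `search` are hypothetical helpers that rank by boosted word overlap, and the two records are made up. The point is only that the index is built a single time at import and then reused for every query.

```python
def build_index(records, text_fields):
    """Tokenize the chosen fields of every record once, up front."""
    return [
        {field: set(str(rec[field]).lower().split()) for field in text_fields}
        for rec in records
    ]


def search(query, records, index, boost=None, num_results=5):
    """Rank records by boosted word overlap between the query and each field."""
    boost = boost or {}
    words = set(query.lower().split())
    scored = []
    for rec, tokens in zip(records, index):
        score = sum(
            boost.get(field, 1.0) * len(words & field_tokens)
            for field, field_tokens in tokens.items()
        )
        if score > 0:
            scored.append((score, rec))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [rec for _, rec in scored[:num_results]]


# Made-up records; the index is built once, when the module is imported.
RECORDS = [
    {"exercise_name": "Push-Ups", "body_part": "Upper Body"},
    {"exercise_name": "Squats", "body_part": "Lower Body"},
]
INDEX = build_index(RECORDS, ["exercise_name", "body_part"])

results = search("lower body squats", RECORDS, INDEX, boost={"body_part": 2.65})
print([rec["exercise_name"] for rec in results])  # ['Squats', 'Push-Ups']
```

Because the index lives in memory, it disappears when the process stops, which is why ingestion has to run again on every startup.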

For experiments, we use Jupyter notebooks.

They are in the notebooks folder.

To start Jupyter, run:

    cd notebooks
    pipenv run jupyter notebook

We have the following notebooks:

- `rag-test.ipynb`: The RAG flow and evaluating the system.

- `evaluation-data-generation.ipynb`: Generating the ground truth dataset for retrieval evaluation.

The basic approach - using minsearch without any boosting - gave the following metrics:

- Hit rate: 94%

- MRR: 82%

The improved version (with tuned boosting):

- Hit rate: 94%

- MRR: 90%
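For readers unfamiliar with the two metrics, here is a minimal sketch of their standard definitions. It assumes each query has exactly one expected document and that results are given as a list of booleans in rank order; how the notebooks structure this data is not shown here, so treat the input format as an assumption.

```python
def hit_rate(relevance):
    """Share of queries with the expected document anywhere in the results."""
    return sum(any(row) for row in relevance) / len(relevance)


def mrr(relevance):
    """Mean reciprocal rank of the first relevant result (0 if none)."""
    total = 0.0
    for row in relevance:
        for rank, is_relevant in enumerate(row, start=1):
            if is_relevant:
                total += 1.0 / rank
                break
    return total / len(relevance)


# Toy input: three queries, top-3 results each; True marks the expected document.
example = [
    [True, False, False],   # found at rank 1
    [False, True, False],   # found at rank 2
    [False, False, False],  # not found
]
print(hit_rate(example))  # 2 of 3 queries hit
print(mrr(example))       # (1 + 1/2 + 0) / 3 = 0.5
```

This also shows why boosting can raise MRR while leaving hit rate unchanged: moving the expected document higher in the ranking improves its reciprocal rank, but it was already counted as a hit.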

The best boosting parameters:

    boost = {
        'exercise_name': 2.11,
        'type_of_activity': 1.46,
        'type_of_equipment': 0.65,
        'body_part': 2.65,
        'type': 1.31,
        'muscle_groups_activated': 2.54,
        'instructions': 0.74
    }

We used the LLM-as-a-Judge metric to evaluate the quality of our RAG flow.

For gpt-4o-mini, in a sample with 200 records, we had:

- 167 (83%) RELEVANT

- 30 (15%) PARTLY_RELEVANT

- 3 (1.5%) NON_RELEVANT

We also tested gpt-4o:

- 168 (84%) RELEVANT

- 30 (15%) PARTLY_RELEVANT

- 2 (1%) NON_RELEVANT

The difference is minimal, so we opted for gpt-4o-mini.
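The percentages above follow directly from the label counts. A minimal sketch of that tally, rebuilding the gpt-4o-mini sample from the reported counts (`summarize` is a hypothetical helper, not code from the notebooks):

```python
from collections import Counter


def summarize(labels):
    """Return {label: (count, percent)} for a list of judge labels."""
    counts = Counter(labels)
    total = len(labels)
    return {label: (n, round(100 * n / total, 1)) for label, n in counts.items()}


# Rebuild the gpt-4o-mini sample from the counts reported above.
labels = ["RELEVANT"] * 167 + ["PARTLY_RELEVANT"] * 30 + ["NON_RELEVANT"] * 3

for label, (count, percent) in summarize(labels).items():
    print(f"{label}: {count} ({percent}%)")
```

With one decimal place, 167 out of 200 comes out as 83.5%, which the summary above reports as 83%.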

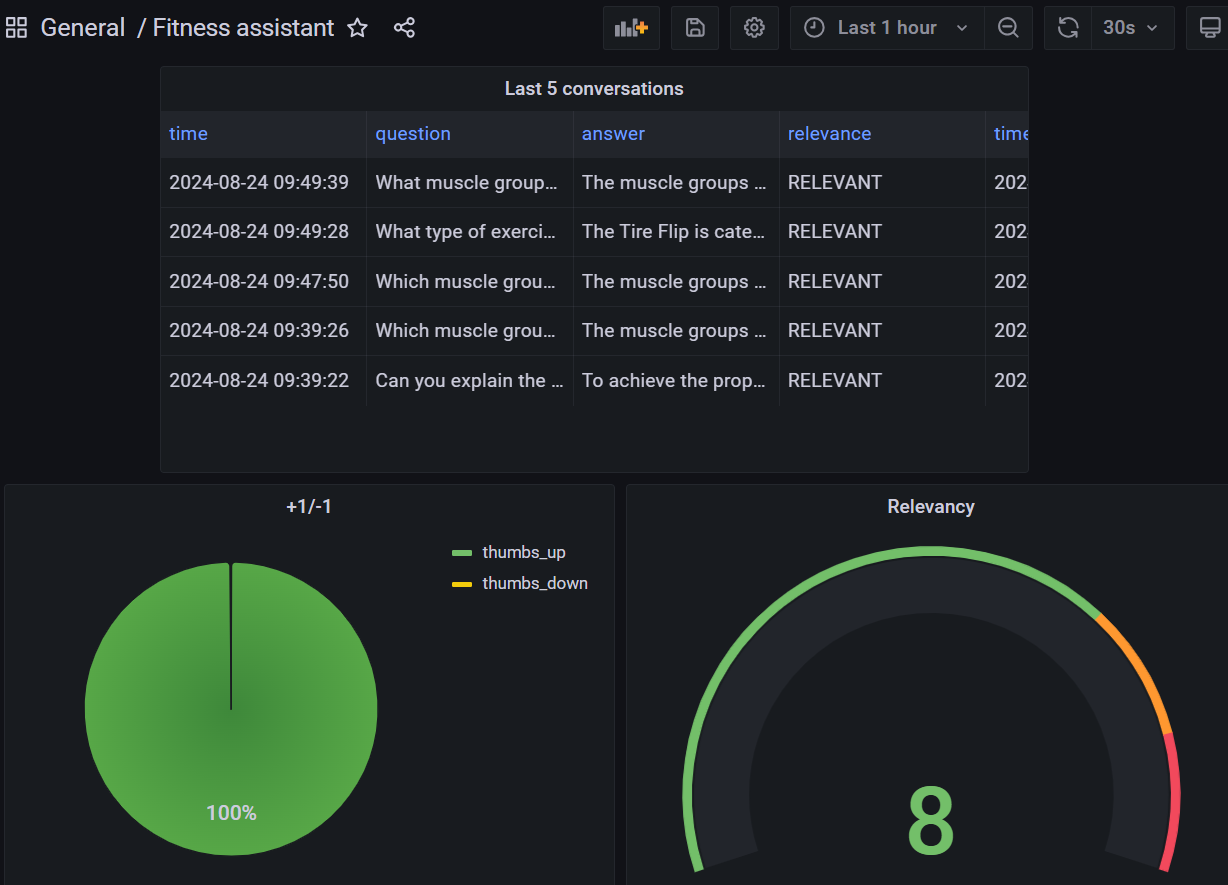

We use Grafana for monitoring the application.

It's accessible at localhost:3000:

- Login: "admin"

- Password: "admin"

The monitoring dashboard contains several panels:

- Last 5 Conversations (Table): Displays a table showing the five most recent conversations, including details such as the question, answer, relevance, and timestamp. This panel helps monitor recent interactions with users.

- +1/-1 (Pie Chart): A pie chart that visualizes the feedback from users, showing the count of positive (thumbs up) and negative (thumbs down) feedback received. This panel helps track user satisfaction.

- Relevancy (Gauge): A gauge chart representing the relevance of the responses provided during conversations. The chart categorizes relevance and indicates thresholds using different colors to highlight varying levels of response quality.

- OpenAI Cost (Time Series): A time series line chart depicting the cost associated with OpenAI usage over time. This panel helps monitor and analyze the expenditure linked to the AI model's usage.

- Tokens (Time Series): Another time series chart that tracks the number of tokens used in conversations over time. This helps to understand the usage patterns and the volume of data processed.

- Model Used (Bar Chart): A bar chart displaying the count of conversations based on the different models used. This panel provides insights into which AI models are most frequently used.

- Response Time (Time Series): A time series chart showing the response time of conversations over time. This panel is useful for identifying performance issues and ensuring the system's responsiveness.

All Grafana configurations are in the grafana folder:

- `init.py` - for initializing the datasource and the dashboard.

- `dashboard.json` - the actual dashboard (taken from LLM Zoomcamp without changes).

To initialize the dashboard, first ensure Grafana is running (it starts automatically when you do docker-compose up).

Then run:

    pipenv shell
    cd grafana
    # make sure the POSTGRES_HOST variable is not overwritten
    env | grep POSTGRES_HOST
    python init.py

Then go to localhost:3000:

- Login: "admin"

- Password: "admin"

When prompted, keep "admin" as the new password.

Here we provide background on some tech not used in the course and links for further reading.

We use Flask for creating the API interface for our application.

It's a web application framework for Python: we can easily create an endpoint for asking questions and use web clients (like curl or requests) for communicating with it.

In our case, we can send questions to http://localhost:5000/question.

For more information, visit the official Flask documentation.
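The request and response shapes of the two endpoints can be sketched without any web framework. This is a framework-free illustration, not the code in app.py: the function names, the stubbed answer, and the error replies are assumptions. Only the JSON fields and the feedback message format come from the curl examples in this README. In a Flask app, each function would typically sit behind a POST route.

```python
import uuid


def handle_question(payload, rag=lambda question: "stub answer"):
    """Return (body, status) shaped like the /question reply."""
    question = payload.get("question")
    if not question:
        # Hypothetical error shape; the real app may respond differently.
        return {"error": "No question provided"}, 400
    body = {
        "conversation_id": str(uuid.uuid4()),
        "question": question,
        "answer": rag(question),
    }
    return body, 200


def handle_feedback(payload):
    """Return (body, status) shaped like the /feedback reply."""
    conversation_id = payload.get("conversation_id")
    feedback = payload.get("feedback")
    if not conversation_id or feedback not in (1, -1):
        # Hypothetical validation; assumes feedback is +1 or -1.
        return {"error": "Invalid input"}, 400
    message = f"Feedback received for conversation {conversation_id}: {feedback}"
    return {"message": message}, 200
```

Keeping the handlers as plain functions like this makes the contract easy to test without starting a server.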

I thank the course participants for all your energy and positive feedback as well as the course sponsors for making it possible to run this course for free.

I hope you enjoyed doing the course =)