Lingchen Meng*1, Hengduo Li*2, Bor-Chun Chen3, Shiyi Lan2, Zuxuan Wu1, Yu-Gang Jiang1, Ser-Nam Lim3

1Shanghai Key Lab of Intelligent Information Processing, School of Computer Science, Fudan University, 2University of Maryland, 3Meta AI

* Equal contribution

This repository is the official implementation of AdaViT.

Our code is based on pytorch-image-models and T2T-ViT.

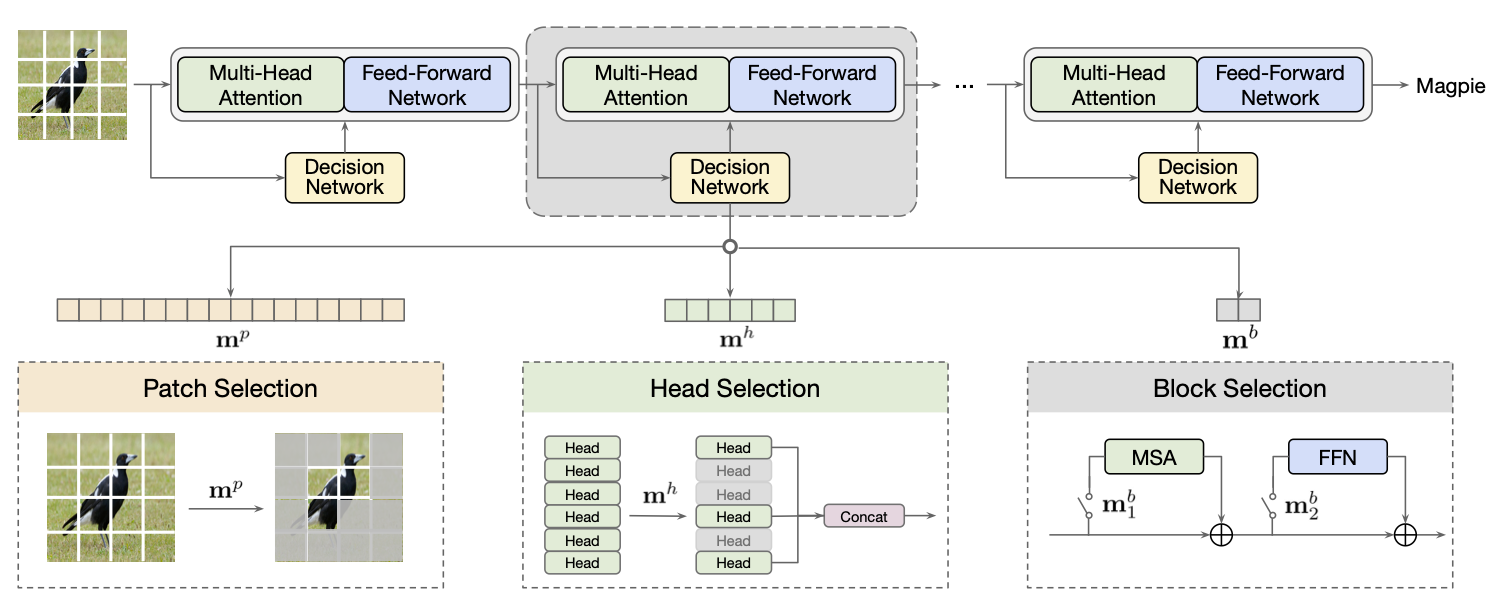

Built on top of self-attention mechanisms, vision transformers have recently demonstrated remarkable performance on a variety of tasks. While achieving excellent performance, they still require relatively intensive computation whose cost scales up drastically as the numbers of patches, self-attention heads and transformer blocks increase. In this paper, we argue that, due to the large variations among images, their need for modeling long-range dependencies between patches differs. To this end, we introduce AdaViT, an adaptive computation framework that learns to derive usage policies on which patches, self-attention heads and transformer blocks to use throughout the backbone on a per-input basis, aiming to improve the inference efficiency of vision transformers with a minimal drop in accuracy for image recognition. Optimized jointly with a transformer backbone in an end-to-end manner, a light-weight decision network is attached to the backbone to produce decisions on the fly. Extensive experiments on ImageNet demonstrate that our method obtains more than
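The per-input usage policy described above can be illustrated with a toy, pure-Python sketch. This is not the code in this repository: the single linear scoring layers, the random weights standing in for a trained decision network, the 0.5 threshold and the cost model are all hypothetical, and every block's decisions here are computed from the same input patches, whereas in the real model each block sees the output of the previous one. It only shows how per-image keep/skip decisions for patches, heads and blocks translate into a compute budget.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def linear(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))


def usage_policy(patches, block_w, head_w, patch_w, threshold=0.5):
    """Toy keep/skip decisions for ONE transformer block (hypothetical, not AdaViT's code).

    patches: list of patch feature vectors for this image.
    block_w: weights scoring the whole block from the pooled image feature.
    head_w:  one weight vector per attention head, also scored on the pooled feature.
    patch_w: one shared weight vector that scores each patch individually.
    """
    dim = len(patches[0])
    pooled = [sum(p[d] for p in patches) / len(patches) for d in range(dim)]
    keep_block = sigmoid(linear(block_w, pooled)) > threshold
    keep_heads = [sigmoid(linear(w, pooled)) > threshold for w in head_w]
    keep_patches = [sigmoid(linear(patch_w, p)) > threshold for p in patches]
    return keep_block, keep_heads, keep_patches


def relative_cost(policies):
    """Toy cost model (an assumption, not AdaViT's MACs counter).

    A full block costs 1 unit for attention plus 1 unit for the MLP; attention
    scales with (kept heads fraction * kept patches fraction), the MLP with the
    kept patches fraction. Returns used compute as a fraction of the full model.
    """
    used = 0.0
    for keep_block, keep_heads, keep_patches in policies:
        if not keep_block:
            continue  # a skipped block costs nothing
        head_frac = sum(keep_heads) / len(keep_heads)
        patch_frac = sum(keep_patches) / len(keep_patches)
        used += head_frac * patch_frac + patch_frac
    return used / (2.0 * len(policies))


if __name__ == "__main__":
    rng = random.Random(0)
    dim, num_patches, num_heads, num_blocks = 8, 16, 6, 12
    image = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(num_patches)]

    def rand_vec():
        # random weights stand in for a trained decision network (hypothetical)
        return [rng.gauss(0, 1) for _ in range(dim)]

    policies = [
        usage_policy(image, rand_vec(), [rand_vec() for _ in range(num_heads)], rand_vec())
        for _ in range(num_blocks)
    ]
    print(f"relative compute for this image: {relative_cost(policies):.2f}")
```

Different images yield different masks and therefore different costs, which is where the efficiency gain comes from; in AdaViT the decision network is trained jointly with the backbone rather than using fixed random weights.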

Our model checkpoints are listed below.

| Model | Top-1 Acc (%) | MACs | Download |
|---|---|---|---|
| Ada-T2T-ViT-19 | 81.1 | 3.9G | link |
| Ada-DeiT-S | 77.3 | 2.3G | link |

Download the Ada-T2T-ViT-19 checkpoint from Google Drive and run the command below. You should obtain a top-1 accuracy of about 81.1% at 3.9 GFLOPs.

```shell
python3 ada_main.py /path/to/your/imagenet \
  --model ada_step_t2t_vit_19_lnorm \
  --ada-head --ada-layer --ada-token-with-mlp \
  --flops-dict adavit_ckpt/t2t-19-h-l-tmlp_flops_dict.pth \
  --eval_checkpoint /path/to/your/checkpoint
```
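Both evaluation commands in this README share the same adaptive flags and differ only in `--model` and `--flops-dict`. The following is a hypothetical helper, not part of the repository, that assembles either command as an argument list. The config keys `ada-t2t-vit-19` and `ada-deit-s` are made-up names; the model names, flags and FLOPs-dict paths are copied from the commands given here.

```python
import shlex

# Hypothetical config keys; model names and FLOPs-dict paths are copied from this README.
CONFIGS = {
    "ada-t2t-vit-19": ("ada_step_t2t_vit_19_lnorm",
                       "adavit_ckpt/t2t-19-h-l-tmlp_flops_dict.pth"),
    "ada-deit-s": ("ada_step_deit_small_patch16_224",
                   "adavit_ckpt/deit-s-h-l-tmlp_flops_dict.pth"),
}


def eval_command(name, imagenet_dir, checkpoint):
    """Build the evaluation command for one AdaViT checkpoint as an argv list."""
    model, flops_dict = CONFIGS[name]
    return [
        "python3", "ada_main.py", imagenet_dir,
        "--model", model,
        # enable adaptive heads, adaptive layers and MLP-based token selection
        "--ada-head", "--ada-layer", "--ada-token-with-mlp",
        "--flops-dict", flops_dict,
        "--eval_checkpoint", checkpoint,
    ]


if __name__ == "__main__":
    # Dry run: print the shell form of each command with placeholder paths.
    for name in CONFIGS:
        print(shlex.join(eval_command(name, "/path/to/your/imagenet",
                                      "/path/to/your/checkpoint")))
```

Passing the returned list to `subprocess.run(cmd, check=True)` from the repository root would launch the evaluation; keeping the command as a list avoids shell-quoting problems with paths that contain spaces.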

To evaluate Ada-DeiT-S, run the following; you should obtain a top-1 accuracy of about 77.3% at 2.3G MACs.

```shell
python3 ada_main.py /path/to/your/imagenet \
  --model ada_step_deit_small_patch16_224 \
  --ada-head --ada-layer --ada-token-with-mlp \
  --flops-dict adavit_ckpt/deit-s-h-l-tmlp_flops_dict.pth \
  --eval_checkpoint /path/to/your/checkpoint
```

```bibtex
@inproceedings{meng2022adavit,
  title={AdaViT: Adaptive Vision Transformers for Efficient Image Recognition},
  author={Meng, Lingchen and Li, Hengduo and Chen, Bor-Chun and Lan, Shiyi and Wu, Zuxuan and Jiang, Yu-Gang and Lim, Ser-Nam},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  pages={12309--12318},
  year={2022}
}
```